There have been many announcements recently regarding the incorporation of machine learning (ML) methods into EDA tool algorithms, mostly in the physical implementation flows. For example, deterministic ML-based decision algorithms applied to cell placement and signal interconnect routing promise to expedite…

Artificial Intelligence

Teaching AI to be Evil with Unethical Data

An Artificial Intelligence (AI) system is only as good as its training. For Machine Learning (ML) and Deep Learning (DL) frameworks, the training data sets are a crucial element that defines how the system will operate. Feed it skewed or biased information and it will create a flawed inference engine.

Why Go Custom in AI Accelerators, Revisited

I believe I asked this question a year or two ago and answered it for the absolute bleeding edge of datacenter performance: Google TPU and the like. Those hyperscalers (Google, Amazon, Microsoft, Baidu, Alibaba, etc.) who want to do on-the-fly recognition in pictures so they can tag friends in photos, do almost real-time machine…

Seeing is Believing, the Benefits of Delta’s Low-Resolution Vision Chip

Presto Engineering recently held a webinar discussing vision chip technology: what a vision chip is, what its applications are, and how to optimize its use. Samer Ismail, a design engineer at Presto Engineering with deep domain expertise in vision chip technology, was the presenter. Samer takes you on a very informative …

Where’s the Value in Next-Gen Cars?

Value chains can be very robust and seemingly unbreakable, until they're not. One we've taken for granted for many years is the chain for electronics systems in cars. The auto OEM, e.g., Toyota, gets electronics modules from a Tier-1 supplier such as Denso. They, in turn, build their modules using chips from a semiconductor chip maker…

How Blockchain Is Revolutionizing Crowdfunding

According to experts, there are five key benefits of crowdfunding platforms: efficiency, reach, easier presentation, built-in PR and marketing, and near-immediate validation of concept, which explains why crowdfunding has become an extremely useful alternative to venture capital (VC), and has also allowed non-traditional…

Predicting Bugs: ML and Static Team Up. Innovation in Verification

Can we predict where bugs are most likely to be found, to better direct testing? Paul Cunningham (GM of Verification at Cadence), Jim Hogan and I continue our series on novel research ideas, again through a paper in software verification we find equally relevant to hardware. Feel free to comment if you agree or disagree.

The Innovation…

8 Key Tech Trends in a Post-COVID-19 World

COVID-19 has demonstrated the importance of digital readiness, which allows businesses and people's lives to continue as usual during a pandemic. Building the necessary infrastructure to support a digitized world and staying current with the latest technology will be essential for any business or country to remain competitive in a …

What a Difference an Architecture Makes: Optimizing AI for IoT

Last week Mentor hosted a virtual event on designing an AI accelerator with HLS, integrating it with an Arm Corstone SSE-200 platform, and characterizing and optimizing it for performance and power. Though in some ways a recap of earlier presentations, the session offered some added insights, particularly in characterizing…

Cadence – Redefining EDA Through Computational Software

Based on what I'm seeing, I believe Cadence is looking at the world a bit differently these days. I first reported on their approach to machine learning for EDA in March, and then there was their white paper about Intelligent System Design in April. It's now May, and Cadence is shaking things up again with a new white paper entitled…

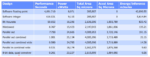

TSMC Process Simplification for Advanced Nodes