Support for AI at the edge has prompted a good deal of innovation in accelerators, initially for CNNs, then evolving to DNNs and RNNs (convolutional neural nets, deep neural nets, and recurrent neural nets). Most recently, the transformer technology behind the craze in large language models is proving to have important relevance at the edge, for better reasons than helping you cheat on a writing assignment. Transformers can increase accuracy in vision and speech recognition and can even extend learning beyond a base training set. That opens up lots of possibilities, but the obvious question is: at what cost? Will your battery run down faster? Does your chip become more expensive? How do you scale from entry-level to premium products?

Scalability

One size might be able to fit all, but does it need to? A voice-activated TV remote can be supported by a CNN-based accelerator, keeping cost down and extending battery life in the remote. Smart speakers must support a wider range of commands, and surveillance systems must be able to trigger on suspicious activity, not on a harmless animal passing by; both cases demand a higher level of inference, perhaps through DNNs or RNNs.

Vision transformers (ViT) are gaining popularity because they classify more accurately than CNNs. Much of that gain comes from the global attention at the heart of transformer algorithms, which lets them consider a whole scene during classification. That said, ViT is commonly paired with CNNs, since CNNs can recognize objects much faster. Meeting both performance and accuracy goals in vision therefore calls for a CNN and a ViT working together. In natural language processing, we have all seen how large language models can provide eerily high accuracy in recognition, and this is now also practical at the edge. For these applications you must use transformer-based algorithms.
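To make "global attention" concrete, here is a minimal sketch of single-head self-attention over a handful of image patches. It is an illustration only, not how any particular ViT or accelerator implements it: the learned query/key/value projections of a real transformer are omitted (an assumption to keep the example short), and the function names are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(patches):
    """Single-head attention with queries = keys = values = patches.

    Assumption: learned projections are skipped, so this shows only the
    'every patch looks at every other patch' structure.
    """
    dim = len(patches[0])
    outputs, all_weights = [], []
    for query in patches:
        # Score this patch against EVERY patch in the scene (global view).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in patches
        ]
        weights = softmax(scores)
        # The output for this patch is a weighted mix of all patches.
        mixed = [
            sum(w * patch[i] for w, patch in zip(weights, patches))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Four toy "patch embeddings" of dimension 2.
patches = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
outputs, weights = self_attention(patches)
```

Each row of `weights` has one entry per patch in the scene, which is the contrast with a convolution, where an output depends only on a small local neighborhood.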

But wait… before you can use AI, an edge device employing voice-based control also needs a voice pickup front end for audio beamforming, noise/echo cancellation, and wake word recognition. Image-based systems need a computer vision front end for image signal processing, de-mosaicing, noise reduction, dynamic range scaling, and so on.

These smart systems demand a lot of functionality. That adds complexity and raises concerns about hardware development, silicon and margin costs, and battery lifetimes, together with software development and maintenance for a family of products spanning a range of capabilities. How do you build a range of edge inference solutions to meet competitive inference rates, cost, and energy goals?

Configurable DSPs plus an optional configurable AI accelerator

Cadence has been in the DSP IP business for a long time, offering among other options their HiFi DSPs for audio, voice, and speech (popular in always-on very low power home and automotive infotainment) and their vision DSPs (used in mobile, automotive, VR/AR, surveillance, and drones/robots). In all of these they have established hardware and software solutions for audio/video pre-processing and AI. Intelligence extends from always-on functions – voice or visual activity detection for example – running at very low power to more complex neural net (NN) models running on the same DSP.

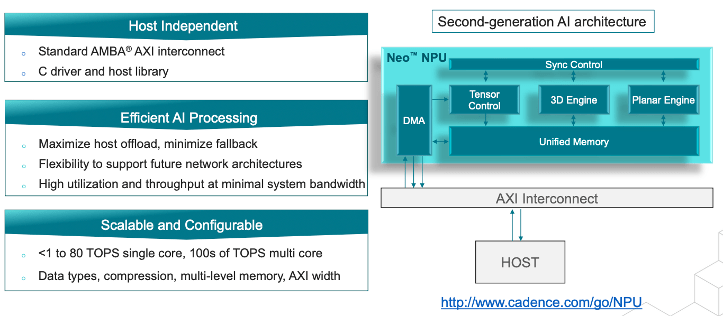

Higher performance recognition or classification requires a dedicated AI engine to run a specialized NN model, offloaded from the DSP. Cadence’s NNE 110 core handles full-featured convolutional models to provide this acceleration, supporting up to 256 GOPS per core. They have now announced a next-generation neural net accelerator, the Neo® NPU, raising performance significantly to 80 TOPS per core, also with support for multi-core.

The Neo NPU and the NeuroWeave SDK

Neo NPUs are targeted at a wide range of edge applications: from hearables, wearables, and IoT, through smart speakers, smart TVs, AR/VR, and gaming, all the way up to automotive infotainment and ADAS.

The hardware architecture for such cores is becoming familiar. A tensor control unit manages accessing models, downloading/uploading data, and feeding operations to the 3D engine for tensor operations or a planar unit for scalar/vector operations. In an LLM the 3D engine might be used for self-attention operations, the planar engine for normalizations. For a CNN, the 3D engine would perform convolution operations and the planar engine would handle pooling.
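The division of labor described above can be sketched as a simple dispatch table. This is a hypothetical illustration built only from the four examples in the text; the names `ENGINE_FOR_OP`, `Op`, and `schedule` are invented here and do not reflect the Neo NPU's actual control logic or the NeuroWeave SDK.

```python
from dataclasses import dataclass

# Hypothetical op-to-engine mapping, using only the examples in the text:
# tensor-heavy ops go to the 3D engine, scalar/vector ops to the planar unit.
ENGINE_FOR_OP = {
    "convolution": "3d",
    "self_attention": "3d",
    "pooling": "planar",
    "normalization": "planar",
}

@dataclass
class Op:
    name: str  # label of the layer in the model
    kind: str  # operation type, used to pick an engine

def schedule(ops):
    """Assign each op to an engine, preserving model order."""
    plan = []
    for op in ops:
        engine = ENGINE_FOR_OP.get(op.kind)
        if engine is None:
            raise ValueError(f"no engine known for op kind {op.kind!r}")
        plan.append((op.name, engine))
    return plan

cnn = [Op("conv1", "convolution"), Op("pool1", "pooling")]
llm = [Op("attn1", "self_attention"), Op("norm1", "normalization")]

print(schedule(cnn))  # [('conv1', '3d'), ('pool1', 'planar')]
print(schedule(llm))  # [('attn1', '3d'), ('norm1', 'planar')]
```

In a real core the tensor control unit would also sequence data movement between the engines; the sketch covers only the routing decision.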

Control and both engines are closely coupled through unified memory, again common in these state-of-the-art accelerators, to minimize not only off-chip memory accesses but even out-of-core memory accesses.

The SDK for this platform is called NeuroWeave, providing a unified development kit not only for Neo NPUs but also for Tensilica DSPs and the NNE 110. Scalability is important not only for hardware and models but also for model developers. With the NeuroWeave SDK, model developers have one development kit to map trained models to any of the full range of Cadence DSP/AI platforms. NeuroWeave supports the standard (and growing number of) network development interfaces for developing compiled networks, as well as interpreted delegate options such as TensorFlow Lite Micro and the Android Neural Networks API, continuing compatibility with flows for existing NNE 110 users. In all cases, I am told, translation to a target platform is code-free; it is only necessary to dial in optimization options as needed.

Back to efficiency. Cadence has particularly emphasized both power and area efficiency in combined DSP + Neo solutions. In benchmarking (same 7nm process, with a 1.25GHz clock for Neo and 1GHz for NNE 110), comparing HiFi 5 alone versus HiFi 5 with NNE 110, they show a 5X to 60X improvement in IPS (inferences per second) per microjoule, and 5X to 12X on top of that when replacing NNE 110 with Neo. When comparing IPS/mm² between NNE 110 and Neo, they show an average 2.7X improvement. In other words, you can get much better inference performance at the same energy and in the same area using Neo, or you can get the same performance at lower energy and in a smaller area. Cadence provides lots of knobs to configure both DSPs and Neo as you tune for IPS, power, and area, helping you dial down to the targets you need to meet.
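As a rough back-of-envelope reading of those figures, the two energy-efficiency ranges can be multiplied to bound the gain of HiFi 5 with Neo over HiFi 5 alone. This assumes "on top of that" means the gains compound, and that the extremes of each range could fall on the same workload, which the quoted numbers do not establish. Treat the result as an outer bound, not a benchmark.

```python
def compound(first, second):
    """Multiply two (low, high) improvement ranges end to end."""
    return first[0] * second[0], first[1] * second[1]

nne_vs_hifi5 = (5, 60)  # IPS per microjoule: HiFi 5 + NNE 110 vs HiFi 5 alone
neo_vs_nne = (5, 12)    # further gain when Neo replaces NNE 110

low, high = compound(nne_vs_hifi5, neo_vs_nne)
print(low, high)  # 25 720

# An average 2.7X gain in IPS/mm² implies the same throughput in roughly
# 1/2.7 of the area, all else being equal (an assumption).
area_gain = 2.7
print(round(1 / area_gain, 2))  # 0.37
```

So under these assumptions the combined energy-efficiency gain would sit somewhere between 25X and 720X, and equal throughput would need a little over a third of the NNE 110 area.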

Availability

Cadence already has early customers and is planning their official release for Neo NPU and NeuroWeave in December. You can learn more HERE.
