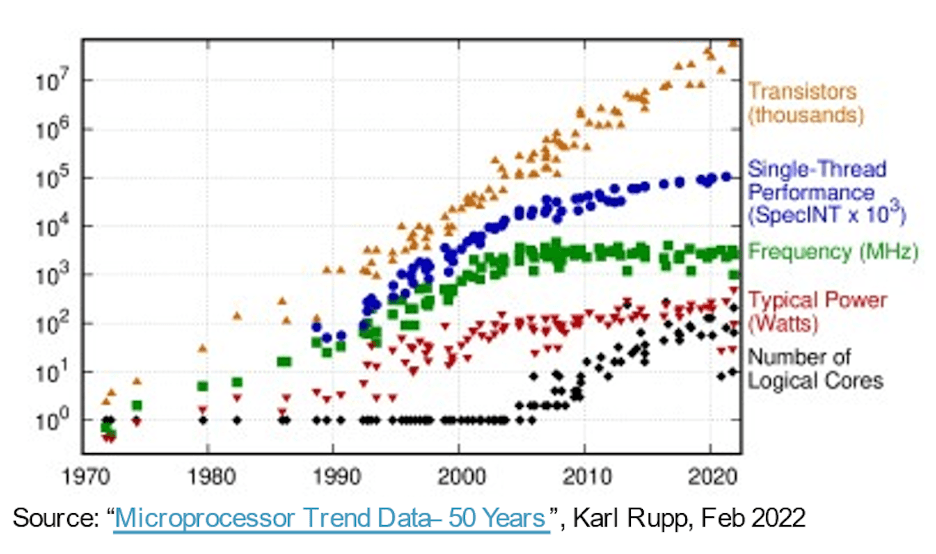

In the march to more capable, faster, smaller, and lower power systems, Moore’s Law gave software a free ride for more than 30 years, purely on semiconductor process evolution. Compute hardware delivered improved performance/area/power metrics every year, allowing software to expand in complexity and deliver more capability with no apparent downside. Then the easy wins became less easy. More advanced processes continued to deliver higher gate counts per unit area, but gains in performance and power started to flatten out. Since our expectations for innovation didn’t stop, hardware architecture advances have become more important in picking up the slack.

Drivers for increasing core-count

An early step in this direction used multi-core CPUs to accelerate total throughput by threading or virtualizing a mix of concurrent tasks across cores, reducing power as needed by idling or powering down inactive cores. Multi-core is standard today, and a trend toward many-core (even more CPUs on a chip) is already evident in server instance options available in cloud platforms from AWS, Azure, Alibaba and others.

Multi-/many-core architectures are a step forward, but parallelism through CPU clusters is coarse-grained and has its own performance and power limits, thanks to Amdahl’s law. Architectures became more heterogeneous, adding accelerators for image, audio, and other specialized needs. AI accelerators have also pushed fine-grained parallelism, moving to systolic arrays and other domain-specific techniques. That was working pretty well until ChatGPT appeared, built on GPT-3 with 175 billion parameters, and then evolved into GPT-4, for which figures as high as 100 trillion parameters have circulated without confirmation from OpenAI. Models at this scale are orders of magnitude more complex than earlier AI systems, forcing yet more specialized acceleration features within AI accelerators.
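To make the Amdahl’s law limit concrete, here is a minimal Python sketch. The function name `amdahl_speedup` and the 90% parallel fraction are illustrative assumptions, not figures from any design discussed here. It shows how speedup flattens as cores are added whenever part of the workload stays serial.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the workload that can run in parallel (0..1).
    cores: number of cores the parallel share is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial share is unaffected by core count; only the parallel share shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Assumed workload: 90% parallelizable (hypothetical value for illustration).
    for cores in (2, 4, 8, 16, 64, 1024):
        print(f"{cores:5d} cores -> {amdahl_speedup(0.9, cores):.2f}x")
```

With a 10% serial share the speedup can never reach 10x however many cores are added, which is one reason architects turn to specialized accelerators rather than simply adding more general-purpose CPUs.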

On a different front, multi-sensor systems in automotive applications are now being integrated into single SoCs for improved environment awareness and improved PPA (power, performance, and area). Here, new levels of autonomy depend on fusing inputs from multiple sensor types within a single device, with subsystems replicated 2X, 4X or 8X.

According to Michał Siwinski (CMO at Arteris), sampling over a month of discussions with multiple design teams across a wide range of applications suggests those teams are actively turning to higher core counts to meet capability, performance, and power goals. He tells me they also see this trend accelerating. Process advances still help with SoC gate counts, but responsibility for meeting performance and power goals is now firmly in the hands of the architects.

More cores, more interconnect

More cores on a chip imply more data connections between those cores. Within an accelerator, connections run between neighboring processing elements, to local cache, and to accelerators for sparse matrix and other specialized handling. Add hierarchical connectivity between accelerator tiles and system level buses. Add connectivity for on-chip weight storage, decompression, broadcast, gather and re-compression. Add HBM connectivity for working cache. Add a fusion engine if needed.
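As a rough illustration of how connection counts grow with core count, the sketch below compares two textbook topologies: dedicated point-to-point links between every pair of cores, and a 2D mesh where each core connects only to its nearest neighbors. Both topologies and both function names are assumptions chosen for illustration. They do not describe any particular product or the designs mentioned in this article.

```python
def point_to_point_links(cores: int) -> int:
    """Links needed if every pair of cores gets its own dedicated connection."""
    if cores < 0:
        raise ValueError("cores must be non-negative")
    return cores * (cores - 1) // 2


def mesh_links(rows: int, cols: int) -> int:
    """Links in a rows x cols 2D mesh with nearest-neighbor connections only."""
    if rows < 1 or cols < 1:
        raise ValueError("rows and cols must be at least 1")
    horizontal = rows * (cols - 1)  # links along each row
    vertical = cols * (rows - 1)    # links along each column
    return horizontal + vertical


if __name__ == "__main__":
    # Square grids of growing size (hypothetical core counts).
    for side in (2, 4, 8, 16):
        cores = side * side
        print(f"{cores:4d} cores: "
              f"point-to-point={point_to_point_links(cores)}, "
              f"mesh={mesh_links(side, side)}")
```

Pairwise wiring grows quadratically while the mesh grows roughly linearly, but mesh traffic then crosses multiple hops, so where those links and routers physically sit starts to matter for timing and power.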

The CPU-based control cluster must connect to each of those replicated subsystems and to all the usual functions – codecs, memory management, safety island and root of trust if appropriate, UCIe if a multi-chiplet implementation, PCIe for high bandwidth I/O, and Ethernet or fiber for networking.

That’s a lot of interconnect, with direct consequences for product marketability. In processes below 16nm, network-on-chip (NoC) infrastructure now contributes 10-12% of area. Even more important, as the communication highway between cores, it can have significant impact on performance and power. There is real danger that a sub-optimal implementation will squander expected architecture performance and power gains or, worse yet, require numerous re-design loops to converge. Yet finding a good implementation in a complex SoC floorplan still depends on slow trial-and-error optimizations in already tight design schedules. We need to make the jump to physically aware NoC design to guarantee full performance and power support from complex NoC hierarchies, and we need to make these optimizations faster.

Physically aware NoC design keeps Moore’s law on track

Moore’s law may not be dead but advances in performance and power today come from architecture and NoC interconnect rather than from process. Architecture is pushing more accelerator cores, more accelerators within accelerators, and more subsystem replication on-chip. All increase the complexity of on-chip interconnect. As designs increase core counts and move to process geometries at 16nm and below, the numerous NoC interconnects spanning the SoC and its sub-systems can only support the full potential of these complex designs if implemented optimally against physical and timing constraints – through physically aware network on chip design.

If you also worry about these trends, you might want to learn more about Arteris FlexNoC 5 IP technology HERE.
