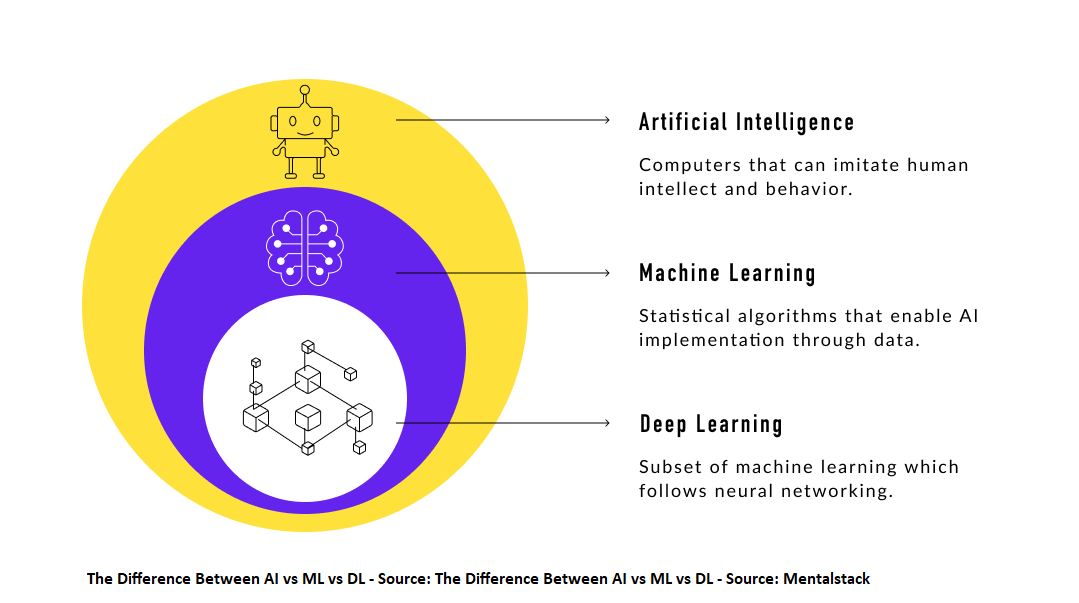

To understand AI’s capabilities, we need to recognize its different components and subsets. Terms like neural networks, machine learning (ML), and deep learning need to be defined and explained.

In general, artificial intelligence (AI) refers to the simulation of human intelligence in machines that are programmed to think like humans and mimic their actions. The term may also be applied to any machine that exhibits traits associated with a human mind, such as learning and problem-solving.

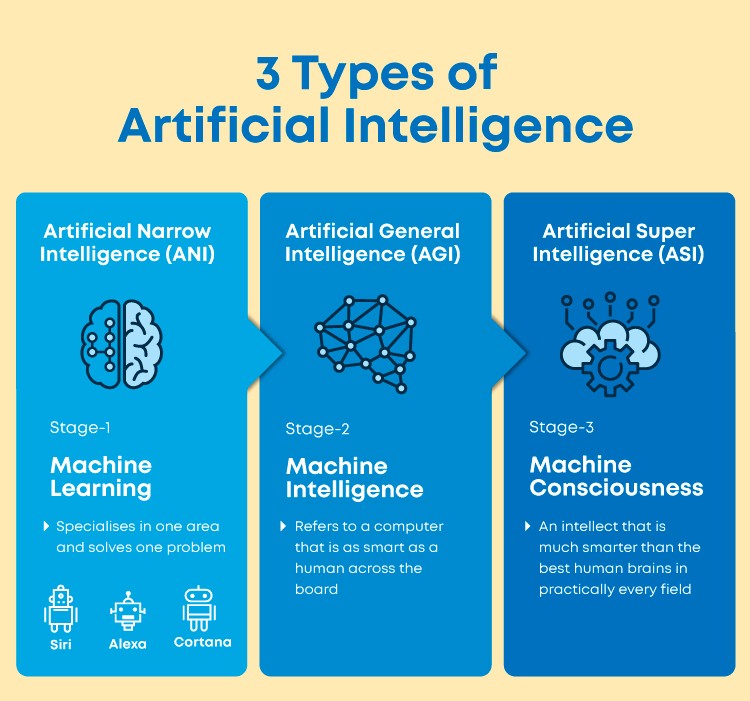

There are three types of artificial intelligence (AI):

- Artificial Narrow Intelligence (ANI)

- Artificial General Intelligence (AGI)

- Artificial Super Intelligence (ASI)

The following chart explains them

Neural networks

In information technology, a neural network is a system of programs and data structures that approximates the operation of the human brain. A neural network usually involves a large number of processors operating in parallel, each with its own small sphere of knowledge and access to data in its local memory.

Typically, a neural network is initially “trained” or fed large amounts of data and rules about data relationships (for example, “A grandfather is older than a person’s father”). A program can then tell the network how to behave in response to an external stimulus (for example, to input from a computer user who is interacting with the network) or can initiate activity on its own (within the limits of its access to the external world).

Deep learning vs. machine learning

To understand what Deep Learning is, it’s first important to distinguish it from other disciplines within the field of AI.

One outgrowth of AI was machine learning, in which the computer extracts knowledge through supervised experience. This typically involved a human operator helping the machine learn by giving it hundreds or thousands of training examples and manually correcting its mistakes.
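The idea of learning from labeled examples and corrections can be sketched with a perceptron, one of the simplest supervised learners. This is only an illustration: the toy task (logical AND), the function names, and the learning rate are assumptions and do not come from any particular system.

```python
# Hypothetical toy task: learn the logical AND of two binary inputs.
# Each training example pairs the inputs with the label a human would supply.
EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum is positive, else 0."""
    total = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > 0 else 0


def train(examples, epochs=20, learning_rate=1.0):
    """Perceptron rule: the weights change only when a prediction is wrong."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)  # the correction signal
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(EXAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in EXAMPLES]
print(predictions)  # → [0, 0, 0, 1]
```

Here the labels play the role of the human operator: every wrong answer nudges the weights toward the correct one, and the loop stops changing anything once all four examples are classified correctly.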

While machine learning has become dominant within the field of AI, it does have its problems. For one thing, it’s massively time-consuming. For another, it’s still not a true measure of machine intelligence, since it relies on human ingenuity to come up with the abstractions that allow a computer to learn.

Unlike the supervised machine learning described above, deep learning, itself a branch of machine learning, can be largely unsupervised. It involves, for example, creating large-scale neural nets that allow the computer to learn and “think” by itself, without the need for direct human intervention.

Deep learning “really doesn’t look like a computer program.” Ordinary computer code is written in very strict logical steps, but in deep learning “you don’t have a lot of instructions that say: ‘If one thing is true do this other thing.’”

Instead of linear logic, deep learning is based on theories of how the human brain works. The program is made of tangled layers of interconnected nodes. It learns by rearranging connections between nodes after each new experience.
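A minimal sketch of this idea, assuming a tiny network with one hidden layer of two nodes and one output node, is shown here. The starting weights, the single training "experience", and the learning rate are hypothetical values chosen for illustration; real deep learning systems use many more layers and a dedicated library.

```python
import math


def sigmoid(value):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-value))


def forward(hidden_weights, output_weights, inputs):
    """Pass the inputs through one hidden layer and one output node."""
    hidden = [sigmoid(sum(w * x for w, x in zip(row, inputs))) for row in hidden_weights]
    output = sigmoid(sum(w * h for w, h in zip(output_weights, hidden)))
    return hidden, output


def learn_from(hidden_weights, output_weights, inputs, target, learning_rate=0.5):
    """One gradient-descent step on squared error; returns the adjusted weights."""
    hidden, output = forward(hidden_weights, output_weights, inputs)
    output_delta = (output - target) * output * (1.0 - output)
    # Hidden deltas are computed from the old output weights, before any update.
    hidden_deltas = [output_delta * w * h * (1.0 - h) for w, h in zip(output_weights, hidden)]
    new_output_weights = [
        w - learning_rate * output_delta * h for w, h in zip(output_weights, hidden)
    ]
    new_hidden_weights = [
        [w - learning_rate * delta * x for w, x in zip(row, inputs)]
        for row, delta in zip(hidden_weights, hidden_deltas)
    ]
    return new_hidden_weights, new_output_weights


# Arbitrary starting connection strengths (hypothetical values).
hidden_weights = [[0.5, -0.5], [0.3, 0.8]]
output_weights = [0.7, -0.4]
inputs, target = [1.0, 0.0], 1.0

_, before = forward(hidden_weights, output_weights, inputs)
for _ in range(20):  # repeat the same "experience" 20 times
    hidden_weights, output_weights = learn_from(hidden_weights, output_weights, inputs, target)
_, after = forward(hidden_weights, output_weights, inputs)
print(f"error before: {abs(target - before):.3f}, after: {abs(target - after):.3f}")
```

No rule in this code says what the answer should be; the network's output moves toward the target only because the connection strengths are adjusted after each pass.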

Deep learning has shown potential as the basis for software that could work out the emotions or events described in text (even if they aren’t explicitly referenced), recognize objects in photos, and make sophisticated predictions about people’s likely future behavior. An example of deep learning in action is voice recognition, as in Google Now and Apple’s Siri.

Deep Learning is showing a great deal of promise — and it will make self-driving cars and robotic butlers a real possibility. The ability to analyze massive data sets and use deep learning in computer systems that can adapt to experience, rather than depending on a human programmer, will lead to breakthroughs. These range from drug discovery to the development of new materials to robots with a greater awareness of the world around them.

Deep Learning and Affective Computing

Affective computing is the study and development of systems and devices that can recognize, interpret, process, and simulate human affects. It is an interdisciplinary field spanning computer science (including deep learning), psychology, and cognitive science. While the origins of the field may be traced as far back as early philosophical inquiries into emotion (“affect” is, basically, a synonym for “emotion”), the more modern branch of computer science originated with Rosalind Picard’s 1995 paper on affective computing. A motivation for the research is the ability to simulate empathy. The machine should interpret the emotional state of humans and adapt its behavior to them, giving an appropriate response to those emotions.

Affective computing technologies using deep learning sense the emotional state of a user (via sensors, microphone, cameras and/or software logic) and respond by performing specific, predefined product/service features, such as changing a quiz or recommending a set of videos to fit the mood of the learner.

The more computers we have in our lives, the more we’re going to want them to behave politely and be socially smart. We don’t want them to bother us with unimportant information. That kind of common-sense reasoning requires an understanding of the person’s emotional state.

One way to look at affective computing is as human-computer interaction in which a device has the ability to detect and appropriately respond to its user’s emotions and other stimuli. A computing device with this capacity could gather cues to user emotion from a variety of sources. Facial expressions, posture, gestures, speech, the force or rhythm of keystrokes, and the temperature changes of the hand on a mouse can all signify changes in the user’s emotional state, and these can all be detected and interpreted by a computer. A built-in camera captures images of the user, and algorithms are used to process the data to yield meaningful information. Speech recognition and gesture recognition are among the other technologies being explored for affective computing applications.

Recognizing emotional information requires the extraction of meaningful patterns from the gathered data. This is done using deep learning techniques that process different modalities, such as speech recognition, natural language processing, or facial expression detection.
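One common way to combine several modalities is late fusion: each modality gets its own model, and their outputs are merged afterward. The sketch below assumes that three separate models (not shown) have already produced emotion scores; the modality names, emotion labels, score values, and function names are all hypothetical.

```python
def fuse_modalities(scores_by_modality, modality_weights=None):
    """Weighted average of per-modality emotion scores (late fusion)."""
    if modality_weights is None:
        modality_weights = {name: 1.0 for name in scores_by_modality}
    total_weight = sum(modality_weights[name] for name in scores_by_modality)
    fused = {}
    for name, scores in scores_by_modality.items():
        share = modality_weights[name] / total_weight
        for emotion, score in scores.items():
            fused[emotion] = fused.get(emotion, 0.0) + share * score
    return fused


def dominant_emotion(fused_scores):
    """Return the emotion with the highest fused score."""
    return max(fused_scores, key=fused_scores.get)


# Hypothetical outputs of three separate models, one per modality.
scores = {
    "speech": {"bored": 0.6, "frustrated": 0.3, "pleased": 0.1},
    "text": {"bored": 0.2, "frustrated": 0.5, "pleased": 0.3},
    "face": {"bored": 0.7, "frustrated": 0.2, "pleased": 0.1},
}
fused = fuse_modalities(scores)
print(dominant_emotion(fused))  # → bored
```

Averaging lets a strong signal in one modality be tempered by the others: here the text model leans toward "frustrated", but speech and face together tip the result to "bored".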

Emotion in machines

A major area in affective computing is the design of computational devices that either exhibit innate emotional capabilities or are capable of convincingly simulating emotions. A more practical approach, based on current technological capabilities, is the simulation of emotions in conversational agents in order to enrich and facilitate interactivity between human and machine. While human emotions are often associated with surges in hormones and other neuropeptides, emotions in machines might be associated with abstract states tied to progress (or lack of progress) in autonomous learning systems. In this view, affective emotional states correspond to time-derivatives in the learning curve of an arbitrary learning system.
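The learning-curve view can be made concrete with a small sketch. It assumes the learning curve is a list of error values recorded after successive training rounds, approximates the time-derivative by the difference between neighboring values, and maps the result to a coarse label. The labels, the tolerance, and the sample numbers are hypothetical choices for illustration.

```python
def learning_progress(error_curve):
    """Discrete time-derivative of progress: how much the error fell per step."""
    return [previous - current for previous, current in zip(error_curve, error_curve[1:])]


def affect_label(progress, tolerance=0.01):
    """Hypothetical mapping from learning progress to a coarse affective state."""
    if progress > tolerance:
        return "positive"  # error is falling: the system is making progress
    if progress < -tolerance:
        return "negative"  # error is rising: the system is losing ground
    return "neutral"  # plateau: no meaningful change


# Hypothetical error values recorded after successive training rounds.
error_curve = [0.90, 0.70, 0.55, 0.55, 0.60]
labels = [affect_label(step) for step in learning_progress(error_curve)]
print(labels)  # → ['positive', 'positive', 'neutral', 'negative']
```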

Two major categories describe emotions in machines: emotional speech and facial affect detection.

Emotional speech includes:

- Deep Learning

- Databases

- Speech Descriptors

Facial affect detection includes:

- Body gesture

- Physiological monitoring

The Future

Affective computing using deep learning tries to address one of the major drawbacks of online learning versus in-classroom learning: the teacher’s capability to immediately adapt the pedagogical situation to the emotional state of the student in the classroom. In e-learning applications, affective computing using deep learning can be used to adjust the presentation style of a computerized tutor when a learner is bored, interested, frustrated, or pleased. Psychological health services, such as counseling, benefit from affective computing applications when determining a client’s emotional state.
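The tutor adjustment described above can be sketched as a simple lookup from a detected learner state to a predefined response. The four states come from the text; the specific adjustments, the confidence threshold, and the function name are assumptions made for illustration, and the emotion detector itself is not shown.

```python
DEFAULT_STYLE = "continue at the current pace"

# Hypothetical mapping from a detected learner state to a tutor adjustment.
PRESENTATION_STYLES = {
    "bored": "switch to an interactive exercise",
    "frustrated": "slow down and offer a worked example",
    "interested": "continue and offer optional depth",
    "pleased": DEFAULT_STYLE,
}


def choose_presentation(emotion, confidence, minimum_confidence=0.6):
    """Pick a predefined adjustment, or change nothing when the signal is weak."""
    if confidence < minimum_confidence or emotion not in PRESENTATION_STYLES:
        return DEFAULT_STYLE
    return PRESENTATION_STYLES[emotion]


print(choose_presentation("frustrated", 0.82))  # → slow down and offer a worked example
print(choose_presentation("bored", 0.40))  # → continue at the current pace
```

Falling back to the default when confidence is low is a deliberate choice in this sketch: a tutor that reacts to a misread emotion may be more disruptive than one that does nothing.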

Robotic systems capable of processing affective information exhibit higher flexibility when working in uncertain or complex environments. Companion devices, such as digital pets, use affective computing with deep learning abilities to enhance realism and provide a higher degree of autonomy.

Other potential applications are centered around social monitoring. For example, a car can monitor the emotions of all occupants and engage in additional safety measures, such as alerting other vehicles if it detects that the driver is angry. Affective computing with deep learning at its core has potential applications in human-computer interaction, such as affective mirrors that let users see how they perform, emotion-monitoring agents that send a warning before one sends an angry email, or even music players that select tracks based on mood. Companies could also use affective computing to infer whether their products will be well received by the respective market. There are endless applications for affective computing with deep learning in all aspects of life.

Ahmed Banafa, author of the books:

Secure and Smart Internet of Things (IoT) Using Blockchain and AI

Blockchain Technology and Applications

