Talk about an unusual position. Intel finds itself very much on the outside when it comes to mobile SoCs for phones and tablets. After several rounds of soul-searching, and a true understanding of the term “low-power” (meaning not 3W but < 1W), it finally has a part, “Medfield”, aka the Atom Processor Z2460, that can technically compete for business while its roadmap develops.

CEVA is the undisputed worldwide DSP leader

Anybody working in the wireless handset segment probably knows that CEVA is the provider of DSP IP cores. If you are simply a wireless handset user, you should know that baseband digital signal processing is the function that allows your phone to process the RF (analog) signal coming from the outside world. If you were involved in the wireless segment before 2002, you may remember a company named DSP Group: the merger of DSP Group's IP licensing division with Parthus was named CEVA, and CEVA enjoyed a 70% market share for DSP IP products in 2011, according to The Linley Group. That 70% market share means that CEVA's DSP IP was integrated into 1 billion ICs shipped in production in 2011!

Some of you may remember that in the 1990s, when the wireless phone segment was created from nothing, emerging in 1995 and skyrocketing in the 2000s, baseband processing was digital (as opposed to the very expensive, low-sound-quality analog wireless phones) and one company dominated the IC market: Texas Instruments, thanks to its DSPs. Initially, Ericsson's handsets even used TI's standard TMS320 DSP products, before TI was pushed to offer a DSP core that could be integrated into a more complex ASIC. But this was not a DSP IP core. In other words, Ericsson, Nokia, Alcatel and others had to develop their devices using TI ASIC technology and TI fabs. At the time, this was seen as a very good strategy by TI: they had architected a new DSP specifically tailored for the wireless handset application, and this core was the hook that filled the fab with devices that would later evolve into application processors. Back then, however, most of the processing power was in the DSP, and the processor core was… an ARM7!

When DSP Group (CEVA today) entered this market, TI's competitors (VLSI Technology, STMicroelectronics and others) were certainly happy, as they could offer an alternative solution to Nokia et al. But it took some time before these OEMs decided to port their software installed base from the TI DSP to the CEVA DSP IP core, a move they had to make if they wanted to switch from TI to another supplier. It was a long road, but considering the 1 billion devices integrating a CEVA DSP IP, this means that (probably) 800 million of the 1,500+ million wireless handsets shipped in 2011 use a CEVA DSP, with the remaining devices addressing a broad range of applications including cellular baseband, imaging, vision, audio, voice, voice-over-IP and more. In terms of architecture, it was also a long road: while in 2000 a simple DSP IP core could support baseband processing, today's communication standards, including LTE-Advanced, LTE and HSPA+, are supported by the CEVA-XC4000 architecture, offered as a series of six fully programmable DSP cores running at up to 1 GHz, as you can see in the picture above.

The market share numbers (once again, 70%, a magic number for an IP vendor!) were published in The Linley Group’s recent report, titled ‘A Guide to CPU Cores and Processor IP’, and were commented on by one of its co-authors: “CEVA continues to be the most successful supplier of DSP IP — its licensees shipped more than one billion chips in 2011,” said J. Scott Gardner, an analyst at The Linley Group. “CEVA has an impressive customer base for its DSP portfolio, especially in communications and multimedia. Furthermore, with the 4G transition well underway, high-performance programmable DSPs are required to efficiently handle complex multimode baseband processing. CEVA is well positioned to capitalize on this trend.”

The next comment, from the CEO of CEVA, reflects the satisfaction of being the market leader with a 70% market share, and I am sure I would react the same way if I were in that position!

“CEVA is pleased to be ranked yet again as the worldwide leader in DSP IP by The Linley Group,” said Gideon Wertheizer, CEO of CEVA. “Our unrivalled expertise in DSP technology for high volume mobile and digital home applications drives our success and is the reason we are the DSP-of-choice for many of the world’s leading semiconductors and OEMs.”

CEVA also offers controller IP for SATA and SAS; we will come back to these IP in another blog. The focus today was the DSP IP core: just as the ARM core comes to mind immediately when you think of a wireless handset or smartphone, CEVA DSP IP is the dominant solution in the same devices. A final remark: CEVA claims to be the only Israeli company whose product is integrated into the Apple iPhone. If anybody knows which product CEVA has sold to Apple (I strongly suspect that Apple does not allow its suppliers to talk about this), feel free to post a comment or send me a note.

Eric Esteve from IPNEST

Analyzing Cortex Performance

CPAK sounds like something politicians create to collect money, but in fact it is a Carbon Performance Analysis Kit. It consists of models, a reference platform, and initialization software (for bare-metal CPAKs) or an OS binary (for Linux- and Android-based CPAKs). CPAKs are (or will soon be) available for ARM Cortex-A9, ARM Cortex-A15 and ARM big.LITTLE (which is an A15 paired with an A7).

Designing a complex microprocessor-based system, especially a multi-core one, has two major challenges:

- getting the software written and debugged

- analyzing the system performance for speed, power and unanticipated bottlenecks

CPAKs primarily address the second problem: What clock rate do I need? How many cores? Is my cache big enough? Does my bus fabric work as advertised? Since source code is included for all of the software provided with the CPAK, it can also get you up and running quickly on the software side, eliminating the need to figure out how to initialize all the system components.

A CPAK saves weeks or months in time to market by providing an off-the-shelf reference platform complete with all the software needed to bring it up, so users can start analyzing, optimizing and verifying the performance of ARM processor-interconnect-memory subsystems within minutes. The platforms can be further customized using the Carbon IP Exchange model portal.

A CPAK contains fully cycle-accurate models and so can be used for this sort of detailed performance analysis. Fast models, which are great for software development, only have rudimentary performance analysis and are not accurate enough for system architecture work.

Bare-metal CPAKs are free of charge (though you need Carbon SoC Designer Plus to use them). Other CPAKs carry a one-time fee.

CPAKs will also work with Swap & Play. This means that you will be able to boot the operating system with a fast model (which is not accurate enough for any detailed analysis) and then swap the fast model for a cycle-accurate model to analyze the performance of the system while it does something more interesting than running its bootstrap.

The CPAK family for ARM Cortex Processors will be available in bare metal and Linux configurations in Q2 and in Android configurations in the second half of the year.

Come and find out more at DAC. Carbon will be in the ARM Connected Community Pavilion, booth 802, and in their own booth 517.

Piper Jaffray Chip Analyst Spanks Intel!

This just in from Tech Trader Daily, quoting Piper Jaffray chip analyst Gus Richard:

The whole issue for Qualcomm, based on Richard’s conversations with industry types, is that the company has started making its “MSM8960” chip with Taiwan Semiconductor Manufacturing (TSM) only two to three quarters after TSM introduced its new “28-nanometer” chip-making technology. That’s faster than the five to six quarters Qualcomm waited for the last process technology, at 45 nanometers.

This is absolutely true. Qualcomm has not had the “wafer allocation” variable in past product-launch equations, hence the bumbling this time. I still question it when CEOs blame supplier shortages for missed quarterly expectations. Any way you slice it, they screwed up. Let’s see what excuses the fabless semiconductor CEOs use when 28nm capacity is no longer an issue. My favorite three are:


Mr. Richard thinks Qualcomm is seeking second and third sources of supply:

QCOM is using TSMC’s LP process based on a PolySiON gate stack. We believe Globalfoundries, Samsung and UMC have compatible processes. We are confident QCOM is working with GF and UMC and these sources will likely come up in Q4:12.

Clearly Mr. Richard missed my blog on just that last month:

UMC Wins Qualcomm 28nm Second Source Contract!

Mr. Richard believes Qualcomm has the best baseband chips, and I agree:

While there are other LTE modems in the market, the power consumption of these products is unacceptably high. In addition, no other company offers the level of integration that QCOM provides in the MSM 8960 and its derivatives. In particular, QCOM’s main competitor, Intel, does not have an LTE modem. In addition, we believe Intel’s 22nm SoC process, 1271, will not be out until mid-2013.

More importantly, Mr. Richard shares my view on Intel’s recent assault on the fabless semiconductor ecosystem:

The use of fear uncertainty and doubt, FUD, in our view is a tactic used by companies when they are trying to obscure their own shortcomings. In our view, Intel’s narrative of exclusivity in process development and the end of the foundry model is disingenuous. So, the question is what is Intel trying to hide? We believe it is the following:


Absolutely. The only point I would add is cost. The mobile SoC market is VERY cost sensitive, and that is a market Intel knows little about. As you may have read, even though the process technology scales with smaller die sizes, faster clock speeds, and lower leakage power, the cost may be exponentially higher, especially for Intel.

In summary, I really like this guy! Gus Richard has a graduate degree in Physics from Purdue and spent his first five of 25+ years working at a semiconductor mask shop in Silicon Valley before moving to the analyst side of the business. Lunch anytime Gus!

MIPS, ARM, ARC, Imagination, Ceva

The Linley Group, whose mobile conference I recently attended, has some interesting data about the processor core market. Firstly, the numbers are big: CPU cores shipped in over 10 billion chips last year, up 25% on the year before. ARM has a 78% share of that entire market. The big surprise to me was that #2 was not MIPS but Synopsys, with the ARC processor it acquired with Virage. They have a 10% share. MIPS can only manage 6%.

ARM licensees shipped 7.9 billion chips, on which ARM collected an average royalty of 4.6¢, down a little from 4.8¢ the year before. By contrast, MIPS licensees shipped just 650M chips, although MIPS made around 7¢ per chip.
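To put those per-chip royalties in perspective, here is a back-of-the-envelope Python sketch that multiplies the quoted shipment volumes by the quoted average royalties, and checks ARM's 78% share against the "over 10 billion chips" total. It assumes a single average royalty applies to every chip a vendor's licensees ship, which is a simplification: real royalty schedules vary by core, volume and licensee.

```python
from decimal import Decimal

# 2011 figures as quoted above: (chips shipped, average royalty in USD per chip).
# Assumption: one average royalty applies to every chip a vendor's licensees ship.
VENDORS = {
    "ARM": (Decimal("7.9e9"), Decimal("0.046")),   # 4.6 cents per chip
    "MIPS": (Decimal("650e6"), Decimal("0.07")),   # "around 7 cents" per chip
}


def royalty_revenue(chips: Decimal, royalty_per_chip: Decimal) -> Decimal:
    """Approximate annual royalty revenue in USD: units shipped x average royalty."""
    return chips * royalty_per_chip


def implied_market(chips: Decimal, share: Decimal) -> Decimal:
    """Total market size implied by one vendor's volume and its market share."""
    return chips / share


if __name__ == "__main__":
    for vendor, (chips, royalty) in VENDORS.items():
        revenue_musd = royalty_revenue(chips, royalty) / Decimal("1e6")
        print(f"{vendor}: about ${revenue_musd:,.1f}M in royalties")
    # ARM's 7.9 billion chips at a 78% share implies the size of the whole market.
    total = implied_market(Decimal("7.9e9"), Decimal("0.78"))
    print(f"Implied CPU-core market: {total / Decimal('1e9'):.1f} billion chips")
```

With these inputs the sketch gives roughly $363.4M for ARM versus $45.5M for MIPS, about an 8x gap in royalty revenue despite MIPS's higher per-chip rate, and an implied market of about 10.1 billion chips, consistent with the "over 10 billion" figure.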

Given that kind of imbalance, it is hard to see how MIPS can compete with ARM, and there are plenty of rumors that MIPS is being shopped around. But that kind of instability makes licensing really difficult. Nobody wants to invest in licensing a core when the company is short of resources to invest in R&D, and if the future of the whole company is uncertain the problem is compounded, especially since a likely acquirer might be a competitor.

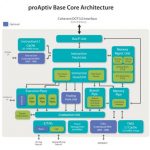

However, having said all that, MIPS announced three new cores today under the brand name Aptiv. The three cores are the microAptiv, interAptiv and proAptiv in increasing order of performance, power and area. The high end proAptiv is firmly positioned as a competitor to ARM’s Cortex-A15. Whether these new cores are enough to convince anyone other than current licensees remains to be seen. One point I just re-discovered today that may or may not be significant is that the Chinese Loongson processor uses the MIPS instruction set. I’m not sure if this is a good thing for MIPS (wider use of software in the MIPS instruction set) or not (a competitor with the same instruction set).

The DSP core market is dominated by Ceva, with a market share of over 90%. Linley classifies Tensilica and ARC as CPU blocks as opposed to DSPs, but Ceva would still dominate the sector even if they were classified as DSPs.

The GPU market is dominated by Imagination Technologies (just a short drive from ARM) with an 82% market share (that’s what’s in your iPhone, for example). ARM have their own GPU, Mali, but it has very limited acceptance so far. There is clearly some potential leverage in integrating the CPU and the GPU, but so far ARM don’t seem to have found the magic formula. Qualcomm both licenses GPUs and has one of their own, but since it is not externally licensed I assume it is not included in these numbers. Samsung also has their own SoC, Exynos, which is the current performance leader.

Linley expects CPU IP to maintain a CAGR of 10% for at least another 3 or 4 years, until the market matures and smartphone growth starts to slow.
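For a feel of what a 10% CAGR means over that horizon, the compound-growth formula is value × (1 + rate)^years. A minimal Python sketch follows; it reports a growth multiplier rather than assuming a particular starting value, since the text doesn't say whether the CAGR refers to units or revenue.

```python
def compound_growth(base: float, cagr: float, years: int) -> float:
    """Value after `years` of growth at a constant compound annual growth rate."""
    return base * (1.0 + cagr) ** years


if __name__ == "__main__":
    for years in (3, 4):
        multiplier = compound_growth(1.0, 0.10, years)
        print(f"{years} years at 10% CAGR: x{multiplier:.2f}")
```

Three to four more years at 10% works out to multipliers of about 1.33 and 1.46, so the market would grow by roughly a third to a half before it matures.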

View from the top: Michael Buehler-Garcia

Yesterday I met with Michael Buehler-Garcia, Director of Marketing at Mentor Graphics for Calibre in Wilsonville, Oregon to get an update on what’s coming up at DAC, the premier conference and trade show for our industry.


Cadence Update 2012!

What’s new at Cadence? Quite a bit actually. I have always been a Cadence fan, I mean really, they gave birth to modern EDA. Unfortunately, Cadence really lost me during the Avant! legal action, the Mike Fister years, and EDA360. Recently, however, Cadence has made some big changes that will definitely get them back on my good side. The most recent change being the hiring of Martin Lund, SVP of the SoC Realization Business Unit.

“SoC Realization is a strategic growth area for Cadence,” said Lip-Bu Tan, president and CEO. “While at Broadcom, Martin Lund grew the company to become the global leader in Ethernet switch SoCs for data center, service provider, enterprise, and SMB markets, and successfully drove several strategic acquisitions. His extensive operational experience equips him well to scale our SoC Realization business.”

As I have said before, IP is the center of the semiconductor universe and Martin Lund certainly knows IP from his 12 years at Broadcom and 20+ patents. Hopefully Martin accepts my LinkedIn invitation so we can talk in more detail.

“Cadence is in an excellent position to deliver differentiated IP for memory, storage and connectivity,” said Martin Lund. “Customers are looking to Cadence to be their trusted partner in delivering these IP reliably and with high quality. This is an exciting time to join Cadence and I am looking forward to helping accelerate the company’s success.”

Also new at Cadence is one of the most aggressive DAC schedules I have seen from them in a long time. They have numerous luncheons, breakfasts, exhibits, tutorials, and workshops planned where they will take on the challenges of the evolving semiconductor ecosystem. And of course, you won’t want to miss the Denali party, especially if you are looking forward to hearing loss.

Here are some of the descriptions and associated links for Cadence content at DAC:

Registration: http://www.cadence.com/dac2012/Pages/luncheons.aspx

Monday, June 4, luncheon: 12:00 PM – 1:00 PM (doors open and lunch is served at 11:30 AM)

Overcoming Variability and Productivity Challenges in Your High-Performance, Advanced Node, Custom/Analog Design

Tuesday, June 5, breakfast: 8:00 AM – 10:00 AM (doors open and food is served at 7:30 AM)

Addressing Hardware/Software Co-Development, System Integration, and Time to Market

Tuesday, June 5, luncheon: 12:00 PM – 1:00 PM (doors open and lunch is served at 11:30 AM)

Overcoming the Challenges of Embedding Ultra Low-Power, ARM 32-bit Processors into Analog/Mixed-Signal Designs

Wednesday, June 6, breakfast: 8:00 AM – 9:00 AM (doors open and breakfast is served at 7:30 AM)

The Path to Yielding at 2(x)nm and Beyond

The Denali Party by Cadence

Tuesday, June 5: 8:30 PM – 12:30 AM

Ruby Skye nightclub

Registration: http://www.cadence.com/dac2012/Pages/denali_party.aspx#regform

Also, Cadence is involved in a number of workshops, tutorials, and panels. Please visit http://www.cadence.com/dac2012/Pages/speakers.aspx for details.

I hope to see you there!

TSMC Tops Intel, Samsung in Capacity!

While I was marlin fishing in Hawaii last week, I missed some interesting comments from TSMC executives at the Technology Symposium in Taiwan, a very different show from the one here in San Jose, I’m told. It is good to see TSMC setting the record straight and taking a little credit for what they have accomplished! I’m sorry I missed it, but I know quite a few people who didn’t, and they were quite impressed.

Y.P. Chin, TSMC Vice President for operations and product development (Y.P. joined TSMC when it was founded in 1987):

Citing data from SEMI, TSMC’s capacity for logic chips was 1.5 times greater than Intel and 2 times greater than Samsung in 2011.

Interesting perspective. If you look inside your smartphone or tablet you will see both logic (brains) and DRAM (memory) chips. TSMC does the brains, Samsung does mostly memory, Intel does the big fat brain in your PC and laptop (my wife edits my blogs so this is for her).

Jason Chen, TSMC’s Senior Vice President of Worldwide Sales and Marketing (Prior to joining TSMC in 2005, Jason worked at Intel for 14+ years):

Smartphones have outshipped PCs since 2010. In 2012, smartphone shipments will exceed PC shipments by 50%. Tablets join smartphones to make mobile computing an even bigger market. Smartphones have emerged as the primary tools for internet access, and even more features will fit inside them in the future. Online payment, for example, will become a standard smartphone feature.

I never thought I would give up my laptop, but my iPhone 4S and iPad 2 see much more action than I would have ever imagined. My wife and kids (ages 16, 18, 22, and 24) will tell you the same, absolutely. I even used the boarding pass on my iPhone to get to and from Hawaii, avoiding check-in lines. Very cool!

That is why TSMC is leading off 20nm with a process optimized for mobile and Intel will need to optimize their 22nm process for mobile before mainstream customers take their foundry claims seriously.

That is also why TSMC is increasing CAPEX and R&D spending to record rates this year, to capture as much mobile demand as possible. One thing you have to remember about the foundry business is that wafer price is everything, especially to the mobile market. Today TSMC is the only foundry shipping production 28nm silicon which means they have a big lead on the manufacturing yield/cost curve. Even when second source 28nm silicon hits the market it will be at a higher cost/lower margin. TSMC will also be the first with 20nm silicon (my opinion) so lather, rinse, repeat…
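Why does a head start on the yield curve matter so much when wafer price is everything? To a first approximation, the cost of each working chip is the wafer cost divided by the number of good dies on that wafer. The Python sketch below illustrates this; the wafer cost, die count and yields are hypothetical placeholders chosen for round numbers, not TSMC or second-source figures.

```python
def cost_per_good_die(wafer_cost: float, dies_per_wafer: int, yield_fraction: float) -> float:
    """Wafer cost spread over the dies that actually work (gross dies x yield)."""
    if not 0.0 < yield_fraction <= 1.0:
        raise ValueError("yield_fraction must be in (0, 1]")
    good_dies = dies_per_wafer * yield_fraction
    return wafer_cost / good_dies


if __name__ == "__main__":
    # Hypothetical inputs for illustration only: same wafer price and die size,
    # a mature line at 80% yield versus a newer second source at 50% yield.
    WAFER_COST_USD = 5000.0
    DIES_PER_WAFER = 500
    for label, y in (("mature line", 0.80), ("second source", 0.50)):
        print(f"{label}: ${cost_per_good_die(WAFER_COST_USD, DIES_PER_WAFER, y):.2f} per good die")
```

With these made-up numbers, the same wafer price gives $12.50 versus $20.00 per good die, so the lower-yielding line pays 60% more for every working chip, which is why a yield lead translates directly into a cost and margin lead.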

A question I have is: How much longer will TSMC stock (TSM) continue to trade for under $16? Anyone? Given the success of 28nm, I think you will be able to measure that in months versus years (my opinion).

Disclaimer: I do not partake in the financial markets so do not buy this or any other stock based on my comments. Seriously, you would be better off consulting your neighbor’s pet.

DAC: Finding Somewhere to Eat

I am not going to attempt to give you any restaurant advice beyond what I’ve already done by listing the good places near the conference center. San Francisco reputedly has over 3000 restaurants so I don’t know anything about more than a tiny fraction. However, with that many restaurants, most places are pretty good. If not, they close and the space gets taken over by someone else.

Here is the San Francisco Chronicle’s food reviewer Michael Bauer’s list of the top 100 restaurants in the Bay Area. But you don’t need to go to an expensive restaurant to get a good meal in San Francisco. The city is made up of neighborhoods with lots of restaurants that are mostly frequented by locals, sprinkled with a few “destination restaurants” where people travel from all over to visit, usually with commensurately high prices. Two neighborhoods with especially high numbers of restaurants are the Mission (Valencia and Mission Streets between about 14th Street and 24th Street) and the Marina (Chestnut Street between about Laguna and Divisadero, and Union Street between the same cross streets).

The online reservation system OpenTable started in San Francisco (their offices are just a block away from Moscone) and most restaurants seem to be on it. They have a good iPhone and Android app too, so it is easy to find restaurants that still have tables available, even at short notice.

There are a huge number of gourmet food trucks in San Francisco. You can follow them on Twitter to know where they are. Or RoamingHunger follows them for you and puts them all on a map so you can see who is nearby. They have an iPhone App too (and presumably an Android one). I’ve only been to a couple of the trucks but the quality of the food is high and the prices low. Most only take cash.

The best restaurant in San Francisco is almost always reckoned to be Gary Danko. But you need to make reservations a couple of months in advance, so you probably didn’t. However, you can sit at the bar and have an incredible meal at a special low price (well, not low, but not stratospheric). The best thing to do is go there at 5.30pm when they open and grab a bar seat. Corner of Hyde and North Point.

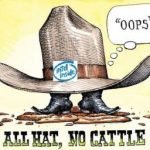

Intel Foundry All Hat No Cattle?

If you look really closely at the 49th DAC floor plan, you will see the tiny Intel booth dwarfed by those of TSMC, GlobalFoundries, Samsung, and ARM. The number one semiconductor company in the world does not have the budget for the cornerstone conference of the semiconductor ecosystem? Oh my… Intel has a big foundry hat and no cattle this year.

Now in its 49th year (this will be my 29th), the Design Automation Conference features a wide array of technical presentations, tutorials, and workshops, as well as more than 200 of the leading semiconductor ecosystem partners in a colorful, well-attended trade show that attracts thousands of semiconductor professionals from around the world.

This year, industry luminaries from ARM, Inc., IBM Corp., Intel Corp. and the National Tsing Hua University will give the three keynote addresses. DAC 2012 will be held at my absolute favorite venue, other than Las Vegas, the Moscone Center in San Francisco, California, from June 3-7, 2012.

“In assembling the 49th DAC series of distinguished keynote speakers, I am excited to announce that DAC is covering all bases, providing refreshing viewpoints for systems designers, IC designers and EDA software professionals,” said Patrick Groeneveld, General Chair of the 49th DAC.

“Tuesday kicks off with ARM’s Mike Muller, who will share his vision for a future of embedded computing systems. Given that ARM’s processors power most smartphones, this will show the way for computing in the future.”

“On Wednesday, Joshua Friedrich and Brad Heaney will outline the design practices for high-performance microprocessors. This unique dual-keynote provides a look in the kitchen of leading microprocessor companies designing the world’s most advanced chips,” Patrick enthusiastically continued.

“Finally, the Thursday keynote by Kaufman Award winner Dave Liu addresses the algorithmic revolution behind EDA. Prof. Liu’s contributions and insights have enabled the remarkable design automation revolution that actually powers today’s trillion-transistor devices.”

Keynote Schedule:

All keynotes will be held in rooms 102/103.

Tuesday, June 5, 2012 from 8:30am to 9:30am

Scaling for 2020 solutions

Mike Muller, CTO, ARM Inc., Cambridge, U.K.

Comparing the original ARM design of 1985 to those of today’s latest microprocessors, Mike will look at how far design has come and what EDA has contributed to enabling these advances in systems, hardware, operating systems, and applications as well as how business models have evolved over 25 years. He will then speculate on the needs for scaling designs into solutions for 2020 from tiny embedded sensors through to cloud-based servers that together enable the “Internet of things.” Mike will look at the major challenges that need to be addressed to design and manufacture these systems and propose some solutions.

Wednesday, June 6, 2012 from 10:45am to 11:45am

Designing High Performance Systems-on-Chip

Joshua Friedrich, Senior Technical Staff Member and Senior Manager of POWER™ Technology Development in IBM’s Server and Technology Group. Brad Heaney, Intel Architecture Group Project Manager, Intel Corp., Folsom, CA.

Experience state-of-the-art design through the eyes of these two experts. Joshua Friedrich will talk about POWER processor design and methodology directions, and Brad Heaney will discuss designing the latest Intel architecture multi-CPU and GPU. In this unique dual-keynote, the speakers will cover key challenges, engineering decisions and design methodologies to achieve top performance and turn-around time. The presentations describe where EDA meets practice at the most advanced nodes.

Thursday, June 7, 2012 from 11:00am to 12:00pm

My First Design Automation Conference – 1982

C. L. (Dave) Liu of Tsing Hua University and also the recipient of the 2012 Phil Kaufman award.

Dave tells us: “It was June 1982 that I had my first technical paper in the EDA area presented at the 19th Design Automation Conference. It was exactly 20 years after I completed my doctoral study and exactly 30 years ago from today. I would like to share with the audience how my prior educational experience prepared me to enter the EDA field and how my EDA experience prepared me for the other aspects of my professional life.”

I hope to see you all there!