If you missed the February 7 announcement that Cadence has acquired Cosmic Circuits, you may want to read this PR. In any case, this article will explain why the acquisition signals the start of the "IP battle" between Cadence and Synopsys. Let me remind you of what I wrote on SemiWiki last September (at the time, I described the IP battle as a chess game):

“Coming back to the chess game, my personal conviction is that the “Queen” will be the PHY IP, as the company being able to provide an integrated IP solution, PHY and Controller, should be able to run the game. You may prefer to put it this way: the company unable to provide a PHY (supporting the latest standard release like PCIe Gen-3 or MIPI M-PHY) on the most advanced technology node, will most certainly lose the game, on the long term…”

In fact, the IP war between Cadence and Synopsys really started in 2010, when Cadence acquired Denali (DDRn memory controller IP, PCIe controller IP and a large VIP portfolio) and Synopsys acquired Virage Logic. Each company sold for more than $300 million, or roughly six to seven times its 2009 revenue. We don't know how much Cadence spent to buy Cosmic Circuits, but we don't think it was cheap, as the company had a very good track record:

- Founded in 2005 and based in Bangalore, India, Cosmic Circuits has been profitable since its first year of operation and has more than 75 customers worldwide. The company received TSMC's Analog/Mixed Signal IP Partner of the Year award in 2010 and 2012.

- It provides IP for mobile devices, including USB, MIPI, audio and WiFi, at advanced process nodes including 40nm and 28nm.

- Its top-tier customers shipped more than 50 million ICs containing Cosmic Circuits IP in 2012.

Like ChipIdea, Cosmic Circuits started by selling mixed-signal IP (ADC, DAC, PLL, WiFi…) and recently added Interface PHY IP to its portfolio. The company puts a strong focus on MIPI D-PHY and M-PHY, the latter being supported on TSMC 85nm, 65nm, 40nm, 28nm and, finally, 20nm! It also offers a complete SuperSpeed USB PHY, including both USB 2.0 and USB 3.0 PHY IP.

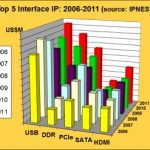

If we look at the Interface IP business over the last five years, USB, PCIe and DDR were the most important segments, followed by HDMI and SATA. But the 2012-2016 forecast (see above) clearly shows that, while USB and PCIe remain in the Top 5, DDRn and MIPI IP will see the highest growth (DDRn IP also being the largest segment). This means that Cadence is well positioned to compete with Synopsys in the near future: already strong in DDRn IP and present in PCIe IP, the company is now, thanks to this acquisition, strong in MIPI PHY IP and present in USB PHY IP.

So I agree with Martin Lund, Cadence's senior vice president of R&D for SoC Realization, when he says: "The addition of Cosmic Circuits' stellar technology and talent enhances Cadence's position as a leading provider for analog/mixed-signal IP. The combination of Cadence and Cosmic Circuits will provide customers with high quality IP to accelerate getting products to market."

And please don't forget: PHY IP support will be an important part of the winning strategy in this IP battle between Synopsys and Cadence!

Eric Esteve from IPNEST