One of the best things about being part of SemiWiki is the exposure to new technologies and the people behind them. SemiWiki now works with more than 35 companies, and I get to spend time with each and every one of them. Much like me, IROC Technologies works closely with the foundries and the top semiconductor companies, so it was a pleasure to do this CEO interview:

Q: What are the specific design challenges your customers are facing?

A: The design flow is an ever-evolving, ever-demanding beast. The continuing evolution of the technology makes it possible to build increasingly complex electronic devices that integrate more and more functions. This evolution is not free of problems or, more appropriately, challenges to overcome. Reliability is a natural concern. In particular, radiation-induced perturbations, known as Single Event Effects (SEEs), may cause system downtime, data corruption and maintenance incidents. SEEs are therefore a threat to overall system reliability, and engineers are increasingly concerned with analyzing and mitigating radiation-induced failures, even for commercial systems operating in a natural working environment. We observe that, having moved from the classical Power, Area and Timing-driven design flows through the Design for Manufacturability, Yield and Test (DFM/DFY/DFT) design and manufacturing frameworks, our customers are increasingly aware of, and adopting, the Design for Reliability (DFR) paradigm.

Q: What does your company do?

A: We provide services, tools and expertise to qualify and improve the reliability of electronic designs and systems, with a focus on radiation-induced effects, process variation and ageing. We help engineers evaluate the susceptibility of their designs to different perturbations. We assist them in improving the primary reliability characteristics (uptime, event/fault/error/failure rate …) that are relevant to their specific application. We support reliability engineers in their exchanges with suppliers so they can select the best-performing materials and processes. We also help them demonstrate that the delivered solution meets the reliability expectations of the current application. Ultimately, we help all the actors in the design and manufacturing flow select and improve the best reliability-aware processes, design approaches and frameworks, with the overall goal of providing high-reliability solutions to demanding end users. Moreover, our more than 10 years of continuous service as independent, trusted advisors and test experts has been recognized by technology and solution providers and end users alike.

Q: Why did you start/join your company?

A: I joined the company in September 2000 as one of its earliest employees. From my initial R&D Engineer position, through the role of VP Engineering, and on to the CEO position in February 2013, I have been with the company through a long history of challenges and opportunities. My current focus is on preparing the organization for growth, technical excellence and leadership, and on aligning our solutions, services and tools with the needs of our partners and customers.

On the academic side, I hold a Ph.D. in Microelectronics from INPG, Grenoble Institute of Technology, France, and I am fairly active in the R&D community, in both industry and academia. I am currently serving as program chair for the International On-Line Testing Symposium (IOLTS) in Crete, Greece, an event focusing on the reliability evaluation and improvement of very deep submicron and nanometer technologies.

Q: How does your company help with your customers’ design challenges?

A: Our solutions, tools and testing services have been designed to evaluate and improve the Soft Error Rate (SER) of sophisticated systems, from the process technology and standard cell library up to complex failure mechanisms in complete systems. Our tools can itemize the SER contribution of every design feature (individual cell, memory block, IP, hierarchical block), and our expertise can address the reliability concerns of cell, chip and system designers by improving the reliability of the underlying technology and by adding error management IPs and solutions to the chip and system.
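To illustrate what an itemized SER breakdown means, here is a minimal, hypothetical sketch. It is not IROC's method or the TFIT/SoCFIT output format. It assumes each design feature has already been assigned a soft error rate in FIT (failures per 10^9 device-hours) and that the contributions are independent and simply additive, which ignores the logical, electrical and timing masking that real SER tools model. The component names and FIT values are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """A design feature (cell, memory block, IP, ...) with an assumed SER."""
    name: str
    fit: float  # failures per 1e9 device-hours (hypothetical value)


def ser_breakdown(components):
    """Return the total FIT and each component's percentage share of it.

    Assumes independent, additive failure contributions (no masking/derating).
    """
    total = sum(c.fit for c in components)
    if total <= 0:
        raise ValueError("total FIT must be positive")
    shares = {c.name: 100.0 * c.fit / total for c in components}
    return total, shares


def mttf_hours(total_fit):
    """FIT counts failures per 1e9 device-hours, so MTTF = 1e9 / FIT."""
    return 1e9 / total_fit


if __name__ == "__main__":
    # Hypothetical per-block FIT numbers, for illustration only
    design = [
        Component("sram_block", 600.0),
        Component("flip_flops", 300.0),
        Component("combinational_logic", 100.0),
    ]
    total, shares = ser_breakdown(design)
    print(f"total FIT: {total:.1f}")
    for name, pct in shares.items():
        print(f"{name}: {pct:.1f}%")
    print(f"MTTF: {mttf_hours(total):.3g} hours")
```

With these made-up numbers the memory block dominates (60% of 1000 FIT), which is the kind of ranking that tells a designer where hardening or error management IP would pay off first.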

Q: What are the tool flows your customers are using?

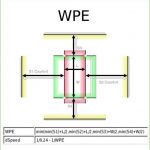

A: Our TFIT (transistor/cell SER evaluation) and SoCFIT (platform for SER evaluation and improvement of complex systems) tools have been designed for seamless integration into existing design flows and methodologies. The TFIT tool is usually used by standard cell designers, in conjunction with a SPICE simulator (HSPICE, Spectre). SoCFIT integrates a collection of modules and tools that provide SER-aware evaluation and improvement capabilities in standard design flows (i.e. Architecture > RTL/HLS > gate-level netlist), optionally connecting to commercial simulation tools (NCSim, VCS, ModelSim, QuestaSim) or to synthesis, verification and prototyping platforms (Synopsys DC, PrimeTime, First Encounter).

Q: What will you be focusing on at the Design Automation Conference this year?

A: We want to meet our customers and prospective customers. Austin is a large design center, and chances are we will meet many designers and engineers who don't usually travel to Silicon Valley or elsewhere for conferences.

Q: Where can SemiWiki readers get more information?

www.iroctech.com

http://www.semiwiki.com/forum/content/section/2094-iroc-technologies.html

Vision: We believe that every modern chip should have the highest level of reliability throughout the product lifetime. IROC Technologies helps the semiconductor industry significantly lower the risk of soft errors by providing software and expert services to prevent soft errors when designing ICs.

With the introduction of submicron technologies in the semiconductor industry, chips are becoming more vulnerable to radiation-induced upsets. IROC Technologies provides chip designers with soft error analysis software, services and expert advisors to improve a chip's reliability and quality.

Exposure of silicon to radiation happens throughout the lifetime of any IC or device, and this vulnerability will grow as development moves to ever smaller geometries. IROC has demonstrated that the soft errors that cause expensive recalls, time-to-volume slowdowns and product problems in the field can be significantly reduced. The mission of the company's soft error prevention software and expert advisors is to let users increase reliability and quality while significantly lowering the risk of radiation-induced upsets throughout the lifetime of products under development.
