Two weeks ago I blogged about analog verification, and the post started a discussion with 16 comments, so clearly our readers have a real interest in this topic. For decades now the Digital IC design community has used and benefited from regression testing as a way to measure both design quality and progress, giving a higher degree of confidence that first silicon will work.

So, what can be done to make automated Analog Verification a reality for the average IC designer? In the Analog design space, a subset of testing will always remain manual. Often there is no way (or desire) to replace pulling up a SPICE waveform or looking at an eye diagram. But there is a large class of testing that can be scripted and automated.

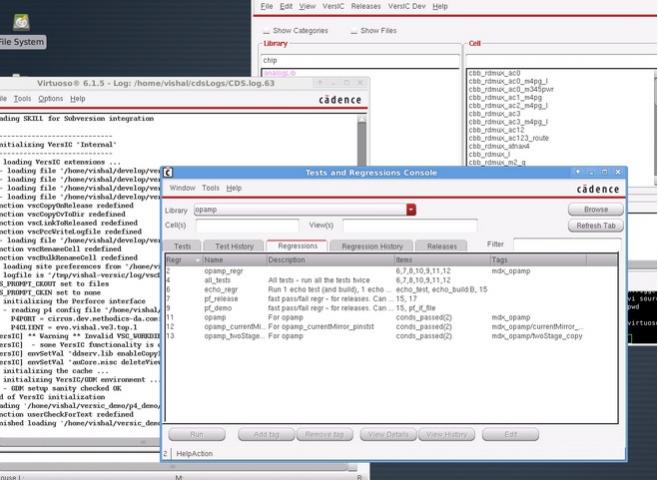

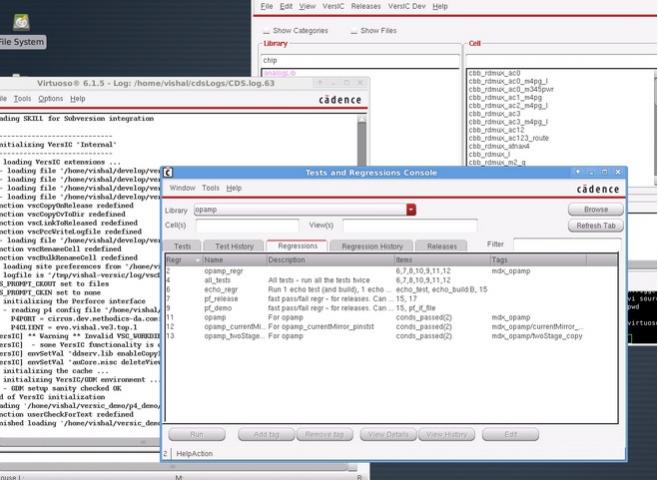

In the last blog in this series, I looked at how a tool like VersIC from Methodics can help you discover, run and track the history of scripted tests right from within your Cadence environment. If you are willing to absorb the initial cost of setting up automation scripts, the benefits are manifold. Scripted tests can be standardized and run multiple times in identical fashion. Tests can be tracked, ported and shared easily across cells, libraries and designs.

Once some scripted tests are available, an additional benefit can be obtained – automatic regressions. A regression is a collection of tests that are run as a group. This group can then be manipulated, tracked and managed as a single entity.
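
To make the idea concrete, here is a minimal Python sketch of a regression treated as a single entity. It is not VersIC's implementation or API: the `Regression` class and its methods are hypothetical names, and each test is assumed to be a plain callable that returns `True` on pass.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Regression:
    """A named group of scripted tests that is run and reported as one unit (hypothetical)."""

    name: str
    tests: Dict[str, Callable[[], bool]] = field(default_factory=dict)

    def add(self, test_name: str, test: Callable[[], bool]) -> None:
        # Register a scripted test; by assumption each test returns True on pass.
        self.tests[test_name] = test

    def run(self) -> Dict[str, bool]:
        # Run every member test in the order it was added.
        # A test that raises an exception is recorded as a failure
        # instead of aborting the whole regression.
        results: Dict[str, bool] = {}
        for test_name, test in self.tests.items():
            try:
                results[test_name] = bool(test())
            except Exception:
                results[test_name] = False
        return results


if __name__ == "__main__":
    # Hypothetical stand-in tests; a real flow would call simulation or check scripts.
    reg = Regression("example")
    reg.add("always_passes", lambda: True)
    reg.add("always_fails", lambda: False)
    print(reg.run())  # {'always_passes': True, 'always_fails': False}
```

Catching exceptions per test is a design choice: one broken script shows up as a single failure rather than hiding the results of every other test in the group.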

Regressions as collections of scripted tests in Virtuoso

Grouping tests into regressions makes it very easy to run a consistent set of checks on every design change. Related tests, say LVS/DRC, port checks and RTL consistency checks, can all be grouped into a single regression that defines the hand-off criteria. There is then no need to remember whether every hand-off criterion was met: simply run this regression, and it ensures that all the checks have been run.
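
A hand-off regression boils down to one pass/fail gate plus a list of what failed. The sketch below assumes each check (LVS/DRC, port check, RTL consistency) has already been wrapped as a Python callable returning `True` on pass; `run_handoff` and the check names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple


def run_handoff(checks: Dict[str, Callable[[], bool]]) -> Tuple[bool, List[str]]:
    """Run every hand-off check and return (all_passed, names_of_failed_checks)."""
    failed = [name for name, check in checks.items() if not check()]
    return (len(failed) == 0, failed)


if __name__ == "__main__":
    # Hypothetical placeholders for the real LVS/DRC, port and RTL consistency scripts.
    checks = {
        "lvs_drc": lambda: True,
        "port_check": lambda: True,
        "rtl_consistency": lambda: False,
    }
    ok, failed = run_handoff(checks)
    print(ok, failed)  # False ['rtl_consistency']
```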

ADEXL functional tests are another natural candidate for grouping into a regression. Each library or cell can have its own functional tests, run through a common ADEXL automation framework, so that essentially the same regression is run on every cell in a library.
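
Running the same functional tests on every cell amounts to taking the cross product of cells and tests. This is a hedged sketch: `run_library_regression` is a hypothetical helper, and the lambdas merely stand in for whatever calls a real ADEXL automation framework would make (no actual ADEXL API is used here).

```python
from itertools import product
from typing import Callable, Dict, List, Tuple


def run_library_regression(
    cells: List[str], tests: Dict[str, Callable[[str], bool]]
) -> Dict[Tuple[str, str], bool]:
    """Apply the same set of functional tests to every cell in a library.

    Results are keyed by (cell, test_name), so every cell is judged
    against exactly the same checks.
    """
    return {
        (cell, test_name): test(cell)
        for cell, (test_name, test) in product(cells, tests.items())
    }


if __name__ == "__main__":
    # Hypothetical cells and tests; each lambda is a stand-in for a simulation run.
    cells = ["cell_a", "cell_b"]
    tests = {
        "dc_operating_point": lambda cell: True,
        "ac_response": lambda cell: cell != "cell_b",
    }
    for key, passed in run_library_regression(cells, tests).items():
        print(key, "PASS" if passed else "FAIL")
```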

When handing a design off to integration, it is good practice to run a critical subset of your tests as a hand-off, or 'sanity', regression. Digital Verification circles commonly call this a 'smoke' regression; it ensures that a minimum level of quality is met before integration is attempted. The integration team can then concentrate on issues at the subsystem or system level, knowing that the individual cells and libraries are consistent.
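
One simple way to carve a smoke regression out of a larger test set is to tag tests and select by tag. The tagging scheme and the `select_smoke` helper below are assumptions for illustration, not a feature of any particular tool.

```python
from typing import Dict, List, Set


def select_smoke(test_tags: Dict[str, Set[str]], tag: str = "smoke") -> List[str]:
    """Return, in sorted order, the names of the tests that carry the given tag."""
    return sorted(name for name, tags in test_tags.items() if tag in tags)


if __name__ == "__main__":
    # Hypothetical test names and tags.
    test_tags = {
        "lvs_drc": {"smoke", "handoff"},
        "port_check": {"smoke"},
        "full_corner_sweep": {"nightly"},
    }
    print(select_smoke(test_tags))  # ['lvs_drc', 'port_check']
```

Tagging keeps a single pool of tests while letting the smoke, hand-off and full regressions each pick their own subset.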

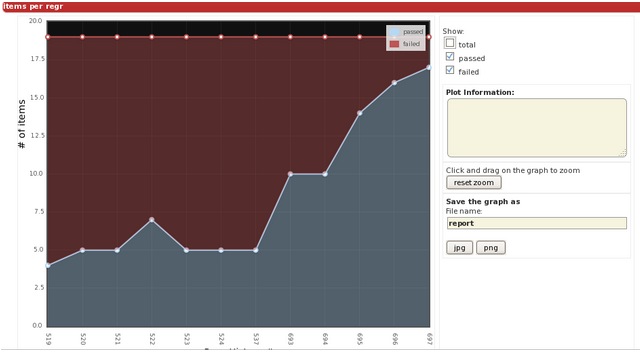

Regression Progress Graph

Regressions can also be tracked for progress: plotting the number of passing and failing tests in a regression over time is a good indicator of the health of the design.
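
The data behind a progress graph like the one above can be derived from stored per-run results. The sketch assumes each run is recorded as a label plus a `{test_name: passed}` dictionary; `progress_series` is a hypothetical helper, and the plotting itself is left to whatever charting tool you use.

```python
from typing import Dict, List, Tuple


def progress_series(
    history: List[Tuple[str, Dict[str, bool]]]
) -> List[Tuple[str, int, int]]:
    """Turn a history of regression runs into (run_label, passed, failed) points.

    Each history entry is (run_label, {test_name: passed}); the output can be
    plotted as two series, passing and failing, over time.
    """
    series = []
    for label, results in history:
        passed = sum(1 for ok in results.values() if ok)
        series.append((label, passed, len(results) - passed))
    return series


if __name__ == "__main__":
    # Hypothetical two-run history.
    history = [("run1", {"a": False, "b": False}), ("run2", {"a": True, "b": False})]
    print(progress_series(history))  # [('run1', 0, 2), ('run2', 1, 1)]
```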

Think of regressions as a powerful communication tool. Regression results automatically provide a view into the current state of the design. The tests included in a regression signal which tests the team considers important. Passing or failing regressions automatically become a gating criterion for accepting a design.

Summary

Regression testing is a new discipline for AMS designs, but a beneficial one, and Methodics has EDA tools that will help you add automated regression testing to your IC design flow.

If you’re traveling to DAC in Austin, visit the engineers at Methodics in booth #1731 and ask for Simon Butler.

Further Reading

- Methodics CEO on Managing Design Quality!

- Analog IC Verification – A Different Approach

- A Brief History of Methodics
