You can often tell how important blogging and social media are to an EDA company by how much effort it takes to find their blog from the Home page. For the folks at Mentor Graphics I’d say that blogging is quite important, because it shows up as a top-level menu item. Notice also how important Twitter is: their latest tweets show up on the Home page just below the logo, a prime real estate position on the web.

Correct by Construction Semiconductor IP

Semiconductor IP re-use enables modern SoC designs to be realized in a timely fashion, yet with hundreds of IP blocks in a chip the chances are higher that an error in any IP block could cause the entire system to fail. At advanced nodes like 28nm and smaller, the number of Process, Voltage and Temperature (PVT) corners is increasing to account for the variability.

Qualification of each cell or IP block is then critical to ensure a correct by construction methodology. There are several modeling formats used for IP blocks:

ECSM Timing Model

To ensure the quality of these models you should be checking:

- Are all arcs populated with data?

- Is the data trustworthy?

- Do the arcs match what is found in the cells’ functional model?

- Does the data track with capacitance and temperature?
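The checks in this list can be sketched in a few lines of Python. This is only an illustration, not how Crossfire works internally: the dictionary layout, the arc names and the function `check_timing_model` are all hypothetical, and the rule that delay should not decrease as load capacitance grows is an assumed stand-in for "does the data track with capacitance".

```python
# Hypothetical in-memory view of a characterized cell: each timing arc maps
# to a list of (load_capacitance_pF, delay_ns) points. The layout and names
# are assumptions for illustration, not a real ECSM or Crossfire format.

def check_timing_model(model_arcs, reference_arcs):
    """Return a list of human-readable issues found in model_arcs."""
    issues = []

    # Do the arcs match what is found in the functional (reference) model?
    for arc in sorted(reference_arcs - set(model_arcs)):
        issues.append(f"missing arc: {arc}")
    for arc in sorted(set(model_arcs) - reference_arcs):
        issues.append(f"unexpected arc: {arc}")

    for arc, points in sorted(model_arcs.items()):
        # Are all arcs populated with data?
        if not points:
            issues.append(f"empty arc: {arc}")
            continue
        # Does the data track with capacitance? Assumed rule: delay must not
        # decrease as the load capacitance increases.
        delays = [delay for _, delay in sorted(points)]
        if any(later < earlier for earlier, later in zip(delays, delays[1:])):
            issues.append(f"non-monotonic delay vs. capacitance: {arc}")
    return issues


reference = {"A->Y", "B->Y"}
model = {
    "A->Y": [(0.01, 0.020), (0.05, 0.045), (0.10, 0.080)],
    "B->Y": [(0.01, 0.022), (0.05, 0.018), (0.10, 0.085)],  # dip at 0.05 pF
    "C->Y": [],                                              # not in reference
}
issues = check_timing_model(model, reference)
for issue in issues:
    print(issue)
```

A real flow would read the arcs from the characterized library and take the reference arc set from the golden functional model rather than from hand-written dictionaries.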

Fractal Technologies is an EDA company with a validation tool called Crossfire, designed to answer exactly these types of IP quality questions.

A design engineer would run the Crossfire tool during the characterization flow and would define a golden reference, such as a Verilog model or a standardized cell-definition format. The timing arcs in the ECSM timing model are then validated against the reference model. This validation process can be fully automated, and by embedding Crossfire into the characterization flow you gain confidence that the model data can be trusted.

An example output qualification report from running Crossfire is shown below:

This report shows both errors and warnings for all quality checks. You even get to see which checks were waived by your IP provider and decide if you agree with them. Additional reports show documentation like: data sheet, terminals, functional model and delay arcs.

The Crossfire Diagnose Option lets you visually traverse all checks and see the errors as hyperlinks; clicking one highlights the error in the appropriate file or database. This feature really speeds up your understanding of what is wrong with your IP without having to be familiar with the structure of the IP.

Another way to use the Crossfire tool is during the IP design flow:

In this flow you would run Crossfire checks on your IP block or component before checking it in. This would catch any inconsistency early in the design process when changes are easier and less time consuming to implement. You could even include Crossfire in nightly QA checks.

Summary

Validating IP and libraries is critical to achieving a correct by construction SoC design flow that works. Quality checks can be run both during the handoff of IP and cell libraries, plus during the IP design flow itself. Fractal Technologies is focused on automating these important quality checks. For more details, read the White Paper: Correct by Construction Design Using Crossfire.

More articles by Daniel Payne…

MEMS in The World of ICs – How to Quickly Verify?

In the modern electronic world, it’s difficult to imagine any system working as a whole without MEMS (Micro-electromechanical Systems) such as pressure sensors, accelerometers, gyroscopes and microphones working in sync with other ICs. In AMS (Analog Mixed-Signal) semiconductor designs specifically, MEMS can occur frequently. In the coming era of the internet of things, MEMS will have a significant presence in every aspect of our day-to-day life. MEMS are 3D devices whose models are often either handcrafted and highly simplified or based on finite-element analysis (FEA), and they go through multiple build-test cycles in the fab, involving time-consuming verification. So, how do we realize the high potential of MEMS, particularly in fast-paced consumer markets? We need an easy-to-use design environment and automated simulation and verification of MEMS together with other ICs, quicker and with the desired level of accuracy.

I came across this press release from Coventor about their MEMS+ 4.0 software release and then got an opportunity to talk to their V.P. of Engineering, Dr. Steve Breit. It was a great conversation with Dr. Breit, who provided a lot of insight into how MEMS+ 4.0 changes the whole paradigm for co-designing MEMS and ICs and makes it the most efficient way to design and verify them together.

The Conversation –

Q: It’s interesting to see the MEMS and IC co-design and verification environment provided by MEMS+ 4.0. How efficient is it? What’s new?

[MEMS+IC Co-design and Verification Environment]

MEMS are modeled in 3D with multiple variables such as geometry, motion, pressure, temperature and so on. The current industry practice is to use models that are handcrafted and simplified to a large extent. Alternatively, conventional finite-element analysis (FEA) is used, which is too time consuming. Overall, this leads to several build and test cycles that do not take full coupling effects into account, and to extremely slow turn-around that hurts time-to-market. In MEMS+ 4.0, MEMS components can be designed in a 3D design entry system by choosing elements (building blocks) from a library. The models can then be imported as symbols into the MathWorks (MATLAB, Simulink) and Cadence (Virtuoso) schematic environments. What’s new in MEMS+ 4.0 is that the MEMS models can also be automatically exported in Verilog-A, which can then be simulated together with the IC description in any environment that supports Verilog-A, whether Cadence (Virtuoso) or other AMS simulators. These models simulate extremely fast, up to 100X faster than full MEMS+ models. By automating the hand-off between MEMS and IC designers, this approach can eliminate design errors and thereby require fewer build-test cycles.

Q: You say that MEMS+ 4.0 platform provides tunable accuracy-versus-speed. How does that work?

Yes, the tool provides the option to choose one, two, or as many nonlinear variables as required (temperature, pressure, electrostatic parameters and so on) to simulate against. Choosing only the few most important variables makes the simulation faster. Other variables can be added as needed. This provides a good tradeoff between accuracy and speed. Of course, simulating with full MEMS+ models is still useful for analyzing corner cases.

Q: What are Reduced Order Models? How do they help?

In MEMS, there is a large number of degrees of freedom (i.e. unknowns), ranging from several hundred to thousands. To make simulation practical, Reduced Order Models (ROMs) are used that reduce the degrees of freedom to, say, about 10. For example, in a microphone, barometric and acoustic effects would be important. With ROMs in Verilog-A, simulation speed increases to a large extent, matching handcrafted Verilog-A while keeping multiple degrees of freedom.
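To make the idea of reducing hundreds of unknowns to about 10 concrete, here is a minimal sketch of one classic reduction technique, modal truncation, applied to a toy problem. It is not Coventor's method and has nothing to do with the MEMS+ internals: the model (a uniform chain of masses and springs fixed at both ends, under a static uniform load) and all function names are assumptions chosen because the mode shapes of this chain are known in closed form.

```python
import math

def full_static_solution(n, stiffness, load):
    """Solve K x = load for the fixed-fixed spring chain (Thomas algorithm).

    K is the n-by-n tridiagonal matrix stiffness * tridiag(-1, 2, -1).
    """
    diag, off = 2.0 * stiffness, -stiffness
    c_prime = [0.0] * n
    d_prime = [0.0] * n
    c_prime[0] = off / diag
    d_prime[0] = load[0] / diag
    for i in range(1, n):
        denom = diag - off * c_prime[i - 1]
        c_prime[i] = off / denom
        d_prime[i] = (load[i] - off * d_prime[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d_prime[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d_prime[i] - c_prime[i] * x[i + 1]
    return x


def reduced_static_solution(n, stiffness, load, n_modes):
    """Approximate the same solution using only the first n_modes modes."""
    x = [0.0] * n
    scale = math.sqrt(2.0 / (n + 1))
    for k in range(1, n_modes + 1):
        # Analytic mode shape and eigenvalue of the uniform chain.
        shape = [scale * math.sin(k * math.pi * (i + 1) / (n + 1)) for i in range(n)]
        eigenvalue = 2.0 * stiffness * (1.0 - math.cos(k * math.pi / (n + 1)))
        # One scalar unknown per retained mode: the modal amplitude.
        amplitude = sum(s * f for s, f in zip(shape, load)) / eigenvalue
        for i in range(n):
            x[i] += amplitude * shape[i]
    return x


def relative_error(approx, exact):
    num = math.sqrt(sum((a - e) ** 2 for a, e in zip(approx, exact)))
    den = math.sqrt(sum(e ** 2 for e in exact))
    return num / den


n_dof = 500                      # "full" model: 500 unknowns
load = [1.0] * n_dof             # uniform load on every mass
exact = full_static_solution(n_dof, 1.0, load)
for modes in (1, 3, 10):
    approx = reduced_static_solution(n_dof, 1.0, load, modes)
    print(modes, "modes -> relative error", relative_error(approx, exact))
```

The reduced model carries one unknown per retained mode instead of one per mass, which is the same kind of trade the ROM makes: a handful of well-chosen degrees of freedom in exchange for a small, controllable loss of accuracy.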

Q: What does that look like: a real-life model exposed to environmental parameters and simulated through these Verilog-A models?

MEMS+ has a library of parametric High-Order Finite Elements that includes mechanical components along with electro-mechanical coupling through electrodes and piezo layers, fluid damping and loading, gas damping and others. Fast transient simulations are performed on complete models. Below is an example of gyroscope model simulated for its drive amplitude and sense output.

Q: In MEMS+ 4.0, capacity of the platform has been increased to 64bit. Is it to do with the design size or more compute space required for the design complexity?

It’s for both. The size of the designs is increasing, and more compute space is required for higher accuracy. With 64-bit, more detailed models can be generated.

It was an interesting discussion for learning about the benefits of MEMS+ models over traditional FEA, and how the MEMS+ 4.0 platform enables faster design closure with its automatic Verilog-A export, which can be simulated (with reasonable accuracy) in any industry-standard AMS simulator that takes Verilog-A as input. Another advantage of exporting as Verilog-A in the world of SoCs and IPs is that the MEMS IP is reasonably protected. Happy designing!!

TSMC ♥ Mentor (Calibre PERC)

As semiconductors become more integrated into our lives, reliability is becoming a critical issue. As IP consumes more of our die, IP reliability is becoming a critical issue. As we pack more transistors into a chip, reliability is becoming a critical issue. As we move from 28nm to 20nm to 16nm, reliability is becoming a critical issue. The last time one of my “always on” mobile devices became unreliable I tossed it out my car window on Highway 101. So yes, semiconductor reliability is an issue.

PERC is now integrated into the TSMC 9000 IP program, so the hundreds, if not thousands, of IP blocks that flow through TSMC can be verified using focused reliability checks for the 28nm, 20nm, and 16nm process nodes. SoC designers can then run PERC at the chip level to revalidate the IP after integration. Given the shrinking design cycles and re-spin costs, this is a no-brainer. The ROI of a thorough and trusted (repeatable) verification environment is compelling.

To investigate further there are several articles about Calibre PERC on SemiWiki:

- How to Simplify Complexities in Power Verification

- A Programmable Electrical Rule Checker

- Static Low-Power Verification in Mixed-Signal SoC Designs

- Robust Reliability Verification: Beyond Traditional Tools and Techniques

- ESD – Key issue for IC reliability, how to prevent?

- Automating Complex Circuit Checking Tasks

- High Frequency Analysis of IC Layouts

Mentor also has a nice Calibre PERC page HERE with white papers, webinars, and videos including this one:

More Articles by Daniel Nenni…..

Pigs Fly. Altera Goes with ARM on Intel 14nm

Altera announced in February that they would be using Intel as a foundry at 14nm. Historically they have used TSMC. Then in June they announced the Stratix 10 family of FPGAs that they would build on the Intel process. At the Globalpress summit in May I asked Vince Hu about their processor strategy. Here is what I wrote about it at the time: “Microprocessors? ARM is a great partner, at 20nm we are committed to ARM. What about 14nm? Is Intel going to manufacture ARM? Is Altera going to put Atoms on FPGAs? Too soon to comment but there may be an announcement soon. So my guess would be that Intel isn’t going to be building ARMs into Altera arrays and some sort of Altera/Intel processor deal will be announced in the future.”

It turns out I was wrong. Today Altera announced their processor strategy for the Stratix 10 series. They will use a quad-core 64-bit ARM Cortex-A53. Yes, Intel is going to be building 64-bit ARM processors in their 14nm FinFET (they call it Tri-Gate) process. And yes, that is a pig you see in the sky.

I asked Altera about the schedule for all of this. Currently they have over 100 customers using the beta release of their software to model their applications in the Stratix 10. They have taped out a test-chip that is currently in the Intel fab. In the first half of next year they will have a broader release of the software to everyone. They will tape out the actual designs late in 2014 and have volume production starting in early 2015.

Why did they pick this processor? It has the highest power efficiency of any 64-bit processor. Plus it is backwards compatible with previous Altera families which used (32-bit) ARM Cortex-A9. The A53 has a 32-bit mode that is completely binary compatible with the A9. As I reported last week from the Linley conference, ARM is on a roll into communications infrastructure, enterprise and datacenter so there is a huge overlap between the target markets for the A53 and the target markets for the Stratix 10 SoCs.

You probably know that FPGAs are typically used as a process driver in foundries. They are very regular and so can be used to generate lots of statistical yield data. But this means that FPGA companies are typically working on both a new architecture and a new process at the same time. Intel doesn’t use FPGAs as a process driver, they use microprocessors of course. With all that on-chip cache they have some of the same desirable features as a process driver. Altera feel that they are in better shape as a result. They will be ramping in early 2015 but not in a new process, in Intel’s 14nm process which should have been in volume production for about a year with Intel’s own microprocessor families.

So what about the Stratix 10 family? Of course it is faster (2X) and lower power (70%). They will also have the Arria 10 midrange FPGA family, which will be built in TSMC’s 20nm planar process. But the Stratix 10 has a gigahertz fabric, 10 teraflops of signal processing…and a quad-core 64-bit ARM. The programming chain is based on OpenCL. You can start by programming the ARM and ignoring the FPGA, then gradually use the FPGA fabric to accelerate various functions, transparently to the programmer.

Diagnosing Double Patterning Violations

I’ll bet you’ve read a bunch of stuff about double patterning, and you’re probably hoping that the design tools will make all your double patterning issues just go away. Well, the truth is that the foundries and EDA vendors have worked really hard to make that true.

However, for some critical portions of your design, there is just no getting away from some degree of layout debugging, and double patterning can be especially problematical in that respect. That’s because the cycle errors that can occur when layouts are not perfectly decomposed can be tough to fix. It’s like the old “whack-a-mole” game—every time you move a layout feature to fix one cycle error, a new error, whether it be a new cycle error, a spacing error or some other violation, tends to pop up. It can be very frustrating.
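For readers who have not met cycle errors before: double patterning decomposition is usually framed as two-coloring a conflict graph, where each shape is a node and two shapes are linked if they sit too close together to share a mask. A loop with an odd number of shapes cannot be split across two masks. The sketch below illustrates that idea only; it is not the Calibre algorithm, and the shape names, the `conflicts` dictionary and the function `two_color` are made up for the example.

```python
from collections import deque

def two_color(conflicts):
    """Try to assign each shape to mask 0 or 1.

    conflicts maps a shape name to the set of shapes that sit too close to it
    to share a mask. Returns (coloring, None) on success, or (None, edge)
    where edge is a pair of conflicting shapes forced onto the same mask,
    which means the conflict graph contains an odd cycle.
    """
    color = {}
    for start in sorted(conflicts):
        if start in color:
            continue
        color[start] = 0
        queue = deque([start])
        while queue:
            shape = queue.popleft()
            for neighbor in sorted(conflicts[shape]):
                if neighbor not in color:
                    color[neighbor] = 1 - color[shape]
                    queue.append(neighbor)
                elif color[neighbor] == color[shape]:
                    return None, (shape, neighbor)
    return color, None


# Three shapes that all conflict with each other: an odd cycle.
triangle = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b"}}
print(two_color(triangle))

# "Fix" it by moving c away from a, but c now lands next to d and e,
# which already conflict with each other: a new odd cycle appears.
after_move = {
    "a": {"b"},
    "b": {"a", "c"},
    "c": {"b", "d", "e"},
    "d": {"c", "e"},
    "e": {"c", "d"},
}
print(two_color(after_move))
```

The second case is the whack-a-mole effect in miniature: the original cycle is gone, but the move created another one somewhere else.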

One of the ways that design tools are addressing this problem is to provide immediate feedback when you make a change to fix a DP error. This two-minute demo shows how this works in Mentor’s Calibre RealTime tool.

The patent-pending warning rings in the video are part of the Calibre Multi-Patterning debugging technology, which predicts multi-patterning error propagation so you can avoid creating new errors as you correct existing errors. This information simplifies the debugging of multi-patterning errors, and also lets you learn and apply this knowledge to other designs. If you need to know more, take a gander at the Calibre double patterning datasheet.

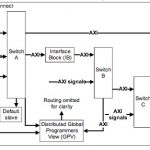

Getting the Most Out of the ARM CoreLink™ NIC-400

SoC designers are attracted to ARM as an IP provider because of their popular offerings and growing ecosystem of EDA partners like Carbon Design Systems. At ARM TechCon in Santa Clara this week, on October 31, you’ll have an opportunity to hear a joint presentation from ARM and Carbon Design Systems on: Getting the Most Out of the ARM CoreLink NIC-400.

Here’s a top-level block diagram of the CoreLink NIC-400 network interconnect:

ARM’s CoreLink NIC-400 interconnect is a versatile piece of IP capable of maximizing performance for high-throughput applications, minimizing power consumption for mobile devices, and guaranteeing a consistent quality of service. To achieve this versatility, the IP is highly configurable. Finding the right configuration for your particular application can be time-consuming. This presentation highlights the key features of the NIC-400 as well as a methodology, based on real system traffic and software, for making the best design decisions.

Speakers

Bill Neifert | CTO, Founder, VP Business Development, Carbon Design Systems

Bill Neifert is the founder and chief technical officer at Carbon Design Systems. He has more than 20 years of EDA and design experience. After starting his career as an ASIC design and verification engineer at Bull HN/Zenith Data Systems, Bill held various applications engineering and AE management roles at Quickturn. He has a BSCE and an MSCE from Boston University.

William Orme | Verification IP Solutions Architect, ARM

As a strategic marketing manager, William Orme is responsible for the CoreLink NIC-400 and the next-generation on-chip interconnect. At ARM since 1996, he has led the introduction of many new products, including the ETM and subsequent CoreSight multicore debug and trace products. Prior to joining ARM, he spent 12 years designing embedded systems, from financial dealing rooms through industrial automation to smartcard systems. William holds degrees in Electronics and Computer Science as well as an MBA.

2012 Presentation

One year ago Bill Neifert of Carbon presented with Rob Kaye of ARM on: High Performance or Cycle Accuracy? You can view that White Paper online now.

Registration

Online registration for ARM TechCon is now closed; however, you can still register on-site.

More articles by Daniel Payne…

Cadence’s Mixed-Signal Technology Summit

On October 10, I attended another Cadence Summit, this one titled the Cadence Mixed-Signal Technology Summit. Recently, I had written about the Cadence Silicon Verification Summit. The verification event was the first of its kind, and I thought it had terrific content. Being more of a digital guy myself, I was unaware that Cadence had been holding these mixed-signal events for several years now and that this one was the fourth. I’ll admit that as the day went on, more of the discussion went beyond my technical background. Of course, I have a BSEE and I have worked in the SPICE world before, but it is an entirely different thing to be a mixed-signal designer. I now have a much deeper appreciation of the field.

Brian Fuller was once again the moderator of the event. As I mentioned before, if you haven’t seen Brian’s videos on the Cadence website, they are truly entertaining and you should check them out. Dr. Chi-Ping Hsu, Cadence’s Sr. VP R&D and Chief Strategy Officer, spoke next. His remarks were brief but informative, reminding us of Cadence’s huge investment (~10,000 man-years) and long history (more than 20 years!) of providing tools for mixed-signal design. The initial driving force for this effort was SDA founder Jim Solomon who, as I understand it, is no slouch in mixed-signal design himself. The two speakers that followed gave very enlightening keynote presentations that made the entire day worth it for me.

First was the Academic Keynote: Challenges in Emerging Mixed-Signal Systems and Applications by Prof. Terri S. Fiez, Professor and Head of the EECS Department at Oregon State University. Professor Fiez started by listing the most challenging and fastest growing applications requiring mixed-signal design expertise: medical, smart home, communications, energy, and transportation. One of the topics she focused on was the evolution of solar panels. Traditional solar panels send DC power off the panel to a separate inverter that converts it to AC power, with additional logic to integrate it with the power grid or the user’s home. Smart solar panels need to go straight from the panel to the grid, meaning the previous off-panel intelligence needs to be built into the solar panel itself. A significant future goal is to have scaled systems able to work from 200W to 500kW. There is a need to eliminate the electrolytic capacitor currently used in order to achieve 25-year reliability while increasing energy harvesting by 4x! Did I mention I have a new appreciation for mixed-signal designers? As a preview to the next presentation, Prof. Fiez went on to talk about energy harvesting for use in remote sensors. This was getting fun…

Next up was Geoff Lees, SVP and GM of microcontrollers for Freescale. Mr. Lees’ presentation was titled Designing for the Internet of Things (IoT), Peering Into the Future, Edge Node Integration. This was a fascinating presentation. The IoT will include a huge variety of remote sensors and microcontrollers that need to be powered by something other than an outlet or a battery (energy harvesting). Mr. Lees discussed nine different techniques for energy harvesting that are in development in industry now. In addition, security is critical; in Mr. Lees’ opinion, IPv6 is the “holy grail” for IoT security concerns. Power is also challenging at each technology node because computing capabilities have been going up by 2.8x at each node while leakage seems to be increasing exponentially. So, edge nodes (the remote devices in the IoT) require off-states, and low power is more important than performance. Looking at semiconductor processes, Mr. Lees indicated that 28nm will become the workhorse process for mixed-signal design for a very long time due to both the cost of using more advanced nodes and the fact that the transistors are changing from HKMG to FinFET. Which leads us to the next presentation…

Douglas Pattullo, the Technical Director of TSMC North America, spoke next. He talked about the processes TSMC is developing and, more importantly, what he sees in the future for mixed-signal design. Looking at the transition to FinFET, it is clear that we should not expect the W/L control over transistors that we have been accustomed to. Analog designers will need to live with unitized transistors (transistors with an integer number of fins). What was possible in planar transistors just cannot be done with fins. While I would say the TSMC view of mixed-signal design at 14nm was more positive than Freescale’s view, I think it is agreed that 28nm will be the home of mixed-signal design for a long time.
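A quick back-of-the-envelope sketch shows what "unitized" means in practice. It uses the common first-order approximation that each fin contributes two sidewalls plus its top to the effective width, W_eff = n_fins × (2 × H_fin + T_fin). The fin dimensions below are illustrative values chosen for round numbers, not figures from TSMC or from the talk, and the function names are made up for the example.

```python
def effective_width_nm(n_fins, fin_height_nm, fin_thickness_nm):
    """First-order approximation: the gate wraps two sidewalls and the top."""
    return n_fins * (2 * fin_height_nm + fin_thickness_nm)


def nearest_fin_counts(target_width_nm, fin_height_nm, fin_thickness_nm):
    """Return the fin counts just below and just above a planar-style target width."""
    per_fin = effective_width_nm(1, fin_height_nm, fin_thickness_nm)
    below = max(1, int(target_width_nm // per_fin))
    above = below if below * per_fin >= target_width_nm else below + 1
    return below, above


# Illustrative (assumed) fin geometry: 40 nm tall, 10 nm thick -> 90 nm per fin.
FIN_HEIGHT_NM = 40
FIN_THICKNESS_NM = 10

target = 400  # the width a planar designer might have drawn, in nm
low, high = nearest_fin_counts(target, FIN_HEIGHT_NM, FIN_THICKNESS_NM)
print("target", target, "nm")
print(low, "fins ->", effective_width_nm(low, FIN_HEIGHT_NM, FIN_THICKNESS_NM), "nm")
print(high, "fins ->", effective_width_nm(high, FIN_HEIGHT_NM, FIN_THICKNESS_NM), "nm")
```

With these assumed numbers the designer has to choose between four fins and five; nothing in between is available, which is exactly the loss of W/L control described in the talk.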

There were many other presentations by Cadence personnel, as well as Cadence’s customers, after the TSMC talk. Most of them went over my head. My respect for mixed-signal designers having reached a new high, I headed out of the Cadence Building 10 auditorium before the conclusion of the event feeling a bit humbled by the geniuses still in the room.

Smartphones Are More Amazing Than You Can Believe

I’ve written before about just how widespread mobile phones are: more people use mobile phones than a toothbrush, and more know how to use one than know how to use a pencil to write. This all happened very recently. I bet if you are asked when you got your first mobile phone you will guess too early. I had a car-phone in 1993 and a real mobile phone around 1996. By then I was working in the planning group for cellular phones and I was almost obligated to have the latest Ericsson phone…they were our biggest customer, up to 40% of total revenue for a single high-volume chip. Anyway, I wasn’t going to show up with a Motorola handset. In that era, in the business, handsets were called ‘terminals’ and several companies had divisions that used that word. But it wasn’t exactly consumer facing and morphed into different words in different countries: handset, mobile, handy, cell, and more.

iPhone is the most successful consumer launch…ever. Compared to say PCs or television, the ramp is much steeper. And when I say iPhone, I mean iPhone. If you want to look at the whole smartphone market it is even more incredible, mostly due to Samsung who are the other significant player in the market, but recently lots of low-cost Chinese suppliers too, many enabled by MediaTek’s chipsets.

How do iPhone sales stack up:

- Apple or Boeing vs iPhone? Apple

- Procter & Gamble versus iPhone? Apple

- Coca Cola and McDonalds…combined vs iPhone? Apple

OK, so you probably assumed that those above results were for Apple. No, just iPhone. There is another half of Apple that is not iPhone: iPods, iTunes, MacBooks and more. Nobody seems to know this but Apple isn’t just bigger than Microsoft, iPhone alone is…or the rest of Apple. When you are twice as big you have two bits that are bigger.

So, want to know some more amazing statistics? iPhone (not the whole of Apple) has revenue bigger than the turnover of 474 of the Fortune 500 (the ones at the top are mostly oil companies). Or all the non-bank companies on the London FTSE. Mobile is unbelievably large. It is the size of automotive but grew in a dozen years instead of a century. It is also very concentrated. Last time I looked, Apple and Samsung made over 100% of the profits in mobile. Meaning everyone else in aggregate lost money.

Google dominate market share with Android but since it is free it is hard to know how much money they make. But one theory is that by being ubiquitous they get all the consumer data that the networks do at a fraction of the price of building a network. We’ll see. In China there are lots of Android phones with no Google Apps: Baidu, Alibaba, QQ and all those Chinese alternatives.

Let’s comment… a comment from Sonics (about Arteris)

We still don’t know the precise status of a potential acquisition of Arteris by Qualcomm, and I prefer not to comment on a rumor and to wait for the official announcement, if any. But I would like to comment … on a comment about this rumor, recently made by Sonics. This comment has taken the form of an Open Letter, from “Grant Pierce, CEO of Sonics, written directly to Charlie Janac, CEO of Arteris”. As far as I know, this is probably the first time that a CEO has publicly commented on such an acquisition rumor. This is probably a way to remind us that Sonics is also able to be innovative, after all.

Sonics CEO Letter to Arteris CEO

I have extracted some quotes from this open letter, and I will comment on the comment.

– Are your customers (like Samsung, TI, and LG) who compete with Qualcomm expected to simply let you hand over their confidential data to Qualcomm in order to get bugs fixed?

This first point is quite interesting, especially when looking at the Qualcomm competitors mentioned here: Samsung and TI. Even though TI was the first company serving the wireless IC market segment, and the clear leader for years in the 2000’s, the company decided to completely exit this segment a while ago. In other words, TI is no longer a competitor of Qualcomm.

Samsung is clearly playing in the wireless segment, in multiple ways:

- Foundry, serving Apple, to process Apple’s Application Processor IC

- Chip Maker, designing Baseband and AP IC (the latest integrating Arteris NoC IP)

- OEM, selling smartphones, some of them integrating… Qualcomm IC

As you can see, Samsung can be at the same time a supplier (and direct competitor) for Apple, and a customer (and direct competitor) of Qualcomm. If these companies are able to manage these complex relationships, there is no reason why they could not manage the new situation. Qualcomm makes big business of licensing technology, so this is a walk on the beach.

– Surely you are not expecting Arteris new hires to come in and support the large code base your R&D has developed over the 10 year history of Arteris?

I love this comment starting with: “Surely you are not expecting new hires…”. I thought that Charles Janac was CEO of Arteris, making the decision to hire (or not to hire) engineers to support customers, not Grant Pierce. To be specific, we can guess (but only guess, as we have no official information…) that the (potential) sale of NoC technology to Qualcomm will generate some cash, as is usually the case. Maybe enough cash to hire the missing engineers. The acquisition amount will be a good indication of whether the new Arteris will have enough money in the bank to do so…

– How will you keep up with new system requirements, new protocols, and customer feature requests without your original engineering team?

I think that Sonics should wait for the potential sale to occur before asking these (relevant) questions…

Last but not least:

In the past, you have claimed market leadership by both revenue and technology, although this has never been the case. Sonics has always been the leader in this overall segment!

We have blogged on SemiWiki about the “NoC History”, so our readers know that, while Sonics was innovative in the 90’s with its crossbar-based solution, the Network-on-Chip was the real innovation of the late 2000’s. If we look at the ranking by revenue, it’s true that Sonics leads, with license revenue of $12.8M in 2012, after $4.8M in 2011. It’s also true that the jump in revenue in 2012 came from a huge $20M (one-time) PO from Intel, whereas the $14.5M license revenue made by Arteris comes from several dozen NoC IP sales to various customers. Nevertheless, we have no doubt that new Sonics customers will get the same level of support as Intel has.

More Articles by Eric Esteve …..
