This year’s OIP was much more lighthearted than I remember, which is understandable: TSMC is executing flawlessly, delivering new process technology every year. Last year’s opening speaker, David Keller, used the phrase “Celebrate the way we collaborate,” which served as the theme for the conference. This year David’s catchphrase was “Insatiable computing trend,” which again set the theme.

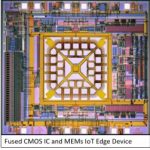

First up was Dr. Cliff Hou’s update on design enablement for TSMC’s advanced process nodes. Cliff again hit on the Mobile, HPC, IoT, and Automotive markets, with a focus on 55ULP, 40ULP, 28HPC+, 22ULP/ULL, 16FFC, and 12FFC. Speaking of 16FFC, TSMC’s Fab 16 in Nanjing, China is on track to start production in the second half of 2018, approximately two years after groundbreaking. These will be the first FinFET wafers manufactured in China, another first for TSMC. China represents the largest growth opportunity for TSMC, so this is a very big deal.

Not surprisingly, 10nm was missing from the presentations, but as we all know, Apple is shipping 10nm SoCs in the new iPads and iPhones. As you may have read, the new iPhone X supports the “Insatiable computing trend,” but we can talk about that in more detail when the benchmarks and teardowns become available. Needless to say, I will be one of the first on the block to own one.

Cliff compared 16nm and 7nm, giving 7nm a 33% performance or 58% power advantage. 7nm is now in risk production with a dozen different tape-outs confirmed for 2017, and you can bet most of those are SoCs, with a few GPUs and FPGAs mixed in. 7nm HVM is on track for the first half of 2018, followed by N7+ (EUV) in 2019. Relative to N7, N7+ currently offers 1.2x density and a 10% performance or 20% power improvement. The key point here is that the migration effort from N7 to N7+ is minimal, meaning that TSMC 7nm will be a very “sticky” process. Being first to EUV production will be a serious badge of honor, so I expect N7+ will be ready for Apple in the first half of 2019.

Finally, Cliff updated us on TSMC’s packaging efforts: InFO_OS, InFO_POP, CoWoS, and the new InFO_MS (integrated logic and memory). Packaging is now a key foundry advantage, so we will take a much more detailed look at the different options in the coming weeks as the presentations are made available.

As you all know, I’m a big fan of Cliff (I have known him for many years), and he has never led me astray, so you can take what he says to the bank, absolutely.

The other keynotes were given by our three beloved EDA companies, which celebrated TSMC’s accomplishments over the last 30 years. I would give Aart de Geus the award for the most content without the use of slides. Aart offered a nice retrospective: Synopsys is also 30 years old, so the two companies really grew up together. Anirudh Devgan of Cadence talked about systems companies doing specialized chips to meet their insatiable computing needs. As I mentioned before, systems companies now dominate SemiWiki readership, so I found myself nodding my head quite a bit here. Wally Rhines gets the award for the funniest slide, illustrating the yield improvements TSMC logos have accomplished over the years.

All in all, it was time very well spent. It was a good crowd, the food was great, and I gave away another 100 books and SemiWiki pens in an effort to stay relevant. There were more than 30 technical papers that we will cover as soon as they are made available. If you have specific questions, hit me up in the comments section.
