Open Silicon hosted a webinar today focusing on their High Bandwidth Memory (HBM) IP-subsystem product offering. Their IP-subsystem is based on the HBM2 standard and includes blocks for the memory controller, PHY and high-speed I/Os, all targeted at TSMC’s 16nm FF+ process. The IP-subsystem supports the full HBM2 standard, with up to eight 128-bit channels (a 1024-bit interface) that together deliver up to 256 GBps of memory bandwidth.

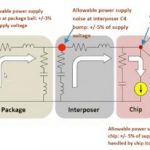

Open Silicon’s IP-subsystem has been verified in TSMC silicon, including on TSMC’s 65nm-based CoWoS (chip-on-wafer-on-substrate) interposer, and the silicon measurements correlated well with the simulations run before manufacturing. This bodes well for designers who plan to use the IP, as it shows that Open Silicon’s simulation and modeling strategy is working. Open Silicon also characterized their IP over the commercial range at different supply voltages. The results shown at the webinar had the IP-subsystem successfully delivering 1.6Gbps/pin from -10°C to +85°C with supply voltages of 1.14V to 1.26V, and 2.0Gbps/pin over the same temperature range with supply voltages of 1.28V to 1.42V.
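To make the reported operating windows concrete, here is a small Python sketch that encodes only the figures quoted above (1.6Gbps/pin at 1.14V to 1.26V, 2.0Gbps/pin at 1.28V to 1.42V, both from -10°C to +85°C) and looks up which reported rate covers a given voltage and temperature point. The class and function names are hypothetical, and the windows are webinar results, not datasheet limits.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OperatingWindow:
    """One characterized voltage/temperature range, as reported in the webinar."""
    rate_gbps_per_pin: float
    vmin: float            # volts
    vmax: float            # volts
    tmin_c: float = -10.0  # degrees C
    tmax_c: float = 85.0   # degrees C

    def contains(self, volts: float, temp_c: float) -> bool:
        return self.vmin <= volts <= self.vmax and self.tmin_c <= temp_c <= self.tmax_c


# Only the figures quoted in the article; nothing here is a datasheet limit.
REPORTED_WINDOWS = [
    OperatingWindow(1.6, 1.14, 1.26),
    OperatingWindow(2.0, 1.28, 1.42),
]


def reported_rate(volts: float, temp_c: float) -> Optional[float]:
    """Highest reported per-pin rate whose window covers this point, or None
    if the point falls outside every characterized window."""
    rates = [w.rate_gbps_per_pin for w in REPORTED_WINDOWS if w.contains(volts, temp_c)]
    return max(rates) if rates else None


print(reported_rate(1.20, 25.0))  # 1.6
print(reported_rate(1.35, 85.0))  # 2.0
print(reported_rate(1.27, 25.0))  # None (between the two reported windows)
```

A point that returns `None` was simply not covered by the reported data; it says nothing about whether the IP would work there.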

The HBM2 IP-subsystem is currently offered in two options. The first option is to license the IP-subsystem from Open Silicon, in which case the licensee receives RTL for the memory controller, hard IP in GDS form for the PHY and high-speed I/Os, Verilog models of the main subsystem blocks, and subsystem documentation.

The second option is to use Open Silicon’s 2.5D HBM2 ASIC SiP (system-in-package) IP, in which Open Silicon helps integrate the IP-subsystem into the customer’s ASIC and then designs and integrates that ASIC and the chosen HBM2 memory into a SiP using the TSMC CoWoS interposer.

Customers also have access to Open Silicon’s validation/evaluation platform to perform early testing of the memory interface against expected system-level memory access patterns. The validation platform uses an FPGA-based reference board carrying a prefabricated version of the HBM2 IP-subsystem together with HBM2 memory. The rest of the customer’s ASIC system is synthesized into the on-board FPGA, where that logic can run in conjunction with the HBM2 IP-subsystem and HBM2 memory.

A USB connection links the evaluation board to a workstation running Open Silicon’s software suite known as “HEAT”. HEAT stands for Hardware Enabled Algorithm Testing; it combines the hardware on the evaluation board with design-for-test (DFT) JTAG and BIST logic that can access, control and monitor the CSR registers in the HBM controller IP as well as the test chip registers. The HEAT GUI lets designers build customized traffic generators that mimic real system usage and then monitor system bandwidth, latency and data-mismatch results. The software can use fixed or variable data patterns, including pseudo-random binary sequences (PRBS), along with standard increment, decrement, walking-zero and walking-one addressing patterns.
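The pattern types HEAT supports are standard memory-test building blocks. The Python sketch below is not HEAT’s implementation; it is a minimal illustration assuming a 7-bit maximal-length LFSR for the PRBS stream (feedback from bits 6 and 5, period 127) and a simple XOR-and-popcount check for data mismatches. All function names are hypothetical, and real test equipment may use other PRBS orders, seeds or bit orderings.

```python
from itertools import islice
from typing import Iterator, List, Tuple


def prbs7_bits(seed: int = 0x7F) -> Iterator[int]:
    """PRBS7-style bit stream from a 7-bit maximal-length LFSR (period 127)."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("LFSR seed must be non-zero")
    while True:
        bit = ((state >> 6) ^ (state >> 5)) & 1  # XOR of the two top taps
        state = ((state << 1) | bit) & 0x7F      # shift the new bit in
        yield bit


def prbs7_words(width: int, count: int, seed: int = 0x7F) -> List[int]:
    """Pack consecutive PRBS7 bits into `count` data words of `width` bits."""
    bits = prbs7_bits(seed)
    words = []
    for _ in range(count):
        word = 0
        for b in islice(bits, width):
            word = (word << 1) | b
        words.append(word)
    return words


def walking_ones(addr_bits: int) -> List[int]:
    """A single 1 walking across the address bits: 0b0001, 0b0010, ..."""
    return [1 << i for i in range(addr_bits)]


def walking_zeros(addr_bits: int) -> List[int]:
    """A single 0 walking across otherwise all-ones address bits."""
    mask = (1 << addr_bits) - 1
    return [mask ^ (1 << i) for i in range(addr_bits)]


def incrementing(start: int, count: int) -> List[int]:
    return list(range(start, start + count))


def decrementing(start: int, count: int) -> List[int]:
    return list(range(start, start - count, -1))


def mismatches(expected: List[int], observed: List[int]) -> Tuple[int, int]:
    """Return (mismatched words, total flipped bits) between written and read-back data."""
    bad_words = bad_bits = 0
    for e, o in zip(expected, observed):
        diff = e ^ o
        if diff:
            bad_words += 1
            bad_bits += bin(diff).count("1")
    return bad_words, bad_bits


# Write PRBS data along a walking-ones address pattern, then compare it with a
# simulated read-back containing one flipped bit.
addrs = walking_ones(4)
data = prbs7_words(width=16, count=len(addrs))
readback = list(data)
readback[2] ^= 0x0001
print(addrs, walking_zeros(4))    # [1, 2, 4, 8] [14, 13, 11, 7]
print(mismatches(data, readback))  # (1, 1)
```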

The current HBM2 IP-subsystem supports data rates of up to 2Gbps/pin with up to 8 channels (or 16 pseudo channels), delivering up to 256 GBps of total bandwidth. It is compliant with the JEDEC HBM 2.2 specification and supports interposer trace lengths of up to 5mm at the stated performance. It also supports both AXI and a native host interface.
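The 256 GBps figure follows directly from the interface width and the per-pin rate: 8 channels × 128 bits gives 1024 data pins, and 1024 × 2Gbps / 8 bits per byte = 256 GBps. The helper below (a hypothetical name) reproduces that arithmetic; it computes theoretical peak bandwidth only, ignoring refresh and protocol overhead.

```python
def peak_bandwidth_gbytes(data_rate_gbps_per_pin: float,
                          channels: int = 8,
                          bits_per_channel: int = 128) -> float:
    """Theoretical peak bandwidth, in GB/s, of one HBM2 interface.

    Pseudo-channel mode splits each 128-bit channel into two 64-bit pseudo
    channels, so the total data width (and this peak figure) is unchanged.
    """
    total_data_pins = channels * bits_per_channel
    return total_data_pins * data_rate_gbps_per_pin / 8  # bits -> bytes


print(peak_bandwidth_gbytes(2.0))  # 256.0
print(peak_bandwidth_gbytes(1.6))  # 204.8
print(peak_bandwidth_gbytes(2.4))  # 307.2
```

The same formula applied to a 2.4Gbps/pin rate gives 307.2 GBps, consistent with the “greater than 300 GBps” figure for the 7nm roadmap.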

Open Silicon also showed a roadmap of their next development, including a port of their HBM2 IP-subsystem to TSMC’s 7nm CMOS process, with the intent of having it silicon validated by mid-2018. This version will support a multi-port AXI interface with different arbitration schemes and quality-of-service (QoS) levels, as well as programmable address mapping modes. The 7nm technology will enable more than 300 GBps of total bandwidth at data rates of up to 2.4Gbps/pin. This timeline matches when the major HBM memory providers are expected to deliver products supporting 2.4Gbps data rates. Additionally, Open Silicon already has its eye on the evolving HBM3 standard and is targeting an IP-subsystem that supports it in a TSMC 7nm process. They expect HBM3 work to begin sometime in 2019.
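The webinar did not detail the arbitration or QoS schemes. As an illustration only, here is a hypothetical Python sketch of one common approach for a multi-port controller: grant the pending request with the highest AXI QoS value (AXI4 carries a 4-bit QoS field on ARQOS/AWQOS) and break ties round-robin so that equal-priority ports share the memory fairly. This is an assumption about how such an arbiter might work, not Open Silicon’s design.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class QosArbiter:
    """Hypothetical multi-port arbiter: the highest QoS value wins, and ports
    tied at that value are served round-robin so none starves the others."""
    num_ports: int
    last_granted: int = -1

    def grant(self, requests: Dict[int, int]) -> Optional[int]:
        """`requests` maps port index -> 4-bit QoS value (0-15) for each pending
        request. Returns the granted port, or None if nothing is pending."""
        if not requests:
            return None
        top = max(requests.values())
        candidates = {p for p, q in requests.items() if q == top}
        # Scan ports starting just after the previous winner (round-robin).
        for offset in range(1, self.num_ports + 1):
            port = (self.last_granted + offset) % self.num_ports
            if port in candidates:
                self.last_granted = port
                return port
        return None  # only reached if requests name out-of-range ports


arb = QosArbiter(num_ports=4)
pending = {0: 2, 1: 8, 3: 8}                    # ports 1 and 3 tie at the top QoS
print([arb.grant(pending) for _ in range(4)])   # [1, 3, 1, 3]
print(arb.grant({0: 2, 2: 5}))                  # 2
```

Strict priority keeps latency low for high-QoS traffic, but a low-QoS port can still starve under sustained high-priority load; real controllers often add aging or bandwidth caps to bound that.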

So, to summarize, Open Silicon presented their silicon-proven HBM2 IP-subsystem on TSMC 16nm FF+ and showed good modeling and simulation predictability. They also shared some interesting details of their design, along with their FPGA-based evaluation and validation board and test methodology. And they followed up with real characterization data from their runs with TSMC, showing impressive data rates of 1.6Gbps/pin to 2Gbps/pin over 5mm interposer traces.

All in all, this is a very impressive set of IP that can be used in a wide variety of markets where high-bandwidth memory is key to success. System designers building high-performance computing and networking solutions for data centers, augmented and virtual reality, neural networks, artificial intelligence and cloud computing can all benefit from Open Silicon’s HBM2 IP-subsystem, which not only completes their solutions but also lowers their risk and shortens their time to market.

Nice job, Open Silicon! A replay of the webinar is available.
