Terms like avalanche breakdown and impact ionization sound like they come from the world of science fiction. They do indeed come from a high-stakes world, but one that plays out over and over again here and now, on a microscopic scale in semiconductor devices, namely as part of electrostatic discharge (ESD) protection. Semiconductor devices are highly vulnerable to the high voltage spikes that commonly occur when triboelectrically charged objects come into contact with their terminal pins. ESD can be lethal to a device if inadequate protection is designed into the chip.

In an unprotected or incorrectly protected circuit, ESD events can lead to failures or affect chip reliability. The failure mechanisms fall into several categories, including oxide breakdown, junction burnout and metal burnout. Many failures are immediately obvious, but ESD can also cause latent damage that goes undetected during testing. If the design lacks adequate ESD protection, this latent damage can worsen over time and ultimately lead to field failures months or years later.

Typically, ESD protection is provided by creating alternative parallel electrical paths that harmlessly direct discharge energy so that it dissipates in ESD devices. These circuit paths contain so-called clamp devices and diodes that are normally switched off to allow normal circuit operation. Once a specified threshold voltage is reached, however, the clamps begin conducting current and limit the voltage. Many types of ESD clamps rely on the internal structure of MOSFET devices to perform their job.

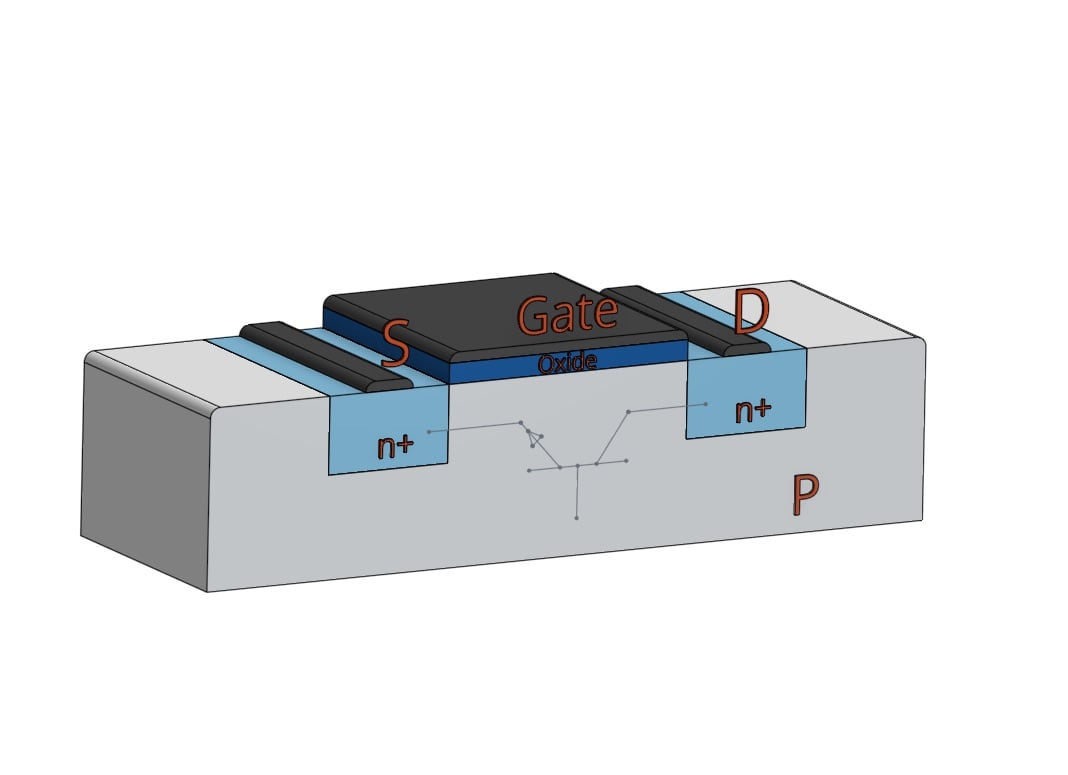

Every NMOS FET contains a parasitic bipolar junction transistor (BJT) due to the configuration of the doped material. In the normal operation of an NMOS device the parasitic BJT does not come into play. As shown in Figure 1, the BJT has the source and drain as its emitter and collector respectively, and the bulk node underneath can serve as the base of the BJT under the right conditions. This is where impact ionization comes into play. A mobile electron or hole will move under the influence of an electric field. With a low applied electric field, it will move without causing any changes to the system. However, under a strong electric field, like the one found in a device with a high voltage across its terminals, the mobile charge carrier will energetically strike bound charge carriers, which can then break free. These new charge carriers can in turn repeat the process, leading to an avalanche current.

When this avalanche current is moving toward the base of the parasitic BJT, the base current can trigger the device, allowing large current flow between the collector and emitter. The most interesting thing about this is that once the device triggers, the high electric field that started the process is no longer necessary, or even present, to sustain the current. Nevertheless, the conduction continues with increasing current, but at much lower voltages. This phenomenon of triggering at a relatively high voltage and then falling back to conduction at a lower voltage is called snapback. For an effective ESD protection device the trigger voltage should not be so high that it will cause damage to sensitive circuit devices. Also, the lower hold voltage should be above the normal circuit operating voltage so that the device will switch off once the ESD event ends.
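The snapback behavior described above can be sketched as a small piecewise-linear model. This is only an illustration under simplifying assumptions: the class name `SnapbackClamp`, the parameter names, the zero-leakage off state and the straight-line on-resistance are all hypothetical and are not taken from any particular tool or device model.

```python
from dataclasses import dataclass


@dataclass
class SnapbackClamp:
    """Piecewise-linear snapback clamp (illustrative assumption, not a real device model)."""
    vt1: float               # trigger voltage (V)
    vh: float                # holding voltage (V), lower than vt1
    ron: float               # on-resistance after snapback (ohm)
    triggered: bool = False  # the device remembers whether it has snapped back

    def current(self, v: float) -> float:
        """Return the clamp current at voltage v and update the triggered state."""
        if not self.triggered and v >= self.vt1:
            self.triggered = True    # avalanche current turns on the parasitic BJT
        elif self.triggered and v <= self.vh:
            self.triggered = False   # conduction stops at or below the holding voltage
        if not self.triggered:
            return 0.0               # leakage is ignored in this sketch
        return (v - self.vh) / self.ron


def design_window_ok(vt1: float, vh: float, v_operating: float, v_damage: float) -> bool:
    """Check the two conditions from the text: trigger below the damage voltage,
    holding voltage above the normal operating voltage."""
    return vt1 < v_damage and vh > v_operating
```

Because the model carries state, the same voltage can give two different currents depending on history: zero before the device has triggered, and a finite current afterwards, as long as the voltage stays above the holding voltage.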

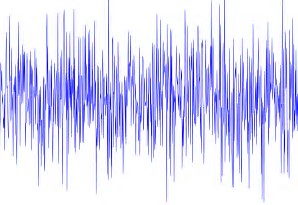

The I-V characterization curves for ESD devices are normally created by running carefully timed and controlled square pulses through the device. When this is done correctly, Joule heating will not cause the device to fail and a series of measurements can be taken. This process is called transmission line pulse (TLP) testing. It is possible to verify proper operation of the ESD device in the circuit with the I-V curves created from TLP measurements. However, normal SPICE simulation does not work well, because SPICE cannot model device turn-on through impact ionization and the subsequent voltage snapback. Negative differential resistance during snapback causes convergence problems in SPICE. If an ESD protection network is analyzed without proper modeling of snapback behavior, incorrect results may be obtained. In some cases, a chip failure or ESD violation could be overlooked, leading to tester or field failures after tapeout.
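As a rough illustration of how trigger and holding voltages might be read off a TLP curve, the sketch below scans a list of measured points for the first voltage drop. The function name, the data layout and the choice of "lowest voltage after snapback" as the holding voltage are assumptions made for this example; real extraction procedures are usually more careful about noise and averaging windows.

```python
def extract_snapback_points(tlp_points):
    """Estimate (vt1, vh) from TLP data.

    tlp_points: list of (voltage, current) pairs, ordered by increasing pulse amplitude.
    Returns None when the curve never folds back (no snapback observed).
    """
    for i in range(1, len(tlp_points)):
        v_prev = tlp_points[i - 1][0]
        v_now = tlp_points[i][0]
        if v_now < v_prev:
            # The voltage drops while the pulse amplitude rises: the device snapped back.
            vt1 = v_prev
            # Assumption: take the lowest voltage seen after snapback as the holding voltage.
            vh = min(v for v, _ in tlp_points[i:])
            return vt1, vh
    return None


# Hypothetical measurement points, for illustration only.
example_curve = [(2.0, 0.0), (6.0, 0.0), (8.0, 0.001), (5.2, 0.2), (5.6, 0.4), (6.0, 0.6)]
print(extract_snapback_points(example_curve))
```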

Let’s examine one such case. A software tool that can properly analyze the full dynamics of an ESD event involving snapback devices must have two critical capabilities: a) proper handling of snapback behavior, and b) the ability to correctly simulate triggering of two or more parallel devices. Both are necessary. One tool designed to handle such cases is Magwel’s ESDi®, which can simulate an ESD event where there are snapback devices and competitive triggering between parallel devices. The case below shows how a serious violation can be overlooked if the analysis tool cannot properly model circuit behavior under ESD stress.

In Figure 2, the NMOS device “esd_cell2” is a snapback device. If the voltage across esd_cell2 does not reach its snapback trigger voltage during an ESD event on pad “IO”, all the current will pass through the already triggered PMOS esd_cell1. This will violate margins on PMOS esd_cell1 and cause an ESD-related failure. On the other hand, if esd_cell2 is not modeled with snapback and its holding voltage is used as the trigger voltage, it will appear to trigger, seemingly lowering the voltage and providing another current path.
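The difference between the two modeling choices can be illustrated with a deliberately simplified two-clamp calculation. Everything here is an assumption for the sake of the sketch: both clamps are treated as straight-line devices, interconnect resistance is ignored, and the device parameters are made-up numbers rather than values from the example circuit. Only the 1.33 A discharge current is borrowed from the case discussed in this article.

```python
def solve_parallel_clamps(i_esd, von1, r1, vt1, vh, r2):
    """Share a discharge current between two parallel clamps.

    cell1: plain clamp, conducts above von1 with resistance r1.
    cell2: snapback clamp, triggers at vt1, then conducts above vh with resistance r2.
    Returns (pad_voltage, i_cell1, i_cell2, cell2_triggered).
    """
    # Step 1: assume only cell1 conducts and see how high the voltage climbs.
    v_alone = von1 + i_esd * r1
    if v_alone < vt1:
        # cell2 never reaches its trigger voltage: cell1 carries everything.
        return v_alone, i_esd, 0.0, False
    # Step 2: cell2 has triggered and snapped back; both clamps share the current.
    v_shared = (i_esd + von1 / r1 + vh / r2) / (1.0 / r1 + 1.0 / r2)
    if v_shared <= von1:
        # The voltage collapsed below cell1's turn-on: cell2 carries everything.
        return vh + i_esd * r2, 0.0, i_esd, True
    return v_shared, (v_shared - von1) / r1, (v_shared - vh) / r2, True


# Hypothetical device parameters; only the 1.33 A current comes from the article.
params = dict(von1=7.0, r1=1.5, vh=5.0, r2=1.0)
with_snapback = solve_parallel_clamps(1.33, vt1=10.0, **params)  # true trigger voltage
no_snapback = solve_parallel_clamps(1.33, vt1=5.0, **params)     # holding voltage used as trigger
print(with_snapback)
print(no_snapback)
```

With these made-up numbers, the first call leaves cell2 off and puts the full current through cell1, while the second call reports cell2 conducting and cell1 unstressed. The second result looks safer only because the trigger condition was modeled incorrectly.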

First, here is the example of an ESD event on “IO” with no snapback modeling, where NMOS esd_cell2 appears to trigger:

However, we can see below what actually happens in the circuit with an HBM test on the pad “IO”. With the snapback device esd_cell2 below its trigger threshold, the pad voltage reaches 10.369V and only PMOS esd_cell1 is triggered. In this instance, the voltage across esd_cell1 is 9.027V and the total current of 1.33A flows through this single path. There is both an overcurrent and an overvoltage violation on PMOS esd_cell1, with margins of -14% and -6.6% respectively.
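For context on the size of the current, the human body model (HBM) is commonly represented as a 100 pF capacitor discharging through a 1.5 kΩ resistor. The sketch below uses an idealized RC discharge that ignores the finite rise time and any resistance in the device under test. The function names are hypothetical, and the test level is an assumption: the article does not state it, but a 2 kV precharge would give a peak close to the 1.33A quoted above.

```python
import math

HBM_R = 1500.0    # series resistance of the HBM network (ohm)
HBM_C = 100e-12   # HBM capacitance (F)


def hbm_peak_current(v_precharge: float) -> float:
    """Peak current of an ideal HBM discharge into a short circuit."""
    return v_precharge / HBM_R


def hbm_current(t: float, v_precharge: float) -> float:
    """Ideal exponential decay of the HBM current (rise time ignored)."""
    tau = HBM_R * HBM_C   # 150 ns time constant
    return hbm_peak_current(v_precharge) * math.exp(-t / tau)


# Assumed 2 kV test level; not stated in the article.
print(round(hbm_peak_current(2000.0), 2))
```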

Magwel’s ESDi® uses a built-in simulator designed to take snapback into consideration. It correctly identifies which devices will and won’t trigger based on Vt1 trigger voltage. It will also account for the correct Vh1 holding voltage of snapback devices. This is important because once triggered, higher current values are sustained only above the holding voltage. Simply modeling snapback devices as ordinary diodes can cause serious ESD violations to go unnoticed.

Magwel ESDi Fieldviewer showing current density violation during ESD event

Magwel ESDi® runs on layout data and does not require the design to be LVS clean. It comes with a field viewer that shows current densities in the metal routing of the discharge path, so that electromigration (EM) issues can be visually inspected after reviewing the interactive error report. HBM checks are performed with simulation on all pin-pair combinations, which is possible due to ESDi’s rapid simulation capability, though users can select a subset of pin pairs if focused analysis is needed.
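To give a feel for how the number of pin-pair checks grows, here is a small sketch that enumerates pin pairs with an optional subset filter. It is not ESDi's interface; the function name and the pin names other than "IO" are hypothetical.

```python
from itertools import combinations


def pin_pairs(pins, subset=None):
    """Return all unordered pin pairs, optionally restricted to a subset of pins."""
    selected = [p for p in pins if subset is None or p in subset]
    return list(combinations(selected, 2))


# Hypothetical pin list for illustration.
pins = ["IO", "VDD", "VSS", "IN"]
print(len(pin_pairs(pins)))                       # n * (n - 1) / 2 pairs for n pins
print(pin_pairs(pins, subset={"IO", "VSS"}))      # focused analysis on two pins
```

The pair count grows quadratically with the number of pins, which is why simulation speed matters when every combination is checked.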

With much tougher reliability requirements due to standards like ISO 26262, preventing ESD-related failures during testing, or even more importantly in the field, is becoming paramount. Using the right analysis tool can make the difference between catching and missing a potential failure. Given the special requirements of ESD simulation, it makes sense to apply a tool that is known for consistent and reliable results and that can handle snapback devices and parallel current paths. For more information on ESDi®, please look at the Magwel website.