In the old days, to learn about new semiconductor IP you would have to schedule a sales call, listen to the pitch, then decide whether the IP was promising or not. Today we have webinars, which offer a lot less drama than a sales call. You also get to ask your questions by typing from the comfort of your desk, hopefully wearing headphones so as not to disrupt your co-workers in the next cubicle. On April 25 at 10AM PDT I'll be attending a Moortec webinar about their IP for monitoring process and voltage variations, and I invite you to join the event online. After the webinar I'll write up a summary of the salient points, so if you cannot attend you can still catch the highlights without the time and effort.

Intro

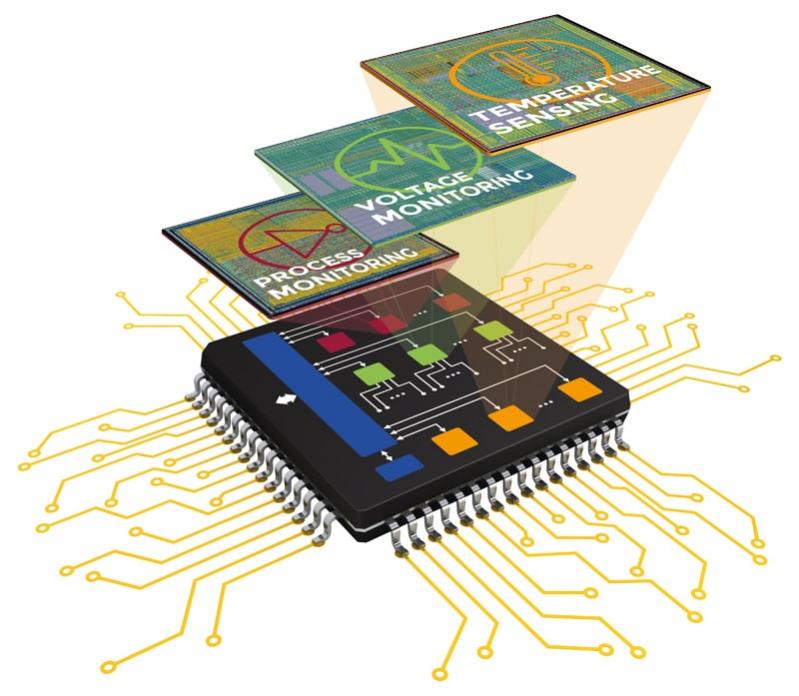

Historically, temperature has been the first thing engineers think about when it comes to monitoring in-chip conditions. However, as we move to more complex designs on advanced nodes, process and voltage become equally critical considerations. The associated challenges show up in several ways, including process variability, exposure to timing violations, excessive power consumption and the effects of aging. Each of these can cause ICs to fail to perform as expected.

Webinar Content

In this latest Moortec webinar we will look at how process and voltage monitoring combine to enhance the performance and reliability of a design, and how they can be used to implement various power management control systems.

This webinar is aimed at IC developers and engineers working on advanced node CMOS technologies, including 40nm, 28nm, 16nm, 12nm and 7nm. It will outline the two main pressures designers are grappling with today: i) the desire for lower supply voltages, which enable compelling power performance in products, especially consumer technologies; and ii) the risk that doing so jeopardizes the functional operation of SoCs, and with them an entire product range. The designer's dilemma is that, to maximize the former, today's optimization schemes are algorithmically treading an ever-thinner line between robust operation and devices failing in the field.

Moortec provides complete PVT Monitoring Subsystem IP solutions on 40nm, 28nm, FinFET and 7nm processes. As advanced-node design poses new challenges for the IC design community, Moortec helps its customers understand more about the dynamic and static conditions on chip in order to optimize device performance and increase reliability. As the only IP vendor dedicated to PVT monitoring, Moortec is now considered a focal point for such expertise.

After registering, you will receive a confirmation email containing information about joining the webinar.

Webinar Registration

It’s easy to register online for the session on Wednesday, April 25 at 10AM PDT (US, Europe, Israel).

About Moortec Semiconductor

Established in 2005, Moortec provides compelling embedded sub-system IP solutions for Process, Voltage & Temperature (PVT) monitoring, targeting advanced node CMOS technologies from 40nm down to 7nm. Moortec’s in-chip sensing solutions support the semiconductor design community’s demands for increased device reliability and enhanced performance optimization, enabling schemes such as dynamic voltage and frequency scaling (DVFS), adaptive voltage scaling (AVS) and power management control systems. Moortec also provides excellent support for IP application, integration and device test during production.
