Have you ever wondered how process variation, thermal self-heating and Vdd levels affect the timing and yield of your SoC design? If your clock specification calls for 3GHz while your silicon is only yielding at 2.4GHz, then you have a big problem on your hands. Such are the concerns of many modern-day chip designers. To learn more about this topic I attended the 45-minute webinar from Moortec, titled “The Importance of Monitoring Process & Voltage in Advanced Node SoCs”. Ramsay Allan provided the introduction and overview, then Stephen Crosher, CEO, presented about 20 slides on the challenges for IC design from 40nm down to 7nm, along with the company's semiconductor IP used for in-chip PVT monitoring.

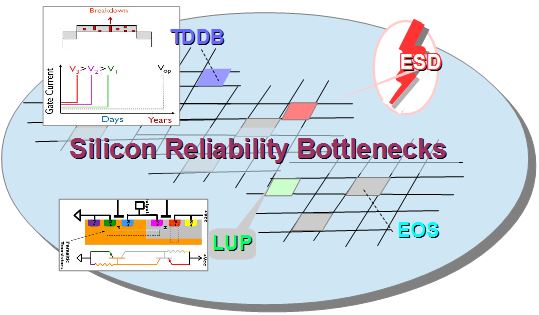

Here’s a summary of physical effects that adversely affect chip timing and reliability:

- Thermal hot-spots

- Device and process variability

- Increased resistance of interconnect from 40nm to 7nm

- Power Delivery Network

- Lower Vdd trends from 40nm to 7nm, lower design margins

- Ageing that changes Vt

- Self-heating accelerates BTI and HCI

- Delays increasing from interconnect resistance

- Delays increasing from Vdd variations

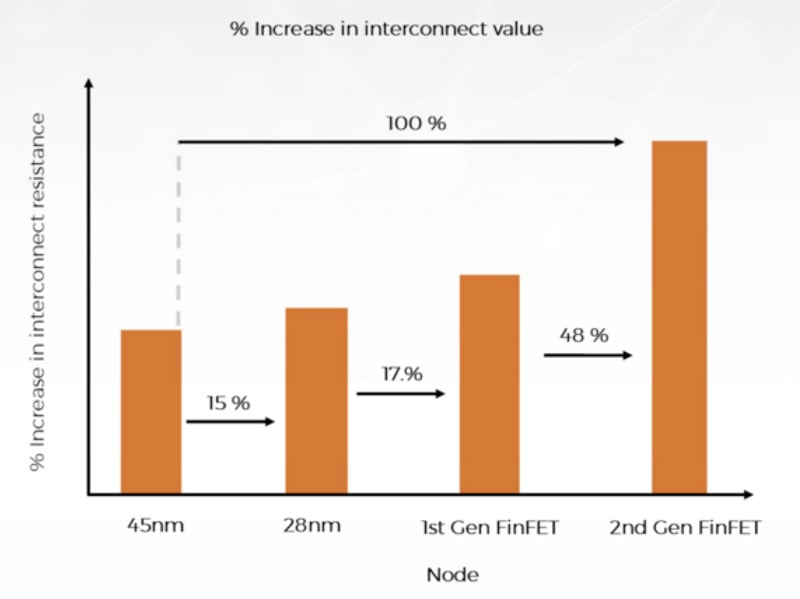

The webinar was chock-full of diagrams and charts pointing out all of the effects that keep you up at night, for example the increase in interconnect resistance as you progress from 40nm to 7nm nodes.

Total delay within a chip is the combination of gate delays and wire delays, and as we move to smaller process nodes the percentage of total delay caused by interconnect is now approaching 50%.

Process variation now shows its ugly side when you can see different process corners on the same chip, making it especially challenging to do timing analysis and reach timing closure.

To mitigate these issues clever IC designers have come up with several approaches that use in-chip monitors:

- Voltage scaling optimization per chip by finding the lowest center functional voltages to meet frequency

- Adaptive Voltage Scaling (AVS) as a closed-loop system using on-chip monitors

- Self-adaptive tuning

- Embedded chip monitoring to minimize power consumption at the enterprise datacenter level

- Using AVS to do speed binning of parts
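To make the closed-loop idea behind AVS concrete, here is a minimal Python sketch. It is not Moortec's implementation or API: the monitor model, the names (`AvsConfig`, `simulated_monitor_mhz`, `avs_settle`) and every number in it are assumptions chosen for illustration. The loop reads a stand-in for an on-chip monitor, raises Vdd when the estimated maximum frequency falls below the target plus a guard band, lowers Vdd when there is excess margin, and stops once the reading sits inside a small hysteresis band.

```python
from dataclasses import dataclass


@dataclass
class AvsConfig:
    # All values are hypothetical and only serve the illustration.
    target_mhz: float = 3000.0  # frequency the design must meet
    guard_mhz: float = 50.0     # safety margin kept above the target
    band_mhz: float = 50.0      # hysteresis band that stops the loop hunting
    v_min_mv: int = 600         # lowest voltage the regulator may supply
    v_max_mv: int = 1000        # highest voltage the regulator may supply
    step_mv: int = 5            # regulator step size


def simulated_monitor_mhz(vdd_mv: int, speed_factor: float) -> float:
    """Stand-in for an on-chip monitor reading (toy linear model).

    speed_factor models the process corner of this particular die:
    greater than 1.0 is fast silicon, less than 1.0 is slow silicon.
    """
    return speed_factor * 5.0 * (vdd_mv - 300)


def avs_settle(read_fmax_mhz, cfg: AvsConfig, start_mv: int, max_iters: int = 500):
    """Step Vdd until the monitor reading sits inside the allowed band.

    Returns (vdd_mv, met), where met is False if the target cannot be
    reached within the regulator range or the iteration limit.
    """
    vdd_mv = start_mv
    floor_mhz = cfg.target_mhz + cfg.guard_mhz
    for _ in range(max_iters):
        fmax = read_fmax_mhz(vdd_mv)
        if fmax < floor_mhz:
            # Too slow: raise the supply, or give up at the ceiling.
            if vdd_mv >= cfg.v_max_mv:
                return vdd_mv, False
            vdd_mv = min(cfg.v_max_mv, vdd_mv + cfg.step_mv)
        elif fmax > floor_mhz + cfg.band_mhz and vdd_mv > cfg.v_min_mv:
            # Excess margin: lower the supply to save power.
            vdd_mv = max(cfg.v_min_mv, vdd_mv - cfg.step_mv)
        else:
            # Inside the band (or pinned at the minimum voltage): settled.
            return vdd_mv, True
    return vdd_mv, False


cfg = AvsConfig()
for name, factor in [("slow", 0.9), ("typical", 1.0), ("fast", 1.1)]:
    vdd, met = avs_settle(lambda v, f=factor: simulated_monitor_mhz(v, f), cfg, 1000)
    print(f"{name}: settled at {vdd} mV, target met: {met}")
```

In this toy model the fast die settles at a lower voltage than the slow one, which is the per-chip power saving that AVS is after. The settled voltage could also serve as a crude input to speed binning, though a real flow would use calibrated monitor data rather than a linear model.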

The British chaps from Moortec founded the company back in 2005 and are experts at applying in-chip monitoring to a wide range of commercial ICs. Their semiconductor IP combines hard blocks and soft blocks to create a subsystem for in-chip monitoring.

Placing multiple PVT sensors on a single chip makes sense, but how many of them should you add, and exactly where should they be placed for optimum impact? Good questions, and the answers are really application specific, so rely upon the technical support that comes along with their IP.

For me the most powerful bit of information was saved for near the end when they unveiled a list of customers using their in-chip monitoring.

I plan to visit Moortec at DAC in San Francisco; however, they’re also holding multiple events across the globe to support their unique IP.

Summary

Planning to meet critical timing by including in-chip PVT monitors as part of a subsystem makes more and more sense as you reach the 40nm process node and move to ever smaller geometries. Yes, you could cobble together something proprietary in-house if you have lots of spare engineering resources and the time to design, verify, fabricate and test a one-off system. My hunch is that your product schedule and budget would be better served by looking at something off the shelf from Moortec, because in-chip monitoring is their sole focus and the proof is in their ever-expanding list of adopters. They’ve set up distributors in all of the high-tech centers around the world, and that would be a good starting point to learn more about their technology, approach and benefits.
