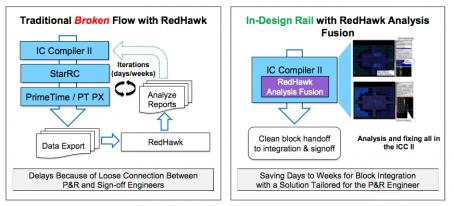

There is a nice serendipity in discovering that two companies I cover are working together. Good for them, naturally, but it also makes my job easier because I already have a good idea of the benefits of the partnership. Synopsys and ANSYS announced a collaboration at DAC 2017 for accelerating design optimization for HPC, mobile and automotive. In February 2018 they pulled the covers back a little, announcing a product launch integrating IC Compiler II (ICC II) with RedHawk Analysis Fusion (see my earlier blog on Fusion). Now they’re pulling the covers back further, getting into more of the detail on what this Fusion integration provides.

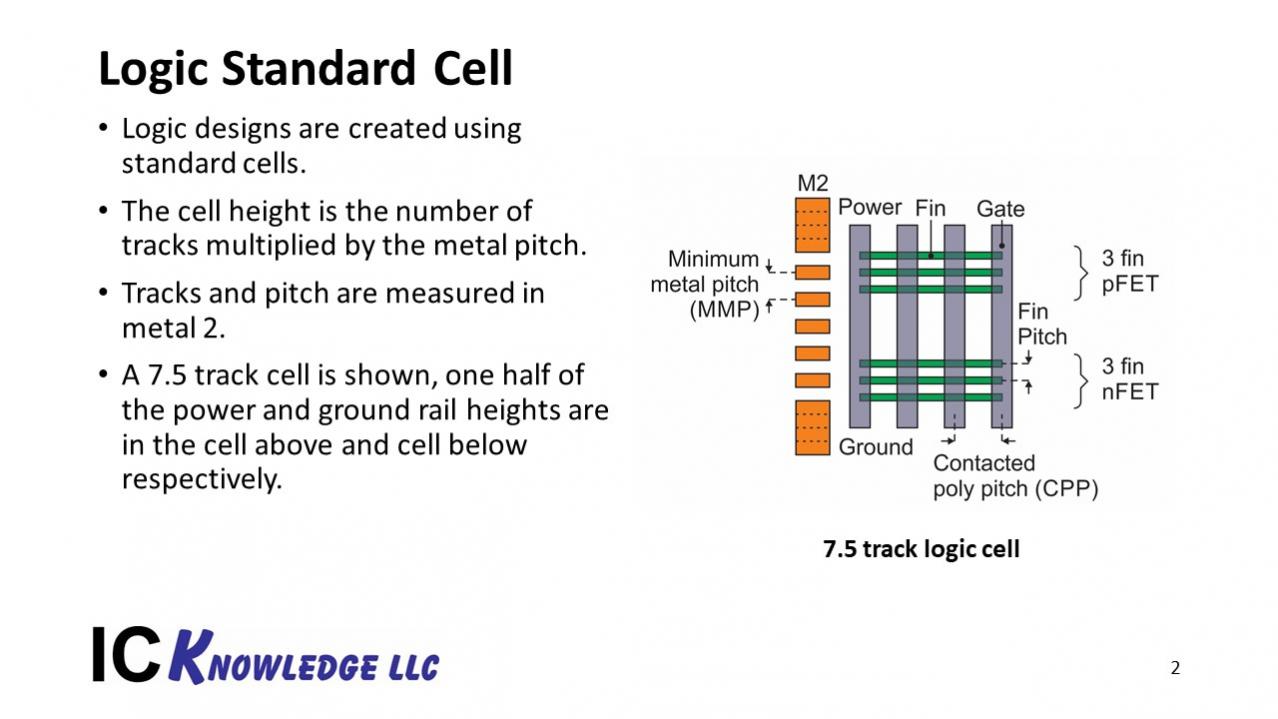

Why is this important? Because it’s becoming more difficult to continue handling integrity and reliability as a (pre-) signoff step. The conventional approach is to agree on global margins for IR-drop, current surge and other power factors, to guide timing closure, EM (electromigration) security and so on. Then you design the power distribution network (PDN) to meet those objectives and sign off, most likely using RedHawk. That worked well for quite a while, but in advanced processes lower operating voltages reduce the margin above threshold, increasing relative sensitivity to power noise. Meanwhile, increased dependence on power switching through reduced-width power rails and vias increases the risk of EM. Simply cranking up the margins even higher to compensate becomes an untenable solution.
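
To put rough numbers on the shrinking-margin point, here is a minimal sketch. The voltages are hypothetical round figures chosen for illustration, not data from Synopsys or ANSYS, and `noise_fraction_of_headroom` is a made-up helper name. It expresses a fixed IR-drop as a fraction of the headroom between supply and threshold voltage.

```python
def noise_fraction_of_headroom(vdd: float, vth: float, drop: float) -> float:
    """Return an IR-drop as a fraction of the headroom (vdd - vth).

    All values are in volts. This is a back-of-envelope measure for
    illustration, not a signoff metric.
    """
    headroom = vdd - vth
    if headroom <= 0:
        raise ValueError("supply voltage must exceed threshold voltage")
    return drop / headroom


# Hypothetical values: the same 50 mV drop at two supply voltages,
# with the threshold voltage held at 0.3 V in both cases.
older = noise_fraction_of_headroom(vdd=1.0, vth=0.3, drop=0.05)
newer = noise_fraction_of_headroom(vdd=0.7, vth=0.3, drop=0.05)

print(f"1.0 V supply: drop is {older:.1%} of headroom")
print(f"0.7 V supply: drop is {newer:.1%} of headroom")
```

Under these assumed numbers the same absolute drop consumes a noticeably larger share of the available headroom at the lower supply, which is why a margin that was comfortable in an older process gets tight in a newer one.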

If you can’t globally margin, what can you do? Obviously you don’t really have to increase rail widths and add strapping everywhere; you can be more selective because (hopefully) not every part of the design will be subject to the worst stresses. RedHawk supports this kind of differential analysis across the design, but taking action on that guidance will clearly affect implementation. In older flows, you could do the RedHawk analysis outside of ICC and back-annotate to the implementation database, but that’s obviously cumbersome. More importantly, tuning IR-drop across the design against other implementation objectives becomes a painful manual task or one dependent on complex in-house scripting. A better approach is to move this analysis into the implementation flow, where it can be used to guide local optimizations.
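
The idea of selective rather than global reinforcement can be sketched in a few lines. This is a toy model only: the region names, currents, resistances and the 50 mV budget are all hypothetical, and the simple V = I × R estimate stands in for the far more detailed analysis RedHawk actually performs. It is not the ICC II or RedHawk API.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    current_a: float     # hypothetical peak current drawn by the region (A)
    rail_res_ohm: float  # hypothetical effective PDN resistance to the region (ohm)


def ir_drop_v(region: Region) -> float:
    """Estimate the region's IR-drop in volts with Ohm's law (V = I * R)."""
    return region.current_a * region.rail_res_ohm


def regions_needing_straps(regions: list[Region], budget_v: float) -> list[str]:
    """Return names of regions whose estimated drop exceeds the budget."""
    return [r.name for r in regions if ir_drop_v(r) > budget_v]


# Hypothetical floorplan: only one region is badly stressed.
regions = [
    Region("cpu_core", current_a=2.0, rail_res_ohm=0.040),
    Region("l2_cache", current_a=0.8, rail_res_ohm=0.050),
    Region("io_ring", current_a=0.3, rail_res_ohm=0.060),
]

hot = regions_needing_straps(regions, budget_v=0.050)
print(hot)
```

In this made-up example only `cpu_core` exceeds the budget, so only that region would get extra straps; a global margin sized for `cpu_core` would spend metal on the other two regions for no benefit.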

Kenneth Chang (Product Marketing Manager at Synopsys) tells me that this is more than simply displaying RedHawk results inside ICC II (though they do that too, through various heat maps). Synopsys provides newly developed features to take action on RedHawk feedback, adding straps where needed. In fact, they have reduced the task of doing this analysis to one command, analyze_rail, within IC Compiler II, so a designer simply runs this command to both analyze and optimize for power integrity.

Synopsys generally does a good job of validating customer needs before they build a solution, so I was interested to hear from their perspective what drove this integration. Kenneth told me the biggest problem for many of their customers was having to over-margin (sounds familiar). Being the good engineers that they are, customers have built their own solutions with scripting and loose tool integration, but they acknowledge these are not ideal and leave a lot of opportunities for optimization untouched, particularly since they also have to worry about DRC-correctness and timing when scripting their fixes.

Hence the attractiveness of integrating RedHawk into IC Compiler II, complemented by automated fixes. RedHawk is dominant in power integrity/reliability analysis and clearly continuing to innovate. Integrating into physical design enables in-design analysis and optimization through these new functions which, being native, are designed to be DRC-aware and timing-aware.

Nice, but is this really essential? Kenneth told me of one design example he had seen recently where rail analysis at the end of design showed an unfixable problem (no reasonable late-stage ECO possible), which could have been fixed if it had been addressed earlier. That design had to be abandoned. If you’ve read any of my blogs on RedHawk, you know these challenges are increasing. More generally, when you consider that 30%+ of metal may be devoted to power in advanced geometry designs, this kind of solution is likely to become essential in delivering competitive products.

The integration makes sense: it simplifies the design task, it reduces the need to over-margin and it ensures correlation between in-design IR-drop optimization and final signoff. Kenneth mentioned that Synopsys also continues to work on other opportunities to leverage this integration, including more possibilities for IR-drop-driven optimizations. He tells me we should also stay tuned for updates on work they are doing together around thermal and EM analysis. You can learn more HERE, and you should check this out at the Synopsys booth at DAC, where they may have further updates.