At DAC 2018, Synopsys held a lunch panel on the verification challenges faced by industry leaders, the approaches they have adopted and overall verification technology trends. The panel of industry experts from Intel, AMD, Samsung, STM and Qualcomm also shared their viewpoints on what drives SoC complexity and how their teams have tackled it successfully.

From the Synopsys Verification Group, Michael Sanie (VP of Marketing) and Chris Tice (VP of Verification Continuum Solutions) kicked off the session by highlighting the current state of the Synopsys verification landscape.

As an established verification leader with TTM emulation growth of 40%+, Synopsys showcased ZeBu® Server 4 on the DAC exhibit floor. It offers a 2x emulation performance gain, 5x lower power consumption and a lower cost of ownership with half the datacenter footprint. Other features include scalable capacity to 19B+ gates, 12x faster waveform data transfer vs ZeBu Server-3, an architecture designed for simulation acceleration with 16x higher host bandwidth vs a dual PCIe Gen3 interface, faster compile, hybrid emulation and advanced debug. Chris Tice dubbed it his ‘15th generation emulation system’.

HAPS-80 (High-Performance ASIC Prototyping System), the Synopsys prototyping system, has had 1700+ successful deployments. The newly introduced HAPS-80 Desktop comes with built-in software and increased debug throughput.

The session also highlighted the maturity of FGP (Fine Grain Parallelism), which was originally announced as Cheetah Technology (2016), then became part of the standard VCS release and is now available to every VCS user as VCS-FGP (2018). FGP eliminates manual work by dividing the design into groups of events and exploiting many-core processor architectures to parallelize these clustered tasks.
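The idea of grouping events and evaluating the groups in parallel can be pictured with a toy sketch. This is only an illustration, not how VCS-FGP is implemented: the function names, the round-robin grouping and the AND-gate "events" are all assumptions made for the example, and Python threads stand in for the many-core hardware a real simulator would use.

```python
from concurrent.futures import ThreadPoolExecutor


def partition(events, n_groups):
    """Split a list of independent events into n_groups round-robin clusters."""
    return [events[i::n_groups] for i in range(n_groups)]


def evaluate_group(group):
    """Evaluate every (name, a, b) event in the group as a toy 2-input AND gate."""
    return {name: a & b for name, a, b in group}


def evaluate_parallel(events, n_workers=4):
    """Cluster the events, evaluate each cluster on a worker, merge the results."""
    groups = partition(events, n_workers)
    results = {}
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        for partial in pool.map(evaluate_group, groups):
            results.update(partial)
    return results


# Hypothetical workload: eight independent gate evaluations in one time step.
events = [(f"g{i}", i % 2, (i // 2) % 2) for i in range(8)]
outputs = evaluate_parallel(events)
```

The sketch only works because the events are independent of one another. A production simulator also has to respect dependencies between events and balance the load across cores, which is the manual partitioning effort the panel described FGP as removing.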

Also highlighted were the investment made in Verdi debug format support (interactive, text and waveform-based debug) and VC Formal, which experienced the fastest software growth in the Synopsys verification group. In addition, SpyGlass has 300+ customers using static verification and adds new features such as RDC along with improved performance. VC Lint and VC CDC were introduced as the next-generation static technology, combining the advanced word-level data model from VC Formal with rules and engines from SpyGlass and offering integration compatibility with Design Compiler and PrimeTime.

Accelerating Digital IP Verification Methodology (STMicroelectronics)

According to Mirella Negro, MCD Verification Group Manager, being a leading supplier for Smart Driving and the Internet of Things through its 32-bit general-purpose microcontrollers demands digital IP robustness while meeting very aggressive market-introduction schedules and complex MCU verification needs. STM deployed VC Formal last year to augment its coverage-driven dynamic verification and to avoid the simulation iterations caused by RTL refreshes, which occur between bug hunting and final coverage analysis.

Using Synopsys' comprehensive formal apps such as Formal Property Verification (FPV), Formal Coverage Analyzer (FCA) and Automatic Extraction of Properties (AEP), STM was able to achieve faster property convergence in many scenarios. VC Formal’s broad portfolio of formal assertion IPs also uncovered a significant number of pre-silicon corner-case bugs, enabling STM to deliver more designs in less time without compromising quality.

The native integration of VC Formal with VCS and the Verdi debug engine allows design and verification teams to easily leverage formal technologies and automate root-cause analysis of formal results, such as code unreachability issues that affect final coverage. Although some complex STM IPs still need simulation, a growing number of IPs are validated using formal only.

Acceleration of Pre-Silicon Emulation (Qualcomm)

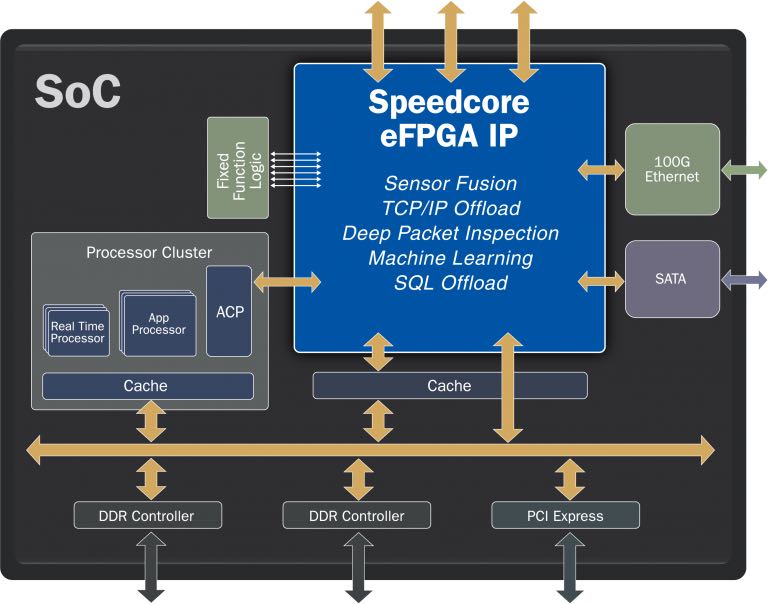

Senthil Dayanithi, Sr. Engineering Director at Qualcomm, concurred on the continuing shift-left trend in SoC H/W development, which can be achieved by migrating to system emulation and high-level S/W development with real peripherals.

Pre-Silicon Emulation Efficiency Improvement (Intel)

Raju Kothandaraman, Graphics H/W Director at Intel, described increasing visual experiences as a driver of complexity in both design sizes and workloads. He believes that while emulation spending is growing, hardware must be used efficiently to keep up with verification complexity. The prerequisite is to understand the key metrics, which include the following:

- Compile time – Reduce bottlenecks during the compile step through the use of emulation-friendly RTL, best-known methods and the selection of model types. Together, these could deliver a 4-5x improvement.

- Model frequency – Use emulation-friendly transactors, and evaluate and fix any unusually slow frequency caused by long timing paths (which may be attributed to timing loops). Collaborate with the EDA vendor. A 2-3x improvement and a higher model frequency can be gained here.

- Wall clock efficiency, Utilization and Debug TAT – Improve regression run time, identify inefficient DPI calls, and provide more offline debug capacity and a local memory solution on the emulator. Also key to pre-silicon validation are effective board packing, an enhanced debug methodology and model types, and identification of the right content to run. All of these could bring a 3-4x performance improvement.

His final take on gaining emulation efficiency includes a shift to a more efficiency-driven mindset, knowing when to empower design versus automation, internal cross-team partnership and external EDA collaboration.

Driving Performance and Power Tuning Pre-Silicon (AMD)

Andrew Ross, Principal MTS at AMD, stated that the basic premise of the two metrics (performance, power) is to complete more work in less time and with less energy. The ZeBu emulation system has been at the center of this solution, handling the full SoC RTL (1B+ gates) including the GPU, with clock domain ratios modeled for silicon accuracy and performance-tuned register initialization and fusing applied. As part of the virtualized execution environment, the VirtualBox hypervisor interfaced through PCIe transactors to the ZeBu box. The application running visualization (GPU) workloads reported the number of cycles required for execution. AMD also leveraged ZeBu zDPI for passive activity monitoring and fast waveform capture.

Performance analysis can be done using the full S/W and H/W stack, as in silicon bring-up, allowing high-fidelity observability of combined system behavior. In summary, a system-level emulation solution enables power and performance analysis with real-world workloads, supports more data-driven analysis and augments simulation-based approaches.

Addressing Unique Verification Challenges in an Era of Constant Change (Samsung)

According to Seonil Brian Choi, Master Principal Engineer at Samsung, increased design complexity has led to less verification and development time. Moreover, multiple specification changes trigger redesign and subsequent verification efforts, which may push verification completion closer to the deadline and leave no time for S/W verification.

Seonil shared how the methodology for verification and S/W development has evolved from a simulation-centric approach to more system-level virtual prototyping. This is a shift from little modeling to more modeling, which also enables early S/W development.

The takeaway from this panel session is that leading SoCs require advanced technologies, including simulation, formal verification, fast emulation, hybrid emulation, prototyping and debug, to complete the challenging task of verifying them.

To watch a video of the DAC Verification Panel, visit HERE.