From my restaurant seat today in Lake Oswego, Oregon, I watched an SUV driver back out and nearly collide with a parked car, and I wanted to wave my arms or shout to warn them. Near misses like this are a daily occurrence for those of us who drive or watch other drivers on the road, so the promise of Advanced Driver Assistance Systems (ADAS) is especially relevant to keeping us alive and injury free. I did some online research to better understand what’s happening with the networks used in automotive applications.

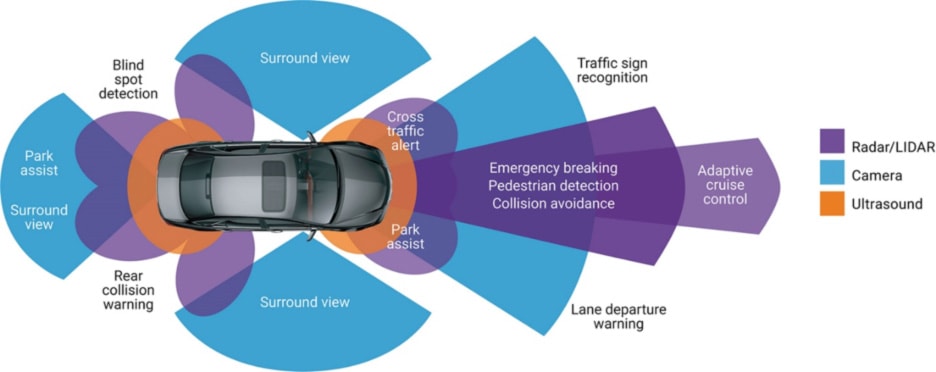

Automotive networks are tasked with moving massive amounts of data so that it can be processed and used to make decisions. Just think about the data that these safety features and sensors require:

- Emergency braking

- Collision avoidance with other vehicles

- Pedestrian and cyclist avoidance

- Lane departure warning

- HD cameras

- Radars

- LIDARs

- Fully autonomous driving

Our electronics industry is often driven by standards committees, and for networking in ADAS applications we thankfully have the Time Sensitive Networking (TSN) IEEE working group that has thought through all of this and come up with standards and specifications. So let’s take a closer look at how the Ethernet TSN standards can be used in automotive scenarios, then ultimately why you would use automotive-certified Ethernet IP in your SoC design.

Going back to 2005, there was an Ethernet standard for Audio Video Bridging (AVB), used for things like automotive infotainment and in-vehicle networking. These applications aren’t all that time critical, and their data volume is low in comparison to higher-demand tasks like braking control, so in 2012 the IEEE revamped things by transforming the AVB working group into TSN. With TSN we now have a handful of very specific standards to deal with ADAS requirements:

| TSN Standard | Specification Description |
| --- | --- |
| IEEE 802.1Qbv-2015 | Time-aware shaper |
| IEEE 802.1Qbu-2016, IEEE 802.3br-2016 | Preemption |
| IEEE 802.1Qch-2017 | Cyclic queuing and forwarding |
| IEEE 802.1Qci-2017 | Per-stream filtering and policing |
| IEEE 802.1CB-2017 | Frame replication and elimination |
| IEEE 802.1AS-REV | Enhanced generic precise timing protocol |

Time-Aware Shaper

An engineered network provides a predictable, guaranteed latency. It does this with a time-aware shaper, which schedules transmissions so that critical traffic gets priority. As an example, consider four queues of data: the IEEE 802.1Qbv scheduler controls which queue goes first (shown in orange), and so on.
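To make the scheduling idea concrete, here is a minimal sketch of a gate control list for four queues. The cycle length, the window sizes, and all names (`GateEntry`, `GATE_CONTROL_LIST`, `open_queues_at`, `select_queue`) are assumptions made for illustration, not values or identifiers from the standard, and details such as guard bands are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateEntry:
    duration_us: int          # how long this entry stays in effect
    open_queues: frozenset    # queue numbers whose gates are open


# Hypothetical 1000 us cycle: queue 3 gets an exclusive 200 us window,
# then queues 0-2 share the remaining 800 us.
GATE_CONTROL_LIST = (
    GateEntry(200, frozenset({3})),
    GateEntry(800, frozenset({0, 1, 2})),
)


def open_queues_at(time_us, gcl=GATE_CONTROL_LIST):
    """Return the set of queues whose gates are open at time_us."""
    offset = time_us % sum(entry.duration_us for entry in gcl)
    for entry in gcl:
        if offset < entry.duration_us:
            return entry.open_queues
        offset -= entry.duration_us
    raise AssertionError("offset always falls inside the cycle")


def select_queue(time_us, pending):
    """Pick the highest-numbered open queue that has frames waiting.

    pending maps queue number -> count of frames waiting.
    Returns None when no open queue has traffic.
    """
    ready = [q for q in open_queues_at(time_us) if pending.get(q, 0) > 0]
    return max(ready) if ready else None
```

With this list, `select_queue(50, {2: 5, 3: 1})` picks queue 3 during its protected window, while the same call at 500 µs picks queue 2 because the gate for queue 3 is closed. The schedule repeats every cycle, which is what lets the critical queue count on a known transmit window.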

Preemption

In the next example, Queue 2 in orange starts transmitting its frame first, but then a higher-priority frame arrives in Queue 3 (green) and preempts it, so in the lower timing diagram the green frame travels ahead of the rest of the orange frame. The time-critical green frame has preempted the orange frame, which gives it a predictable latency.
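A rough way to see the benefit is to compare when a time-critical frame can start transmitting with and without preemption. The sketch below is a simplified model under stated assumptions: a 100 Mb/s link, a 1500-byte low-priority frame (120 µs on the wire), and a fixed 64-byte fragment granularity (5.12 µs). The function name and the fixed granularity are illustrative only; the real fragmentation rules are more detailed.

```python
def express_start_ns(arrival_ns, low_start_ns, low_len_ns,
                     preemption, fragment_ns=5_120):
    """Return when an express frame arriving at arrival_ns can start.

    A low-priority frame occupies the link from low_start_ns for
    low_len_ns. Without preemption the express frame waits for the
    whole frame; with preemption it waits only until the next
    (assumed) fragment boundary.
    """
    low_end_ns = low_start_ns + low_len_ns
    if not (low_start_ns <= arrival_ns < low_end_ns):
        return arrival_ns  # link is idle, send immediately
    if not preemption:
        return low_end_ns
    elapsed_ns = arrival_ns - low_start_ns
    fragments_sent = -(-elapsed_ns // fragment_ns)  # ceiling division
    return min(low_start_ns + fragments_sent * fragment_ns, low_end_ns)


# Express frame arrives 10 us into a 120 us low-priority frame.
without = express_start_ns(10_000, 0, 120_000, preemption=False)
with_preemption = express_start_ns(10_000, 0, 120_000, preemption=True)
print(without - 10_000, with_preemption - 10_000)  # wait time in ns
```

In this model the wait drops from 110 µs to a fraction of a microsecond, and more importantly the worst case no longer depends on how long the interrupted frame is.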

Cyclic Queuing and Forwarding

To make network latencies across bridges more consistent regardless of the network topology, there’s a technique called Cyclic Queuing and Forwarding. The complete specification is on the IEEE 802 site. Shown below in dark blue is a stream with the shortest cycle packet, while in light blue is a stream in the presence of multiple packets.
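The consistency comes from every bridge holding a frame for one cycle and forwarding it in the next, so delay depends only on hop count and cycle time. A commonly quoted bound for a path of $h$ hops with cycle time $T$ is $(h-1)\,T \le \text{latency} \le (h+1)\,T$. The helper below simply evaluates that bound; the function name and the example numbers are assumptions for illustration, and how hops are counted should be checked against the specification.

```python
def cqf_latency_bounds_us(hops, cycle_us):
    """Return (min, max) end-to-end latency for cyclic queuing and forwarding.

    Uses the commonly quoted bound of (hops - 1) and (hops + 1) cycles.
    """
    if hops < 1 or cycle_us <= 0:
        raise ValueError("hops must be >= 1 and cycle_us must be positive")
    return (hops - 1) * cycle_us, (hops + 1) * cycle_us


# Hypothetical path: 3 hops with a 125 us cycle.
print(cqf_latency_bounds_us(3, 125))
```

Note that nothing in the calculation depends on how much other traffic shares the bridges, which is the point of the technique.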

Frame Replication and Elimination

How do you recover from cyclic redundancy check (CRC) errors, opens in wires, and flaky connections? With frame replication and elimination. In the following example a time-critical data frame is sent along two separate paths, orange and green; where the paths join up, any duplicate frames are removed from the stream. Note that applications can still receive frames out of order.

In the IEEE specification there are three ways to implement frame replication and elimination:

- Talker replicates, listener removes duplicates

- Bridge replicates, listener removes duplicates

- Bridge replicates, bridge removes duplicates
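Whichever node does the elimination, the core idea is the same: each frame carries a sequence number, and the first copy to arrive wins. Here is a minimal sketch of that idea, assuming a small history window of recently seen sequence numbers. The class and function names are hypothetical, and this is not one of the recovery algorithms defined in the standard.

```python
from collections import deque


class DuplicateEliminator:
    """Drop frames whose sequence number was seen recently."""

    def __init__(self, history=16):
        # Oldest entries fall off automatically once the window is full.
        self._recent = deque(maxlen=history)

    def accept(self, seq):
        """Return True for the first copy of seq, False for a duplicate."""
        if seq in self._recent:
            return False
        self._recent.append(seq)
        return True


def merge_paths(arrivals):
    """Filter (seq, path) tuples in arrival order, keeping first copies."""
    eliminator = DuplicateEliminator()
    return [(seq, path) for seq, path in arrivals if eliminator.accept(seq)]


# Frame 2 is lost on the orange path and arrives late on the green path.
arrivals = [(1, "orange"), (1, "green"), (3, "orange"),
            (2, "green"), (3, "green")]
delivered = merge_paths(arrivals)
print(delivered)
```

In this run every frame is delivered exactly once even though the orange path dropped frame 2, but the delivered order is 1, 3, 2, so the receiver has to tolerate reordering.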

Enhanced Generic Precise Timing Protocol

Knowing what time it really is across a network is fundamental, so clocks have to be synchronized. This protocol, which the standard calls the generalized Precision Time Protocol (gPTP), lets you use either a single grand master or multiple grand masters, as shown in the next two figures:

Single grand master, sending two copies

Two grand masters, each sending two copies
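For a feel of the arithmetic behind clock synchronization, the sketch below shows the basic peer-delay calculation used by this family of protocols: four timestamps from a request/response exchange give the link delay, which is then used to correct the time received from the grand master. It ignores rate-ratio correction and residence time, and the function names and timestamp values are assumptions for illustration.

```python
def mean_link_delay_ns(t1, t2, t3, t4):
    """Estimate one-way link delay from a delay request/response exchange.

    t1: requester sends the request   (requester clock)
    t2: responder receives it         (responder clock)
    t3: responder sends the response  (responder clock)
    t4: requester receives it         (requester clock)
    The responder's turnaround time (t3 - t2) is subtracted out, so the
    two clocks do not need to agree for the delay estimate to work.
    """
    return ((t4 - t1) - (t3 - t2)) / 2


def offset_from_master_ns(master_tx_ns, local_rx_ns, link_delay_ns):
    """Return how far the local clock is ahead of the grand master."""
    return local_rx_ns - (master_tx_ns + link_delay_ns)


# Hypothetical timestamps in nanoseconds.
delay = mean_link_delay_ns(1_000, 5_000_500, 5_001_500, 3_000)
offset = offset_from_master_ns(10_000, 10_800, delay)
print(delay, offset)
```

Here the round trip takes 2000 ns, of which 1000 ns is responder turnaround, leaving a 500 ns one-way delay and a local clock that is 300 ns ahead of the grand master.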

Each of these TSN specifications has grown over time to meet the rigors of automotive design and to support real-time networking of ADAS features.

Summary

Ethernet in automobiles has come a long way over the past decade, and now we have TSN to enable the ADAS features of modern SoCs, inching towards autonomous vehicles. With Ethernet in the car we get:

- Wide range of data rates

- Reliable operation

- Interoperability between vendors

- TSN to standardize how data travels with predictable latency

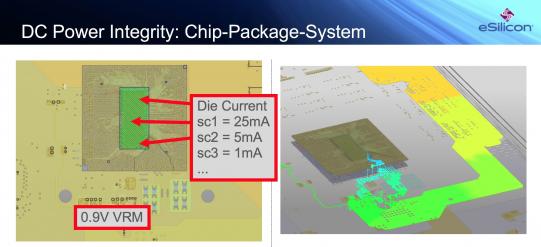

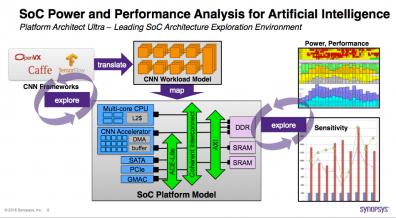

SoCs for automotive also need to meet the ISO 26262 functional safety standard and the AEC-Q100 reliability standard. In the make-versus-buy decision for networking chips, you can consider IP from Synopsys, like their DesignWare Ethernet Quality-of-Service (QoS) IP, which is certified ASIL B Ready for ISO 26262.

John Swanson from Synopsys has written a detailed Technical Bulletin on this topic.