Last month I attended the 2018 U.S. Executive Forum, where Wally Rhines was one of the keynote speakers. I was also lucky enough to have lunch with Wally afterwards and talk about his presentation in more detail, and he sent me his slides, which are attached to the end of this blog.

The nice thing about Wally’s presentations is that they are not company-specific, while a lot of keynotes are company pitches in disguise. The other thing is that his slides are very detailed and tell a story, so reading them really is the next best thing to being there.

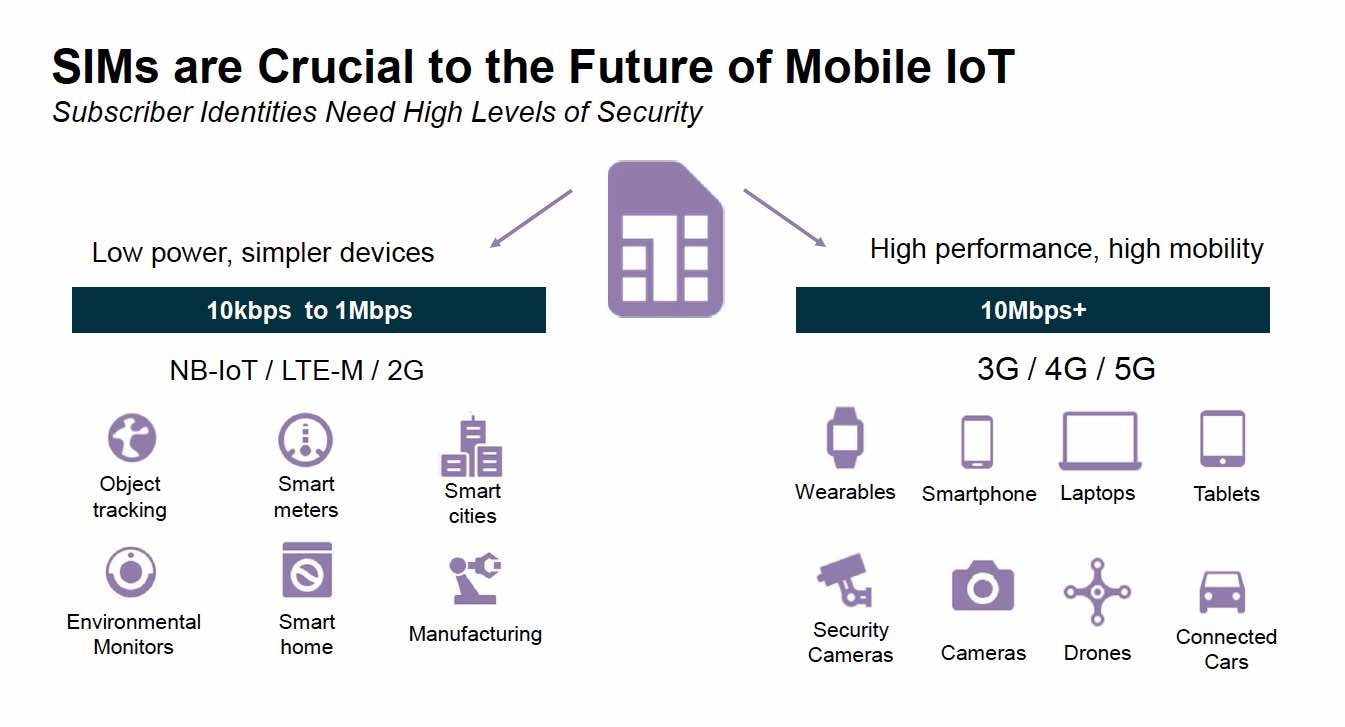

When I first started in Silicon Valley in the 1980s, we all designed and manufactured our own CPUs, which I consider domain-specific architectures. Intel then came around with a more general architecture and the personal computing revolution began. Fabless semiconductor companies then restarted domain-specific computing with GPUs and SoCs that are now replacing Intel chips at an alarming pace. System companies (Apple) then took the lead with custom SoCs, and now even software companies (Google) are making their own domain-specific chips (TPU). There are also IoT and automotive domain-specific chips flooding the market.

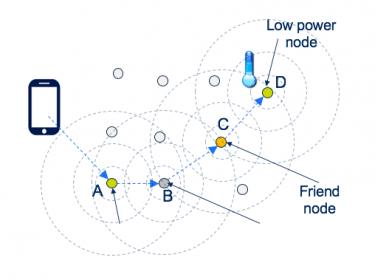

We have a front-row seat to this transformation on SemiWiki because we see the domains that read our site. The first IoT blogs started in 2014, and we now have over 400 that have been read close to 2 million times. Automotive also started for us in 2014, and we now have more than 300 blogs that have been read more than 1 million times. AI started for us in 2016, and we now have close to 100 blogs that have been read more than 250 thousand times. IoT wins, but AI has just begun.

Wally has some interesting slides on AI, Automotive, VC Funds, and the China semiconductor initiative. Definitely worth a look. Here is his summary slide for those who are short of time:

Here are the other keynotes. I have access to the slides and will blog about them when I have time, but since Wally sent me his slides, he goes first. I will end this blog with the perilous thoughts I had on this subject during my long and dark drive home.

Opening Keynote: Looking To The Future While Learning From The Past

Presentation by Daniel Niles / Founding Partner / AlphaOne Capital Partners

Keynote: Convergence of AI Driven Disruption: How multiple digital disruptions are changing the face of business decisions

Presentation by Anthony Scriffignano / Senior Vice President & Chief Data Scientist / Dun & Bradstreet

AI and the Domain Specific Architecture Revolution

Presentation by Wally Rhines / President and CEO / Mentor, a Siemens Business

AI Led Security

Presentation by Steven L. Grobman / Senior Vice President and CTO / McAfee

AI is the New Normal – 3 key trends for the path forward

Presentation by Kushagra Vaid / General Manager & Distinguished Engineer – Azure Infrastructure / Microsoft

Innovating for Artificial Intelligence in Semiconductors and Systems

Presentation by Derek Meyer / CEO / Wave Computing

The Evolution of AI in the Network Edge

Presentation by Remi El-Ouazzane / Vice President and COO, Artificial Intelligence Products Group / Intel

GSA Expert Panel Discussion

Moderated by Aart de Geus / Chairman and Co-CEO / Synopsys

Keynote: Long Term Implications of AI & ML

Presentation by Byron Reese / CEO, Gigaom / Technology Futurist / Author

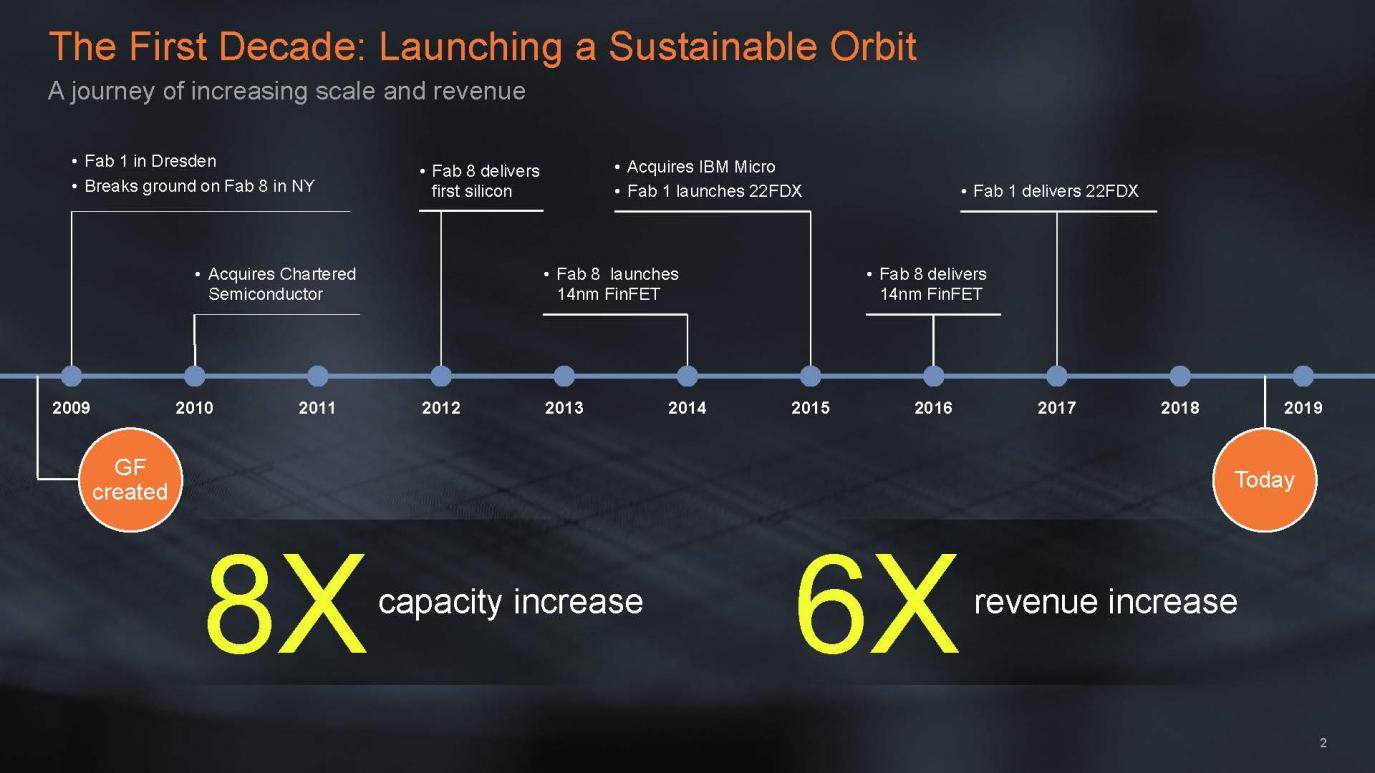

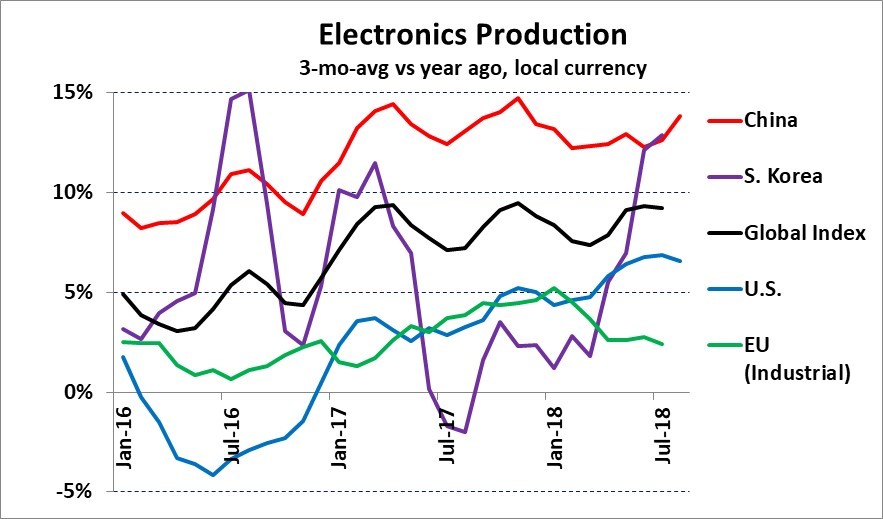

The semiconductor industry (EDA included) has posted some very nice gains in the past two years, but how long can that continue? Take a look at this graph and you will see a pattern that will no doubt repeat itself. The question is: how low will we go?

One thing I can tell you is that EDA is definitely in a bubble. Look at the VC money and all of the fabless startups that are buying tools, especially the ones in China. At some point the money will run out, and only a fraction of these companies will continue to expand and buy more tools. Someone else can run the numbers, but my bet is that the EDA bubble will pop in 2019, absolutely.