During my Taiwan visit, prior to Las Vegas, I was fortunate to spend time with Willy Chen and Vivian Jiang to prepare for the cloud panel I moderated at #56thDAC. Willy and Vivian are part of TSMC's ever-important Design Infrastructure Marketing Division, which includes the internal and external cloud efforts. TSMC first announced its external cloud offering last year ("TSMC announces initial availability of Design-in-the-Cloud via OIP VDE and OIP Ecosystem Partners"). Since then it has made follow-up announcements with all of the key vendors and participated in multiple cloud panels last week in Las Vegas. Make no mistake, TSMC is a semiconductor cloud pioneer, absolutely.

There are, however, a couple of things I would like to point out as an objective semiconductor cloud insider. I first heard of TSMC seriously considering the cloud more than 10 years ago. Back then, the big hurdle was customer security, and having been through TSMC's security protocol for EDA and IP vendors many times, I can tell you TSMC is all about security. But TSMC is also all about enabling customers of all types and getting high-quality wafers to the masses, and today that means cloud.

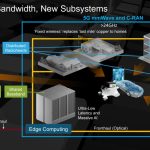

Another interesting point in the semiconductor cloud transformation is that systems companies, rather than traditional fabless chip companies, are now driving the leading-edge foundry business. Some of these systems companies are actually cloud-based companies (Google, Microsoft, Amazon, and Facebook), so security is not a concern there. In fact, cloud security is above and beyond anything we have ever seen in the semiconductor industry, and TSMC knows this from direct experience with its cloud customers.

As more systems companies use the cloud for chip design, the fabless companies have no choice but to follow. The cloud company chip designers are the extreme case: they can do simulations and verification in hours instead of days or weeks. Imagine being able to run a SPICE simulation or a characterization run in an hour instead of overnight!

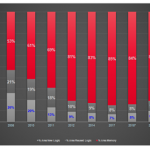

As I have mentioned before, investment in fabless chip companies more than tripled in 2017 and doubled again in 2018. Similar to the fabless transformation, where semiconductor companies no longer had to build fabs, today's fabless companies don't have to buy computers and tools. They just go to the cloud, where TSMC and the EDA vendors are already waiting for them, absolutely.

One of the more interesting cloud events at #56thDAC was the Mentor Calibre Luncheon (FREE FOOD). SemiWiki blogger Tom Simon sat in front of me and will cover it in more detail, so spoiler alert: Willy Chen was on the panel, and he talked about TSMC cutting the DRC runtime of an N5 test chip from 24 hours to 4 hours using the Azure cloud. AMD was also on the panel and talked about doing the same thing with its N7 products, scaling to 4,000 CPU cores using Microsoft Azure VMs (which run on AMD EPYC-based servers).

Admittedly, the AMD presentation was a little self-serving, but my takeaway was that AMD partnering with TSMC and pivoting to the cloud for chip design before its much bigger competitors do is a VERY big deal.