At TechCon I had a 1:1 with Steve Roddy, VP of product marketing in the Machine Learning (ML) Group at Arm. I wanted to learn more about their ML direction since I had previously felt that, amid a sea of special-purpose ML architectures from everyone else, they were somewhat fudging their position in this space. What I had heard earlier was that the vast majority of ML functions are still being run on standard smartphones. Since there are (or were?) vastly more of these than any other devices, that meant Arm-based platforms already dominated ML usage. Which is true, but that’s not really a direct contribution to ML (same platform, different software) and it’s not where ML is headed.

In fairness I wasn’t considering Mali. GPUs are already prominent in ML (clearly evidenced by NVIDIA offerings). The high levels of parallelism on GPUs enable much faster neural net (NN) processing than on a traditional CPU. Still, the key metric for a lot of ML hardware, TOPS/W (tera-operations per second per watt), is pushing designs toward more specialized accelerators built specifically for NN algorithms.

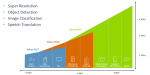

Arm didn’t have an entry in this field until they introduced their Ethos family, heralded earlier this year by the Ethos-N77 for premium applications. At TechCon they also announced Ethos-N57 for balanced performance and power and Ethos-N37 for performance in the smallest area. They see the N77 having applications in computational photography, top-end smartphones and AR/VR. N57 is for smart home hubs and midrange smartphones. The N37 is for DTVs (and I would imagine home appliances), entry-level phones and security cameras.

The architecture shows that this isn’t a bunch of MACs bolted onto a Cortex engine or even a respin of Mali. Arm details this as 4 primary functions around a bunch of SRAM (varying amounts depending on the core you use). The first function is a MAC engine supporting weight decompression, with built-in support to reduce multiplications in convolution by more than a factor of 2 (using the Winograd algorithm, in case you wanted to know). The second is a programmable layer engine. I couldn’t find a lot of detail on this but I think I get the concept. Networks are evolving fast, so hard-coded layers and layer types are not good; you need to be able to adapt the network in software without losing the performance advantages of the hardware. So you need configurability in convolution layers, pooling layers, activation functions, etc.
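To make the Winograd point concrete, here is a minimal sketch of the textbook 1D case, usually written F(2,3): two outputs of a 3-tap filter computed with 4 multiplications instead of 6. This is the generic algorithm, not Arm's implementation; the function names are my own, and I am not claiming anything about the tile sizes or data types Ethos actually uses.

```python
def direct_conv_f23(d, g):
    """Two outputs of a 3-tap filter over 4 inputs: 6 multiplications."""
    return [
        d[0] * g[0] + d[1] * g[1] + d[2] * g[2],
        d[1] * g[0] + d[2] * g[1] + d[3] * g[2],
    ]


def winograd_f23(d, g):
    """Same two outputs using 4 data-dependent multiplications (Winograd F(2,3))."""
    # Filter-side terms depend only on the weights, so in practice they are
    # computed once per filter and reused across every input tile.
    u1 = (g[0] + g[1] + g[2]) / 2
    u2 = (g[0] - g[1] + g[2]) / 2

    # The four multiplications that involve input data.
    m1 = (d[0] - d[2]) * g[0]
    m2 = (d[1] + d[2]) * u1
    m3 = (d[2] - d[1]) * u2
    m4 = (d[1] - d[3]) * g[2]

    # Recombine with additions and subtractions only.
    return [m1 + m2 + m3, m2 - m3 - m4]


if __name__ == "__main__":
    d = [1.0, 2.0, 3.0, 4.0]   # illustrative input tile
    g = [1.0, 2.0, 3.0]        # illustrative filter taps
    print(direct_conv_f23(d, g))  # [14.0, 20.0]
    print(winograd_f23(d, g))     # [14.0, 20.0]
```

Nesting the same trick in two dimensions gives a 2×2 output tile from a 3×3 filter in 16 multiplications rather than 36, a 2.25× reduction, which is at least consistent with the "more than a factor of 2" figure. The price is extra additions and some numerical care at low precision.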

The third function is a network control unit to manage traffic and control of all the other functions, and the fourth is a DMA. In any other system this might be a ho-hum kind of block, but in machine learning it is central to performance and power efficiency. Vast amounts of data flow around these systems, images and weights in particular. AI accelerators live or die by how many of their memory accesses they can keep on-chip rather than going off-chip. The DMA controller, together with compression and other techniques, ensures that 90% of accesses can be kept local.
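A back-of-the-envelope sketch shows why that local fraction matters so much. The relative costs below (1 unit for an on-chip access, 100 units for an off-chip one) are hypothetical placeholders I picked for illustration, not Arm figures, and the function name is my own.

```python
def average_access_cost(local_fraction, local_cost=1.0, offchip_cost=100.0):
    """Weighted average cost per memory access.

    local_cost and offchip_cost are HYPOTHETICAL relative units, chosen only
    to illustrate the trend; substitute measured values for a real design.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_cost + (1.0 - local_fraction) * offchip_cost


if __name__ == "__main__":
    for fraction in (0.0, 0.5, 0.9, 0.99):
        cost = average_access_cost(fraction)
        print(f"{fraction:.0%} local -> {cost:.1f} units per access")
```

Under these assumed costs, keeping 90% of accesses local cuts the average cost per access by roughly a factor of nine, and the remaining 10% of off-chip accesses still account for most of what is left. That is why compression and careful DMA scheduling keep paying off.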

Unsurprisingly, Arm offers extensive software ecosystem support and libraries. They also support a concept that is becoming increasingly popular in this domain: the ability to start from one of the mainstream NN frameworks and target a broad range of platforms (Cortex, DynamIQ, Neoverse, Mali, Ethos and even 3rd-party platforms such as DSPs, FPGAs and accelerators), with suitable optimizations to take best advantage of the target platform.

I still buy that a lot of ML applications will continue to run on traditional Cortex platforms, but now I really believe Arm has an end-to-end IP story including real NN cores. You can learn more about the Ethos solutions HERE.