Charlie Janac is president and CEO of Arteris IP, where he is responsible for growing and establishing a strong global presence for the company that is pioneering the concept of NoC technology. Charlie’s career spans over 20 years and multiple industries including electronic design automation, semiconductor capital equipment,…

Tag: noc

Design in the Time of COVID

There’s a lot of debate about how and when we are going to emerge from the worldwide economic downturn triggered by the pandemic. Everyone agrees we will emerge. This isn’t humanity’s first pandemic, nor will it be our last. But do we come out quickly or slowly? And what does the economy look like on the other side, particularly for …

Atos Crafts NoC, Pad Ring, More Using Defacto

I’ve talked before about how Defacto provides a platform for scripted RTL assembly. It is a kind of rethink of the IP-XACT concept, but without the need to get into XML (it works directly with SV), and with a more relaxed approach in which you decide what you want to automate and how you want to script it.

They’re hosting a webinar on May 28th 10-11am…

AI, Safety and Low Power, Compounding Complexity

The nexus of complexity in SoC design these days has to be in automotive ADAS devices. Arteris IP highlighted this at the Linley Processor Conference recently, where they talked about an ADAS chip that Toshiba had built. The chip has multiple vision and AI accelerators, both DSP and DNN-based. It is clearly aiming for ISO 26262 ASIL D …

Webinar – FPGA Native Block Floating Point for Optimizing AI/ML Workloads

Block floating point (BFP) has been around for a while but is just now starting to be seen as a very useful technique for performing machine learning operations. It’s worth pointing out up front that bfloat is not the same thing. BFP combines the efficiency of fixed-point operations with the dynamic range of full floating…
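As a rough illustration of the idea in the teaser above, here is a minimal Python sketch of block floating point: each block of values shares one exponent, and the individual values are stored as small signed integer mantissas, so the inner multiply-accumulate runs on integers. The function names (`bfp_quantize`, `bfp_dequantize`, `bfp_dot`) and the 8-bit mantissa default are assumptions made for this example; they are not taken from the webinar or from any FPGA vendor's implementation.

```python
import math


def bfp_quantize(block, mantissa_bits=8):
    """Quantize a block of floats to (shared_exponent, integer mantissas).

    Hypothetical helper for illustration; the mantissa width is an assumption.
    """
    max_abs = max(abs(x) for x in block)
    if max_abs == 0.0:
        return 0, [0] * len(block)
    # frexp returns max_abs = m * 2**e with 0.5 <= m < 1, so every |x| < 2**e.
    _, e = math.frexp(max_abs)
    # Choose the shared exponent so the largest value fits a signed mantissa.
    shared_exp = e - (mantissa_bits - 1)
    limit = 2 ** (mantissa_bits - 1) - 1
    scale = 2.0 ** shared_exp
    # Round to the nearest integer mantissa and clamp to the signed range.
    mantissas = [max(-limit, min(limit, round(x / scale))) for x in block]
    return shared_exp, mantissas


def bfp_dequantize(shared_exp, mantissas):
    """Reconstruct approximate floats from a shared exponent and mantissas."""
    return [m * 2.0 ** shared_exp for m in mantissas]


def bfp_dot(a, b, mantissa_bits=8):
    """Dot product of two blocks: integer multiply-accumulate, one final scale."""
    exp_a, mant_a = bfp_quantize(a, mantissa_bits)
    exp_b, mant_b = bfp_quantize(b, mantissa_bits)
    acc = sum(x * y for x, y in zip(mant_a, mant_b))  # integer arithmetic only
    return acc * 2.0 ** (exp_a + exp_b)


if __name__ == "__main__":
    block = [1.0, 0.5, -0.25, 0.125]
    exp, mants = bfp_quantize(block)
    print(exp, mants)
    print(bfp_dequantize(exp, mants))
    print(bfp_dot(block, [2.0, 2.0, 2.0, 2.0]))
```

The exponent is stored once per block rather than once per value, which is where the fixed-point-like efficiency comes from. The trade-off is that small values sharing a block with a large one lose precision. This differs from bfloat formats, where every value carries its own exponent.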

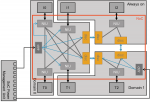

Free webinar – Accelerating data processing with FPGA fabrics and NoCs

FPGAs have always been a great way to add performance to a system. They are capable of parallel processing and have the added bonus of reprogrammability. Achronix has helped boost their utility by offering on-chip embedded FPGA fabric for integration into SoCs. This has had the effect of boosting data rates through these systems…

How Should I Cache Thee? Let Me Count the Ways

Caching intent largely hasn’t changed since we started using the concept: to reduce average latency in memory accesses and to reduce average power consumption in off-chip reads and writes. The architecture started out simple enough: a small memory close to a processor, holding most-recently accessed instructions and data …
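To make the "reduce average latency" goal concrete, the following is a small Python sketch of a cache that keeps the most recently accessed addresses and evicts the least recently used one. The class name `LRUCache`, the helper `average_latency`, and the cycle counts in the usage lines are hypothetical values chosen for illustration; real cache architectures, including those the post goes on to discuss, are considerably more involved.

```python
from collections import OrderedDict


class LRUCache:
    """Toy cache model: holds up to `capacity` addresses, evicts least recently used."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()  # insertion order tracks recency of use
        self.hits = 0
        self.misses = 0

    def access(self, address):
        """Record one memory access; return True on a hit, False on a miss."""
        if address in self.lines:
            self.lines.move_to_end(address)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self.lines[address] = True
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used entry
        return False


def average_latency(cache, hit_cycles, miss_cycles):
    """Average cycles per access, given assumed hit and miss costs."""
    total = cache.hits + cache.misses
    return (cache.hits * hit_cycles + cache.misses * miss_cycles) / total


if __name__ == "__main__":
    cache = LRUCache(capacity=2)
    for addr in [1, 2, 1, 3, 2]:
        cache.access(addr)
    # Assumed costs: 1 cycle for a hit, 100 cycles for an off-chip miss.
    print(cache.hits, cache.misses, average_latency(cache, 1, 100))
```

The average falls as the hit count rises, which is the whole case for putting a small, fast memory close to the processor.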

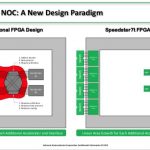

An evolution in FPGAs

Why does it seem like current FPGA devices work very much like the original telephone systems with exchanges where workers connected calls using cords and plugs? Achronix thinks it is now time to jettison Switch Blocks and adopt a new approach. Their motivation is to improve the suitability of FPGAs to machine learning applications,…

ML and Memories: A Complex Relationship

No, I’m not going to talk about in-memory-compute architectures. There’s interesting work being done there, but here I’m going to talk about mainstream architectures for memory support in Machine Learning (ML) designs. These are still based on conventional memory components/IP such as cache, register files, SRAM and various…

Qualcomm Intel Facebook and Semiconductor IP

What do Qualcomm, Intel, and Facebook have in common? Well, for one thing they all bought network-on-chip (NoC) communications IP companies. As I have mentioned before, semiconductor IP is the foundation of the fabless semiconductor ecosystem and I believe this trend of acquisitions will continue. So, if you are going to start…