The use of machine learning (ML) to solve complex problems that could not previously be addressed by traditional computing is expanding at an accelerating rate. Even with advances in neural network design, ML’s efficiency and accuracy are highly dependent on the training process. The methods used for training evolved from CPU…

Tag: machine learning

Autonomous Driving Still Terra Incognita

I already posted on one automotive panel at this year’s Arm TechCon. A second I attended was a more open-ended discussion on where we’re really at in autonomous driving. Most of you probably agree we’ve passed the peak of the hype curve and are now into the long slog of trying to connect hope to reality. There are a lot of challenges, …

Characteristics of an Efficient Inference Processor

The market opportunities for machine learning hardware are becoming more clearly defined, with the following (rather broad) categories emerging:

- Model training: models are evaluated at the “hyperscale” data center, utilizing either general-purpose processors or specialized hardware, with typical numeric precision of 32-bit

New Generation of FPGA Based Distributed Accelerator Cards Offer High Performance and Adaptability

We have learned from nature that two characteristics are helpful for success: diversity and adaptability. The same has been shown to be true for computing systems. Things have come a long way from when CPU-centric computing was the only choice. Much heavy lifting these days is done by GPUs, ASICs, and FPGAs, with CPUs in a support …

Formal in the Field: Users are Getting More Sophisticated

Building on an old chestnut, if sufficiently advanced technology looks like magic, there are a number of technology users who are increasingly looking like magicians. Of course, when it comes to formal, neither is magical, just very clever. The technology continues to advance and so do the users in their application of those methods.…

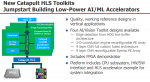

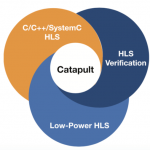

AI Hardware Summit, Report #2: Lowering Power at the Edge with HLS

I previously wrote a blog about a session from Day 1 of the AI Hardware Summit at the Computer History Museum in Mountain View, CA, held just last week. From Day 2, I want to delve into this presentation by Bryan Bowyer, Director of Engineering, Digital Design & Implementation Solutions Division at Mentor, a Siemens Business.…

Mentor Highlights HLS Customer Use in Automotive Applications

I’ve talked before about Mentor’s work in high-level synthesis (HLS) and machine learning (ML). An important advantage of HLS in these applications is its ability to very quickly adapt and optimize architecture and verify an implementation to an objective in a highly dynamic domain. Design for automotive applications – for …

An evolution in FPGAs

Why does it seem like current FPGA devices work very much like the original telephone systems with exchanges where workers connected calls using cords and plugs? Achronix thinks it is now time to jettison Switch Blocks and adopt a new approach. Their motivation is to improve the suitability of FPGAs to machine learning applications,…

Mentor Extends AI Footprint

Mentor are stepping up their game in AI/ML. They already had a well-established start through the Solido acquisition in Variation Designer and the ML Characterization Suite, and through Tessent Yield Insight. They have also made progress in prior releases towards supporting design for ML accelerators using Catapult HLS. Now…

Real Time Object Recognition for Automotive Applications

The basic principles used for neural networks have been understood for decades; what has changed to make them so successful in recent years is the increase in processing power, storage, and training data. Layered on top of this is continued improvement in algorithms, often enabled by dramatic hardware performance improvements.…