Earlier this month, during my conversation with Dr. Walden C. Rhines, he emphasised the need for our next-generation designers to think at the system level and design everything with the system’s view in mind. Verification will go through a major transformation at the system level. I can see the FPGA prototyping systems already… Read More

Tag: fpga

Verification with Tcl for what? – part 2

At Orion Bytes we use Tcl for internal research, products, and various verification services. We also use SystemVerilog UVM and Python-based Cocotb for different approaches. I think there is no need to go deep into the SystemVerilog and UVM principles here – today’s main verification fashion is well described through… Read More

Verification with Tcl for what?

Verification, one of the most complex stages of the SoC, FPGA, and ASIC development flow, constantly requires new approaches. The following is an introduction to Tcl vs./with SystemVerilog and VHDL, the first in a three-part series. Part 2 will be “Tcl vs Python, Bluespec” and part 3 will be “VerTcl description”.… Read More

S2C ships UltraScale empowering SoFPGA

Most of the discussion around Xilinx UltraScale parts in FPGA-based prototyping modules has been on capacity, and that is certainly a key part of the story. Another use case is developing, one that may be even more important than simply packing a bigger design into a single part without partitioning. The real win with this technology… Read More

Nine Cost Considerations to Keep IP Relevant – Part 2

In the first part of this article I wrote about four types of costs which must be considered when an IP goes through design differentiation, customization, characterization, and selection and evaluation for acquisition. In this part of the article, I will discuss the other five types of costs which must be considered to enhance… Read More

Xilinx Skips 10nm

At TSMC’s OIP Symposium recently, Xilinx announced that they would not be building products at the 10nm node. I say “announced” since I was hearing it for the first time, but maybe I just missed it before. Xilinx would go straight from the 16FF+ arrays that they have announced but not started shipping, to the… Read More

A Brief History of FPGA Prototyping

Verifying chip designs has always suffered from a two-pronged problem. The first problem is that actually building silicon is too expensive and too slow to use as a verification tool (when it happens, it is not a good thing and is called a “re-spin”). The second problem is that simulation is, and has always been, too slow.

When Xilinx… Read More

Secret Sauce of SmartDV and its CEO’s Vision

SmartDV started as a small setup in Bangalore in 2008 and is now one of the most respected VIP (Verification IP) companies in the world. With a growing portfolio of 83 VIPs in its kitty, it has a large customer base, including the top semiconductor companies around the world. The company has grown significantly and is raring… Read More

NIWeek: Xilinx Inside

Being from Britain, I always read NI as Northern Ireland when I see it. After all, the official name of my country is the United Kingdom of Great Britain and Northern Ireland, which gives us the same problem as the United States of America: the full name is a mouthful. So we abbreviate the country to UK and call ourselves British or even Brits.… Read More

More FPGA-based prototype myths quashed

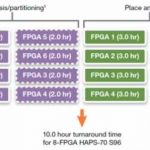

Speaking of having the right tools, FPGA-based prototyping has become as much about the synthesis software as it is about the FPGA hardware, if not more. This is a follow-up to my post earlier this month on FPGA-based prototyping, but with a different perspective from another vendor. Instead of thinking about what else can be done… Read More