Flex Logix is a unique company. It is one of the few that supplies both FPGA and embedded FPGA technology based on a proprietary programmable interconnect that uses half the transistors and half the metal layers of traditional FPGA interconnect. Their architecture provides some rather significant advantages. I wrote about their…

Tag: ai inference

Flex Logix Brings AI to the Masses with InferX X1

In April, I covered a new AI inference chip from Flex Logix. Called InferX X1, the part had some very promising performance metrics. Rather than targeting the data center, the chip focused on accelerating AI inference at the edge, where power and form factor are key metrics for success. The initial information on the chip was presented at …

Efficiency – Flex Logix’s Update on InferX™ X1 Edge Inference Co-Processor

Last week I attended the Linley Fall Processor Conference held in Santa Clara, CA. This is the first of three blog posts I will be writing based on what I saw and heard at the event.

In April, Flex Logix announced its InferX X1 edge inference co-processor. At that time, Flex Logix announced that the IP would be available and that a chip,…

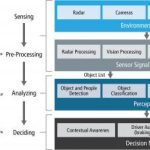

AI Inference at the Edge – Architecture and Design

In the old days, product architects would throw a functional block diagram “over the wall” to the design team, who would plan the physical implementation, analyze the timing of estimated critical paths, and forecast the signal switching activity on representative benchmarks. A common reply back to the architects was, “We’ve…

Highly Modular, AI Specialized, DNA 100 IP Core Target IoT to ADAS

The Cadence Tensilica DNA100 DSP IP core is not a one-size-fits-all device. Instead, it is highly modular in order to support AI processing at the edge, delivering from 0.5 TMAC for on-device IoT up to tens or 100 TMACs to support autonomous vehicles (ADAS). If you remember the first talks about IoT and Cloud, a couple of years ago, the IoT …