Why burst EDA workloads to the cloud

Time-to-market challenges are nothing new to those of us who have worked in the semiconductor industry. Each process node brings new opportunities along with increasingly complex design challenges. The 7nm, 5nm and 3nm process nodes have introduced scale, growth, and data challenges at a previously unheard-of level, particularly for back-end design processes.

Design teams are looking to hyperscale cloud providers like AWS, Azure and Google Cloud for the on-demand scale and elasticity required to meet time-to-market challenges. An ever-increasing number of semiconductor companies have either evaluated the cloud or are regularly using it for burst capacity. Runtime analytics and Spot pricing have lowered the cost barriers of the cloud by letting each job run on the lowest-cost server that meets its requirements, for only as long as it needs. This has nearly removed cost as an obstacle to wider cloud use, particularly during burst or peak periods of the design process.

The increased use of AI- and GPU-enabled EDA tools is driving the need to include more and more GPU-enabled servers in the flow. The cloud lets design teams quickly spin up the right mix of server types to match each workload's feature requirements and cost.
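Matching a job to the right server can be sketched as a filter-then-minimize over a catalog of instance types. This is a minimal illustration, not any cloud provider's API: the instance names, sizes and hourly prices below are hypothetical placeholders, and a real scheduler would also weigh Spot availability and license constraints.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    mem_gib: int
    gpus: int
    usd_per_hour: float  # hypothetical price, not a real quote


@dataclass(frozen=True)
class JobRequest:
    vcpus: int
    mem_gib: int
    gpus: int = 0


def cheapest_fit(job: JobRequest, catalog: list[InstanceType]) -> Optional[InstanceType]:
    """Return the lowest-cost instance type that satisfies the job's cores, memory and GPUs."""
    fits = [
        it for it in catalog
        if it.vcpus >= job.vcpus and it.mem_gib >= job.mem_gib and it.gpus >= job.gpus
    ]
    # None when no instance type in the catalog is large enough.
    return min(fits, key=lambda it: it.usd_per_hour, default=None)


# Hypothetical catalog: names, sizes and prices are placeholders.
CATALOG = [
    InstanceType("small-cpu", 4, 16, 0, 0.20),
    InstanceType("large-mem", 64, 1024, 0, 6.50),
    InstanceType("mid-cpu", 16, 64, 0, 0.80),
    InstanceType("gpu-node", 32, 256, 4, 12.00),
]
```

A small IP-level job lands on `small-cpu`, a full-chip job needing 48 cores and 512 GiB lands on `large-mem`, and anything requesting a GPU is routed to `gpu-node`.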

Bursting to the cloud might seem like the obvious solution, but the sheer size and gravity of design data make moving it to and from the cloud, and therefore bursting beyond the traditional on-prem data center, challenging.

Example workloads to burst to the cloud

Front-end verification jobs are often the first jobs companies attempt to burst to the cloud. The ever-increasing number of simulation, lint, CDC, DFT and power analysis runs at the block, subsystem and full-chip level can add up to 20k-50k jobs in a single nightly run. The more jobs that can run in parallel, the sooner the run finishes, and the sooner issues can be detected and resolved.
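A back-of-the-envelope estimate shows why parallelism matters for a nightly run. This sketch assumes every job takes the same time and that each grid slot runs one job at a time, ignoring queueing and license limits; the job count, runtime and slot counts used below are illustrative.

```python
import math


def nightly_wall_clock_minutes(jobs: int, minutes_per_job: float, slots: int) -> float:
    """Rough wall-clock time for a regression of equal-length jobs on `slots` parallel slots."""
    if slots <= 0:
        raise ValueError("slots must be positive")
    # Number of back-to-back "waves" the grid must run to get through every job.
    waves = math.ceil(jobs / slots)
    return waves * minutes_per_job
```

For example, 30,000 ten-minute jobs need 150 minutes of wall-clock time on 2,000 slots, but only 30 minutes on 10,000 slots.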

The server requirements of these jobs vary widely, from very small IP-level jobs that take just a few minutes to full-chip runs that require high-core-count, high-memory servers. This range of jobs is ideal for the cloud, where a wide range of server types and sizes can be matched to the requirements of each job. Front-end jobs also tend to be tolerant of failure, preemption and restart, which makes them well suited to lower-cost cloud Spot instances.

As AWS explains: “You can launch Spot Instances on spare EC2 capacity for steep discounts in exchange for returning them when Amazon EC2 needs the capacity back. When Amazon EC2 reclaims a Spot Instance, we call this event a Spot Instance interruption. You can specify that Amazon EC2 will Stop, Hibernate or terminate interrupted Spot Instances (Terminate is the default behavior).”
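Because Spot capacity can be reclaimed, a job wrapper can watch for the interruption notice and checkpoint or requeue the job before the instance goes away (EC2 posts the notice roughly two minutes ahead). The sketch below polls the EC2 instance metadata item `spot/instance-action`, which returns HTTP 404 until an interruption is scheduled, using an IMDSv2 session token. It is a minimal example that only works on an EC2 instance; the checkpoint/requeue step belongs to your grid scheduler and is not shown.

```python
import json
import urllib.error
import urllib.request
from datetime import datetime, timezone
from typing import Optional

IMDS = "http://169.254.169.254/latest"


def parse_instance_action(body: str) -> tuple[str, datetime]:
    """Parse an instance-action document such as {"action": "terminate", "time": "..."}."""
    doc = json.loads(body)
    when = datetime.strptime(doc["time"], "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    return doc["action"], when


def pending_interruption(timeout: float = 2.0) -> Optional[tuple[str, datetime]]:
    """Return (action, time) if an interruption is scheduled, else None. EC2 only."""
    # IMDSv2: request a short-lived session token first.
    token_req = urllib.request.Request(
        f"{IMDS}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": "60"},
    )
    with urllib.request.urlopen(token_req, timeout=timeout) as resp:
        token = resp.read().decode()
    req = urllib.request.Request(
        f"{IMDS}/meta-data/spot/instance-action",
        headers={"X-aws-ec2-metadata-token": token},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return parse_instance_action(resp.read().decode())
    except urllib.error.HTTPError as err:
        if err.code == 404:  # no interruption scheduled
            return None
        raise
```

A wrapper would call `pending_interruption()` every few seconds while the job runs and, on a non-`None` result, save state and ask the scheduler to resubmit the job elsewhere.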

New AI-driven workflows such as DSO.ai lend themselves to burst-to-cloud use models. Instead of doing a single run, analyzing the results, tweaking parameters and re-running, these workflows kick off 30-40 runs in parallel, each with different optimization parameter settings. AI then analyzes which runs had the best outcomes and uses those results to seed the next 30-40 runs.
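The loop described above (launch a batch of runs with different settings, keep the best, seed the next batch) can be sketched as a generic seeded random search with elitism. This is not how DSO.ai works internally, which the article does not describe. `run_flow` is a hypothetical stand-in with a toy cost function where a real flow would launch an implementation job and read back PPA metrics, and the knob names `util` and `effort` are invented for illustration.

```python
import random
from concurrent.futures import ThreadPoolExecutor

Params = dict[str, float]


def run_flow(params: Params) -> float:
    """Hypothetical stand-in for one implementation run; returns a cost (lower is better)."""
    # Toy cost surface with its minimum at util=0.7, effort=0.5.
    return (params["util"] - 0.7) ** 2 + (params["effort"] - 0.5) ** 2


def perturb(seed: Params, rng: random.Random, scale: float) -> Params:
    # Nudge each knob around a good seed, clamped to the [0, 1] range.
    return {k: min(1.0, max(0.0, v + rng.gauss(0.0, scale))) for k, v in seed.items()}


def explore(generations: int = 5, batch: int = 32, keep: int = 4, seed: int = 0) -> tuple[float, Params]:
    rng = random.Random(seed)
    # Generation 0: spread the batch randomly across the parameter space.
    candidates = [{"util": rng.random(), "effort": rng.random()} for _ in range(batch)]
    best = []
    for gen in range(generations):
        # Run the whole batch in parallel (in the cloud: one job per setting).
        with ThreadPoolExecutor(max_workers=8) as pool:
            costs = list(pool.map(run_flow, candidates))
        ranked = sorted(zip(costs, candidates), key=lambda pair: pair[0])
        best = ranked[:keep]
        # Seed the next batch: keep the best settings and perturb them with shrinking steps.
        scale = 0.2 / (gen + 1)
        elites = [params for _, params in best]
        candidates = elites + [perturb(elites[i % keep], rng, scale) for i in range(batch - keep)]
    return best[0]
```

Carrying the previous best settings into each new batch guarantees the best result never gets worse from one round to the next. Batch size maps directly to burst capacity, since each `run_flow` call would become a separate cloud job.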

Designs with 20 or more block, subsystem and full-chip runs will then require 30-40 runs per analysis, a 30-40x increase in the number of jobs and the amount of data needed. Trading that increased compute demand, supplied by additional cloud capacity, for faster convergence on improved PPA makes better results achievable within a fixed schedule.

Fabs are also getting in on the burst-to-cloud use model. OPC (optical proximity correction), RET (resolution enhancement technology) and MDP (mask data preparation) jobs are some of the most compute-intensive and time-to-market sensitive jobs in the chip design cycle. Cloud scale and availability enable fast turnaround and speed up chip production.
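The compute arithmetic behind that tradeoff is simple to sketch. The 20 blocks and 35 runs per block (the midpoint of 30-40) come from the text above; the 16 cores per run, 4,000 free on-prem cores and 64-core cloud instances are hypothetical numbers chosen for illustration.

```python
import math


def burst_plan(blocks: int, runs_per_block: int, cores_per_run: int,
               onprem_free_cores: int, cores_per_instance: int) -> tuple[int, int]:
    """Return (cores to burst, instances to request) for one round of parallel runs."""
    demand = blocks * runs_per_block * cores_per_run  # cores if every run starts at once
    burst_cores = max(0, demand - onprem_free_cores)   # what on-prem cannot absorb
    instances = math.ceil(burst_cores / cores_per_instance)
    return burst_cores, instances
```

With those inputs, 20 × 35 × 16 = 11,200 cores are needed at once; 4,000 fit on-prem, leaving 7,200 cores, or 113 64-core instances, to burst.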

Challenges with Bursting to Cloud

Setting up an automated burst-to-cloud use model is the first challenge. Cloud providers offer EDA reference architectures for setting up license servers and grid engines (LSF, Grid Engine and Slurm), along with automation for provisioning compute, network and storage infrastructure. The FlexLM-based license setup is typically unchanged from on-prem; cloud jobs can even check out licenses from the same on-prem license server. The biggest challenge is often figuring out which data needs to be replicated (copied) to the cloud to run the workflows.
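Since cloud jobs may check out licenses from the on-prem server, a quick pre-flight check is to confirm that cloud nodes can reach the license servers listed in `LM_LICENSE_FILE` (`port@host` entries, colon-separated on Linux, with comma-separated triads for redundant servers). This is a minimal sketch with assumptions: it only tests the lmgrd TCP port, not the vendor daemon's port, which must also be open through the VPN or firewall, and it probes only port 27000 when an entry omits the port.

```python
import os
import socket

DEFAULT_LMGRD_PORT = 27000  # FlexLM scans 27000-27009 when no port is given; we probe only the first


def parse_license_path(value: str) -> list[tuple[str, int]]:
    """Extract (host, port) pairs from an LM_LICENSE_FILE-style string; skip license-file paths."""
    servers = []
    for entry in value.split(os.pathsep):
        # Redundant (triad) servers are written as a comma-separated list.
        for part in entry.split(","):
            part = part.strip()
            if "@" not in part:
                continue  # a local license file path, not port@host
            port, host = part.split("@", 1)
            servers.append((host, int(port) if port else DEFAULT_LMGRD_PORT))
    return servers


def reachable(host: str, port: int, timeout: float = 3.0) -> bool:
    """True if a TCP connection to the license daemon's port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

On a freshly provisioned cloud node, looping over `parse_license_path(os.environ.get("LM_LICENSE_FILE", ""))` and calling `reachable` on each pair flags firewall or routing gaps before any job fails on a license checkout.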

Most design flows point to a myriad of design files scattered across many different data volumes: tools, libraries, third-party IP, CAD flow scripts, RCS files (like P4, ICManage, etc.) and even some files in users’ directories. The first challenge is figuring out WHAT files (or volumes) of data the flow being burst to the cloud needs. The second, more obvious, issue is HOW to transfer these files to the cloud.

Sadly, there is no simple answer to the WHAT files question. The obvious RCS, tool and library files are typically easy to identify. Finding the rest may require running the job in the cloud and iterating by trial and error: run, find missing files, copy them, repeat. This can be very challenging and time-consuming, particularly if you must copy lots of data.
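One way to shorten that trial-and-error loop, not something the article prescribes, is to trace a representative job on-prem and record every file it opens, for example with `strace -f -e trace=open,openat -o job.trace <job command>`. The sketch below parses such a trace and counts the opened files per top-level volume. It only sees files the traced run actually opened, so flow branches that did not execute are missed, and strace lines split into `<unfinished ...>`/`resumed` pairs are ignored.

```python
import re
from collections import Counter
from pathlib import PurePosixPath

# Matches strace lines such as:
#   1234 openat(AT_FDCWD, "/mnt/tools/x/bin/tool", O_RDONLY) = 3
#   1234 open("/home/u/.setup", O_RDONLY) = -1 ENOENT (No such file or directory)
OPEN_RE = re.compile(r'\bopen(?:at)?\((?:[^,"]+, )?"(?P<path>[^"]+)".*\)\s+=\s+(?P<ret>-?\d+)')


def opened_files(trace: str) -> set[str]:
    """Absolute paths the job opened successfully (return value is a file descriptor >= 0)."""
    paths = set()
    for line in trace.splitlines():
        m = OPEN_RE.search(line)
        if m and int(m.group("ret")) >= 0 and m.group("path").startswith("/"):
            paths.add(m.group("path"))
    return paths


def volume_usage(paths: set[str], depth: int = 2) -> Counter:
    """Count opened files per leading directory, e.g. depth=2 -> '/mnt/tools'."""
    counts = Counter()
    for p in paths:
        parts = PurePosixPath(p).parts[1 : 1 + depth]  # skip the leading '/'
        counts["/" + "/".join(parts)] += 1
    return counts
```

Increasing `depth` narrows the answer from whole volumes (such as `/mnt/tools`) down to the specific tool version directories the flow touched.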

The other issue is the size of the data. The easy thing to do is copy the entire /mnt/tools/ directory to the cloud. But do you really need every tool and every version of each tool, or just the latest or the specific versions your flow requires? Simply copying ALL files results in long data transfer times and increased storage costs.
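To quantify how much less data the required versions amount to, you can compare the size of the version directories a flow actually uses against the whole tools tree. This is a minimal sketch; the layout (one subdirectory per tool version) is an assumption, and on a large NFS tree a storage-side report would be much faster than walking every file.

```python
import os


def tree_bytes(root: str) -> int:
    """Total size of regular files under root (symlinks are neither followed nor counted)."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if not os.path.islink(path):
                total += os.path.getsize(path)
    return total


def copy_fraction(tools_root: str, needed_dirs: list[str]) -> float:
    """Fraction of tools_root's bytes that the flow actually needs."""
    whole = tree_bytes(tools_root)
    needed = sum(tree_bytes(d) for d in needed_dirs)
    return needed / whole if whole else 0.0
```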

Then there is the question of HOW to copy the files to the cloud: rsync, scp over SSH, gtar plus FTP, or even reinstalling the tools in the cloud. All these methods work and are tried and true, but how do you keep the cloud data in sync with the on-prem data? Tools and libraries are constantly being updated, so how do you ensure that the environment you set up and got working in the cloud will still work tomorrow, after someone commits a change that points to new tool or library versions? Keeping on-prem and cloud data in sync can quickly become a maintenance headache.
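One way to see the sync problem concretely is to compare manifests of the on-prem and cloud copies. The sketch below records each file's size and modification time and reports files the cloud copy is missing, holds a stale version of, or still has after they were removed on-prem. It assumes the copy method preserves modification times (as `rsync -a` does) and compares size plus mtime rather than checksums, so it is a cheap heuristic, not a guarantee.

```python
import os

Manifest = dict[str, tuple[int, int]]  # relative path -> (size in bytes, mtime in ns)


def build_manifest(root: str) -> Manifest:
    manifest = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)  # record symlinks themselves rather than following them
            manifest[os.path.relpath(path, root)] = (st.st_size, st.st_mtime_ns)
    return manifest


def drift(onprem: Manifest, cloud: Manifest) -> dict[str, list[str]]:
    """Classify differences between the on-prem (authoritative) and cloud manifests."""
    return {
        "missing": sorted(p for p in onprem if p not in cloud),
        "stale": sorted(p for p in onprem if p in cloud and onprem[p] != cloud[p]),
        "extra": sorted(p for p in cloud if p not in onprem),
    }
```

Every non-empty list is another copy job to schedule, and someone has to run this check every time tools or libraries change on-prem.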

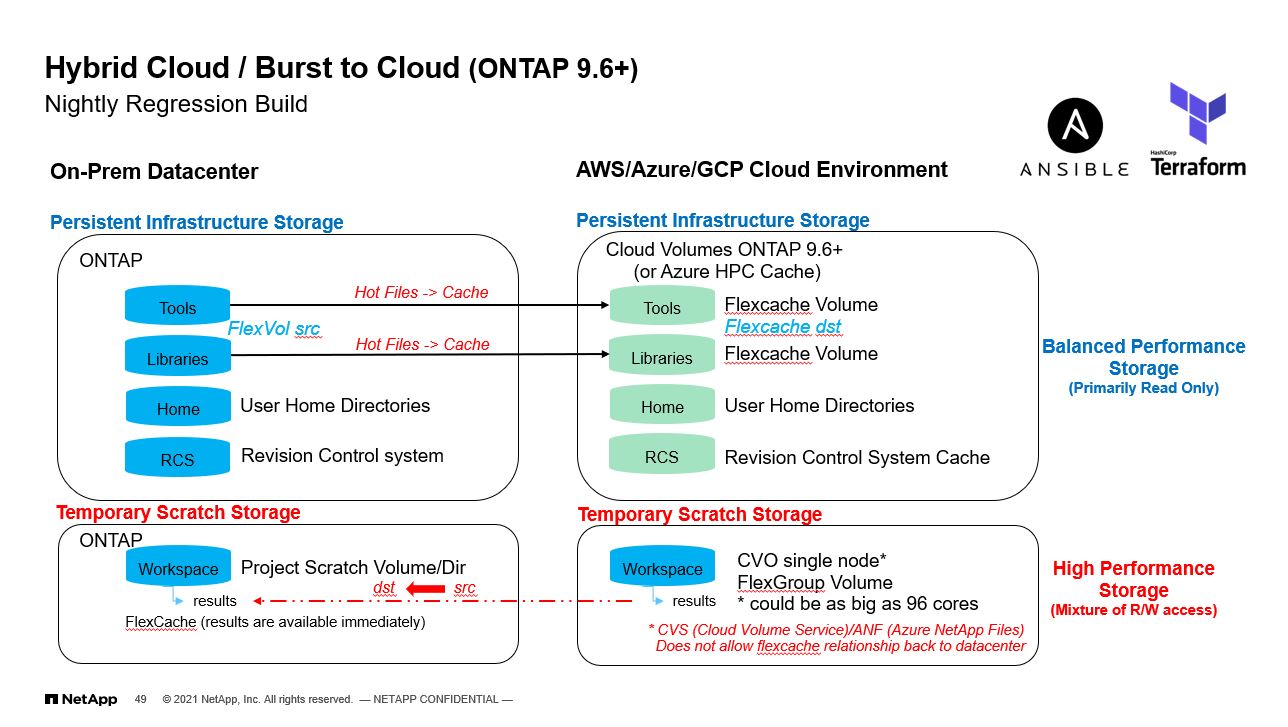

The diagram above shows the various directories (or volumes of data) that need to be exported to the cloud. A given design flow typically uses only one version of its tools and libraries, but points to tool and library installations that contain many different versions. By definition, bursting to the cloud is a short-term activity: it needs to be available at a moment’s notice but, for cost control, terminated when no longer needed.

NetApp makes data mobile and on-demand

NetApp’s ONTAP storage operating system has been the tried-and-true on-prem solution through 20 years of semiconductor innovation. Cloud Volumes ONTAP (CVO) is the same feature-rich storage operating system your IT teams have relied on for years, and it runs in all three major clouds. CVO has been available in AWS, Azure and Google Cloud since before 2017 and makes migrating or bursting to the cloud as easy as running on-prem.

ONTAP’s FlexCache is a data replication technology that enables fast and secure replication of data into the cloud, and it is ideal for replicating tool and library data. Once a FlexCache volume is provisioned in the cloud and set to cache a pre-existing on-prem volume, all the on-prem files become visible in the cloud cache volume almost instantly. Even though the files appear to be in the cloud, a file's data is not actually transferred to the cache until the file is read. Subsequent reads are served directly from the cache, since the file is already there. This means that when a job runs in the cloud, the flow will find all the files it needs, even if the on-prem data was recently changed. With ONTAP 9.8, the cache can be pre-warmed via a script, but it is more common to run one job first to warm the cache so that the files are already populated when the large job set runs.
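Warming the cache by running a first job amounts to reading the needed files once through the cache mount. This client-side sketch is not NetApp tooling and is separate from the ONTAP pre-warming mentioned above: it reads a list of files in parallel and discards the data. The file list (for example, gathered from a previous run) and the cache mount path are assumptions.

```python
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK = 1 << 20  # read in 1 MiB chunks; the data itself is discarded


def read_through(path: str) -> int:
    """Read a file end to end so its data is pulled through the cache; return bytes read."""
    total = 0
    with open(path, "rb") as f:
        while chunk := f.read(CHUNK):
            total += len(chunk)
    return total


def prewarm(paths: list[str], workers: int = 16) -> int:
    """Read many files in parallel through the cache mount; return total bytes read."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(read_through, paths))
```

Parallel reads matter here because each cold file costs a round trip to the on-prem origin; spreading them over several threads hides much of that latency.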

Cached tool and library volumes are typically read-heavy, with writes occurring only when new tools or libraries are installed. FlexCache makes distributing new tools and libraries easy, requiring no additional automation or synchronization: the CAD team only needs to install tools in the source (origin) volume, and the new files are instantly visible and available on the FlexCache volumes. ONTAP supports a fan-out of up to 100 FlexCache volumes from a single source volume, so a single tool volume can be replicated to many remote data centers and cloud regions.
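The behavior described above can be illustrated with a toy in-memory model. This is not how ONTAP implements FlexCache, which keeps caches coherent with its own protocol; it only mimics the visible effects: the namespace appears immediately, data is fetched from the origin on first read, a new install at the origin invalidates cached copies, and one origin accepts at most 100 caches.

```python
MAX_FANOUT = 100  # caches per origin volume, as stated in the text


class Origin:
    """Source volume: the single place where tools and libraries are installed."""

    def __init__(self) -> None:
        self.files: dict[str, bytes] = {}
        self.caches: list["Cache"] = []

    def write(self, path: str, data: bytes) -> None:
        self.files[path] = data
        # Drop any cached copy so every cache refetches the new data on its next read.
        for cache in self.caches:
            cache.blocks.pop(path, None)


class Cache:
    """Sparse cache volume: holds only the files that have been read through it."""

    def __init__(self, origin: Origin) -> None:
        if len(origin.caches) >= MAX_FANOUT:
            raise RuntimeError("origin already has the maximum number of caches")
        self.origin = origin
        self.blocks: dict[str, bytes] = {}
        self.fetches = 0  # reads that had to go back to the origin
        origin.caches.append(self)

    def listdir(self) -> list[str]:
        # The namespace is visible immediately, before any data is copied.
        return sorted(self.origin.files)

    def read(self, path: str) -> bytes:
        if path not in self.blocks:  # cold miss: fetch from the origin and keep a copy
            self.blocks[path] = self.origin.files[path]
            self.fetches += 1
        return self.blocks[path]
```

In the model, a cache that never reads a file never stores it, which is why the footprint of a cache stays a fraction of the origin.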

FlexCache makes replicating on-prem environments into the cloud fast, easy and storage-efficient, since only the files that are needed get copied to the cloud. FlexCache volumes are also a great way to load-balance tool and library mounts across large server farms using DNS. Instead of having 10k cores all reading from a single tools and library mount, multiple FlexCache replicas can be created to spread out the NFS mounts and improve read performance.
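The text describes spreading mounts with DNS, for example several records for one name that resolve to different cache replicas. An alternative, not described in the article, is to pin each compute host to a replica deterministically, for instance when generating automounter maps. The sketch below hashes the hostname to pick a replica; the replica and host names are hypothetical.

```python
import hashlib
from collections import Counter


def pick_replica(client: str, replicas: list[str]) -> str:
    """Deterministically map a compute host to one cache replica."""
    if not replicas:
        raise ValueError("need at least one replica")
    digest = hashlib.sha256(client.encode()).digest()
    # Interpret the first 8 bytes of the hash as an integer and reduce modulo the replica count.
    return replicas[int.from_bytes(digest[:8], "big") % len(replicas)]


if __name__ == "__main__":
    replicas = ["fc-tools-01", "fc-tools-02", "fc-tools-03", "fc-tools-04"]
    load = Counter(pick_replica(f"node{i:05d}", replicas) for i in range(10_000))
    print(load)  # roughly 2,500 hosts per replica
```

Unlike round-robin DNS, the same host always mounts the same replica, which keeps each replica's cache focused on a stable set of clients; the tradeoff is that adding a replica reshuffles most assignments.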

FlexCache volumes can also be used in reverse. Instead of replicating data to the cloud, a FlexCache volume on-prem can point to a volume in the cloud. Reverse caching lets designers view and debug data on-prem without having to log into the cloud.

Summary/Conclusion

Hybrid cloud use models have matured and are ready for mainstream semiconductor development. Rapidly spinning up cloud environments for “peak sharing” or “burst to cloud” use has proven effective in meeting aggressive project schedules.

NetApp’s ONTAP storage operating system makes connecting on-prem data to the cloud easy. It can eliminate manual and other ad hoc methods of managing multiple copies of data, and it accelerates data access. Because sparse cache volumes hold only the data that is actually read, it can dramatically reduce the storage footprint, so storage needs are a fraction of the original dataset. Connections between on-prem and the cloud are secure, with data encrypted both at rest and in flight.

If you would like to learn more, contact your local NetApp sales or support team.
