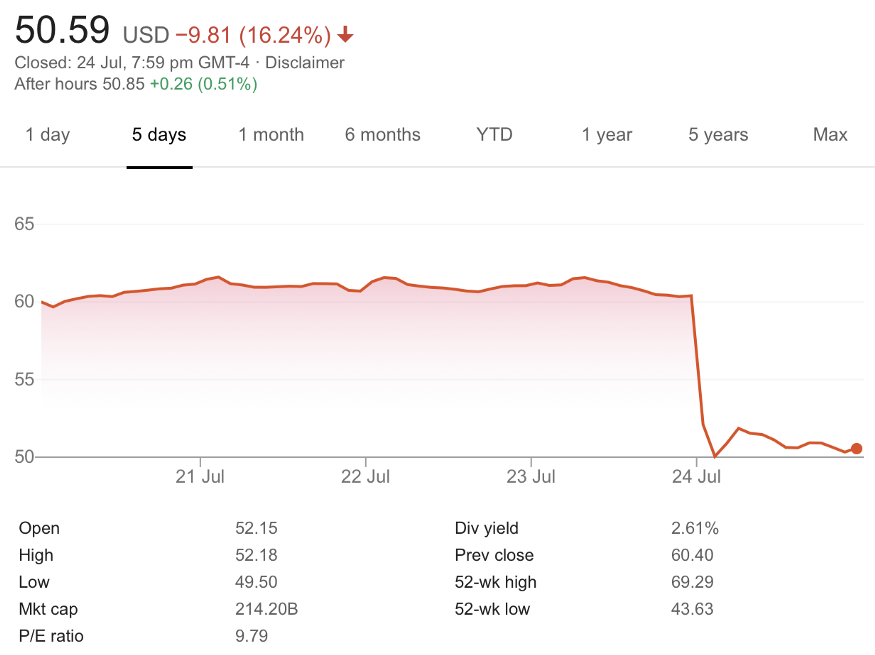

Last week, Intel announced its second-quarter financial results, which beat the analysts’ consensus expectations by a handsome margin. Yet the stock price plummeted by over 16% right after the earnings call with management. Seven analysts downgraded the stock to a sell, and the common theme across the downgrades was that Intel’s 7-nanometer process was delayed again, which meant that Intel had fallen behind in its process technology and was lagging TSMC by a wide margin.

Intel posted $19.7BN in revenues vs. the Street’s $18.54BN and generated EPS of $1.23 vs. $1.12, but on its earnings call it also delivered more bad news on its manufacturing process technology. As Jefferies analyst Jared Weisfeld wrote in a note after the call:

But the 7nm commentary is a thesis changer for many as the Company pushed out its roadmap and outsourcing CPU manufacturing to a 3rd party foundry is officially part of the conversation. The company is seeing a 6 month shift in 7nm product timing vs. prior expectations with yields as the primary culprit, which are 12 months behind target. The Company identified a defect which has caused the delay and they claim to have root caused the issue and believe there are no fundamental roadblocks.

There’s a lot to unpack in that paragraph, but the key question it raises is how could it be that Intel, which was for decades the undisputed leader in manufacturing process technology which allowed it to deliver to market the highest performance and highest margin CPUs for PCs and servers, lost its lead so dramatically?

I believe that Intel is in the middle of what Andy Grove called a “Strategic Inflection Point” (SIP) that goes to the heart of its business. The last time Intel faced such an existential SIP was in 1984 when it was losing market share in memory chips, which was its core business, and it pivoted to become a microprocessor company. In Grove’s book, Only the Paranoid Survive, he described how Intel made that transition and, as a result, by 1995 became the world’s largest and most influential semiconductor company. Clearly Grove made the right decision to pivot into microprocessors as that got the company out of a highly competitive low-margin commodity business and into a ridiculously high margin business of providing a highly differentiated and proprietary product with well over 90% market share.

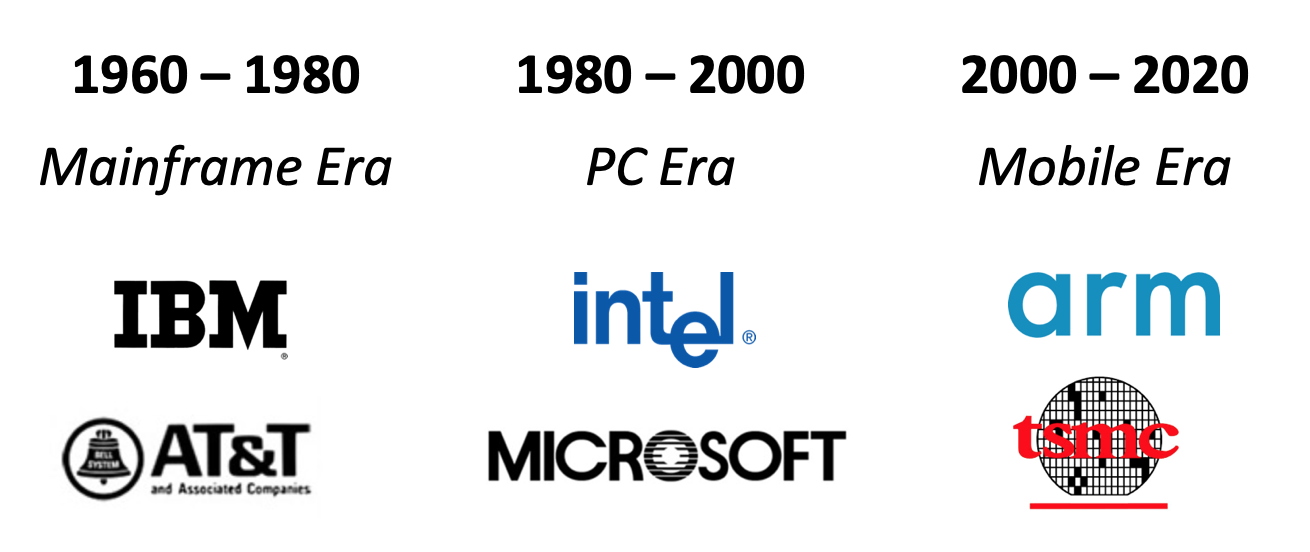

Intel continued to hold its position as the world’s largest semiconductor company until 2017, when Samsung overtook it by revenues and TSMC caught up on manufacturing process technology. To understand how Intel became the dominant semiconductor company, held that position for over 20 years, and then lost it, one has to go back in time to 1985, when Intel’s 386 chip was its lead product in the then-nascent PC market.

What Grove could not have fully appreciated at the time he was navigating the memory to microprocessor pivot was how significant that move would later turn out to be. At the time, PCs were still a relatively small market and ran MS-DOS from Microsoft which had a clunky text-based user interface. PCs were underpowered compared with the mainframes, and relegated to simple tasks such as word processing and spreadsheets, while mainframes, minicomputers, and workstations continued to be used for “real” computer work. Nobody back then could have imagined that microprocessors from Intel would become the brains behind the entire computer industry for decades to come. When I joined Intel in 1983, nobody even at Intel used PCs for their work. As a software developer, I was coding for a Digital Equipment Corporation (DEC) VAX minicomputer and Apollo workstations. Neither of these two companies exists anymore.

What Intel was able to accomplish was to harness the advanced manufacturing expertise that it had honed through manufacturing memory chips in volume, and to create an engine of innovation that leveraged the tight coupling of Intel’s microprocessor design with a manufacturing capability that gave the CPU designers more and more transistors to build each generation of microprocessor, making it faster and cheaper. Moore’s law was less of a law than a mandate to the manufacturing side of Intel to keep refining the process technology and shrink the size of transistors so as to pack more onto a single chip of silicon while also clocking the processors at faster speeds. To put it in perspective, the 386 had 275,000 transistors and the next generation, the 486, which I worked on, had over one million transistors. Today’s Core i7 has around 3 billion transistors.

I remember going to meetings with engineers working in the mainframe and minicomputer companies to get their feedback on the 486, because we, of course, wanted them to use that chip in their next-generation systems, and they laughed at us for being so presumptuous as to assume they would ever use what they considered a toy. However, they were very generous with their suggestions and explained to us how their advanced designs worked and why we would never meet their requirements. What they failed to take into account was that as transistor budgets grew, Intel engineers were able to add all these kinds of advanced capabilities, and more, to its CPUs, which eventually overtook their proprietary systems in performance, and at far lower cost.

The result of Intel’s rapid innovations and improvements in the price-performance of the x86 CPUs was that it created a Strategic Inflection Point for the entire computer industry. To explain this, I’ll refer back to Grove’s Only the Paranoid Survive book where he wrote:

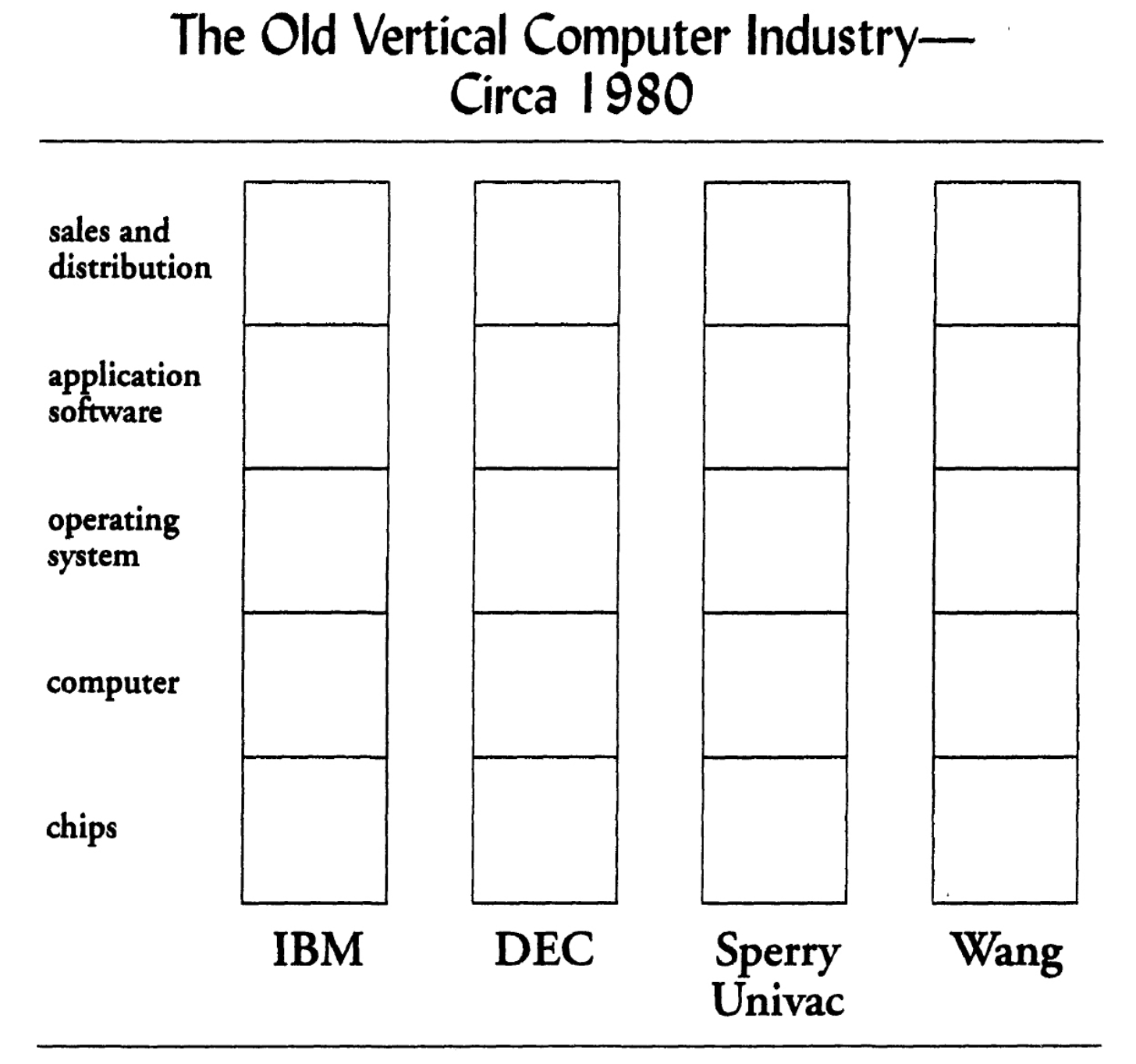

The computer industry used to be vertically aligned. As shown in the diagram that follows, this means that an old-style computer company would have its own semiconductor chip implementation, build its own computer around these chips according to its own design and in its own factories, develop its own operating system software (the software that is fundamental to the workings of all computers) and market its own applications software (the software that does things like accounts payable or airline ticketing or department store inventory control). This combination of a company’s own chips, own computers, own operating systems and own applications software would then be sold as a package by the company’s own salespeople. This is what we mean by a vertical alignment. Note how often the word “own” occurs in this description. In fact, we might as well say “proprietary,” which, in fact, was the byword of the old computer industry.

This vertically integrated approach had its pros and cons. The advantage is that when a company develops every piece itself, the parts work better together as a whole. The disadvantage is that customers got locked into one vendor, which limited choice. The other disadvantages, which are more important, are that the rate of innovation was only as fast as the slowest link in the chain and that the market was more fragmented, which prevented any one company from reaching economies of scale. The end result was that the computer industry was made up of independent islands with no interoperability or scale. Once a customer chose one solution, they were stuck with it for a very long time and paid a lot more.

Then the microprocessor came along, and as it became the basic building block for the industry, economies of scale kicked in. That greatly accelerated the rate of improvement and vastly expanded the market for PCs, and later servers, which eventually replaced the proprietary systems. Back to Grove:

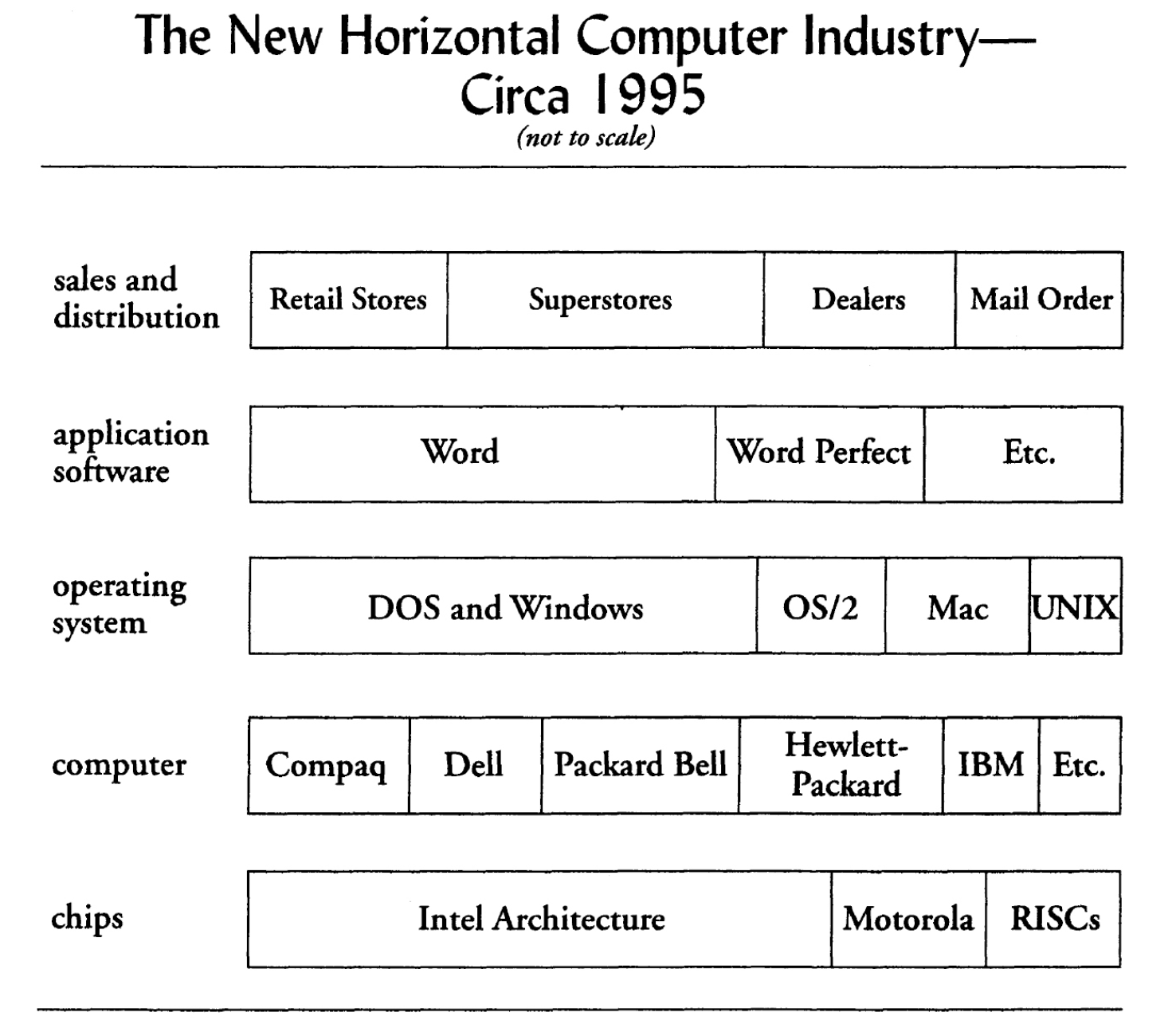

Over time, this changed the entire structure of the industry and a new horizontal industry emerged. In this new model, no one company had its own stack. A consumer could pick a chip from the horizontal chip bar, pick a computer manufacturer from the computer bar, choose an operating system out of the operating system bar, grab one of several ready-to-use applications off the shelf at a retail store or at a computer superstore and take the collection of these things home. Then he or she fired them up and hoped that they would all work together. He might have trouble making them work but he put up with that trouble and worked a bit harder because for $2,000 he had just bought a computer system that the old way couldn’t deliver for less than ten times the cost. This was such a compelling proposition that he put up with the weaknesses in order to avail himself of the power of this new way of doing business. Over time, this changed the entire structure of the computer industry, and a new horizontal industry, depicted below, emerged.

As a result of the reorientation of the industry from vertical to horizontal, many computer companies did not survive their Strategic Inflection Point. DEC, Unisys, Apollo, Data General, Prime, Wang, and many others went out of business or got acquired by PC companies, as when Compaq acquired DEC. Grove explained one of the key lessons of this massive change in the computer industry:

By virtue of the functional specialization that prevails, horizontal industries tend to be more cost-effective than their vertical equivalents. Simply put, it’s harder to be the best of class in several fields than in just one.

As industries shift from the vertical to the horizontal model, each participant will have to work its way through a strategic inflection point. Consequently, operating by these rules will be necessary for a larger and larger class of companies as time goes on.

This modularization theory was also thoroughly covered in Clayton Christensen’s paper on Disruption, disintegration and the dissipation of differentiability and explored in more detail in his book The Innovator’s Solution, where he pointed out that:

Modularity has a profound impact on industry structure because it enables independent, nonintegrated organizations to sell, buy, and assemble components and subsystems. Whereas in the interdependent world you had to make all of the key elements of the system in order to make any of them, in a modular world you can prosper by outsourcing or by supplying just one element. Ultimately, the specifications for modular interfaces will coalesce as industry standards. When that happens, companies can mix and match components from best-of-breed suppliers in order to respond conveniently to the specific needs of individual customers.

By 1995, this transformation was in full swing and the transition from the “Old Computer Industry” to the “New Computer Industry” was complete. Intel had won. However, Intel missed the next inflection point and, by missing it, sowed the seeds of the problems that it is facing today.

The strategic inflection point that Intel missed was mobile, more specifically Apple’s iPhone, which was launched in January 2007. Since the Intel x86 CPUs used too much energy, Apple chose to go with chips based on the much more power-efficient ARM architecture. As it happened, Intel had acquired StrongARM from Digital Equipment Corporation, and that technology went into the XScale processor, a low-power chip designed for mobile (subsequently sold to Marvell in 2006). Intel certainly had the engineering and manufacturing capabilities to design and supply the kind of chips that Apple needed in the new iPhone. Apple had already switched from the IBM PowerPC chips to the x86 chips in its Macs, and Steve Jobs had a very good relationship with Andy Grove and later with Paul Otellini, who had become the CEO by the time the iPhone was being designed. As Otellini described it to Alexis Madrigal in a 2013 interview in The Atlantic:

“We ended up not winning it or passing on it, depending on how you want to view it. And the world would have been a lot different if we’d done it,” Otellini told me in a two-hour conversation during his last month at Intel. “The thing you have to remember is that this was before the iPhone was introduced and no one knew what the iPhone would do… At the end of the day, there was a chip that they were interested in that they wanted to pay a certain price for and not a nickel more and that price was below our forecasted cost. I couldn’t see it. It wasn’t one of these things you can make up on volume. And in hindsight, the forecasted cost was wrong and the volume was 100x what anyone thought.”

Not being willing to win over Apple meant that Intel was shut out of the mobile phone market but, more importantly, it gave TSMC an opening to become the manufacturer of choice for the chips going into the Apple iPhone and then all the Android-based mobile devices. It took the horizontal layer that Intel controlled in semiconductors and split it into two narrower layers: one was the ARM-based CPU architecture, which ended up dominating the mobile phone market, and the other, below it, was the manufacturing of these devices, of which TSMC ended up with the lion’s share. Since ARM licenses its designs to so-called “fab-less” chip design companies, such as Qualcomm, the number of companies innovating around the ARM architecture grew to a magnitude that Intel couldn’t dream of matching. That accelerated the rate of innovation and variety, yet everything remained compatible with the ARM architecture, so the software designed for mobile phones had a larger market. In addition, TSMC, as the chip fab of choice, was able to scale up and enjoy massive economies of scale, which allowed it to push its process technology forward at an even faster rate and eventually surpass Intel, as it recently did. Even Samsung was able to catch up with Intel, first as a supplier of memory chips and later of more advanced logic chips. The result is that the leadership of the mobile era has shifted from Intel and Microsoft, sometimes referred to as Wintel, to ARM and TSMC.

Ben Thompson analyzed this trend thoroughly on his Stratechery blog in Chips and Geopolitics and in Intel and the Danger of Integration, which give an excellent account of how TSMC was able to get ahead of Intel in the manufacturing of advanced semiconductor products.

Exacerbating these external events, Intel also had the misfortune of poor leadership under Brian Krzanich, which resulted in an increased pace of senior management turnover. Even more recently, Apple has successfully lured many of Intel’s top VLSI chip designers in Israel and Oregon to work on designing Apple’s processors for its next-generation iPhones and iPads and, soon, even MacBooks.

Intel is potentially facing as big a strategic inflection point today as it did in 1984, but the major difference is that its core data center business is highly profitable and still growing thanks to the continued strength of cloud computing, and Intel still dominates as a supplier of CPUs used in cloud data centers. That sector requires very high-performance CPUs, and power consumption, although a factor, is not as important as it is in a battery-powered mobile device. However, there are a growing number of fabless chip design groups, including Amazon, Google, and Huawei, as well as startups such as Ampere, that are working on ARM-based high-performance CPUs for data centers, and that market will get even bigger with the future growth of edge computing in 5G networks. Since TSMC will be manufacturing these ARM server chips, and the new ARM-based chips in the MacBooks are rumored to be faster than Intel’s CPUs (and lower power), Intel’s advantage will erode even in its core data center business.

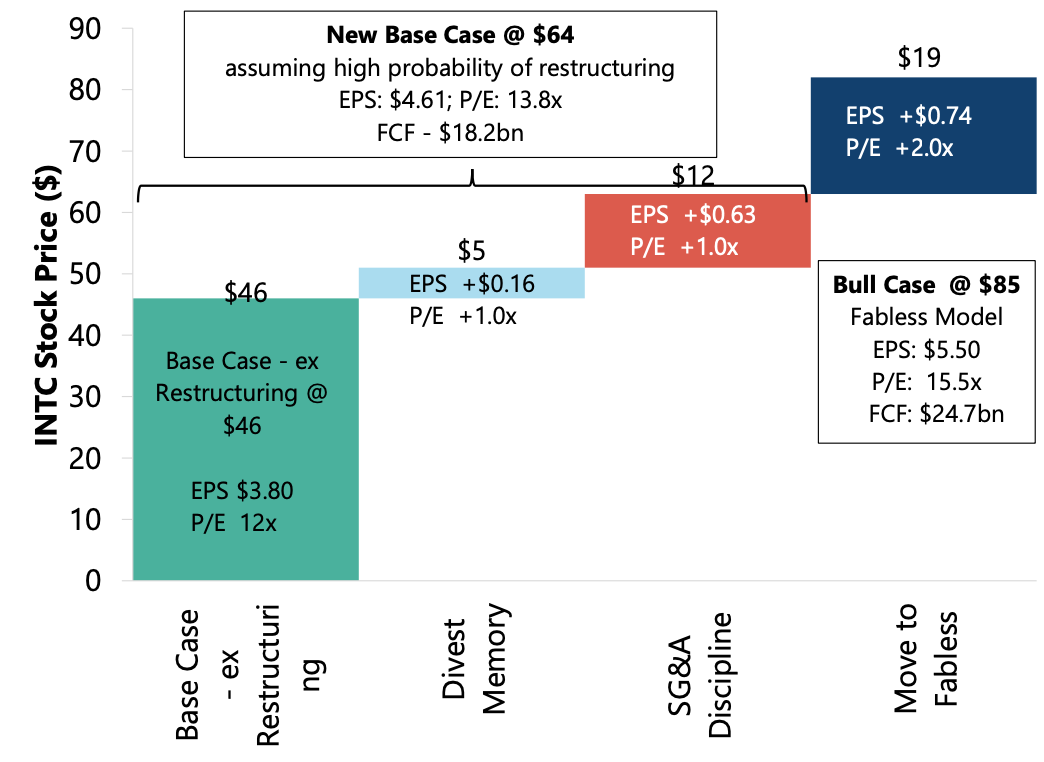

In a January research report, Mark Lipacis, an equity analyst at Jefferies, made a strong case that Intel should spin off its manufacturing business, which could then compete directly with TSMC. His analysis was that Intel would add $19 to its share price by going fab-less.

By not playing a leading role in the growth of the mobile phone market, Intel has lost not just market share and revenues but also its leadership role in the next era of computing and communications. Intel’s role in the PC era went beyond supplying the CPUs, as it controlled the ecosystem, which allowed it to influence the direction of technology to its benefit. In the mobile computing era, Intel is absent, and it has left the leadership of the mobile ecosystem to ARM and TSMC.

As mentioned earlier, Intel also lost a large number of senior managers and has recruited new management from fab-less companies who may be more receptive to spinning off the manufacturing part of Intel. The new CEO, Bob Swan, was brought in from outside the company, first as CFO, and later promoted to CEO, so he may have less attachment to the old ways and have the courage to do something bold. The board, on the other hand, still has a lot of legacy directors, who may not be receptive to such a bold but necessary move. The market has certainly made its position clear, as the stock price reflects. History is against Intel’s chances of catching up on the process technology side, and the external “10x force” of TSMC and ARM, as Grove referred to these types of changes in his book Only the Paranoid Survive, cannot be wished away. Even if Intel has enough inertia to continue on its current path, the macro trends will eventually catch up with it, and if it does not manage its way through this strategic inflection point, it will lose its preeminent position in the technology industry.
