As promised in my May 27th blog, I visited an ESL company at DAC three weeks ago that introduced two new tools:

- Thermal Profiler

- Power Intelligence


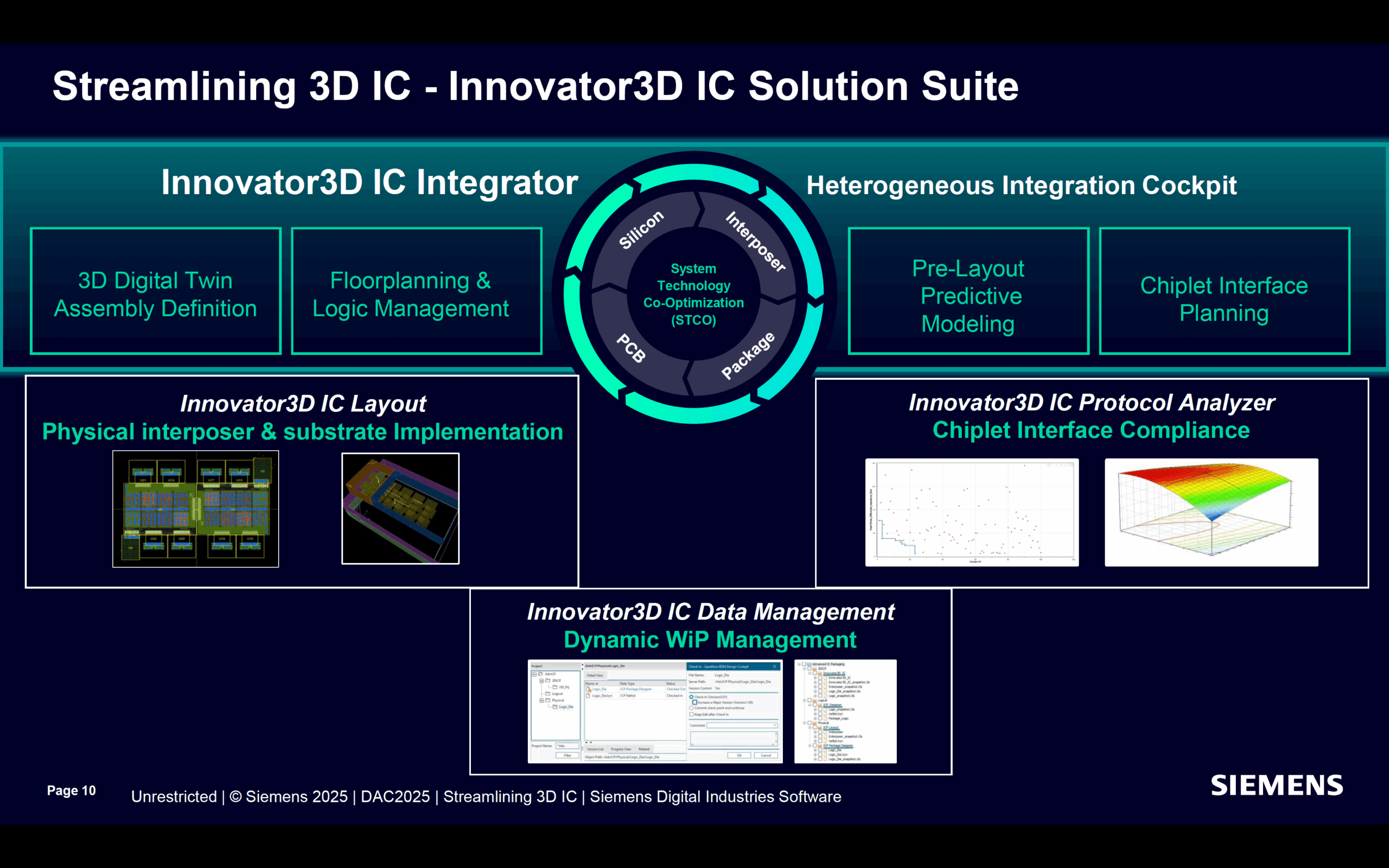


It’s hard to believe that SEMICON West is upon us once again at the Moscone Center in San Francisco. This is the premier semiconductor conference for cutting-edge equipment, processes, and materials, and for solutions to today’s design and manufacturing challenges. Remember, it’s not what you know (because … Read More

Introduction

Some recent claims hold that planar Fully Depleted Silicon On Insulator (FDSOI) is less expensive than bulk planar processes and FinFETs at various nodes. Some of these claims suggest that FinFETs in particular are significantly more expensive. My company, IC Knowledge LLC, produces the most widely… Read More

If we wanted to reduce the definition of authentication to its most Zen-like simplicity, we could say authentication is “keeping things real.” To keep something real you need to have some sort of confirmation of its identity, as confirmation is the key (so to speak).… Read More

Cliff Hou had two major appearances at DAC this year. He gave the opening day keynote… and he wrote the foreword to Dan’s and my book Fabless: The Transformation of the Semiconductor Industry, which about 1500 lucky people got a copy of courtesy of several companies, most notably eSilicon, who sponsored the Tuesday evening post-conference… Read More

Today the semiconductor industry, along with the electronics industry, is looking to capitalize on the massive expansion foreseen in the IoT (Internet of Things) domain. In simple terms, we can consider IoT as connectivity between machines that can communicate with each other and work as programmed. In localized applications such … Read More

During my illustrious career, one of the most useful axioms that I use just about every day is: “Understand what people say but also understand why they are saying it.” This certainly applies to press releases, so let’s take a look at what Intel unleashed during #51DAC (in alphabetical order):

ANSYS And Intel Collaborate… Read More

The ARC EM family is the low-power, small-footprint embedded part of the larger ARC processor line. To target ultra-low-power markets like wearables and IoT, Synopsys has added DSP capabilities to the EM5D and EM7D. To be specific, these cores are optimized for ultra-low-power control and DSP, thanks to:

One of the benefits of spending the last 30 years working in Silicon Valley and publishing a fabless semiconductor book is that I get invitations to speak at events I would normally be attending. Being on the other side of the podium is truly a unique experience and one worth pursuing, absolutely. This month I spoke at #51DAC about … Read More

My favorite method to learn about EDA tools at DAC is by listening to actual IC designers, so on June 3rd I heard Jacob Bakker from NXP talk about his experience with noise coupled analysis for advanced mixed-signal automotive ICs.… Read More


XF\Mvc\Entity\ArrayCollection Object

(

[entities:protected] => Array

(

[14021] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 51

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[thread_id] => 14021

[node_id] => 2

[title] => Radically Improved Battery, Uses Silicon instead of Graphite

[reply_count] => 19

[view_count] => 6451

[user_id] => 10499

[username] => Arthur Hanson

[post_date] => 1617796378

[sticky] => 0

[discussion_state] => visible

[discussion_open] => 1

[discussion_type] => discussion

[first_post_id] => 46387

[first_post_reaction_score] => 1

[first_post_reactions] => {"1":1}

[last_post_date] => 1752389186

[last_post_id] => 88461

[last_post_user_id] => 398779

[last_post_username] => xu fei

[prefix_id] => 0

[tags] => []

[custom_fields] => []

[vote_score] => 0

[vote_count] => 0

[type_data] => []

)

[_relations:protected] => Array

(

[User] => ThemeHouse\XLink\XF\Entity\User Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 48

[rootClass:protected] => XF\Entity\User

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[user_id] => 10499

[username] => Arthur Hanson

[username_date] => 0

[username_date_visible] => 0

[email] => a-j-hanson@att.net

[custom_title] =>

[language_id] => 1

[style_id] => 1

[timezone] => America/Los_Angeles

[visible] => 1

[activity_visible] => 1

[user_group_id] => 2

[secondary_group_ids] =>

[display_style_group_id] => 2

[permission_combination_id] => 8

[message_count] => 3256

[question_solution_count] => 0

[conversations_unread] => 0

[register_date] => 1366028460

[last_activity] => 1751760746

[last_summary_email_date] => 1606051785

[trophy_points] => 83

[alerts_unviewed] => 9

[alerts_unread] => 9

[avatar_date] => 0

[avatar_width] => 0

[avatar_height] => 0

[avatar_highdpi] => 0

[gravatar] =>

[user_state] => valid

[security_lock] =>

[is_moderator] => 0

[is_admin] => 0

[is_banned] => 0

[reaction_score] => 432

[warning_points] => 0

[is_staff] => 0

[secret_key] => ta_8_Pj20m6PDO6RnrGK-VnSP_vyhP_m

[privacy_policy_accepted] => 0

[terms_accepted] => 0

[vote_score] => 0

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[Forum] => XF\Entity\Forum Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 50

[rootClass:protected] => XF\Entity\Forum

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[discussion_count] => 7530

[message_count] => 52695

[last_post_id] => 88461

[last_post_date] => 1752389186

[last_post_user_id] => 398779

[last_post_username] => xu fei

[last_thread_id] => 14021

[last_thread_title] => Radically Improved Battery, Uses Silicon instead of Graphite

[last_thread_prefix_id] => 0

[moderate_threads] => 0

[moderate_replies] => 0

[allow_posting] => 1

[count_messages] => 1

[find_new] => 1

[allow_index] => allow

[index_criteria] =>

[field_cache] => []

[prefix_cache] => []

[prompt_cache] => []

[default_prefix_id] => 0

[default_sort_order] => last_post_date

[default_sort_direction] => desc

[list_date_limit_days] => 0

[require_prefix] => 0

[allowed_watch_notifications] => all

[min_tags] => 0

[forum_type_id] => discussion

[type_config] => {"allowed_thread_types":["poll"]}

)

[_relations:protected] => Array

(

[Node] => XF\Entity\Node Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 49

[rootClass:protected] => XF\Entity\Node

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[title] => SemiWiki Main Forum ( Ask the Experts! )

[description] => Post your questions to the experts here!

[node_name] =>

[node_type_id] => Forum

[parent_node_id] => 1

[display_order] => 1

[display_in_list] => 1

[lft] => 2

[rgt] => 3

[depth] => 1

[style_id] => 0

[effective_style_id] => 0

[breadcrumb_data] => {"1":{"node_id":1,"title":"Main Category","depth":0,"lft":1,"node_name":null,"node_type_id":"Category","display_in_list":true}}

[navigation_id] =>

[effective_navigation_id] =>

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[22788] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 55

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[thread_id] => 22788

[node_id] => 2

[title] => GlobalWafers to open U.S.' first 12-inch silicon wafer fab soon

[reply_count] => 3

[view_count] => 2604

[user_id] => 5

[username] => Daniel Nenni

[post_date] => 1747070249

[sticky] => 0

[discussion_state] => visible

[discussion_open] => 1

[discussion_type] => discussion

[first_post_id] => 86148

[first_post_reaction_score] => 4

[first_post_reactions] => {"1":4}

[last_post_date] => 1752389073

[last_post_id] => 88460

[last_post_user_id] => 398779

[last_post_username] => xu fei

[prefix_id] => 0

[tags] => []

[custom_fields] => []

[vote_score] => 0

[vote_count] => 0

[type_data] => []

)

[_relations:protected] => Array

(

[User] => ThemeHouse\XLink\XF\Entity\User Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 52

[rootClass:protected] => XF\Entity\User

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[user_id] => 5

[username] => Daniel Nenni

[username_date] => 0

[username_date_visible] => 0

[email] => dnenni@semiwiki.com

[custom_title] => Admin

[language_id] => 1

[style_id] => 0

[timezone] => America/Los_Angeles

[visible] => 1

[activity_visible] => 1

[user_group_id] => 3

[secondary_group_ids] => 4,5,132

[display_style_group_id] => 3

[permission_combination_id] => 88

[message_count] => 13902

[question_solution_count] => 0

[conversations_unread] => 0

[register_date] => 1280720820

[last_activity] => 1752363934

[last_summary_email_date] => 1605968657

[trophy_points] => 113

[alerts_unviewed] => 32

[alerts_unread] => 32

[avatar_date] => 1663211649

[avatar_width] => 110

[avatar_height] => 107

[avatar_highdpi] => 0

[gravatar] =>

[user_state] => valid

[security_lock] =>

[is_moderator] => 1

[is_admin] => 1

[is_banned] => 0

[reaction_score] => 7002

[warning_points] => 0

[is_staff] => 1

[secret_key] => 0HwyUVVHCwJotUUVEpvqAclfYJdGNPpw

[privacy_policy_accepted] => 0

[terms_accepted] => 0

[vote_score] => 0

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[Forum] => XF\Entity\Forum Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 50

[rootClass:protected] => XF\Entity\Forum

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[discussion_count] => 7530

[message_count] => 52695

[last_post_id] => 88461

[last_post_date] => 1752389186

[last_post_user_id] => 398779

[last_post_username] => xu fei

[last_thread_id] => 14021

[last_thread_title] => Radically Improved Battery, Uses Silicon instead of Graphite

[last_thread_prefix_id] => 0

[moderate_threads] => 0

[moderate_replies] => 0

[allow_posting] => 1

[count_messages] => 1

[find_new] => 1

[allow_index] => allow

[index_criteria] =>

[field_cache] => []

[prefix_cache] => []

[prompt_cache] => []

[default_prefix_id] => 0

[default_sort_order] => last_post_date

[default_sort_direction] => desc

[list_date_limit_days] => 0

[require_prefix] => 0

[allowed_watch_notifications] => all

[min_tags] => 0

[forum_type_id] => discussion

[type_config] => {"allowed_thread_types":["poll"]}

)

[_relations:protected] => Array

(

[Node] => XF\Entity\Node Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 49

[rootClass:protected] => XF\Entity\Node

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[title] => SemiWiki Main Forum ( Ask the Experts! )

[description] => Post your questions to the experts here!

[node_name] =>

[node_type_id] => Forum

[parent_node_id] => 1

[display_order] => 1

[display_in_list] => 1

[lft] => 2

[rgt] => 3

[depth] => 1

[style_id] => 0

[effective_style_id] => 0

[breadcrumb_data] => {"1":{"node_id":1,"title":"Main Category","depth":0,"lft":1,"node_name":null,"node_type_id":"Category","display_in_list":true}}

[navigation_id] =>

[effective_navigation_id] =>

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[23162] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 59

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[thread_id] => 23162

[node_id] => 2

[title] => Intel’s CEO: ‘We are not in the top 10’ of leading chip companies

[reply_count] => 40

[view_count] => 4675

[user_id] => 332012

[username] => osnium

[post_date] => 1752113431

[sticky] => 0

[discussion_state] => visible

[discussion_open] => 1

[discussion_type] => discussion

[first_post_id] => 88377

[first_post_reaction_score] => 6

[first_post_reactions] => {"1":6}

[last_post_date] => 1752388432

[last_post_id] => 88459

[last_post_user_id] => 332041

[last_post_username] => XYang2023

[prefix_id] => 0

[tags] => []

[custom_fields] => []

[vote_score] => 0

[vote_count] => 0

[type_data] => []

)

[_relations:protected] => Array

(

[User] => ThemeHouse\XLink\XF\Entity\User Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 56

[rootClass:protected] => XF\Entity\User

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[user_id] => 332012

[username] => osnium

[username_date] => 0

[username_date_visible] => 0

[email] => prelim_upturn_0p@icloud.com

[custom_title] =>

[language_id] => 1

[style_id] => 0

[timezone] => America/Los_Angeles

[visible] => 1

[activity_visible] => 1

[user_group_id] => 2

[secondary_group_ids] =>

[display_style_group_id] => 2

[permission_combination_id] => 8

[message_count] => 302

[question_solution_count] => 0

[conversations_unread] => 4

[register_date] => 1708476721

[last_activity] => 1752383024

[last_summary_email_date] => 1708476721

[trophy_points] => 43

[alerts_unviewed] => 10

[alerts_unread] => 10

[avatar_date] => 0

[avatar_width] => 0

[avatar_height] => 0

[avatar_highdpi] => 0

[gravatar] =>

[user_state] => valid

[security_lock] =>

[is_moderator] => 0

[is_admin] => 0

[is_banned] => 0

[reaction_score] => 237

[warning_points] => 0

[is_staff] => 0

[secret_key] => LslDOzdhXWoAXmgbm-AZIYJy2n9ao8Yy

[privacy_policy_accepted] => 1708476721

[terms_accepted] => 1708476721

[vote_score] => 0

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[Forum] => XF\Entity\Forum Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 50

[rootClass:protected] => XF\Entity\Forum

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[discussion_count] => 7530

[message_count] => 52695

[last_post_id] => 88461

[last_post_date] => 1752389186

[last_post_user_id] => 398779

[last_post_username] => xu fei

[last_thread_id] => 14021

[last_thread_title] => Radically Improved Battery, Uses Silicon instead of Graphite

[last_thread_prefix_id] => 0

[moderate_threads] => 0

[moderate_replies] => 0

[allow_posting] => 1

[count_messages] => 1

[find_new] => 1

[allow_index] => allow

[index_criteria] =>

[field_cache] => []

[prefix_cache] => []

[prompt_cache] => []

[default_prefix_id] => 0

[default_sort_order] => last_post_date

[default_sort_direction] => desc

[list_date_limit_days] => 0

[require_prefix] => 0

[allowed_watch_notifications] => all

[min_tags] => 0

[forum_type_id] => discussion

[type_config] => {"allowed_thread_types":["poll"]}

)

[_relations:protected] => Array

(

[Node] => XF\Entity\Node Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 49

[rootClass:protected] => XF\Entity\Node

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[title] => SemiWiki Main Forum ( Ask the Experts! )

[description] => Post your questions to the experts here!

[node_name] =>

[node_type_id] => Forum

[parent_node_id] => 1

[display_order] => 1

[display_in_list] => 1

[lft] => 2

[rgt] => 3

[depth] => 1

[style_id] => 0

[effective_style_id] => 0

[breadcrumb_data] => {"1":{"node_id":1,"title":"Main Category","depth":0,"lft":1,"node_name":null,"node_type_id":"Category","display_in_list":true}}

[navigation_id] =>

[effective_navigation_id] =>

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[23074] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 63

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[thread_id] => 23074

[node_id] => 2

[title] => Is Silicon Carbide the future of Semiconductors?

[reply_count] => 9

[view_count] => 1274

[user_id] => 10499

[username] => Arthur Hanson

[post_date] => 1750693545

[sticky] => 0

[discussion_state] => visible

[discussion_open] => 1

[discussion_type] => discussion

[first_post_id] => 87856

[first_post_reaction_score] => 0

[first_post_reactions] => []

[last_post_date] => 1752388205

[last_post_id] => 88458

[last_post_user_id] => 398779

[last_post_username] => xu fei

[prefix_id] => 0

[tags] => []

[custom_fields] => []

[vote_score] => 0

[vote_count] => 0

[type_data] => []

)

[_relations:protected] => Array

(

[User] => ThemeHouse\XLink\XF\Entity\User Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 48

[rootClass:protected] => XF\Entity\User

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[user_id] => 10499

[username] => Arthur Hanson

[username_date] => 0

[username_date_visible] => 0

[email] => a-j-hanson@att.net

[custom_title] =>

[language_id] => 1

[style_id] => 1

[timezone] => America/Los_Angeles

[visible] => 1

[activity_visible] => 1

[user_group_id] => 2

[secondary_group_ids] =>

[display_style_group_id] => 2

[permission_combination_id] => 8

[message_count] => 3256

[question_solution_count] => 0

[conversations_unread] => 0

[register_date] => 1366028460

[last_activity] => 1751760746

[last_summary_email_date] => 1606051785

[trophy_points] => 83

[alerts_unviewed] => 9

[alerts_unread] => 9

[avatar_date] => 0

[avatar_width] => 0

[avatar_height] => 0

[avatar_highdpi] => 0

[gravatar] =>

[user_state] => valid

[security_lock] =>

[is_moderator] => 0

[is_admin] => 0

[is_banned] => 0

[reaction_score] => 432

[warning_points] => 0

[is_staff] => 0

[secret_key] => ta_8_Pj20m6PDO6RnrGK-VnSP_vyhP_m

[privacy_policy_accepted] => 0

[terms_accepted] => 0

[vote_score] => 0

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[Forum] => XF\Entity\Forum Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 50

[rootClass:protected] => XF\Entity\Forum

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[discussion_count] => 7530

[message_count] => 52695

[last_post_id] => 88461

[last_post_date] => 1752389186

[last_post_user_id] => 398779

[last_post_username] => xu fei

[last_thread_id] => 14021

[last_thread_title] => Radically Improved Battery, Uses Silicon instead of Graphite

[last_thread_prefix_id] => 0

[moderate_threads] => 0

[moderate_replies] => 0

[allow_posting] => 1

[count_messages] => 1

[find_new] => 1

[allow_index] => allow

[index_criteria] =>

[field_cache] => []

[prefix_cache] => []

[prompt_cache] => []

[default_prefix_id] => 0

[default_sort_order] => last_post_date

[default_sort_direction] => desc

[list_date_limit_days] => 0

[require_prefix] => 0

[allowed_watch_notifications] => all

[min_tags] => 0

[forum_type_id] => discussion

[type_config] => {"allowed_thread_types":["poll"]}

)

[_relations:protected] => Array

(

[Node] => XF\Entity\Node Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 49

[rootClass:protected] => XF\Entity\Node

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[node_id] => 2

[title] => SemiWiki Main Forum ( Ask the Experts! )

[description] => Post your questions to the experts here!

[node_name] =>

[node_type_id] => Forum

[parent_node_id] => 1

[display_order] => 1

[display_in_list] => 1

[lft] => 2

[rgt] => 3

[depth] => 1

[style_id] => 0

[effective_style_id] => 0

[breadcrumb_data] => {"1":{"node_id":1,"title":"Main Category","depth":0,"lft":1,"node_name":null,"node_type_id":"Category","display_in_list":true}}

[navigation_id] =>

[effective_navigation_id] =>

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[23108] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 67

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[thread_id] => 23108

[node_id] => 2

[title] => Better Battery Technologies to Change the Entire Electric Ecosystem

[reply_count] => 1

[view_count] => 343

[user_id] => 10499

[username] => Arthur Hanson

[post_date] => 1751284865

[sticky] => 0

[discussion_state] => visible

[discussion_open] => 1

[discussion_type] => discussion

[first_post_id] => 88066

[first_post_reaction_score] => 0

[first_post_reactions] => []

[last_post_date] => 1752387997

[last_post_id] => 88457

[last_post_user_id] => 398779

[last_post_username] => xu fei

[prefix_id] => 0

[tags] => []

[custom_fields] => []

[vote_score] => 0

[vote_count] => 0

[type_data] => []

)

[_relations:protected] => Array

(

[User] => ThemeHouse\XLink\XF\Entity\User Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 48

[rootClass:protected] => XF\Entity\User

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_values:protected] => Array

(

[user_id] => 10499

[username] => Arthur Hanson

[username_date] => 0

[username_date_visible] => 0

[email] => a-j-hanson@att.net

[custom_title] =>

[language_id] => 1

[style_id] => 1

[timezone] => America/Los_Angeles

[visible] => 1

[activity_visible] => 1

[user_group_id] => 2

[secondary_group_ids] =>

[display_style_group_id] => 2

[permission_combination_id] => 8

[message_count] => 3256

[question_solution_count] => 0

[conversations_unread] => 0

[register_date] => 1366028460

[last_activity] => 1751760746

[last_summary_email_date] => 1606051785

[trophy_points] => 83

[alerts_unviewed] => 9

[alerts_unread] => 9

[avatar_date] => 0

[avatar_width] => 0

[avatar_height] => 0

[avatar_highdpi] => 0

[gravatar] =>

[user_state] => valid

[security_lock] =>

[is_moderator] => 0

[is_admin] => 0

[is_banned] => 0

[reaction_score] => 432

[warning_points] => 0

[is_staff] => 0

[secret_key] => ta_8_Pj20m6PDO6RnrGK-VnSP_vyhP_m

[privacy_policy_accepted] => 0

[terms_accepted] => 0

[vote_score] => 0

)

[_relations:protected] => Array

(

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

)

[_previousValues:protected] => Array

(

)

[_options:protected] => Array

(

)

[_deleted:protected] =>

[_readOnly:protected] =>

[_writePending:protected] =>

[_writeRunning:protected] =>

[_errors:protected] => Array

(

)

[_whenSaveable:protected] => Array

(

)

[_cascadeSave:protected] => Array

(

)

[_behaviors:protected] =>

)

[23160] => ThemeHouse\XPress\XF\Entity\Thread Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 91

[rootClass:protected] => XF\Entity\Thread

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_relations:protected] => Array

(

[Forum] => XF\Entity\Forum Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 50

[rootClass:protected] => XF\Entity\Forum

[_useReplaceInto:protected] =>

[_newValues:protected] => Array

(

)

[_relations:protected] => Array

(

[Node] => XF\Entity\Node Object

(

[_uniqueEntityId:XF\Mvc\Entity\Entity:private] => 49

[rootClass:protected] => XF\Entity\Node

[_useReplaceInto:protected] =>