From our book “Mobile Unleashed”, this is the semiconductor history of Apple Computer:

Observed in hindsight after the iPhone, Apple’s distant struggles of 1997 seem strange, almost hard to fathom. Had it not been for the shrewd investment in ARM, Apple might have lacked the cash it needed to survive its crisis. However, cash was far from the only ingredient required to conjure up an Apple comeback.

Apple found its voice and immense profits from a combination of excellent consumer-friendly designs and relentless lifestyle marketing. No technology company in history has undergone a greater transformation, reaching a near-religious status with its followers. At its head during the makeover was its old leader, chastened but not quite humbled.

Better Call Steve

Steve Jobs rev 2.0 publicly insisted he did not want to be Apple CEO again, preferring to return as an advisor after what he called his NeXT “hiatus”. In fact, by May 16, 1997, he had taken his company and the CTO role back, and taken a lot of good people and products to the woodshed. He then took to the pulpit at the close of the Apple Worldwide Developers Conference (WWDC).

Jobs walked on stage, sat on a wooden stool, sipped a beverage from a paper cup, expressed gratitude for the warm welcome, and proclaimed he would chat and take questions. The first inquiry: What about OpenDoc? It was one of dozens of cancelled initiatives. Jobs sarcastically but emphatically said, “What about it? It’s dead, right?” Amid audience laughter, the befuddled developer who asked the question said he didn’t know; it must be alive because he was still working on it.

Standing up and setting down the cup, Jobs began pacing. He first apologized to developers, saying he felt their pain. Folding his hands for effect, he then said exactly what a room full of tormented engineers wanted to hear. “Apple suffered for several years from lousy engineering management. I have to say it. There were people going off in 18 different directions doing arguably interesting things in each one of them. Good engineers, lousy management.”

With disdain for what anyone outside his circle of trust might think, Jobs planted his key message: “Focusing is about saying no. Focusing is about saying no.” The subtext: we are not just killing stuff, we’re focusing. Genius. For the next several years, probably three or four, he said the task was not to “reinvent the world” but to reinforce the Macintosh. He did not care about being different for the sake of being different; he wanted the Mac to be better, much better than the alternatives. Rhapsody, the new operating system project built on NeXT technology that would become Mac OS X, had to win with developers and third parties. That included Adobe and (gasp) Microsoft. He alluded to a less parochial strategy in the future, saying, “There are a lot of smart people that don’t work at Apple.”

After more Mac-related questions, there was a curve ball: What do you think Apple should do with Newton? Jobs recoiled and laughed uncomfortably, muttering, “You had to ask that.” He propped himself against a display table and paused an agonizing 15 seconds, staring at the floor. He sighed deeply, and finally nodded slightly in self-affirmation of what he was about to say.

In very measured tones, Jobs said companies can rarely manage two large operating systems, and he could not imagine succeeding with three. With Mac OS and Rhapsody, Newton made three – it had nothing to do with how good or bad Newton was; it was unsustainable. The follow-up: Have you used a Newton? Jobs said the first one was “a piece of junk” that he threw away. The Motorola Envoy lasted three months before it, too, earned the junk label. He had heard the latest Newtons were a lot better, but hadn’t tried one. Shouts from the crowd urged him to.

Jobs was about to walk a fine line: calling the mobile baby ugly and tossing it in a dumpster, while positioning Apple for a future he was already planning.

“My problem is … to me, the high order bit is connectivity.” In 1997, Wi-Fi was not nearly as pervasive or fast as it is today, and 3G mobile networks were still on the drawing board. He said it was about email, and to do email, you needed a keyboard and connectivity. He said he didn’t care what OS was inside such a device, but he didn’t want “a little scribble thing.” That was the end of Newton.

Legions of the faithful waited nervously. The June 1997 cover of Wired depicted an Apple logo superimposed on a sunburst and wrapped in a crown of thorns, with the caption “Pray”, a not-so-subtle reference. Gil Amelio resigned as Apple CEO in July, outmaneuvered and powerless to say or do anything else.

At Macworld Boston on August 6, 1997, Jobs announced a sweeping agreement with Microsoft. Tired of fighting unproductive IP battles, Apple and Microsoft settled and cross-licensed patents. Next came a five-year pledge for Microsoft Office on Macintosh, with parallel releases to Windows versions. Apple licensed Internet Explorer, and the parties agreed to collaborate on Java.

To a chorus of boos and catcalls from the audience, Jobs revealed a Microsoft equity position in Apple: $150M in at-market purchases of stock. The shares were non-voting, allaying concerns about Microsoft gaining influence over Apple. This was a clear signal to institutional investors that Apple stock was not only safe, but also a growth opportunity. Jobs declared, “The era of setting this up as a competition between Apple and Microsoft is over as far as I’m concerned.”

Apple stock rose 33% that day, and Jobs took the interim CEO role the next month; he would remain Apple’s CEO for 14 years. Refocused, and financed in part with cash from sales of ARM stock, Apple began its recovery. Investors saw rewards as the stock climbed – rising 212% in 1998, and another 151% in 1999.

Design and Digital Dreams

Restoring the shine to Apple would take more than a focus on new software. Jobs had always been passionate about design, his tastes evolving from an early interest in Eichler-style homes in the Bay Area, to the gunmetal gray of Sony consumer electronics, to the spare, Bauhaus-inspired style that permeated the Macintosh.

Hartmut Esslinger of Frog Design was an early confidant of Jobs, creating the “Snow White” design language of the Lisa and Macintosh days. Esslinger also created the NeXTcube. Jobs turned to Esslinger in early 1997 as an advisor to help build his recovery strategy. Esslinger was the voice calling for making peace with Microsoft and Bill Gates, focusing a messy hardware product line, and elevating industrial design to the highest priority.

Fixing hardware would take a strong manager. Jon Rubinstein managed hardware design at NeXT, then departed to help form what became FirePower Systems, a Mac cloner acquired by Motorola in 1996. Rubinstein joined Apple in February 1997 as senior VP of hardware engineering. His first months were spent scuttling projects, overseeing layoffs, and convincing key performers to stay for a strategy he knew was coming, but could not fully share.

One of those key performers was Apple’s young industrial design leader, Jonathan Ive. An employee since 1992, Ive and his team had redesigned the later versions of Newton and other products. Prior to Jobs’ return, the industrial design team was sequestered off-campus with dwindling resources – even the almighty Cray supercomputer had disappeared in cost-cutting measures.

Understandably discouraged, Ive harbored thoughts of quitting. Rubinstein intervened, telling Ive that after Apple recovered, they “were going to make history”. Ive still had a resignation letter in his back pocket when summoned to meet with Jobs for the first time in the summer of 1997. They clicked instantly. The first product of the Jobs-Ive partnership was the highly acclaimed translucent, egg-shaped iMac. Its success bought Apple time for the next move.

Esslinger had thought beyond a three-to-four-year Mac reboot, suggesting the quest for Apple should be “digital consumer technology.” This was more than creating smart, elegant devices; it would be a convergence play combining technology and content to deliver new experiences. It would pit Apple directly against Jobs’ first infatuation – Sony, which Esslinger had also worked with and characterized as “asleep” – and firms like Dell, HP, and Samsung, as well as mobile device companies like Motorola and Nokia.

“Steve understood that both technology and aesthetics are a means to do something else on a higher level,” said Esslinger. “Most people think features, performance, blah-blah-blah, whatever. Steve was the first to say, it’s for everybody.” The higher level: “Realize what people would love to dream.”

Music and dreams go hand in hand. In 1999, the MP3 file-sharing service Napster debuted, forever disrupting music publishing. Users could easily browse and download songs to a PC, then transfer them to a personal MP3 player such as the SaeHan MPMan or the Diamond Multimedia Rio PMP300. Napster drew copyright infringement fire from artists including Metallica and Dr. Dre, and even from the Recording Industry Association of America (RIAA) representing the entire industry. However, some lesser-known artists embraced downloading and sharing as a new channel, connecting with their listeners in new ways and freeing them from album production costs.

That sounded exactly like a job for a design-conscious Apple.

Sony dominated personal audio players in 1999, with its latest CD Walkman D-E01 (or Discman) featuring sophisticated “G-protection” for electronic skip mitigation. As a traditional music publisher, Sony was concerned with producing and selling CDs, delivering the highest possible audio quality, and maintaining copyright protection. Personal CD players had an inherent limit in physical size – the compact disc itself – and took considerable battery power to spin the disc.

Flash-based audio players offered smaller physical size and longer battery life, and were skip-free. Sony responded with the Walkman NW-MS7 in September 1999. It combined their MagicGate Memory Stick and proprietary ATRAC3 encoding format – offering higher sound quality than MP3 files. In May 2000, they launched the ‘bitmusic’ site in Japan offering digital downloads priced at ¥350 (about $3.30) per song. This strategy screamed end-to-end protection, built around emerging Secure Digital Music Initiative (SDMI) technology and a cumbersome check-in, check-out procedure implemented with the OpenMG specification.

You’re Doing It Wrong, Here’s an iPod

The personal audio opportunity lay somewhere between two extremes. There were expensive, proprietary players delivering high-quality, highly protected content. There were cheaper players spewing freely shared, lower-quality files that invited legal battles. A digital audio player as a peripheral to a Mac made sense for Apple, if it could safely piece together the rest of a differentiated solution.

In its first move into music, Apple secretly purchased rights to the popular Mac-based MP3 management application SoundJam MP in July 2000, and hired its creators Jeff Robbin and Bill Kincaid (both of whom had worked for Apple previously). Robbin and his team went to work on their next “jukebox” software, launched on January 9, 2001 as the rebranded iTunes 1.0 for Mac OS 9. Incremental releases added support for Mac OS X in the following months. 346,347

iTunes was a hit, compatible with the Rio PMP300 and newer players such as the Creative NOMAD Jukebox – but those devices weren’t “cool” enough for Apple tastes.

An Apple-branded device could take control of the look and feel and enhance the user experience through better iTunes integration. It could also open a new segment, offsetting the dot-com meltdown then in progress. As part of a Mac media hub strategy leveraging FireWire as the interface for transferring large audio and video files, Rubinstein was tasked with making an Apple digital audio player happen.

Flash memory was still very expensive, and that limited how many songs an affordable portable audio player could store. On a factory visit to Toshiba, Rubinstein saw a new 5 GB 1.8” hard disk drive and seized on its potential. Creative was already using a 6 GB 2.5” Fujitsu hard drive in its larger CD-sized unit.

Tony Fadell, a veteran of General Magic and Philips, was starting Fuse Systems with the idea of a hard disk-based music player for homes. Just as things looked darkest and funding was running out, Fadell went on vacation. His holiday was interrupted by a phone call from Rubinstein. Fadell was offered an eight-week contract, with few details.

Upon arrival, Fadell learned of the idea for “1,000 songs in your pocket”. Foam mock-ups representing a device with a display and battery were created, and shown to Jobs, Rubinstein, marketing guru Phil Schiller, Ive, and industrial designer Mike Stazger among others. Schiller’s input was to lose the buttons and add the iconic jog shuttle wheel. Ive and Stazger set off to create a case design. Fadell hired on to run P-68, the code name for the iPod project. 350

FireWire offered a much faster interface than the prevalent USB 1.x of the day. The diminutive Toshiba hard drive was a huge advantage in capacity, size, and weight, while remaining cost-effective. A distinctive case design with a unique interaction method was on the way. What about the chipset and software? Audio decoding required DSP-like horsepower … or an ARM processor.

There had been talk of a one-year development schedule, but as with any consumer electronics product, shipping for the holidays was crucial. The 2001 solution for iPod: bootstrap with external resources. A then-little-known fabless firm came into play. PortalPlayer formed in June 1999 from a group of National Semiconductor execs targeting digital media solutions for consumer devices. They evaluated MIPS and other RISC architectures for how well they could decode MP3s in software, and settled on ARM as their core. Their ‘Tango’ platform was SDMI-compliant with both MP3 playback and record capability, also supporting higher-quality Advanced Audio Coding (AAC) formats.

PortalPlayer did a first version of Tango, the PP5001, fabbed at Oki Semiconductor in 0.25 micron. It integrated the audio playback core, LCD driver, and USB interface. IBM and Sony were working with the PP5001 in 2000, but its 60 MHz single-core implementation using a proprietary co-processor proved too slow, and several flaws hurt yields and performance. Needing a reliable high-volume, high-performance chip to win customers, PortalPlayer engaged with eSilicon, a firm with more experience moving fabless chip designs into foundries for production. The new partnership redid the design, quickly creating the PP5002 with two 90 MHz ARM7TDMI cores, delivering 0.18 micron chips from TSMC in a 208-pin package. This time, it worked.

Apple reportedly benchmarked nine MP3 chips, including parts from Cirrus Logic, Micronas, STMicro, and TI. When Apple called, PortalPlayer dropped everything. The PP5002 produced the best sound and was exactly what Apple was looking for, and Apple wanted to use it off the shelf. Fadell’s team went to work on software for the PP5002, extending playlists, adding equalization, and improving power management, among many enhancements.

An application framework including user interface software came from another small firm, Pixo, run by ex-Apple engineer Paul Mercer. Efforts were made to obfuscate what Pixo was actually working on, with prototypes in shoeboxes, but one thing was clear: Steve Jobs was heavily involved. Notes would show up daily with requests ranging from changing fonts (Jobs insisted on Chicago) to increasing loudness to reducing menu clicks. The iTunes team joined the effort, adding Auto-Sync capability to their iTunes 2 release and harmonizing user interfaces for the device and the Mac.

Apple held a special event to announce the iPod on October 23, 2001. It was a stunning demonstration of how quickly ARM-based chips could enable radically new software and design, in this case just over eight months from concept to shipment. The $399 device with a 160×128 pixel display measured 102x62x20mm, weighed 184g, and offered up to 10 hours of play time before needing a recharge. During the two-month 2001 holiday season, Apple sold 125,000 units.

On the way to nearly 400M iPod units shipped over its lifetime, three other developments stand out. Launched on April 28, 2003, the iTunes Music Store teamed Apple with publishers BMG, EMI, Sony, Universal, and Warner for over 200,000 downloadable songs. Each AAC-formatted song was just 99 cents. Over the next five years, Apple sold 4 billion songs.

PortalPlayer said its actual orders from Apple were “100 times the original forecast.” The company grew up along with the iPod, issuing shares in a NASDAQ public offering in 2004. It moved into a third-generation series of parts, starting with the PP5020, which added USB 2.0, FireWire, and digital video output, and used both TSMC and UMC for foundry services to support the huge demand.

iPods were about to change radically. The 84x25x8.4mm, 22g iPod shuffle broke the $100 barrier in January 2005 by discarding the display and hard drive, using 512 MB of flash and an ARM-based MCU to control it. Samsung then convinced Apple it could supply massive quantities of NAND flash memory at bargain pricing. (More ahead in Chapter 8.) In September, the iPod nano debuted, a fully featured small player – 89x41x6.8mm and 43g – with a 176×132 pixel display and up to 4 GB flash.

Deal making became even nastier as the stakes rose. One day in late April 2006, PortalPlayer was selling chips to Apple. The next day, they were gone. Samsung unexpectedly announced they had replaced PortalPlayer as the processor supplier for future iPods, with an undisclosed ARM core on custom SoCs. Taken off guard, saying Apple “never talked to us” about changing chips, PortalPlayer considered legal action but thought better of it. PortalPlayer agreed to an acquisition by NVIDIA in November 2006, its designs becoming the starting point for the Tegra mobile SoC family.

Several more Apple surprise maneuvers were in progress.

ROKR Phones You’ll Never Buy

Motorola’s last flip phone hurrah was the RAZR V3, a sleek design carved from aircraft-grade aluminum. Just before its launch, new Motorola CEO Ed Zander and his team ventured into Apple headquarters in July 2004 to discuss a possible collaboration with his friend Steve Jobs: an iTunes-compatible phone.

Zander handed Jobs a RAZR prototype, the first effort from the new and improved Motorola. Jobs fondled it with more than passing interest, asking a barrage of questions about its construction and manufacturing. A thought flashed through Zander’s head: “That SOB is going to do a phone.” If Zander had realized just how fast Apple was closing in, he might have backed off the deal. Mobile phone shipments grew 30% in 2004 to 674M units. Nokia grew units but lost four points of share, falling to 30.7%. Motorola gained a point to hold second at 15.4%, and Samsung gained two points in third at 12.6%, followed by Siemens, LG, and Sony Ericsson. Motorola thought an iTunes play could cut further into Nokia’s share. Apple saw Motorola as a way into cellular carriers.

The Motorola ROKR E1, a respun Motorola E398, measured 108x46x20.5mm and weighed 107g. Inside was the same engine powering the RAZR: the Freescale i.250-21 quad-band GSM/GPRS chipset. A DSP56631 baseband processor coupled a Freescale DSP core with an ARM7TDMI core. The phone attached via USB cable to iTunes running on a Mac or PC, and firmware allowed it to hold only 100 songs. A knob-joystick provided navigation.

After numerous delays and consternation over its non-wireless download method, the ROKR E1 was ready to go. The exclusive carrier was Cingular, soon to become AT&T Mobility. Zander wanted to announce ROKR at a Motorola analyst event in July 2005. Jobs and Cingular overruled him, opting for a higher-profile Apple event scheduled for September.

Positioned behind carrier qualification and marketing concerns was a bit of subterfuge. Starting in February 2005, “Project Vogue” had Jobs in top-secret discussions with Cingular – with no Motorola in the room. Jobs was formulating ideas for his own phone, and had already decided he wanted Cingular as his exclusive carrier. Cingular initially balked, allowing the ROKR E1 to proceed.

Some say Jobs didn’t actually want ROKR to succeed. He definitely didn’t like compromises in design and marketing, and he wasn’t impressed with the ROKR E1 itself. Subterfuge was about to escalate, approaching full-scale sabotage.

On September 7, 2005, Jobs took the stage to discuss the digital music revolution. He invoked Harry Potter and Madonna before introducing iTunes 5. Using his signature “one more thing” hook, he then brought out the Motorola ROKR E1. 100 songs. USB cable. “It’s basically an iPod shuffle for your phone.” He then uncharacteristically botched the demo of an incoming call interrupting music, returning to dead air instead of the song. Wrong button, he claimed.

22 minutes later, Jobs blew the room up debuting the iPod nano.

That explosion left Motorola covered in indelible bad ink. Zander blurted out “Screw the nano” in an interview a few weeks later. Those expecting an iPod-plus-RAZR, or even a sorta-iPod alternative, were disappointed. Reviews called ROKR E1 performance “slow” in both downloading and responsiveness. Competing carriers scoffed at the wired downloads. At $249, ROKR E1 sales peaked at just a sixth of the weekly rate for the popular RAZR, with above-average customer returns, and then plummeted despite price cuts.

Once you’re gone, you can’t come back. Motorola continued with ROKR phones – just without iTunes capability. Their next attempt, the ROKR E2, released first in China in June 2006, went with the Intel XScale PXA270 processor and bundled RealPlayer running on Linux.

As it turned out, Jobs had played the ROKR card perfectly. Motorola took all the damage, and Cingular was unscathed. Next to a ROKR E1, a well-executed Apple phone could look fabulous. Customers would have seen the idea of an iTunes phone already, and be anticipating what Apple could do better. All that remained was to persuade Cingular, and actually deliver a better phone.

“Project Purple” and the iPhone

Apple had already started asking what-if touch interface questions as early as 2003, and in late 2004 discussion turned to phones. Software executive Scott Forstall began recruiting within the company for the secretive “Project Purple.”

Preparing to sneak out for his top-secret meeting with Cingular’s Stan Sigman in Manhattan in February 2005, Jobs pressed the Purple user experience team led by Greg Christie for a hard-hitting presentation. He got it, and had Christie deliver it twice more, to Apple director Bill Campbell and to Jonathan Ive. Jobs wanted validation, but he was also absorbing details so he could deliver the pitch himself.

Cingular likely understood what they were seeing was vaporware, but were nonetheless intrigued. An Apple smartphone with Wi-Fi and music capability could be a must-have for consumers – and Jobs offered an exclusive. In return, Jobs wanted control of design and marketing, and to be a mobile virtual network operator (MVNO), bundling service with phones sold in Apple Stores and online.

There were just a few problems. High-profile MVNO deals such as Disney Mobile were making headlines, but no carrier had ever granted a phone vendor the kind of control Jobs was requesting. An Apple phone could draw new subscribers, but how many, and how much data would they pay for?

To break the deadlock, Jobs turned to Adventis, a Boston telecom strategy firm that had consulted on the Disney MVNO. A team led by Raul Katz, including Garrick Gauch and others, descended on Apple headquarters, slides in hand. Jobs walked in, mimed smashing a pretend iPod and phone together in his hands, and stated his case for selling phones with voice and data for $49.95 per month.

Adventis built a story. Jobs and Cingular’s Ralph de la Vega ended up “talking past each other” at their meeting. Jobs framed his request in terms of better phone design, which was too vague. Cingular execs thought in hard terms like phone subsidies, network infrastructure costs, and average revenue per user (ARPU). New-age technology vision ran into old-school, we’re-the-phone-company conservatism. However, Jobs and de la Vega forged a personal bond that grew into trust.

CSMG acquired Adventis and continued discussions with Eddy Cue, Jobs’ designee. In early 2006, Gauch built a detailed model of customer lifetime value (LTV) with variables including ARPU, customer churn, and cost per gross addition (CPGA). The calculations showed ARPU rising by $5, churn dropping significantly, and an unsubsidized $500 Apple phone cutting CPGA by $300.
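To see how those three variables interact, here is a hypothetical sketch using a common textbook simplification of subscriber LTV, not the model Gauch actually built: monthly ARPU multiplied by expected lifetime in months (approximated as one over monthly churn), minus the one-time CPGA. The function name `lifetime_value` and the baseline ARPU, churn, and CPGA figures are invented for illustration; only the +$5 ARPU and -$300 CPGA changes come from the text.

```python
# Hypothetical illustration: a textbook simplification of subscriber lifetime
# value, NOT the model CSMG/Adventis actually built for Cingular.


def lifetime_value(arpu: float, monthly_churn: float, cpga: float) -> float:
    """Return a simplified subscriber LTV in dollars.

    arpu          -- average monthly revenue per user, in dollars
    monthly_churn -- fraction of subscribers lost each month, in (0, 1]
    cpga          -- one-time cost per gross addition, in dollars

    Expected lifetime is approximated as 1 / monthly_churn months (constant
    churn); margins and discounting are ignored for simplicity.
    """
    if not 0 < monthly_churn <= 1:
        raise ValueError("monthly_churn must be in (0, 1]")
    expected_months = 1 / monthly_churn
    return arpu * expected_months - cpga


# Hypothetical baseline figures, chosen only for illustration.
BASE_ARPU, BASE_CHURN, BASE_CPGA = 50.0, 0.02, 400.0

baseline = lifetime_value(BASE_ARPU, BASE_CHURN, BASE_CPGA)
# Apply only the deltas quoted in the text: ARPU +$5 and CPGA -$300.
# Churn is held constant here; a churn reduction would raise LTV further.
apple_phone = lifetime_value(BASE_ARPU + 5, BASE_CHURN, BASE_CPGA - 300)

print(f"baseline LTV:    ${baseline:,.0f}")
print(f"Apple-phone LTV: ${apple_phone:,.0f}")
```

With these made-up inputs, the two quoted changes alone lift LTV from $2,100 to $2,650 per subscriber. Because expected lifetime grows as churn falls, the churn reduction the model also showed would widen the gap further.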

Specifics were what Cingular needed. By July 2006, an exclusive 5-year deal was in place, complete with revenue sharing: a royalty on iTunes Store purchases through phones for Cingular, and $10 per new phone subscriber for Apple. It wasn’t the pure MVNO deal Jobs thought he wanted at the outset, but revenue sharing was all Apple really needed.

Extended negotiations were a break for Apple, because they were still nowhere near ready. From a tiny band of UX designers in early 2005, Project Purple swelled. The familiar rounded rectangular outline emerged in an August 2005 mock-up, and by November, serious software and hardware effort began.

The Purple team looked at Linux, but Jobs insisted on modifying Apple code to maintain control. Mac OS X was far too big, by more than a factor of 10, and Apple had resources tied up in a port to new Intel-based Macs until early 2006. Engineers rotated to Purple and began ripping and stripping code, shaping what became iOS.

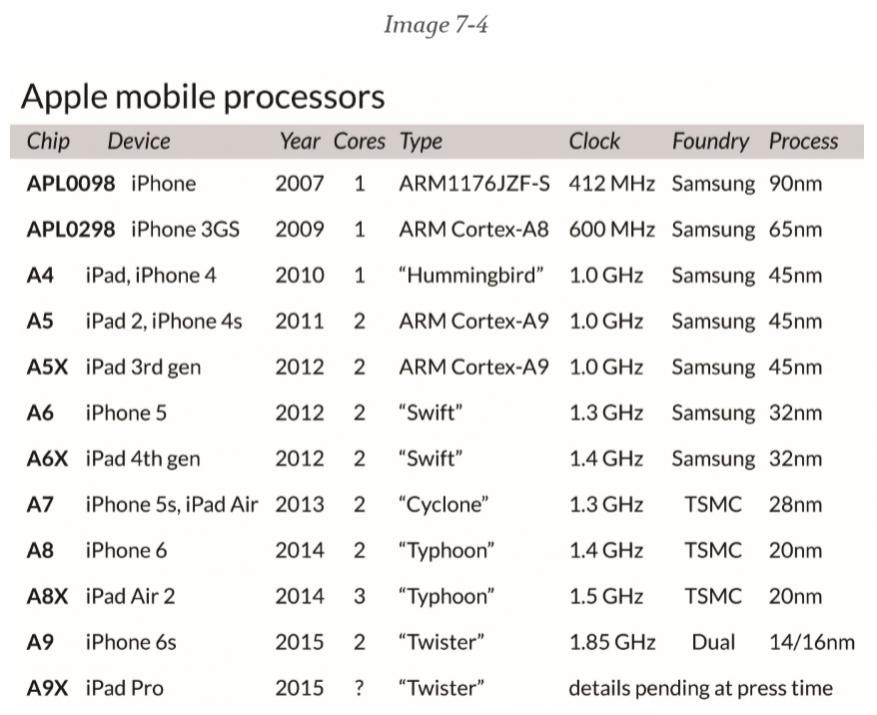

Samsung supplied the S5L8900 processor, labeled APL0098 for Apple. Fabbed in 90nm technology, it packed a 412 MHz ARM1176JZF-S core with an Imagination Technologies PowerVR MBX Lite GPU. One innovation was stacked die, placing two 512Mb SDRAM dies on the SoC in a single package. This allowed faster memory transfers, and reduced circuit board size and overall system cost.

Radio technology was new territory. Ive and his team created an elegant brushed aluminum prototype, but Apple’s RF experts sent them back to the drawing board. Anechoic chambers were built, human heads were modeled to measure radiation, and millions were spent on cellular network simulation.

Trying to smooth out network qualification, Jobs called de la Vega for Cingular’s guidelines. What arrived by email was a 1,000-page document, the first 100 pages of which explained how keyboards should work. Jobs called back and went nuts. de la Vega laughed, assuring Jobs that didn’t apply to Apple. When Cingular’s CTO found out an exception was granted, he went nuts. de la Vega said, more or less, “trust me.”

Everyone from Apple employees to de la Vega was under strict secrecy. Apple teams signing in for visits to Cingular listed their firm as “Infineon”. It was a white lie; they were using the Infineon PMB8876 SGold 2 multimedia engine, with an ARM926EJ-S core and a CEVA TEAKlite DSP core inside.

Only about 30 individuals in Apple had a complete picture. Purple staffers were dispersed across campus. Telling a friend about the project at the bar was grounds for dismissal. Hardware engineers had fake software, and software engineers had simulators or fake boards in fake wooden boxes. Chipset vendors had fake schematics, suggesting a next-gen iPod.

On January 9, 2007, at Macworld, thunderous applause erupted when Jobs said he was introducing a revolutionary mobile phone, along with a widescreen iPod with touch controls and a breakthrough Internet communications device. He repeated that three times, and then revealed they were one device: the iPhone.

Calling out smartphone contemporaries – the BlackBerry Pearl, the Motorola Q, the Nokia E62, and the Palm Treo 650 – as just a little smarter than cell phones, he pointed out they were really hard to use. Mocking a thumb-board and stylus, he introduced multi-touch, and told the story of a slimmed-down Mac OS X, iTunes syncing, and advanced built-in sensors. The live demo, powered by an ARM11 core with a Cingular logotype prominently on the display, was a tour de force.

What happened next was unprecedented. The 115x61x11.6mm, 135g quad-band GSM/EDGE phone with a luxurious 3.5”, 320×480 pixel, 165 pixel per inch display and 2 MP camera went on sale in the US on June 29, 2007. A 4GB model was $499, and an 8GB model was $599. Lines formed waiting for Apple and the just-rebranded AT&T stores to open. 270,000 iPhones sold in the first 30 hours. After only 74 days, 1 million units were in customer hands.
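The 165 pixel-per-inch figure follows from the display’s resolution and size: pixel density is the number of pixels along the diagonal divided by the diagonal length in inches. A minimal check, assuming the nominal 3.5” diagonal quoted above (`pixels_per_inch` is a hypothetical helper name, not from the original):

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density: pixels along the panel diagonal divided by its length in inches."""
    diagonal_px = math.hypot(width_px, height_px)  # sqrt(width^2 + height^2)
    return diagonal_px / diagonal_in


# Original iPhone display: 320x480 pixels, nominal 3.5-inch diagonal.
ppi = pixels_per_inch(320, 480, 3.5)
print(f"{ppi:.1f} ppi")  # prints "164.8 ppi", which rounds to the quoted 165
```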

Even with a spectacular debut, Apple was barely a blip on mobile radar in 2007. Of the 1.1 billion phones shipped worldwide that year, Nokia still held a large lead, recovering 3 points to 37.8%. Motorola was second at 14.3%, Samsung third at 13.4%, followed by Sony Ericsson and LG. Siemens had disappeared from mobile entirely: its handset business was sold to BenQ, whose BenQ Mobile unit then went bankrupt. A note in a Gartner press release: “The market saw three new entrants into the top ten in the fourth quarter of 2007 … Research in Motion, ZTE, and Apple.”

Faster Networks and Faster Chips

Data monsters required feeding. One of the few complaints with the iPhone was its slow 2G EDGE capability. Moving up to 3G and HSDPA seemed urgent. There was already big trouble brewing for AT&T. Those questions about how much data iPhone subscribers would use had been answered – 50% more than AT&T projections. Urban hubs like New York and San Francisco experienced the worst service issues. AT&T pointed to iPhone customers consuming 15 times more bandwidth than the “average smartphone customer”; read that as an email user who did occasional web surfing with a primitive mobile browser.

AT&T asked Apple to consider data throttling.

Apple told AT&T to fix their network.

They were both wrong. AT&T claimed it had spent $37B on 3G infrastructure since the iPhone deal, and was preparing to spend another $17B on an HSPA speed upgrade, and billions more on 4G LTE when it was ready to launch. Apple’s early baseband code was sloppy, leading to dropped calls and excessive bandwidth usage. Fortunately, Wi-Fi in the iPhone allowed much of the data traffic to be offloaded from the network, heading off an even bigger fiasco. 394

RF school was back in session. Thinking AT&T might be the problem, Scott Forstall and his iPhone team paid a visit to Qualcomm in late 2007. What they found was that changing carriers, to Verizon for instance, required changing baseband chips. There were physical problems with board layouts, and software problems, but in the end, there was another complex set of carrier qualification issues. The Infineon choice had been great in Europe (its home turf) and OK in the US. A hasty switch from Infineon to Qualcomm would solve some issues but create others, and a Verizon network might not hold up any better.

Trust was strained, but the Apple-AT&T partnership continued.

Apple quickly spun the iPhone 3G, using the same Samsung S5L8900 processor but upgrading the baseband chip to an Infineon PMB8877 XGold 608 with both an ARM926EJ-S and an ARM7TDMI-S inside. GPS was added, and a slightly bigger case, available in black or white, squeezed in a better battery, increasing 2G talk time to 10 hours. iOS 2.0 debuted with the new App Store. The phone went on sale in 22 countries on July 11, 2008 for a much lower price – $199 for an 8GB model, and $299 for 16GB. 1 million units sold in 3 days.

Gunning for more speed, the iPhone 3GS carried a new chip when it appeared on June 19, 2009. The Samsung S5L8920 processor (later known as the S5PC100), labeled as the Apple APL0298, delivered a 600 MHz ARM Cortex-A8 core with an Imagination PowerVR SGX535 GPU. Its initial versions were in 65nm. The same Infineon PMB8877 baseband processor was used, and a single Broadcom BCM4325 chip replaced separate Marvell Wi-Fi and CSR Bluetooth controllers. A new 3 MP camera was incorporated, and iOS 3.0 was included.

During the iPhone introduction, Jobs had quoted Alan Kay, “People who are really serious about software should make their own hardware.” Software seriousness was a given at Apple. For mobile devices, the lessons of the iPod and iPhone showed that making hardware now meant licensing IP and making SoCs.

A VLSI design team within Apple had done logic designs – for example, a custom ASIC in the Newton – and had been collaborating with IBM on Power Architecture processors and creating larger “northbridge” parts for Macs. There hadn’t been a start-to-finish Apple processor design attempt since the Aquarius project two decades earlier. Designing a SoC in RTL using ARM processor IP and Samsung libraries was certainly feasible, but it would have to be much more efficient than desktop designs Apple was accustomed to working with.

Expertise was nearby. Dan Dobberpuhl, of StrongARM fame, formed fabless firm P. A. Semi in 2003 with industry veterans including Jim Keller and Pete Bannon. They embarked on Power Architecture research, creating the PA6T core and the highly integrated PWRficient family of processors. PWRficient featured an advanced crossbar interconnect along with aggressive clock gating and power management. Its near-term roadmap had single and dual 2 GHz 64-bit cores. The approach delivered similar performance to an IBM PowerPC 970 – the Apple G5 – at a fraction of the power consumption. This made PWRficient well suited for laptops or embedded applications.

Timing of PWRficient was inopportune. Before P. A. Semi got its first parts out in early 2007, Apple had switched from PowerPC to Intel architecture. Prior to the changeover, there had certainly been in-depth discussions between Apple and the P. A. Semi design team – but there was no going back for the Mac.

Niche prospects such as defense computing and telecom infrastructure made P. A. Semi viable, and they continued forward. Unexpectedly, Apple bought P. A. Semi and many of its 150 employees in April 2008 for $278M. PWRficient disappeared instantly. The US Department of Defense staged a brief protest over the sudden unavailability. Apple brokered “lifetime” purchases of the processor and a final production run, satisfying both buyers and bureaucrats.

Now Serving Number 4

For months prior to the P. A. Semi acquisition, rumors ran rampant that, with Apple having switched the Mac to Intel processors, the iPhone would be next, moving to Intel’s latest “Silverthorne” Atom processor. Aside from an unnamed source quoted in numerous outlets, this was never more than media speculation.

According to ex-CEO Paul Otellini, Intel had been in discussions about a mobile chip for Apple before the original iPhone design. The cloak of secrecy on the iPhone gave Intel little to go on, and they were skeptical of Apple’s volume projections. “There was a chip [Apple was] interested in that they wanted to pay a certain price for and not a nickel more, and that price was below our forecasted cost. I couldn’t see it,” said Otellini. Intel passed.

Once again, power, cost, and customization favored ARM in mobile.

Samsung did the physical layout, fabbed, packaged, and shipped three SoCs with ARM cores to Apple RTL specifications: the APL0098 for the iPhone, the APL0278 for the 2nd gen iPod Touch, and the APL0298 for the iPhone 3GS. For chip number 4 in the sequence, Apple would take over the complete SoC design from RTL to handoff for fab.

With the P. A. Semi acquisition in place and a VLSI design team bolstered with that new engineering talent, Apple signed a deal with ARM during the second quarter of 2008. ARM was non-specific when discussing its prospects in mobile on its earnings call, referring briefly to “an architecture license with a leading OEM for both current and future ARM technology.” The agreement was for multiple years and multiple cores, and an architecture license gave Apple rights to derivative core designs.

Many SoCs were hitting the market with the ARM Cortex-A8 core, and the Samsung S5PC100 was already in the pipeline. Apple wanted to get beyond what was widely available, and to get in front of Samsung, which was about to launch its own flagship smartphone. Apple was also about to launch an entirely new device, one that would stretch mobile processing power farther than ever before.

For a new core, Apple could have asked the P. A. Semi team to develop an ARMv7-compliant design. That would have taken 3 years, or longer. Another Power Architecture refugee was making its name redesigning cores with unique technology – and they were already working on an effort for Samsung.

Intrinsity, formed from the ashes of PowerPC chip designer Exponential Technology, had one of the hottest technologies in SoC design. Their Fast14 methodology combined multi-phase clocking with multi-valued logic built from domino circuits and 1-of-N encoding. The approach left instruction cycle behavior unchanged, but greatly boosted performance while simultaneously lowering power consumption. Their first big success in 2002 was FastMATH, a 2 GHz MIPS-based signal processor chip.
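The 1-of-N idea can be illustrated in a few lines of Python. This is a minimal sketch of 1-of-N signal encoding in general, not Intrinsity’s Fast14 circuitry, which the text does not describe in detail. The function names are hypothetical. A value from 0 to N-1 travels on N wires with exactly one wire high. The all-low state stands for the precharge phase of a domino stage, when no data is valid yet.

```python
from typing import List, Optional


def encode_1_of_n(value: int, n: int) -> List[int]:
    """Return N wire levels with only wire `value` driven high."""
    if not 0 <= value < n:
        raise ValueError(f"value {value} out of range for 1-of-{n}")
    return [1 if i == value else 0 for i in range(n)]


def decode_1_of_n(wires: List[int]) -> Optional[int]:
    """Return the encoded value, or None for the all-low (precharge) state."""
    high = [i for i, w in enumerate(wires) if w]
    if not high:
        return None  # null state: the stage has not evaluated yet
    if len(high) > 1:
        raise ValueError("invalid code: more than one wire high")
    return high[0]


# Two binary bits (four possible values) map onto one 1-of-4 group of wires.
for v in range(4):
    print(v, encode_1_of_n(v, 4))
```

Every valid code raises exactly one wire per group. As a result, one evaluation causes a single transition per group no matter what the data is. Dual-rail encoding of the same two bits also uses four wires, but it switches two of them.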

On July 27, 2009, Intrinsity announced “Hummingbird”, an implementation of the ARM Cortex-A8 core using Fast14 on a Samsung 45nm LP process reaching 1 GHz. Retaining strict instruction and cycle accuracy meant no operating system or application software changes were needed. Intrinsity touted that its semi-custom approach could yield a SoC design in as little as four months.405

Exactly six months later, on January 27, 2010, Apple announced the iPad with a new chip inside: the Apple A4. It powered the tablet’s 9.7”, 1024×768 pixel multi-touch display, running the same iOS as the iPhone family along with apps enhanced for the larger screen. A Wi-Fi iPad model with 16GB of storage started at $499, with models including 3G connectivity priced up to $829. One million units sold in the first 28 days of availability.

The A4 chip was fabbed at Samsung on its 45nm LP process, featuring a 1 GHz Hummingbird core paired with an Imagination PowerVR SGX535 GPU at 250 MHz. One pundit suggested it “just [wasn’t] anything to write home about”, other than its cachet as an Apple-only device. Another group of observers termed it evolutionary, not revolutionary. However, its relative speed to market and the associated cycles of learning made it a stepping-stone to bigger things.

In March 2010, Apple bought Intrinsity for $121M. Fast14 disappeared – much to the chagrin of AppliedMicro, which was working with Intrinsity on a new Power Architecture core. Apple refunded $5.4M to AppliedMicro in appeasement.

The A4 appeared next in the iPhone 4, introduced on June 7, 2010. Even with a lower 800 MHz clock speed, the extra processing power enabled new features such as a 5 MP camera, FaceTime video calling, and an enhanced 3.5”, 960×640 pixel, 326ppi “retina” display. Apple proclaimed the iPhone 4 the thinnest smartphone ever at 9.3mm. Two basebands were supported: a CDMA version using a Qualcomm MDM6600, and an HSPA version using the Infineon PMB9801. In the first three days of iPhone 4 sales, Apple racked up 1.7 million units.

Mobile phone leadership was in a full-scale shakeup. Closing 2010, the worldwide mobile device market was at 1.6 billion units. Nokia still held first place with 28.9% share. Samsung had taken over second at 17.6%, and LG was in third with 7.1%. Smartphones were no longer “other”. Research in Motion held 3.0%, and Apple was fifth on the list with 2.9% and 46.6 million units shipped. Both Sony Ericsson and Motorola had shriveled, with ZTE, HTC, and Huawei on their heels.

Viewed by operating system, the shakeup was even more ominous. Symbian was still first in units but plummeted nearly 10 points in share to 37.6%, and the fall was accelerating. Android was in second and had grown almost 19 points to 22.7%. BlackBerry barely hung on to third with 16%, having shrunk 4 points. iOS was in fourth with 15.7% and was the only other platform growing. Far behind was a scuffling Microsoft in fifth at 4.2%. A two-horse race was developing.

Namaste, and a “Swift” Response

Alternating volleys of tablets and phones from Apple were in progress. Anxious users expected new SoCs for each subsequent generation. In his last major product introduction, Steve Jobs made an appearance while on medical leave to introduce the iPad 2 on March 2, 2011. It added front and rear cameras and a MEMS gyro, and reduced tablet thickness to 8.8mm.

Its A5 chip held two ARM Cortex-A9 cores on Samsung 45nm. Rumors had Intrinsity working on a Cortex-A9-like core pre-acquisition, but die photos revealed the A5 cores were stock ARM IP. Dual 1 GHz Cortex-A9 cores were fast, although not quite “twice as fast” compared to the A4 as Apple suggested. Graphics had improved, with an Imagination PowerVR SGX543MP2 clocked at 250 MHz running apps from three to seven times faster.

Analysts noted the A5 die, at 122.2mm², was huge compared to other dual-core Cortex-A9 parts. Integrated on the A5 was an image signal processor (ISP) for handling computational photography. It also had noise-reduction technology from Audience, with a DSP managing inputs from multiple microphones. On October 4, 2011, Tim Cook introduced the iPhone 4S with Siri voice-recognition technology powered by the A5. In the new phone, there was an 8 MP camera with 1080p video and digital stabilization. Qualcomm took over as the sole baseband chip supplier with the MDM6610.

The next day, Steve Jobs left this world, quietly and with dignity. Although nobody could ever replace Jobs, Tim Cook was fully prepared to carry on, having assumed CEO duties with Jobs’ recommendation on August 24, 2011. Cook had driven Apple operations since 2007, keeping Apple focused and highly cost competitive even as Jobs’ health deteriorated.

Opening the multicore SoC box for tablets had placed Apple in a battle with a relatively new foe – NVIDIA. The Tegra family with its Android support was finding homes in tablets positioned against Apple’s iPad. The latest Tegra 3 debuted in November 2011 with quad ARM Cortex-A9 cores along with a low-power companion core and a 12-core GeForce GPU.

A problem developing for Apple was how to give its tablets more performance without penalizing its phones. Using the same chip in both would have raised phone cost and power consumption. Apple was using advanced dynamic voltage and frequency scaling, which helped phones, but there was more headroom available. The solution was bifurcating the SoC family. This explains why Apple went with stock Cortex-A9 cores in the A5: there was a second chip in rapid development.
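Dynamic voltage and frequency scaling has a lot of room to work with, and a first-order model shows why. A common estimate of CMOS switching power is P = α·C·V²·f. The sketch below evaluates that model at two operating points. It assumes only this textbook model. The activity factor, capacitance, voltages, and clock rates are hypothetical values, not Apple data.

```python
def dynamic_power(alpha: float, cap_farads: float, volts: float, hertz: float) -> float:
    """First-order CMOS switching power model: P = alpha * C * V^2 * f (watts)."""
    return alpha * cap_farads * volts ** 2 * hertz


ALPHA = 0.2  # hypothetical activity factor (fraction of capacitance switching per cycle)
CAP = 1e-9   # hypothetical switched capacitance, farads

high = dynamic_power(ALPHA, CAP, 1.1, 1.0e9)  # hypothetical "fast" point: 1.1 V, 1 GHz
low = dynamic_power(ALPHA, CAP, 0.8, 0.5e9)   # hypothetical "slow" point: 0.8 V, 500 MHz

print(f"fast: {high:.3f} W, slow: {low:.3f} W, ratio: {low / high:.2f}")
```

Halving the clock by itself would only halve dynamic power. Voltage enters the model squared, so lowering it at the same time brings this example down to roughly a quarter of the fast-point power.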

The first of the tablet-focused chips, the A5X, appeared in the 3rd generation iPad introduced on March 7, 2012. Where press expected a quad-core chipset, what everyone got was another dual Cortex-A9 design. However, Phil Schiller was ready with a quad-core graphics story, supporting more pixels on the tablet’s 2048×1536, 264ppi retina display.

The A5X held two key improvements: a bigger Imagination PowerVR SGX543MP4 GPU and a 128-bit wide memory subsystem doubling the 64-bit capability in the A5. Freeing up the memory bottleneck delivered results, with the A5X beating the Tegra 3 on most graphics benchmarks. While Tegra 3 did outperform on CPU benchmarks, real-world tablet use rarely got that intense.
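Widening the memory interface pays off for a simple arithmetic reason. Peak bandwidth equals the bytes moved per transfer multiplied by the transfer rate. The sketch below assumes a hypothetical DRAM rate of 800 MT/s for both chips, so bus width is the only difference. The text does not give the real A5 and A5X memory clocks.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, mega_transfers_per_s: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * mega_transfers_per_s * 1e6 / 1e9


RATE_MT_S = 800  # hypothetical DRAM transfer rate, identical for both configurations

a5_like = peak_bandwidth_gb_s(64, RATE_MT_S)    # 64-bit interface, as in the A5
a5x_like = peak_bandwidth_gb_s(128, RATE_MT_S)  # 128-bit interface, as in the A5X
print(a5_like, a5x_like)
```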

In the wings, instead of quad-core processing, Apple had a “Swift” response – the custom core everyone had waited for since the ARM architecture license was granted. Two of the new 1.3 GHz custom cores running the ARMv7 instruction set appeared in the A6, fabbed on a Samsung 32nm high-k metal gate (HKMG) low-power process that reduced its die size to 97mm². With a clear break between lines, the A6 fell back to a 64-bit memory subsystem and a three-core PowerVR SGX543MP3 GPU, saving on power and cost for the next phone.

4G LTE was the highlight of the iPhone 5, introduced with the A6 on September 12, 2012. The redesigned phone was slightly larger to fit a 4”, 1136×640 pixel, 326ppi display. Yet, thanks to an aluminum case, it was thinner and lighter, at 123.8×58.6×7.6mm and 112g. The iPhone 5 also featured the new Lightning connector. A Qualcomm MDM9615M baseband chip delivered the 4G LTE capability. Five million units shipped in the first weekend.

The A6X came shortly thereafter, cranked up with 1.4 GHz dual “Swift” cores with the quad-core PowerVR SGX554MP4 at 300 MHz and a slightly faster 128-bit memory subsystem, again on Samsung 32nm HKMG LP.426 The 4th generation iPad with the A6X was announced on October 23, 2012, immediately replacing the 3rd generation iPad announced just six months earlier. A combination of factors was at work: a nod to how much more competitive the A6X with “Swift” was compared to the A5X with Cortex-A9, moving the user base into Lightning connectors, and creating separation from the new iPad mini with a die-shrunk Samsung 32nm A5 chip inside. More records fell as first weekend sales of the tablet pair topped 3 million units.

“Cyclone” and The 64-bit Question

Qualcomm was both a supplier and competitor for Apple. Increasing acceptance of Snapdragon processors with their custom “Scorpion” core and more advanced “Krait” core on the way meant Apple needed a bigger leap ahead in processor technology.

ARM announced its next innovation in October 2011, showing off the ARMv8 architecture in all its 64-bit glory. Many analysts saw that as the official move into server space. Suppliers of 32-bit ARMv7 multicore server chips, such as Calxeda, met a slow and painful death as opportunities dried up. In an Intel and AMD context, 64-bit multicore was a necessity for servers.

But, for mobile? Analysts giggled. Who needs that much physical memory in a phone? Wouldn’t a 64-bit processor take too much power? Where is the mobile operating system for 64-bit, and who is going to rewrite apps to take advantage of it? The consensus was in mobile, 64-bit wouldn’t happen quickly, if at all.

Dead wrong. What ARM and Apple sought was not physical memory, but virtual memory – managing multiple applications in a relatively small memory space by swapping them in and out from storage. This capability would be critical in getting one operating system to run on multiple devices. Also in play were benefits of wider registers in the processor, and wider paths between execution units in the SoC and memory, removing bottlenecks and increasing performance.
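The address-space side of the 64-bit argument is plain arithmetic. A flat 32-bit pointer can name at most 2^32 bytes, which is 4 GiB. A 64-bit pointer removes that ceiling. In practice, the original ARMv8-A architecture caps translated virtual addresses at 48 bits. The helper name below is illustrative only.

```python
def address_space_bytes(pointer_bits: int) -> int:
    """Number of distinct byte addresses a flat pointer of this width can name."""
    return 2 ** pointer_bits


GIB = 2 ** 30  # bytes in one gibibyte

print(address_space_bytes(32) // GIB)  # 32-bit ceiling, in GiB
print(address_space_bytes(48) // GIB)  # 48-bit virtual addresses, in GiB
print(address_space_bytes(64) // GIB)  # full 64-bit pointer width, in GiB
```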

The rest of the mobile world was pressing for quad-core and octa-core, and leveraging the ARM big.LITTLE concept – octa-core was really two clusters of four processors, one for low power and one for bursts of performance. By October 2012, ARM had announced their first 64-bit ARMv8-A cores, the Cortex-A57 for the big side, and the Cortex-A53 for the LITTLE side. Android had yet to catch up with a 64-bit release, and many SoC vendors stood on the 64-bit sidelines waiting.
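A big.LITTLE design depends on software to decide which cluster should run each task. The toy policy below is a hypothetical threshold scheme with hysteresis, written only to show the idea. It is not ARM’s scheduler or any operating system’s, and the thresholds are made up.

```python
from dataclasses import dataclass

UP_THRESHOLD = 0.8    # hypothetical: move to the big cluster above 80% load
DOWN_THRESHOLD = 0.3  # hypothetical: return to the LITTLE cluster below 30% load


@dataclass
class Task:
    name: str
    cluster: str = "LITTLE"  # tasks start on the low-power cluster


def place(task: Task, load: float) -> str:
    """Move a task between clusters based on its recent load, with hysteresis."""
    if task.cluster == "LITTLE" and load > UP_THRESHOLD:
        task.cluster = "big"
    elif task.cluster == "big" and load < DOWN_THRESHOLD:
        task.cluster = "LITTLE"
    return task.cluster


game = Task("game")
trace = [0.2, 0.9, 0.6, 0.25, 0.5]  # hypothetical load samples
print([place(game, load) for load in trace])
```

The two thresholds are deliberately far apart. A task whose load hovers near one of them therefore does not bounce back and forth between clusters, and each migration it avoids saves time and energy.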

Apple was having none of that. Surprising just about everyone, Apple got in front of the 64-bit mobile SoC wave on September 10, 2013. The A7 chip and its dual custom “Cyclone” cores were supported in iOS 7 and inside the new iPhone 5s. Why Jobs pushed so hard to create iOS from Mac OS X was becoming clear.

The iPhone 5s carried enhancements in its camera and lens system, along with a new fingerprint sensor. However, the A7 and another ARM-based chip making its first appearance were the biggest developments.

Compliant with the ARMv8 specification, “Cyclone” doubled the number of general-purpose registers to 31, each 64 bits wide, and doubled floating-point registers to 32, each 128 bits wide. The A7 increased cache, with a 64KB data and 64KB instruction L1 for each core, a 1MB L2 shared by both cores, and a 4MB L3 for the CPU, GPU, and other resources. In the iPhone 5s, cores were clocked at 1.3 GHz. Cache latency was half of that seen with “Swift”, memory latency was 20% improved, and sustained memory bandwidth was 40 to 50% better.

Despite persistent rumors in the media that Apple was plotting to switch suppliers, the A7, like its predecessors, was still fabbed by Samsung, as SemiWiki correctly predicted eight months before product release. On a 28nm process, the A7 was compact, with over 1 billion transistors in 102mm². Along with the enhanced CPU cores and cache, it featured the Imagination PowerVR G6430 GPU, a four-cluster engine.434,435 Also inside the iPhone 5s was the M7 “motion co-processor”, a fancy name for a customized NXP LPC18A1 microcontroller with an ARM Cortex-M3 core. Its job was sensor fusion: combining readings from the gyroscope, accelerometer, and compass in real time and offloading those tasks from the main processor. The M7 was the subject of “Sensorgate”, in which uncorrected accelerometer bias led to app errors, eventually fixed in the iOS 7.0.3 update.
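Sensor fusion of the kind assigned to the M7 is often introduced with a complementary filter. The gyroscope is integrated for smooth short-term motion, and the accelerometer’s gravity reading cancels long-term drift. The text does not say which algorithm the M7 runs. What follows is therefore a generic single-axis sketch built on assumptions:

- each sample holds the gyro rate in rad/s plus accelerometer x and z in g;
- the compass is ignored;
- the blend factor of 0.98 is hypothetical.

The sketch also shows why an uncorrected accelerometer bias matters: unless the offset is subtracted first, the filter settles on the wrong tilt.

```python
import math
from typing import Iterable, List, Tuple


def fuse_pitch(samples: Iterable[Tuple[float, float, float]],
               dt: float,
               alpha: float = 0.98,
               accel_bias: Tuple[float, float] = (0.0, 0.0)) -> List[float]:
    """Complementary filter: blend integrated gyro rate with accelerometer tilt.

    Each sample is (gyro_rate_rad_s, accel_x_g, accel_z_g); returns pitch estimates.
    """
    pitch = 0.0
    estimates = []
    for rate, ax, az in samples:
        ax -= accel_bias[0]  # subtract known accelerometer offsets before use
        az -= accel_bias[1]
        accel_pitch = math.atan2(ax, az)  # tilt implied by the gravity vector
        # Trust the gyro short-term, and pull slowly toward the accelerometer tilt.
        pitch = alpha * (pitch + rate * dt) + (1 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


true_tilt = 0.1  # radians; the device is held still at this angle
bias = 0.05      # hypothetical uncorrected accelerometer x-axis offset, in g
still = [(0.0, math.sin(true_tilt) + bias, math.cos(true_tilt))] * 1000

raw = fuse_pitch(still, dt=0.01)[-1]                            # bias left in
fixed = fuse_pitch(still, dt=0.01, accel_bias=(bias, 0.0))[-1]  # bias removed
print(round(raw, 3), round(fixed, 3))
```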

Both the A7 and M7 went to work in the iPad Air and the enhanced iPad with Retina Display beginning October 22, 2013. In the iPad Air, the A7 was upclocked to 1.4 GHz, running iOS 7.0.3. Keeping the same 9.7” display as the 4th generation iPad, the iPad Air was 20% thinner at only 7.5mm, and weighed 28% less at 469g. New antenna technology for MIMO enhanced Wi-Fi.

With 64-bit processing and 64-bit memory controller enhancements in the A7, and an iPad refresh complete, Apple passed on an A6X-style enhancement with a 128-bit memory interface for tablets. Whether by planning or coincidence, Apple avoided conflict over the #A7X hashtag with Avenged Sevenfold in social media. (Well played, Apple.)

Nothing circa 2013 in 28nm came close to the “Cyclone” core, especially in mobile space. Compared to 3-wide instruction decode in the ARM Cortex-A57, “Cyclone” issued 6 instructions at a time into a massive 16-stage pipeline. For the next round in 2014, Apple tweaked the core under the name “Typhoon”, improving add and multiply latency by one cycle each, and speeding up L3 cache accesses.439 The biggest improvement in the A8 was a foundry and process change to a more advanced TSMC 20nm node. Apple bandied a 2 billion transistor count, yet the chip was just 89mm². While not making sweeping microarchitecture changes, Apple teams had gone through the SoC design, optimized performance and power consumption, and adapted it for TSMC processes. A PowerVR GX6450 quad-core GPU gave the A8 some 84 times the graphics power of the original iPhone, while the dual-core 1.4 GHz processing power had increased 50 times.

Bigger was the theme for iPhone 6 with the A8 and iOS 8. Two versions debuted on September 9, 2014, one with a 4.7” display and 1334×750 pixels, and the second dubbed “Plus” featuring a 5.5” display with 1920×1080 pixels. A new M8 motion coprocessor, again a customized NXP LPC18B1 microcontroller, was inside. For the first time Apple incorporated an NFC chip in a phone, the NXP PN548 with an ARM Cortex-M0 core. Qualcomm’s MDM9625M provided baseband, including 4G LTE carrier aggregation technology.

Bigger it was. A record 10 million iPhone 6 units sold in the first weekend. 445

For the A8X, Apple planned two new tricks for its tablet refresh. The TSMC 20nm process allowed many more transistors on a chip yet stayed within power limits. Apple started with three enhanced “Typhoon” cores at 1.5 GHz. Next came an Apple-concocted GPU using Imagination IP – essentially bolting two PowerVR GXA6450s together for eight graphics clusters. With an increased 2MB L2 cache and the 128-bit memory subsystem, the result was 3 billion transistors on a 128mm² die.

Slimming down to just 6.1mm thick, the iPad Air 2 launched on October 16, 2014 with the A8X inside. The iPad mini 3 was also announced, staying on the A7 chip. Apple didn’t trumpet first weekend shipments – a sign the tablet market was shifting, and faster processors alone would not be the answer.

Calling the Shots in Fabless

For a company that was not in the phone or chip business at the start of 2007, Apple now dominates both the mobile and fabless ecosystems based on design prowess and sheer volumes. Their voyage has gone from buying parts for iPods to feeling their way around a processor specification for the first iPhone to designing highly optimized SoCs with custom 64-bit ARMv8-A processors and multicore GPUs.

1.9 billion mobile phones shipped worldwide in 2014. With all phones taken into account, Samsung leads with 20.9%, followed by Apple with 10.2%, Microsoft (Nokia, assimilated) with 9.9%, and Lenovo (Motorola, expatriated) at 4.5%. BlackBerry is now in “other”, while half of the top 10 names are Chinese: Lenovo, Huawei, TCL, Xiaomi, and ZTE. Taking just smartphones, the iPhone 6 introduction pushed Apple over Samsung by 1.8 million units in the fourth quarter of 2014.

The iPhone 6s ushered in the Apple A9 chip, along with the 12.9” iPad Pro and its A9X SoC, on September 9, 2015. It is intriguing that both devices launched at the same event, a timing change for Apple. Both run the latest iOS 9. Details on the A9 and A9X and their new ARMv8-A “Twister” core are just coming out. Initial reports indicate L2 cache has tripled, from 1MB on Typhoon to 3MB on Twister, and L3 has doubled to 8MB. LPDDR4 memory support has been added, doubling bandwidth, and DRAM and cache latency have also been reduced. Branch prediction latency has been cut significantly. These steps, plus an increase to 1.85 GHz and a few other tweaks, mean the A9 delivers a 50 to 80% bump in performance over the A8.

Incremental improvements in the iPhone 6s include an enhanced 12 MP camera, 23 LTE bands from a Qualcomm MDM9635, 3D Touch for pressure-sensitive “taptic” gesturing, and integration of the motion coprocessor on the A9. Once again, even without major new features, the iPhone 6s shattered shipment records, 13M in its first three days.450 The iPad Pro goes large, with 5.6 million pixels and a stunning new input device: the Apple Pencil. (Steve Jobs must have rolled over in his grave upon hearing that.)

Even in a smartphone world that is now 80.7% Android, Apple has an enormous amount of influence because of its iconic status and singular focus, compared to a fragmented pile of vendors. Focusing is about saying no – and that made Apple a $700 billion company based on market capitalization in 2015.

That gives Apple freedom to write very big checks for what they need.

Billions of dollars in foundry business shifted in 2015, as Apple switched some of its orders back to Samsung and a tuned 14nm FinFET LP process for the A9 amid higher volumes of the iPhone 6s. TSMC retained some A9 business (more on the dual sourcing controversy in Chapter 8) and the lower volume A9X business, on its enhanced 16nm FinFET++ process offering higher performance for the iPad Pro. Other TSMC customers, including Qualcomm and MediaTek, may see capacity loosen up as a result.

Demand for Apple mobile devices should continue to be strong. That assumes Tim Cook and his team keep Taylor Swift and millions of Apple Music listeners happy, execute on the IBM MobileFirst for iOS partnership to help tablets, and win with new ideas such as Apple Pay and the Apple Watch. (More ahead in Chapter 10.) The news to watch will be how the foundry business keeps up as Apple and others run into the 10nm process cauldron in 2016 and beyond.

6 Replies to “How Apple Became a Force in the Semiconductor Industry”
