Automotive applications are having a tremendous influence on semiconductor design. This influence comes from innovations in cloud computing, artificial intelligence, communications and sensors, all of which serve the requirements of the automotive market. It should come as no surprise that ADAS and autonomous driving are creating the majority of the push in each of these areas. At DAC this year in San Francisco, there was plenty of buzz about all things automotive. I was able to attend a very informative lunch event hosted by Synopsys, "Automotive Drives the Next Generation of Designs".

The event was kicked off by Burkhard Huhnke, Synopsys VP of Automotive Business Development. Each of the four panel members, representing a wide swath of the industry, gave a presentation. First was Jonathan Colburn, Distinguished Engineer at Nvidia. He was followed by Dr. Akio Hirata, Chief Engineer at Panasonic. Next up was Hideki Sugimoto, CTO at NSI-TEXE. Last came an informative presentation by Tom Quan, Director of OIP Marketing at TSMC. The panel included chip makers, IP providers and a foundry.

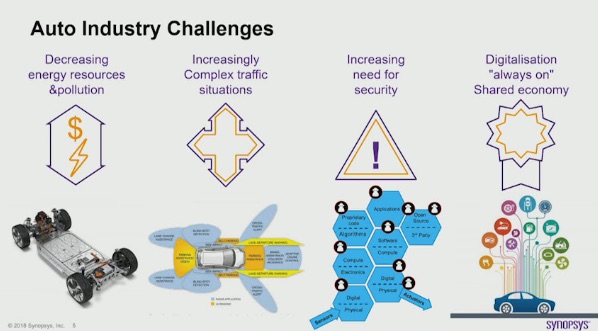

There were several important themes in the talks. Burkhard opened by speaking about the motivation for ADAS and autonomous driving. Apparently, 84% of accidents are caused by human error. So, while automation cannot eliminate all of these accidents, they are low-hanging fruit for improving safety. With over 100 people a day being killed in car accidents, reducing the accident rate is a goal we should pursue. There is also a strong economic argument for reducing accidents: their direct and indirect costs run into the hundreds of billions of dollars per year.

Several speakers independently pointed out that the need for automation is greatest for the least complex and the most complex driving tasks, because these are the situations in which humans perform most poorly. Examples of complex driving tasks include merging onto a busy freeway and turning at intersections. The least complex driving situations are those where distraction or loss of attention can occur, such as long-distance trips or traffic jams.

Nvidia, Panasonic and NSI-TEXE all talked about the changing needs for computing. Heterogeneous computing is universally considered the optimal solution for training and recognition functions. NSI-TEXE sees a large role for flow computing in delivering the quickest response to emergency events or system failures. Nvidia, as you might expect, touted the advantages of mixing GPUs with CPUs. One interesting twist that Jonathan mentioned was that Nvidia uses some of its gaming technology to modify training data, altering conditions to create realistic but hard-to-recreate training scenarios. It's a given that the quantity and quality of training data have a big effect on the quality of recognition. Using real-world physics, Nvidia can create virtual training data and, as a result, generate vast numbers of hours of training data that simply would not be available any other way.

In the TSMC talk, Tom Quan spoke about the company's efforts to support the automotive market. By 2020, the electronics in most cars will expand beyond the passive safety, infotainment and vehicle control categories to include many new functions. There will be big changes in autopilot and ADAS. New communications capabilities will include 4G/5G, V2X and over-the-air updates. To help the environment and improve efficiency, there will be greener engine controls for EVs and HEVs. To help drivers, there will be natural interfaces for voice command and gesture control, as well as recognition tasks such as driver alertness detection and personalization based on face recognition. These new categories will incorporate more and more TSMC technologies.

Infotainment uses 28nm and 16nm, ADAS and partial autonomy use 16nm, and highly autonomous driving will require 7nm, which will become prevalent after 2020. One area of particular interest for TSMC in the automotive market is sensor technology. Many cars already have 7 to 21 sensors, including multiple LIDAR, camera, radar, ultrasound and NIR camera units. Higher levels of automation will require the number of sensors to grow significantly. NSI-TEXE pointed out that sensor data will require increased processing to extract every bit of useful information and make autonomous driving systems more reliable. Key TSMC technologies in the sensor domain include MEMS, eNVM and high-voltage processes such as BCD.

I can only skim the interesting content from this session here. Fortunately, Synopsys has posted a video of the lunch session. I highly recommend watching the entire session for more insight into what Synopsys, TSMC, Panasonic, Nvidia and NSI-TEXE are doing in the automotive space. Every time I attend a session like this on automotive electronics, I come away with a better understanding of this rapidly changing area.
