The electronics market for automotive applications is distinguished by multiple factors. This is a fast-growing market – electronics now account for 40% of a car’s cost, up from 20% just 10 years ago. New technologies are gaining acceptance, for greener and safer operation and for a more satisfying consumer experience. Platforms to support these capabilities are becoming more complex, greatly increasing challenges in verification and validation. Safety, security and reliability expectations are much higher than in other consumer applications, and consumer-style field repairs and upgrades are impractical given costs of $10M+ per recall. Finally, the supply chain – from chip maker to Tier1/OEM supplier to auto-manufacturer – has become much more interdependent in reaching these goals.


All of this points to a common question in systems design – how can I check the integrity of the design earlier – but with a wrinkle. Now the checking must span the supply chain because all parties have a vested interest in proving out the system as early as possible.

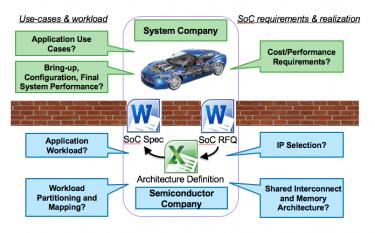

Synopsys captures this in a V-diagram (not pictured here, but easy to imagine), starting with architecture specification at the upper-left. First, how can the system architecture (software+hardware, quite possibly in the context of a larger system model) be optimized for system performance? This is where supply chain collaboration is important. The software to drive that hardware may not be written yet, but the Tier1/OEM has a sense of use models. Traditionally these would have been exchanged in static Word and Excel documents, but these static requirements are an imperfect and incomplete way to ensure a match in intent between supplier and systems provider.


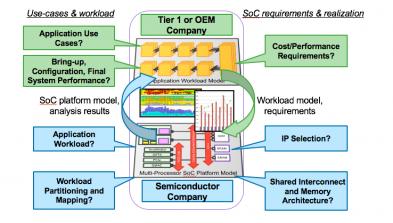

A better approach replaces static specs and requirements with a dynamic virtual model. The Tier1/OEM can start from an existing model (or develop one, perhaps with assistance), along with modeled application use-cases and workloads based on task-graph models. This dynamic specification becomes the driver for SoC design within the chip company. If iteration is required to optimize power, performance, cost or other factors, the Tier1/OEM can adjust the virtual model and workloads to reflect updated requirements. All of this can be accomplished through Platform Architect MCO.
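To make the idea of a task-graph workload concrete, here is a minimal sketch in plain Python. It is not Platform Architect MCO's format or API; the task names, cycle counts and the `critical_path_us` helper are all hypothetical, and the model assumes tasks with no mutual dependency can run fully in parallel. It shows how a use case expressed as tasks and dependencies can be re-evaluated when a requirement such as clock frequency changes.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical use case: each task has an estimated cycle count and the
# tasks it depends on. None of these numbers come from a real design.
TASKS = {
    "sensor_read":   {"cycles": 2_000,  "deps": []},
    "filter":        {"cycles": 8_000,  "deps": ["sensor_read"]},
    "object_detect": {"cycles": 40_000, "deps": ["filter"]},
    "lane_detect":   {"cycles": 25_000, "deps": ["filter"]},
    "actuate":       {"cycles": 3_000,  "deps": ["object_detect", "lane_detect"]},
}


def critical_path_us(tasks, clock_mhz):
    """End-to-end latency in microseconds, assuming independent tasks
    run in parallel and every core runs at clock_mhz."""
    graph = {name: task["deps"] for name, task in tasks.items()}
    finish = {}
    # static_order() yields each task only after all of its dependencies.
    for name in TopologicalSorter(graph).static_order():
        start = max((finish[dep] for dep in tasks[name]["deps"]), default=0.0)
        finish[name] = start + tasks[name]["cycles"] / clock_mhz
    return max(finish.values())


if __name__ == "__main__":
    for mhz in (200, 400):
        print(f"{mhz} MHz: {critical_path_us(TASKS, mhz):.1f} us")
```

Changing a cycle count, adding a task or trying a different clock is a one-line edit, which is the kind of quick iteration a static Word or Excel requirement cannot offer.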

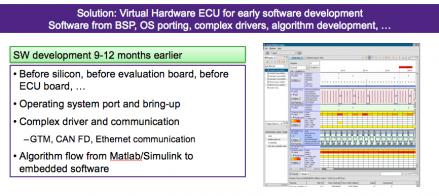

The bottom vertex in the V addresses starting on software development and integration well before hardware is available. FPGA prototypes are great in the late stages of development, but virtual models are a better approach before that point. They run almost in real time and require very limited understanding of the target architecture. Here of course the intention is to run real software as it is being developed, rather than modeled workloads. And again, the virtual hardware model provides an ideal dynamic reference point between the hardware supplier and Tier1/OEM. Experience shows that software development can start 9-12 months in advance of silicon when using a virtual hardware model.

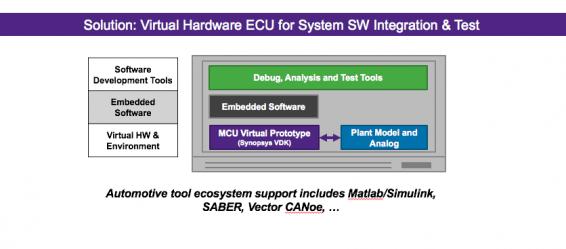

The upper-right vertex of the V is test, where again virtual models play an important role. Ultimately, test must run on the real silicon (hardware-in-the-loop testing), but test development can start much earlier using a virtual hardware model. Particularly important here is the ability to integrate with the larger system test environment, including tools like MATLAB/Simulink and Saber (a Synopsys tool for simulating electronic/mechanical systems), systems like CANoe for testing individual MCUs and networks of MCUs, as well as Lauterbach and other platforms.


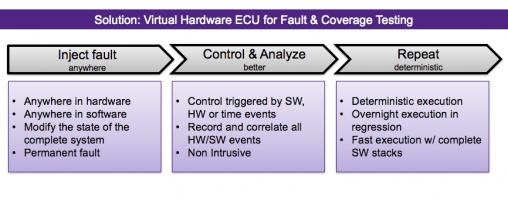

These types of testing are essential for building testbenches to validate mission-mode behavior and performance, but ISO 26262 compliance requires additional testing to validate safe behavior in the presence of faults. The virtual model is equally important here: faults can be injected into the software or the virtual hardware model so that software and hardware designers can determine whether faults are detected and how they will be managed.
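As a rough illustration of fault injection into a software model, the sketch below uses a toy memory protected by a single parity bit per word. The `ParityMemory` class, the `MemoryFault` exception and the campaign function are hypothetical and are not taken from any Synopsys VDK; real safety mechanisms and fault models are far richer. The point is only that a model lets you flip bits at will and observe whether the protection notices.

```python
class MemoryFault(Exception):
    """Raised when a read detects a parity mismatch."""


class ParityMemory:
    """Toy 32-bit memory model with one even-parity bit per word."""

    def __init__(self, size):
        self.data = [0] * size
        self.parity = [0] * size

    @staticmethod
    def _parity(value):
        return bin(value).count("1") & 1

    def write(self, addr, value):
        self.data[addr] = value & 0xFFFFFFFF
        self.parity[addr] = self._parity(self.data[addr])

    def read(self, addr):
        value = self.data[addr]
        if self._parity(value) != self.parity[addr]:
            raise MemoryFault(f"parity error at address {addr}")
        return value

    def inject_bit_flips(self, addr, bits):
        """Fault injection hook: corrupt stored data without updating parity."""
        for bit in bits:
            self.data[addr] ^= 1 << bit


def run_campaign(fault_sets, value=0xCAFE):
    """Inject each set of bit flips into a fresh memory; count detections."""
    detected = 0
    for bits in fault_sets:
        mem = ParityMemory(size=1)
        mem.write(0, value)
        mem.inject_bit_flips(0, bits)
        try:
            mem.read(0)
        except MemoryFault:
            detected += 1
    return detected


if __name__ == "__main__":
    single = [(b,) for b in range(32)]
    double = [(b, b + 1) for b in range(31)]
    print("single-bit faults detected:", run_campaign(single), "of", len(single))
    print("double-bit faults detected:", run_campaign(double), "of", len(double))
```

In this toy, every single-bit flip is caught while every double-bit flip slips through, because two flips leave the parity unchanged. That is the kind of detection gap a fault campaign is meant to expose before silicon exists.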


For both mission-mode and fault testing, the deterministic nature of the virtual model is important in being able to replay a run and track down root-cause problems in the system design. Also very important is the ability to run virtual models in regression on server farms; because these models are software-based, this is possible in a way it would not be for true hardware-in-the-loop testing. This enables parallel testing of changes to the software system, and running on variants of software stacks, greatly increasing productivity and coverage in testing.
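The two properties described here, deterministic replay and parallel regression, can be sketched with a toy stand-in for a virtual-model run. Everything in this example is hypothetical: `run_simulation` models a simple message queue rather than any real platform, and a local thread pool stands in for a server farm. The seed fully determines the run, so any failing job can be reproduced exactly by rerunning it with the same inputs.

```python
import hashlib
import random
from concurrent.futures import ThreadPoolExecutor


def run_simulation(seed, queue_depth, steps=500):
    """Toy deterministic run: returns (failing_step or None, trace digest).

    Messages arrive pseudo-randomly and two are serviced per step; the run
    fails at the first step where the backlog exceeds queue_depth.
    """
    rng = random.Random(seed)      # all randomness derives from the seed
    digest = hashlib.sha256()      # compact fingerprint of the whole trace
    backlog = 0
    for step in range(steps):
        backlog = max(0, backlog + rng.randint(0, 3) - 2)
        digest.update(bytes([backlog & 0xFF]))
        if backlog > queue_depth:
            return step, digest.hexdigest()
    return None, digest.hexdigest()


def regress(seeds, queue_depths):
    """Run every (seed, variant) combination in parallel and collect results."""
    jobs = [(seed, depth) for depth in queue_depths for seed in seeds]
    with ThreadPoolExecutor() as pool:
        outcomes = list(pool.map(lambda job: run_simulation(*job), jobs))
    return dict(zip(jobs, outcomes))


if __name__ == "__main__":
    results = regress(seeds=range(4), queue_depths=[2, 8])
    for (seed, depth), (failed_at, trace) in sorted(results.items()):
        status = "pass" if failed_at is None else f"fail at step {failed_at}"
        print(f"seed={seed} depth={depth}: {status} trace={trace[:12]}")
    # Replay: the same inputs reproduce the same outcome and the same trace.
    assert run_simulation(1, 2) == results[(1, 2)]
```

Comparing trace digests between runs is one simple way to confirm that a replay really followed the same path as the original failure.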

Synopsys already provides virtual hardware models in the form of virtual development kits (VDKs) for the NXP MPC5xxx MCU Family, the Renesas RH850 MCU Family and for Infineon AURIX, so system developers are ready to go on some of the primary drivetrain platforms in the industry.

