Verification has been going through a lot of changes in the last couple of years. Three technologies that used to be largely contained in their own silos have come together: simulation, emulation and virtual platforms.

Until recently, the workhorse verification tool was simulation. Emulation had its place, but limited capacity, high cost and difficulty of use kept it out of the mainstream. Virtual platforms had their niche, but the modeling challenge meant that they were not nearly as widely used as they could have been.

Then simulation ran out of steam. State-of-the-art SoCs were just too big for simulation. And we are not talking about gate-level simulation here; that ran out of steam years ago. This is RTL simulation. At the same time, emulation technology improved in both capacity and usability. It used to be a multi-week or even multi-month project to get a design moved onto an emulator and get everything up and running. The cost also came down, and the ability to share an emulator among multiple users at the same time further reduced the amortized cost. I have seen statements that emulation is now the cheapest verification cycle you can get, compared to running simulation on server farms. I don’t know if that is strictly true, but it seems to be getting into the same ballpark. I moderated a panel on emulation at DAC last year with companies like TI and Broadcom on the panel. They all used emulation extensively and their only real problem was not being able to get enough of it. But there is never enough time and money to do all the verification you might like on a modern SoC.

It turned out that once people had emulators, the modeling problem for virtual platforms could be made to go away. Instead of hand-crafting behavioral or transaction-level models and then trying to keep them synchronized with the RTL, it became possible to just use the RTL: run the processor and its associated software load using the virtual platform technology, but run the rest of the design by compiling the RTL into an emulator.

As you probably remember, Synopsys acquired Eve and its Zebu emulation product line last year. With various flavors of VCS they already had RTL simulation, of course. Plus, 3 or so years ago, Synopsys acquired Virtio, VaST and CoWare, giving them virtual platform technology. Now, with a lot more integration work done, Synopsys has new capabilities, most of which they market under the brand name Verification Compiler.

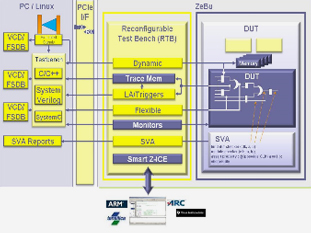

A couple of days ago Synopsys held a webinar, Creating a High-performance Transaction-based Emulation Environment (yes, I know it would have been better to put this out a couple of days ago instead of today, but migraine struck; there is a replay, though). Transaction-based emulation, or TBE, has become an increasingly popular way to use emulators because of its high verification performance and its flexibility in connecting to existing environments. Achieving high performance requires a combination of the emulator’s capabilities and tuning of the environment that drives it to avoid bottlenecks. The webinar explains the components and techniques needed to create a high-performance emulation environment.
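To get a feel for why the environment around the emulator can become the bottleneck, here is a back-of-the-envelope sketch in Python. It is a toy model of my own, not anything taken from the webinar or from Synopsys tooling, and every number in it (emulator clock rate, host-to-emulator crossing latency, cycles per transaction) is a made-up assumption chosen only to show the shape of the trade-off: exchanging data once per transaction instead of once per clock cycle cuts the number of host/emulator crossings.

```python
def run_time_seconds(design_cycles, emulator_hz, crossings, crossing_latency_s):
    """Rough wall-clock estimate: emulator run time plus host<->emulator crossing overhead."""
    return design_cycles / emulator_hz + crossings * crossing_latency_s


# All values below are hypothetical, for illustration only.
DESIGN_CYCLES = 1_000_000        # cycles the test needs to run
EMULATOR_HZ = 1_000_000          # assumed emulator clock rate (1 MHz)
CROSSING_LATENCY_S = 10e-6       # assumed cost of one host<->emulator exchange
CYCLES_PER_TRANSACTION = 100     # assumed cycles covered by one transaction

# Signal-level testbench: the host exchanges data with the emulator every cycle.
signal_level = run_time_seconds(
    DESIGN_CYCLES, EMULATOR_HZ, DESIGN_CYCLES, CROSSING_LATENCY_S
)

# Transaction-level testbench: one exchange per transaction.
transaction_level = run_time_seconds(
    DESIGN_CYCLES,
    EMULATOR_HZ,
    DESIGN_CYCLES // CYCLES_PER_TRANSACTION,
    CROSSING_LATENCY_S,
)

speedup = signal_level / transaction_level

print(f"signal-level:      {signal_level:.2f} s")
print(f"transaction-level: {transaction_level:.2f} s")
print(f"speedup:           {speedup:.1f}x")
```

With these invented numbers the signal-level run is dominated by crossing overhead rather than by the emulator itself, which is the kind of bottleneck that tuning the driving environment is meant to remove. Real figures depend entirely on the emulator, the host link and the testbench.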

The webinar was presented by Lance Tamura, who is the CAE manager for the Zebu emulator.

The replay for the webinar is available here (registration).

And the non-silicon-valley SNUGs are coming up (with a bit better notice than for the webinar):

- Boston on September 11th

- Austin on September 23rd

- Ottawa on October 8th

More articles by Paul McLellan…
