A decade ago, many of us heard commentaries on how entrepreneurs were turned down by venture capitalists for not including a cloud strategy in their business plan, no matter what the core business was. Humorous punchlines such as, “It’s cloudy without any clouds” and “Add some cloud to your strategy and your future will be bright and sunny” were common.

Fast forward to now, and there is no denying that cloud computing is well established across most industries. Conditions have changed: where on-prem computing resources were once sufficient, cloud computing has become a necessity for almost everyone.

It’s true that early interest in moving EDA to the cloud was soft. Quite a lot has changed over the last decade, but there are still companies that are evaluating EDA in the cloud. This is the backdrop for a recently published whitepaper authored by Michael White, senior director, physical verification product management, Calibre Design Solutions at Siemens EDA.

The whitepaper covers the history of EDA in the cloud, explains why the cloud became a viable option for EDA, and identifies ways to improve the bottom line for designs in both established nodes and leading-edge technologies. This blog covers the salient points I gleaned from the whitepaper.

Paving the Way

There were two primary reasons for EDA’s early hesitancy to move to the cloud. The more significant reason was the deep concern over intellectual property (IP) security. The other was that there was no compelling need, as on-prem computing resources were able to handle all of the requirements.

Cloud providers took the IP security concern seriously. They started investing in and developing strong security mechanisms in their infrastructure and deploying tight security protocols and procedures for data access and during data transportation. With this intense focus on IP security by cloud providers, the chip industry started migrating to cloud computing.

Compute Demand Growth

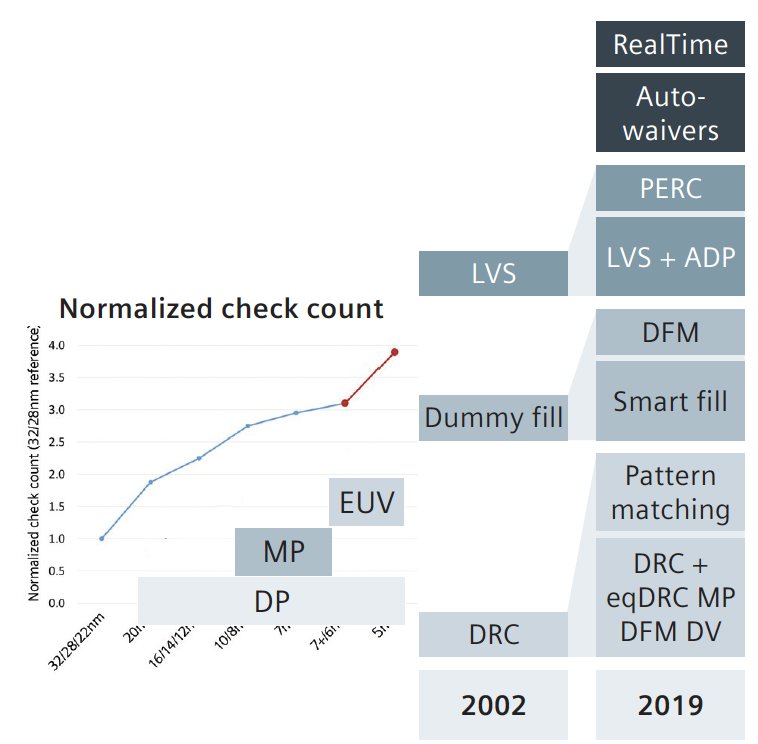

The demand for EDA compute power has been growing aggressively over time. As we advanced from one process node to the next, the number of rule checks that need to be performed also increased, as the figure above shows. From the 28nm node to the 5nm node, the number of rule checks has quadrupled. In addition, each task has increased in complexity. For example, optimizing yield ramp is no longer simply a matter of adding in a few geometries. Design companies are trying to maximize yield using techniques such as cell-based fill.

The industry had hoped that innovations in lithography and transistors would reduce compute requirements by eliminating some of the rule checks that were added over the years. But that does not appear to be having a material impact on the long-term demand for compute power. Nor is the move toward chiplet integration (versus very large and complex SoCs) expected to provide much relief.

On-prem, Cloud or Hybrid?

Cloud computing can be more expensive than utilizing on-prem compute resources. Many tools exist to help use cloud computing resources cost-effectively without sacrificing turnaround time. Refer to one of my recent blogs for one such solution. But first, look at how effectively current on-prem compute resources are being utilized. If utilization is low, the first order of business is to improve the resource allocation and scheduling processes. If utilization is high without impacting project schedules, on-prem computing may be fine for current projects.
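As a rough illustration of that triage, here is a minimal Python sketch. It is not taken from the whitepaper: the `ClusterSnapshot` fields, the `recommend` function, and the 60% utilization threshold are all hypothetical choices made for this example, and a real decision would weigh cost, licensing, and schedule data in far more detail.

```python
from dataclasses import dataclass


@dataclass
class ClusterSnapshot:
    """Hypothetical summary of an on-prem compute farm over some period."""
    core_hours_used: float
    core_hours_available: float
    schedules_slipping: bool  # True if waiting on compute is delaying projects


def utilization(snapshot: ClusterSnapshot) -> float:
    """Fraction of available core-hours that were actually used."""
    if snapshot.core_hours_available <= 0:
        raise ValueError("core_hours_available must be positive")
    return snapshot.core_hours_used / snapshot.core_hours_available


def recommend(snapshot: ClusterSnapshot, low_threshold: float = 0.6) -> str:
    """Apply the triage order described above.

    low_threshold is an assumed cutoff for "low" utilization, not a
    figure from the whitepaper.
    """
    if utilization(snapshot) < low_threshold:
        return "improve on-prem allocation and scheduling first"
    if not snapshot.schedules_slipping:
        return "on-prem may be fine for current projects"
    return "evaluate cloud or hybrid capacity"


if __name__ == "__main__":
    print(recommend(ClusterSnapshot(900.0, 1000.0, schedules_slipping=True)))
```

The ordering matters more than the exact threshold: underused on-prem capacity is addressed before any cloud spend is considered.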

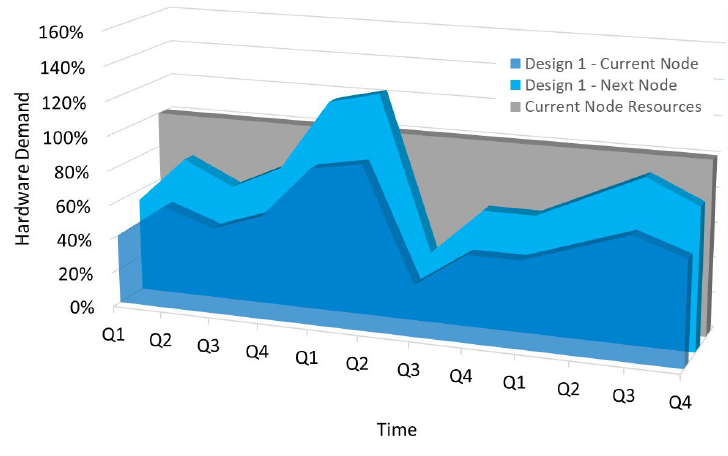

But even if the on-prem resources have been optimally planned, all it takes is for one or a few of the many projects to slip their schedules. Suddenly the situation changes from on-prem resources being sufficient to cloud computing becoming a necessity, perhaps in the form of a hybrid computing environment. And what about the immediate future? Even moving an existing design to a new process node will increase the demand on compute power, making extending to the cloud a serious consideration, as the figure above illustrates.

Even if the above issues don’t apply, a company has to look at the market value of doing things faster than would otherwise be possible. What is the bottom-line benefit of tapping into practically near-infinite cloud resources to get the company’s product to market faster?

Calibre Platform and Cloud Computing

Michael describes how their Calibre platform has been supporting distributed and cloud computing for more than a decade. Calibre engines and licensing can deliver the same level of performance whether a company is using a private cloud or a public cloud. Calibre engines can scale up to 10K+ CPU cores and process extremely large (GB to TB sized) files.

The whitepaper provides a specific example of how Advanced Micro Devices (AMD) was able to double their daily DRC iterations on a 7nm production design without increasing the memory usage on the cloud servers. He also describes how Siemens EDA was able to achieve a 30% further reduction in runtime by identifying optimizations to AMD’s runtime environment, Microsoft Azure’s cloud resources and the foundry’s design rule decks. You can find more details in the whitepaper.

Siemens EDA offers its customers the flexibility to use the cloud for surge compute demand while continuing to use multi-vendor flows. As part of that freedom-of-choice focus, they collaborate with multiple companies to create best practices for efficiently and cost-effectively utilizing the cloud. In this context, another blog that may be of interest is “Library Characterization: A Siemens Cloud Solution using AWS.”

Summary

If you have not already moved to the cloud for your EDA computing needs, it may be worthwhile to revisit that choice. Once you have evaluated and decided to adopt cloud EDA computing, proven technology, flexible use models, support for multi-vendor flows, and guidance through a best-practices methodology are imperative to maximize the benefits. Click here to download the whitepaper and explore with Siemens EDA.
