The big three EDA vendors are steadily moving more of their tools to the cloud to speed up design and verification for chip designers, but how do engineering teams approach using the cloud for functional verification tests and regressions? At the recent Cadence user group meeting (CDNLive), Vishal Moondhra from Methodics gave a presentation titled “Creating a Seamless Cloudburst Environment for Verification”.

Here’s a familiar challenge: how to run thousands of tests and regressions in the shortest amount of time using the hardware, EDA software licenses and disk space on hand. If your existing local resources aren’t producing results fast enough, what about moving all or part of the work into the cloud?

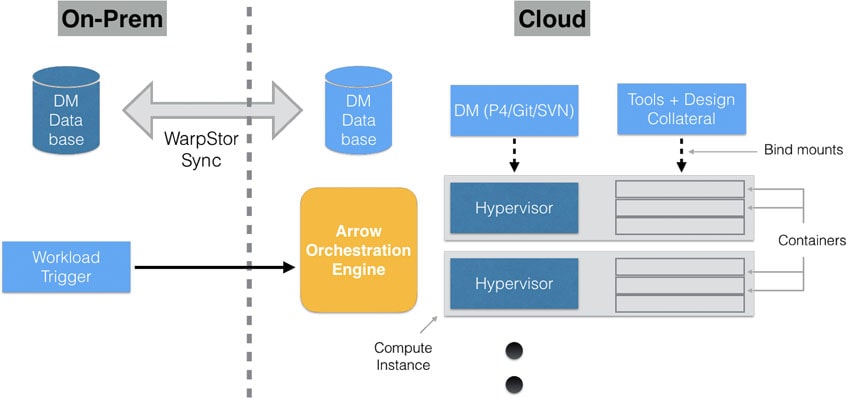

Regression testing is well suited for cloud-based simulations because the process is non-interactive. The hybrid approach taken by Methodics uses both on-premise and cloud resources as shown below:

OK, so seamlessly running regression jobs in the cloud looks possible in theory, but what are the obstacles?

Synchronizing Data

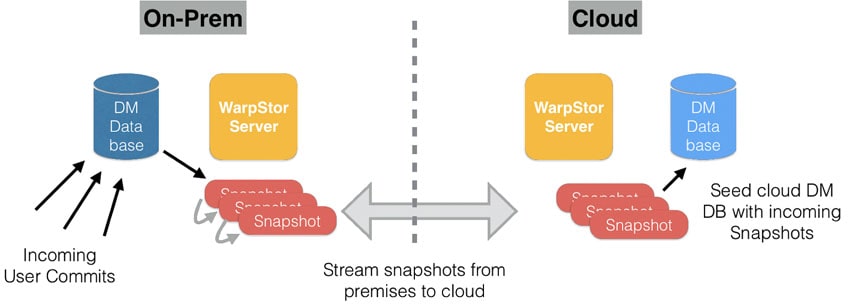

Large IC teams often have engineers in multiple regions around the globe, working around the clock and making constant changes to design files. A modern project can have thousands of files taking up hundreds of GB of space, including ASCII text files, binary files, simulation log files, even Office documents. Syncing all of this data to the cloud can be slow.

At Methodics they’ve tackled this issue with a product named WarpStor. Under the hood it uses instantaneous snapshots of your design database, along with the deltas from previous snapshots. All file types are properly handled with this method.
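To make the snapshot-plus-delta idea concrete, here is a minimal Python sketch. It is not WarpStor's implementation or API, which the presentation does not describe. The `manifest` and `delta` helpers and the file names are hypothetical, and content hashing is simply one common way to treat text and binary files uniformly.

```python
import hashlib


def manifest(files: dict[str, bytes]) -> dict[str, str]:
    """Map each path to a content hash; text and binary files are treated alike."""
    return {path: hashlib.sha256(data).hexdigest() for path, data in files.items()}


def delta(old: dict[str, str], new: dict[str, str]) -> dict[str, list[str]]:
    """Compare two snapshot manifests and list what would need to be transferred."""
    return {
        "added": sorted(p for p in new if p not in old),
        "removed": sorted(p for p in old if p not in new),
        "changed": sorted(p for p in new if p in old and new[p] != old[p]),
    }


# Hypothetical project contents at two points in time.
snap1 = manifest({
    "rtl/top.v": b"module top; endmodule",
    "doc/spec.docx": b"\x50\x4b\x03\x04",
})
snap2 = manifest({
    "rtl/top.v": b"module top; wire a; endmodule",
    "doc/spec.docx": b"\x50\x4b\x03\x04",
    "tb/test1.sv": b"// new test",
})

changes = delta(snap1, snap2)
print(changes)
```

Only the files listed under `added` and `changed` would need to move to the cloud, which is why a delta against an existing snapshot is so much cheaper than a full copy.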

You get a new snapshot when there’s a new project release in the Percipient IP Lifecycle Management (IPLM) tool, or according to a simple rule, such as every 10 commits in the project.
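A snapshot rule of this kind could be modeled roughly as follows. This is only an illustrative sketch: the `SnapshotPolicy` class and its method names are made up for this example and are not part of Percipient or WarpStor.

```python
from dataclasses import dataclass


@dataclass
class SnapshotPolicy:
    """Hypothetical trigger: snapshot on every release, or every N commits."""
    commits_per_snapshot: int = 10  # the "every 10 commits" example rule
    commits_since_snapshot: int = 0

    def on_commit(self) -> bool:
        """Return True when this commit should trigger a new snapshot."""
        self.commits_since_snapshot += 1
        if self.commits_since_snapshot >= self.commits_per_snapshot:
            self.commits_since_snapshot = 0
            return True
        return False

    def on_release(self) -> bool:
        """A project release always triggers a snapshot and resets the counter."""
        self.commits_since_snapshot = 0
        return True


policy = SnapshotPolicy()
triggers = [policy.on_commit() for _ in range(25)]
snapshot_commits = [i + 1 for i, fired in enumerate(triggers) if fired]
print(snapshot_commits)  # commits 10 and 20 trigger snapshots
```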

Design environment in the cloud

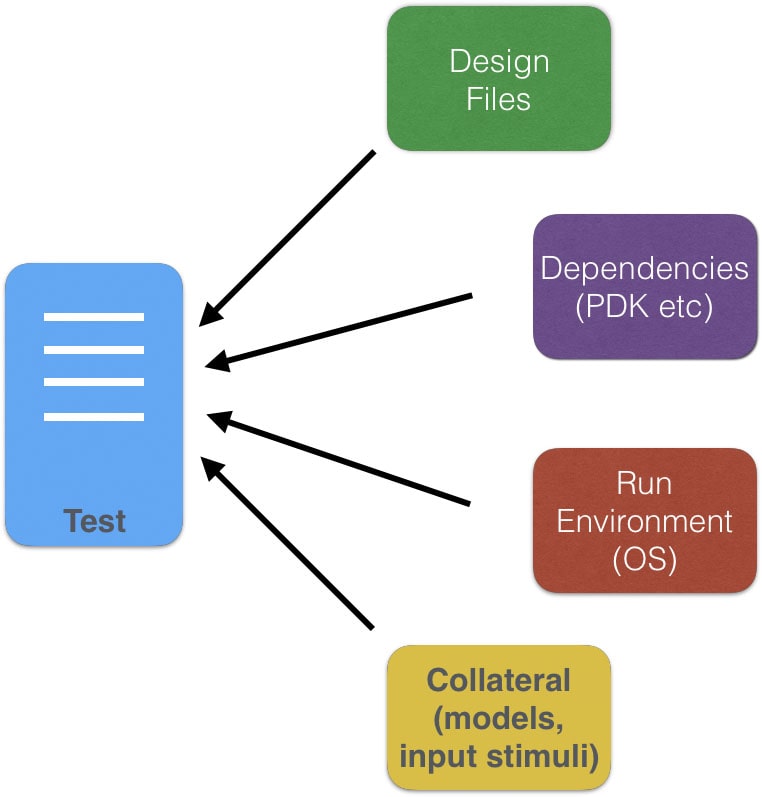

Testing an SoC involves lots of details, like the OS version and patch level, EDA vendor tools and version, environment variables, configuration files, design files, model files, input stimulus, foundry packages. You get the picture.

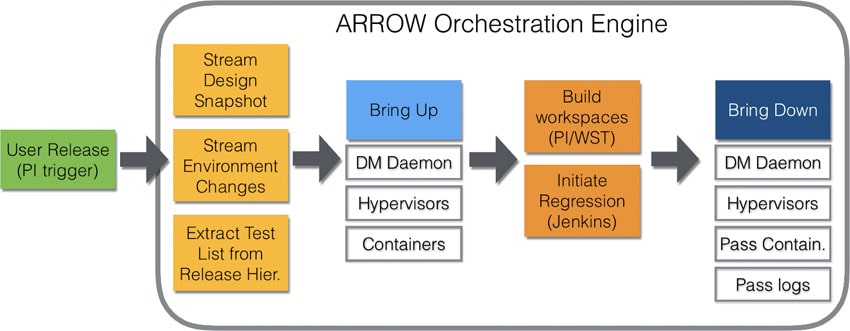

Your CAD and IT departments are familiar with these environment details, created with scripts and vendor frameworks, but what if they presume that all files are on NFS? Methodics has come up with a tool called Arrow to control how tests are executed in the cloud, so that the right version dependencies are used, the proper OS version is selected, and all of the synced design files are in the cloud, ready to run.
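One way to think about these environment details is as an explicit specification that can be checked before a test is launched. The sketch below assumes a hypothetical `EnvSpec` record and `mismatches` check; the field names, version strings and snapshot ID are invented for illustration and do not reflect how Arrow actually represents an environment.

```python
from dataclasses import dataclass, field


@dataclass
class EnvSpec:
    """Hypothetical description of what a test needs in order to run."""
    os_version: str
    tool_versions: dict[str, str] = field(default_factory=dict)
    env_vars: dict[str, str] = field(default_factory=dict)
    snapshot_id: str = ""


def mismatches(required: EnvSpec, actual: EnvSpec) -> list[str]:
    """List every way the actual environment differs from the required one."""
    problems = []
    if required.os_version != actual.os_version:
        problems.append(f"os_version: want {required.os_version}, got {actual.os_version}")
    for tool, version in sorted(required.tool_versions.items()):
        got = actual.tool_versions.get(tool)
        if got != version:
            problems.append(f"tool {tool}: want {version}, got {got}")
    for name, value in sorted(required.env_vars.items()):
        got = actual.env_vars.get(name)
        if got != value:
            problems.append(f"env {name}: want {value}, got {got}")
    if required.snapshot_id != actual.snapshot_id:
        problems.append(f"snapshot: want {required.snapshot_id}, got {actual.snapshot_id}")
    return problems


# Made-up values: the cloud image has an older simulator than the test requires.
required = EnvSpec("el7.6", {"simulator": "19.03"}, {"PROJ_ROOT": "/work/soc"}, "snap-0042")
cloud = EnvSpec("el7.6", {"simulator": "18.09"}, {"PROJ_ROOT": "/work/soc"}, "snap-0042")

problems = mismatches(required, cloud)
print(problems)
```

Writing the requirements down as data, rather than leaving them implicit in scripts that assume NFS paths, is what makes it possible to reproduce the same environment off-premise.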

Optimizing cloud resources

Sure, you have to pay for CPU cycles and disk storage in the cloud, so the Arrow orchestration engine aims to give you just the right compute and storage resources while minimizing overhead. Arrow brings up hypervisors and container instances for each of your tests. When a test completes and passes, Arrow tidies up by removing logs and other unneeded files, and the container is returned to the pool. Failing tests are kept around in live containers.
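The container lifecycle described above (return to the pool on a pass, keep alive on a failure) can be sketched in a few lines of Python. The `Container` and `ContainerPool` classes are hypothetical stand-ins, not Arrow's API, and the tests are simulated with simple callables.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Container:
    name: str
    files: list[str] = field(default_factory=list)


@dataclass
class ContainerPool:
    """Hypothetical pool: passing tests release their container, failures keep it."""
    free: list[Container]
    kept_for_debug: dict[str, Container] = field(default_factory=dict)

    def run(self, test_name: str, test_fn: Callable[[], bool]) -> bool:
        container = self.free.pop()  # raises IndexError if the pool is empty
        container.files = [f"{test_name}.log"]  # pretend the test wrote a log
        passed = test_fn()
        if passed:
            container.files.clear()      # remove logs and other unneeded files
            self.free.append(container)  # return the container to the pool
        else:
            self.kept_for_debug[test_name] = container  # keep the live container
        return passed


pool = ContainerPool(free=[Container("c0"), Container("c1")])
results = {
    "smoke": pool.run("smoke", lambda: True),
    "dma_burst": pool.run("dma_burst", lambda: False),
}
print(results, [c.name for c in pool.free], list(pool.kept_for_debug))
```

After the two runs, one container is back in the pool and the failing test still holds its container with the log intact, so you only keep paying for resources that someone may want to inspect.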

Containers get provisioned in under a second using recipes, and your workspaces are built from a ‘snapshot’ using WarpStor.

Arrow can use a job manager like Jenkins once your container and workspace are ready.

Getting results quickly and efficiently

Running all of those functional verification tests is sure to create gigabytes of output data in the form of log files, coverage results, and values extracted by scripts. You decide exactly which information should be tagged and analyzed later, while unneeded data is purged.
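A retention rule along these lines might look like the sketch below. The patterns, tag names and the choice to keep logs only for failing tests are assumptions made for this example; the presentation leaves the actual policy up to the user.

```python
from fnmatch import fnmatch

# Hypothetical rules: which outputs are always worth keeping, and under what tag.
KEEP_PATTERNS = {"coverage": "*.cov", "summary": "*summary.json"}


def triage(paths: list[str], passed: bool) -> tuple[dict[str, str], list[str]]:
    """Split a test's output files into tagged keepers and files to purge."""
    keep: dict[str, str] = {}
    purge: list[str] = []
    for path in paths:
        tag = next((t for t, pat in KEEP_PATTERNS.items() if fnmatch(path, pat)), None)
        if tag is None and not passed and fnmatch(path, "*.log"):
            tag = "failure-log"  # assumed policy: logs matter only when a test fails
        if tag is not None:
            keep[path] = tag
        else:
            purge.append(path)
    return keep, purge


outputs = ["t1/sim.log", "t1/block.cov", "t1/wave.dump"]
kept_pass, purged_pass = triage(outputs, passed=True)
kept_fail, purged_fail = triage(outputs, passed=False)
print(kept_pass, purged_pass)
print(kept_fail, purged_fail)
```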

Summary

IC design teams are stretching the limits of their on-premise design and verification computing resources, so many are looking to add cloudburst capabilities to help with demanding workloads like functional regression testing. Methodics has thought through this challenge and come up with the WarpStor Sync and Arrow tools, which work together to enable cloud-based functional verification tasks.

Read the 10-page white paper online, after a brief registration process.

Related Blogs

- Traceability and Design Verification Synergy

- IP Management using both Git and Methodics

- Combining IP and Product Lifecycle Tools
