No-one could accuse Badru Agarwala, GM of the Mentor/Siemens Calypto Division, of being tentative about high-level synthesis (HLS). Then again, he and a few others around the industry have been selling this story for quite a while, apparently to a small and not always attentive audience. But times seem to be changing. I’ve written elsewhere about the expanding use of HLS. Now Badru has written a white paper that gets very aggressive: you’d better get on board with HLS if you want to remain competitive.

Now if that were just Badru, I might put this passion down to his entirely understandable belief that his baby (Catapult) is beautiful. But when some very heavy-hitting design teams agree, I have to sit up and pay attention. Badru cites public quotes from Qualcomm, Google and NVIDIA, backed up by detailed white papers, to make his case. Naturally these center on video, camera, display and related applications. But for those who haven’t been paying attention, these areas represent a sizeable percentage of what’s hot in chip design today. The references back that up, as do applications in the cloud, gaming, and real-time recognition in ADAS.

NVIDIA was able to cut the schedule of a JPEG encoder/decoder by 5 months while also upgrading, within 2 months, two 8-bit video decoders to 4K 10-bit color for Netflix and YouTube applications. In their view, these objectives would never have made it to design in an RTL-based flow. An important aspect of getting to the new architecture with high QoR was the ability to quickly and incrementally refine between a high-level functional C model and the synthesizable C++ model, and to run thousands of tests to ensure compatibility between these models, something that simply would not have been possible if they had had to run on RTL. By integrating the flow with PowerPro, they were also able to cut power by 40%. NVIDIA say that they are no longer HLS skeptics: they plan to use this Catapult-based flow on future video and imaging designs, whether new or re-targeted.

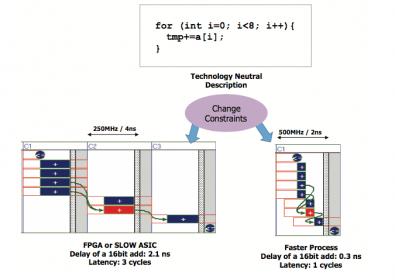

Google are big fans of open-sourcing development, as much in hardware as in software. They are supporting a project called WebM for the distribution of compressed media content across the web and want to provide a royalty-free hardware decoder IP for that content, called the VP9 G2 decoder. Again, this must handle up to 4K resolution playback, on smart TVs, tablets, mobile devices and PCs/laptops. In their view, C++ is a more flexible starting point than RTL for an open-source distribution, allowing users to easily target different technologies and performance points. To give you a sense of how big a deal this is, VP9 support is already available in more than 1 billion endpoints (Chrome, Android, FFmpeg and Firefox). So, hardware acceleration for the standard has a big customer base to target. Starting an open-source design in C++ rather than RTL is likely, as they say, to move the needle. Did I mention that Google uses Catapult as their HLS platform?

Beyond the value of C++ being a more flexible starting point for open-source design, Google observed a number of other design advantages:

- Total C++ code for the design is about 69k lines. They estimate an RTL-based approach would have required ~300k lines. No matter how you slice it, creation, verification and debug time/effort scales with lines of code. You get to a clean design faster on a smaller line-count.

- Simulation (in C++) runs about 50X faster than in RTL. They could create, run verification and fix bugs in tight loops all day long rather than spinning their wheels waiting for RTL simulation runs to complete.

- Using C++ they could use widely available tools and flows to collaborate, share enhancements to the same file and merge when appropriate. You can do this kind of thing in RTL too, but in a system-centric environment there must be a natural pull to using tools and ecosystems used by millions of developers rather than thousands of developers.

- An HLS run on a block (there are 14 in the design) took about an hour, which allowed them to quickly explore different architectures through C++ changes or synthesis options. They believe this got them to the final code they wanted in 6 months rather than a year.

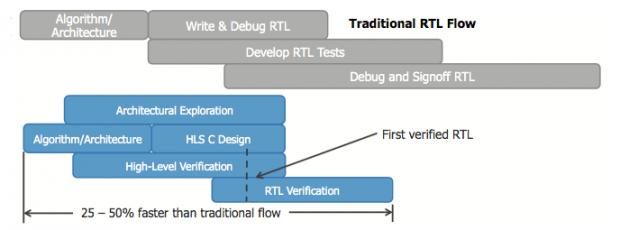

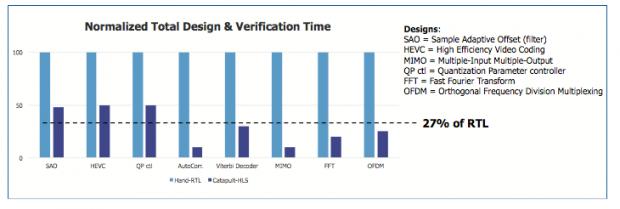

Qualcomm has apparently been using HLS and high-level verification (HLV, based on C++/SystemC testbenches) for several years, on a wide range of video and image processing IP, some of which you will find in Snapdragon devices. They apparently started with HLS in the early 2000s, partnering with Calypto, the company that created Catapult and is now a part of Mentor/Siemens. A big part of the attraction has been the fast turns that are possible in verifying an architecture-level model; they say they are seeing an even more impressive 100-500X performance improvement over RTL. Qualcomm also emphasizes that they do the bulk of their verification (for these blocks) in the C domain. By the time they get to HLS, they already have a very stable design. Verification at the C level is dramatically faster and proceeds in parallel with design, unlike traditional RTL flows where, no matter how much you shift left, verification always trails design. Verification speed also means they can reach very high coverage much faster. And they can reuse all of that verification infrastructure on the synthesized RTL produced by HLS. The only tweaking they generally have to do for the RTL is at the interface level.

I know we all love our RTL. We have lots of infrastructure and training built up around that standard, and we unconsciously believe that RTL must be axiomatic for all hardware design for the rest of time. But we’re starting to see shifts, in important leading applications and in important leading companies. And when that kind of shift happens, doggedly refusing to change because we’ve always used RTL, or because “everyone knows” C++-based design is never going anywhere, may not be healthy. You might want to check out the white paper HERE.
