OK – maybe that sounds a little weird, but it’s not a bad description of what Mentor suggests in a recent white-paper. There are at least three aspects to power verification – static verification of the UPF and the UPF against the RTL, formal verification of state transition logic, and dynamic verification of at least some critical inter-operations between the functional logic and power transitions such as correct req/ack handshaking with a turned-off function which must turn-on in order to acknowledge. This third set is where the white-paper introduces a Tcl-based methodology.

The author (Madhur Bhargava – lead MCS at Mentor) first contrasts the new approach with the traditional method to test compliance with any requirement in RTL verification – through adding SV assertions. He acknowledges that a number of verification tools provide support for power verification of this type, but points out that these come with several limitations:

- Built-in checks don’t cover all the checks you will want to perform, so you’re going to have to complement these with some of your own checks

- Adding your own checks is not so simple, particularly since you have to be very comfortably bilingual between UPF and SVA

- And whatever checks you are performing, these runs will be slow because you’ve added a lot more checking overhead to your mission-mode functional checks.

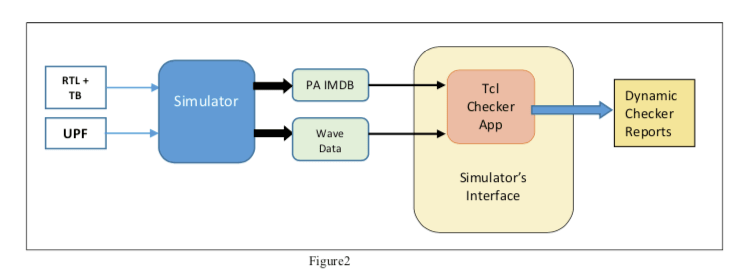

The core idea behind the Mentor approach is to do power-verification checking post-simulation. That immediately fixes the third problem for regular verification regression users, though you still have to run your power checker. The power checker runs on the post-simulation database, using a Tcl app built on the UPF 3.0 Information Model APIs and running on top of the waveform CLIs to access simulation data in Tcl-based procedures. That fixes the second problem – a power verification expert no longer has to be multilingual; Tcl know-how (and UPF know-how) is all they need.

Finally you need to run your power checkers. These are going to check compliance with power intent by:

- modeling control sequences/protocols

- iterating over the design/power domains to access low-power objects

- accessing the waveform info associated with these objects

- checking for any mismatch with intent and flagging errors as needed
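
To make those four steps concrete, here is a minimal sketch of a post-simulation check for the req/ack handshake mentioned earlier. It is written in Python rather than Tcl and does not use the UPF 3.0 Information Model or any waveform CLI; the domain table, the signal names and the `(time, value)` waveform records are all hypothetical stand-ins for what a real checker would pull from the UPF and the simulation database.

```python
from bisect import bisect_right

def value_at(wave, t):
    """Value of a signal at time t; wave is a time-sorted list of (time, value)."""
    times = [time for time, _ in wave]
    i = bisect_right(times, t) - 1
    return wave[i][1] if i >= 0 else None

def rising_edges(wave):
    """Times at which the signal changes from 0 to 1."""
    return [t for (_, prev), (t, cur) in zip(wave, wave[1:])
            if prev == 0 and cur == 1]

def check_req_ack(domains, waves):
    """Flag requests that are never acknowledged, or acknowledged while
    the target power domain is still off. Returns a list of error strings."""
    errors = []
    # Iterate over the power domains (hypothetical table, not a UPF query).
    for name, sig in domains.items():
        acks = rising_edges(waves[sig["ack"]])
        # Model the protocol: every req must be followed by an ack.
        for t_req in rising_edges(waves[sig["req"]]):
            t_ack = next((t for t in acks if t > t_req), None)
            if t_ack is None:
                errors.append(f"{name}: req at {t_req} never acknowledged")
            elif value_at(waves[sig["power"]], t_ack) != 1:
                errors.append(f"{name}: ack at {t_ack} while domain is off")
    return errors

# Hypothetical data: PD_cpu powers up and acknowledges; PD_dsp never does.
domains = {
    "PD_cpu": {"power": "cpu_pwr", "req": "cpu_req", "ack": "cpu_ack"},
    "PD_dsp": {"power": "dsp_pwr", "req": "dsp_req", "ack": "dsp_ack"},
}
waves = {
    "cpu_pwr": [(0, 0), (20, 1)],
    "cpu_req": [(0, 0), (10, 1), (40, 0)],
    "cpu_ack": [(0, 0), (30, 1), (50, 0)],
    "dsp_pwr": [(0, 0)],
    "dsp_req": [(0, 0), (15, 1)],
    "dsp_ack": [(0, 0)],
}

for error in check_req_ack(domains, waves):
    print(error)
```

Because the check runs over recorded data, it reports every violation in the run rather than stopping at the first one, and it adds nothing to simulation time.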

I find a number of things interesting about this approach. First, dynamic power verification doesn’t need to slow down functional debug and can run in a separate thread from that debug. This for me is another example of how elastic-compute concepts are becoming prominent in the EDA world.

Second, it’s good to see more open-minded approaches to verification. There are a lot of good things in SV/SVA, but we don’t have to insist that all dynamic verification work through that channel. Exporting the data to Tcl-based apps for verifying power intent, which is itself written in Tcl, is a natural extension.

Madhur wraps up with some limitations to the approach. First, the UPF 3.0 model has no concept of connectivity, so checks requiring knowledge of source-to-sink paths (as one example) cannot be coded within the standard. I think this is purely a standards issue; providing a Tcl API to design connectivity is a problem that was solved a long time ago. The committee just needs to find a mutually agreeable way to incorporate appropriate APIs.

He also mentions that an SVA assertion can abort a simulation run as soon as an error is found, whereas this approach, being post-sim-based, will not trigger an abort. I think this is a minor consideration. If simulation regressions are also being used for functional verification, that’s not necessarily a bad thing – much of the simulation may still be useful after that event. Power intent debug continues on a parallel path, and power bugs can be fed back and rolled up with all other issues as they are discovered.

You can read the white paper by registering HERE.
