In Mike Muller’s keynote at DAC he wanted to make formal approaches an integral part of writing RTL. After all, formal captures design intent and then, at least much of the time, can verify whether the RTL written actually matches that intent. Today, formal is not used that way and is typically something served “on the side” by specialist formal verification experts. It is still very valuable and regularly finds issues, especially corner cases, that simulation has missed. However, by letting the design engineers get away without having to document their intent it risks missing problems, since the formal experts inevitably have to deduce some of the intent from the RTL they are verifying, which is obviously a circular loop with potential for letting bugs escape into the wild.

The big EDA companies all have a formal solution of some sort, but they are unlikely to be the ones spearheading this. They all have simulators and simulation verification environments too, and will quite happily sell you as many licenses as you want. Indeed, if a few dozen formal licenses turn out to substitute for a server farm with a bazillion simulation licenses, it is not necessarily even good business for them to encourage the transition.

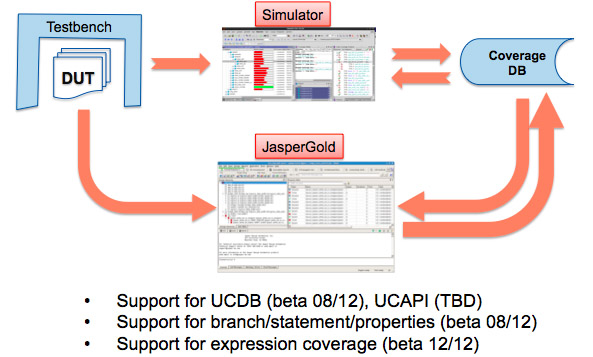

Jasper, however, only has a formal product line. If you use a lot of simulation as an alternative they don’t participate in that business. Of course simulation is not going away and Jasper’s approach is to combine metrics from formal verification with metrics from simulation-based verification to accelerate verification closure. In particular, there is never any point in running simulation to partially verify some aspect of a design that formal has already proven; it’s just a waste of computer time.

The metrics for formal verification come in two flavors. Firstly, how complete the coverage is based on the properties and the stimuli. Secondly, how successful JasperGold (or whatever formal tool you are using) is at proving those properties, which may be fully proven or a bounded proof (or it finds a counterexample which obviously doesn’t contribute to verification closure directly, but exposes an issue which must be fixed). This is all then integrated into a coverage database to include unreachable targets that do not need to be verified, along with coverage information from formal analysis that do not need to be re-verified using simulation.

The metrics for formal verification come in two flavors. Firstly, how complete the coverage is based on the properties and the stimuli. Secondly, how successful JasperGold (or whatever formal tool you are using) is at proving those properties, which may be fully proven or a bounded proof (or it finds a counterexample which obviously doesn’t contribute to verification closure directly, but exposes an issue which must be fixed). This is all then integrated into a coverage database to include unreachable targets that do not need to be verified, along with coverage information from formal analysis that do not need to be re-verified using simulation.

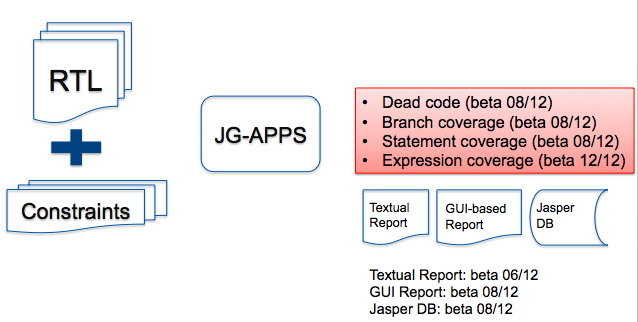

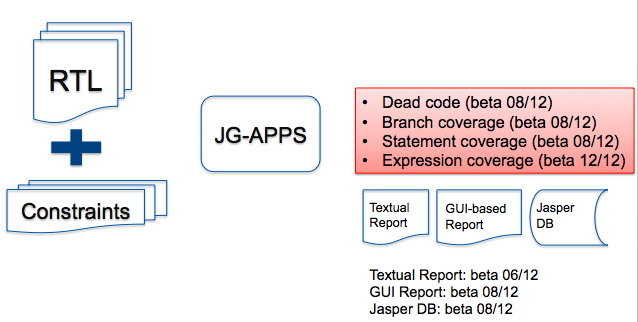

The first way to use this approach is to establish the completeness of the formal testbench. In particular, analyzing:

- Dead code

- Branch coverage

- Statement coverage

- Expression coverage

After the formal analysis, properties may be completely proven or only have a bounded proof. This means that only part of the reachable state-space was analyzed and that no violation was detected there. For example, all states within K cycles of the reset were examined and no violation was detected. Obviously this means that problems might occur after K cycles.

This approach allows confidence that the verification is not over-constrained (relying on an external property that still has to be proved) and allows proven (and perhaps bounded proven) aspects of the design to be eliminated from the simulation verification plan.

This approach allows confidence that the verification is not over-constrained (relying on an external property that still has to be proved) and allows proven (and perhaps bounded proven) aspects of the design to be eliminated from the simulation verification plan.

This information can then be combined with information in a simulation coverage database such as UCDB to merge all the coverage metrics into a single verification coverage metric that combines formal and simulation approaches.

These features in JasperGold will be released to beta during the second half of this year with production either late this year or in the first half of next year.

Share this post via:

A Century of Miracles: From the FET’s Inception to the Horizons Ahead