I wrote recently about Siemens EDA’s philosophy on designing quality in from the outset, rather than trying to verify it in. The first step is moving up the level of abstraction for design. They mentioned the advantages of HLS in this respect and I refined that to “for DSP-centric applications”. A Stanford group recently presented… Read More

Artificial Intelligence

Refined Fault Localization through Learning. Innovation in Verification

This is another look at refining the accuracy of fault localization. Once a bug has been detected, such techniques aim to pin down the most likely code locations for a root cause. Paul Cunningham (GM, Verification at Cadence), Raúl Camposano (Silicon Catalyst, entrepreneur, former Synopsys CTO and now Silvaco CTO) and I continue… Read More

CEO Interview: Vaysh Kewada of Salience Labs

Vaysh Kewada is cofounder and CEO at Salience Labs, a company developing an ultra high-speed multi-chip processor that packages a photonics chip together with standard electronics to enable exascale AI. Salience is funded by Oxford Sciences Enterprise, Cambridge Innovation Capital, Arm-backed Deeptech Labs, former Dialog… Read More

Why Software Rules AI Success at the Edge

It is an unavoidable fact that machine learning (ML) hardware architectures are evolving rapidly. Initially most visible in datacenters (many hyperscalers have built their own AI chips), the trend is now red-hot in inference engines for the edge, each spinning new ground-breaking methods. Markets demand these advances to … Read More

Tensilica Edge Advances at Linley

The Linley spring conference this year had a significant focus on AI at the edge, with all that implies. Low power/energy is a key consideration, though increasing performance demands for some applications are making this more challenging. David Bell (Product Marketing at Tensilica, Cadence) presented the Tensilica NNE110… Read More

High Efficiency Edge Vision Processing Based on Dynamically Reconfigurable TPU Technology

While many tough problems relating to computing have been solved over the years, vision processing is still challenging in many ways. Cheng Wang, Co-Founder and CTO of FlexLogix Technologies, gave a talk on the topic of edge vision processing at Linley’s Spring 2022 conference. During that talk he referenced how Gerald Sussman… Read More

ML-Based Coverage Refinement. Innovation in Verification

We’re always looking for ways to leverage machine-learning (ML) in coverage refinement. Here is an intriguing approach proposed by Google Research. Paul Cunningham (GM, Verification at Cadence), Raúl Camposano (Silicon Catalyst, entrepreneur, former Synopsys CTO and now Silvaco CTO) and I continue our series on research… Read More

Quantum Computing Trends

Quantum Computing is the area of study focused on developing computer technology based on the principles of quantum theory. Tens of billions in public and private capital are being invested in quantum technologies. Countries across the world have realized that quantum technologies can be a major disruptor of existing businesses,… Read More

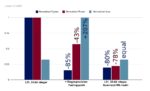

Optimizing AI/ML Operations at the Edge

AI/ML functions are moving to the edge to save power and reduce latency. This enables local processing without the overhead of transmitting large volumes of data over power-hungry and slow communication links to servers in the cloud. Of course, the cloud offers high performance and capacity for processing the workloads. Yet, … Read More

Webinar: From Glass Break Models to Person Detection Systems, Deploying Low-Power Edge AI for Smart Home Security

Moving deep learning from the cloud to the edge is the holy grail when it comes to deploying highly accurate, low-power applications. Market demand for edge AI continues to grow globally as new hardware and software solutions become more readily available, enabling companies of any size to easily implement deep learning solutions… Read More

Semidynamics Unveils 3nm AI Inference Silicon and Full-Stack Systems