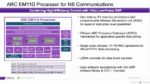

If you are like me, you will get a 5G phone because of the high bandwidth it offers. However, there is a lot more to 5G than just fast data. In fact, one of the appealing features of 5G is low-bandwidth communication. This is useful for edge devices that perform infrequent, low-volume data transfers and depend on long battery life. Prior… Read More

Tech Shows up for COVID-19: Time to Expand Horizons

Bring digital technology solutions to bear on more of our toughest societal problems

“We are all in this together.” The world faces 250,000 COVID-19 deaths, each a tragic human story. The pandemic will bring a litany of “lessons learned,” including lack of preparedness, slow response and uneven recovery. The rapid… Read More

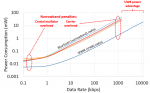

The Story of Ultra-WideBand – Part 5: Low power is gold

How ultra-wideband done right can do more with less energy

In the previous part, we discussed how the time-frequency duality can be used to reduce latency. When you compress a wireless transmission in time, you reduce the time it takes to hop from a transmitter to a receiver. Another very interesting capability enabled by the… Read More

5G Infrastructure Opens Up

It seemed we were more or less resigned to Huawei owning 5G infrastructure worldwide. Then questions about security came to the fore, Huawei purchases were put on hold (though that position is being tested outside the US) and an opportunity for other infrastructure suppliers (Ericsson, Nokia, etc.) has opened up again.

Building … Read More

5G SoCs Demand New Verification Approaches

Lately, I’ve been cataloging the number of impossible-to-verify technologies we face. All forms of machine learning and inference applications fall into this category. I’ve yet to see a regression test to prove a chip for an autonomous driving system will do the right thing in all cases. Training data bias is another interesting… Read More

The Story of Ultra-WideBand – Part 4: Short latency is king

How Ultra-wideband aligns with 5G’s premise

In Part 3, we discussed the time-frequency duality, or how time and bandwidth are interchangeable. If one wants to compress a wireless transmission in time, more frequency bandwidth is needed. This property can be used to increase the accuracy of ranging, as we saw in Part 3. Another very… Read More

The Story of Ultra-WideBand – Part 3: The Resurgence

In Part 2, we discussed the second false start of Ultra-WideBand (UWB), which leveraged over-engineered orthogonal frequency-division multiplexing (OFDM) transceivers, launched at the dawn of the Great Recession and was surpassed by a new generation of Wi-Fi transceivers. These circumstances spelled the end of the proposed applications… Read More

The Story of Ultra-WideBand – Part 2: The Second Fall

Over-engineered to perfection, outmaneuvered by Wi-Fi

In Part 1 of this series, we recounted the birth of wideband radio at the turn of the 20th century, and how superheterodyne radio killed wideband radios for messaging after 1920. But RADAR kept wideband research alive through World War II and the Cold War. Indeed, the story of… Read More

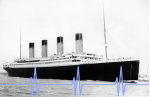

The Story of Ultra-WideBand – Part 1: The Genesis

In the middle of the night of April 14, 1912, the R.M.S. Titanic sent a distress message. It had just hit an iceberg and was sinking. Even though broadcasting an emergency wireless signal is common today, this was cutting-edge technology at the turn of the 20th century. This was made possible by the invention of a broadband radio developed… Read More

Edge Computing – The Critical Middle Ground

Ron Lowman, product marketing manager at Synopsys, recently posted an interesting technical bulletin on the Synopsys website entitled “How AI in Edge Computing Drives 5G and the IoT.” There’s been a lot of discussion recently about the emerging processing hierarchy of edge devices (think cell phone or self-driving car), cloud… Read More
