Ever wonder why coherent networks are needed beyond server design? The value of cache coherence in a multi-core or many-core server is now well understood. Software developers want to write multi-threaded programs for such systems and expect well-defined behavior when accessing common memory locations. They reasonably expect the same programming model to extend to heterogeneous SoCs, not only to the CPU cluster in the SoC but more generally. Consider a surveillance application built on an intelligent image-processing pipeline that feeds into inferencing and recognition to detect abnormal activity. The stages in such a pipeline share data, and that data must remain coherent. However, these components interface through a mix of coherent and non-coherent AMBA protocols: CHI, ACE and AXI. The Arteris IP Ncore network is the only coherent interconnect that can accomplish this objective.

Coherency in a Heterogeneous Design

An application like a surveillance camera depends on high-performance streaming all the way through the pipeline. A suspicious figure may be in frame only briefly, yet the system must still capture it and recognize a cause for concern. The inference rate, in frames per second, must be high enough to meet that goal and to let the camera track the detected figure.
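To make "high enough" concrete: at 30 frames/second (the rate used later in this article), each frame allows about 33 ms. The sketch below is a minimal illustration of that arithmetic. It assumes a fully pipelined design, where each stage works on a different frame at the same time, so throughput is bounded by the slowest stage. The stage latencies are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame at the given rate, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_budget(stage_latencies_ms: list[float], fps: float) -> bool:
    """In a fully pipelined design, stages process successive frames concurrently,
    so sustained throughput is limited by the slowest stage, not their sum."""
    return max(stage_latencies_ms) <= frame_budget_ms(fps)


# Hypothetical per-stage latencies (ms): CPU tiling, ISP, AI inference
stages = [8.5, 12.0, 30.0]
print(round(frame_budget_ms(30), 1))  # 33.3
print(fits_budget(stages, 30))        # True
```

Note that end-to-end latency is still the sum of the stages (50.5 ms here). Pipelining raises the frame rate but does not shorten the time from capture to detection, which is one reason every stage benefits from cached, coherent access to shared data.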

The imaging pipeline starts with the CPU cluster processing images, tiled across multiple parallel threads for maximum performance. To push performance further, memory accesses are cached as much as possible, so those caches must be kept coherent. The CPUs support CHI interfaces; so far, so good.
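The tiling pattern can be sketched in software as threads each writing a disjoint tile of one shared output buffer. This is an illustrative analogue only. The tile size, worker count and per-pixel operation (a simple inversion) are placeholders. In hardware, each CPU core may hold parts of `out` in its own cache, and coherence is what guarantees that whichever thread reads the assembled frame sees every tile's writes.

```python
from concurrent.futures import ThreadPoolExecutor


def make_tiles(width, height, tile):
    """Split a width x height frame into (x, y, w, h) tiles; edge tiles may be smaller."""
    return [(x, y, min(tile, width - x), min(tile, height - y))
            for y in range(0, height, tile)
            for x in range(0, width, tile)]


def process_tile(frame, out, rect):
    """Apply a placeholder per-pixel operation (8-bit inversion) to one tile."""
    x, y, w, h = rect
    for row in range(y, y + h):
        for col in range(x, x + w):
            out[row][col] = 255 - frame[row][col]


def process_frame(frame, tile=4, workers=4):
    height, width = len(frame), len(frame[0])
    out = [[0] * width for _ in range(height)]  # shared output buffer
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() drains the iterator so any exception in a worker is re-raised here
        list(pool.map(lambda r: process_tile(frame, out, r),
                      make_tiles(width, height, tile)))
    return out


print(len(make_tiles(1920, 1080, 256)))  # 40 tiles for a 1080p frame
```

Because the tiles never overlap, the threads need no locks among themselves. The only sharing that matters is between the writers and the eventual reader of `out`, which is exactly the kind of producer/consumer sharing a coherent interconnect handles in hardware.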

But image signal processing involves much more than reading images: demosaicing, color management, dynamic range management and more. These steps may be handled by a specialized GPU or DSP function, which must deliver the same caching performance boost so as not to slow the pipeline, and whose threads programmers expect to follow the same memory consistency model. Often this hardware function supports only an ACE interface. ACE is coherent but different from CHI, so the design now needs a coherent network that supports both.
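One way to see why a single network must handle several interface types is to tabulate how each AMBA protocol participates in coherence. The roles below follow the AMBA specifications: CHI and ACE agents hold snoopable caches, ACE-Lite agents can issue coherent requests but hold no snoopable cache, and plain AXI carries no coherence information. The `attachment` helper and its return strings are hypothetical and do not describe Ncore's actual configuration; the mapping of AXI agents to a proxy cache follows the description of Ncore later in this article.

```python
from enum import Enum


class Coherence(Enum):
    FULL = "full"  # agent holds caches that other agents' requests may snoop
    IO = "io"      # agent's requests snoop other caches; it holds no snoopable cache
    NONE = "none"  # interface carries no coherence information


# AMBA interface roles: (coherence level, how the protocol is carried)
PROTOCOL_ROLES = {
    "CHI": (Coherence.FULL, "layered, packet-based protocol"),
    "ACE": (Coherence.FULL, "AXI channels plus snoop channels AC, CR and CD"),
    "ACE-Lite": (Coherence.IO, "AXI channels plus coherence attributes, no snoop channels"),
    "AXI": (Coherence.NONE, "plain AXI channels"),
}


def attachment(protocol: str) -> str:
    """Hypothetical helper: how a coherent NoC might attach an agent of this protocol."""
    level, _transport = PROTOCOL_ROLES[protocol]
    if level is Coherence.FULL:
        return f"{protocol} coherent port (snooped)"
    if level is Coherence.IO:
        return f"{protocol} I/O-coherent port (not snooped)"
    return "bridge with proxy cache"


for name in PROTOCOL_ROLES:
    print(f"{name:8} -> {attachment(name)}")
```

CHI and ACE land in the same coherence class but are carried very differently on the wire, which is why supporting both takes more than one kind of coherent port.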

Those threads feed into the AI engine, which infers suspicious objects in images at, say, 30 frames/second, aiming to detect not only such an object but also its direction of movement. AI engines commonly support an AXI interface, which is widely used but not coherent. However, the control front-end to that engine must still see a coherent view of the processed image tiles streaming into the engine. Meeting that goal requires special support.
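The hazard with a non-coherent AXI reader can be shown in a few lines. This toy model assumes the CPU cache is write-back, so a processed tile can sit dirty in the cache while main memory still holds the old value. A plain AXI master reads memory directly, and nothing snoops the CPU cache on its behalf. The class and function names are illustrative only.

```python
class WriteBackCache:
    """Producer-side cache: writes stay in the cache until the line is evicted."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}  # addr -> value (dirty lines not yet written back)

    def write(self, addr, value):
        self.lines[addr] = value

    def evict(self, addr):
        if addr in self.lines:
            self.memory[addr] = self.lines.pop(addr)


def axi_read(memory, addr):
    """A plain AXI master reads memory directly; nothing snoops the CPU cache."""
    return memory.get(addr, 0)


memory = {0x100: 0}
cpu_cache = WriteBackCache(memory)
cpu_cache.write(0x100, 42)       # processed tile lands in the CPU cache
print(axi_read(memory, 0x100))   # 0: stale, dirty data is still in the cache
cpu_cache.evict(0x100)
print(axi_read(memory, 0x100))   # 42: visible only after write-back
```

Without hardware support, software must flush caches explicitly before the AI engine reads each tile, which costs time and is easy to get wrong. A coherent path removes that burden.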

The Arteris IP Ncore coherent network

The Arteris IP FlexNoC non-coherent network serves the connectivity needs of much of a typical SoC, the parts that do not need coherent memory sharing with CPUs and GPUs. The AI accelerator itself may be built on a FlexNoC network. But a connectivity solution is also needed to manage the coherent domain. For this, Arteris IP built its Ncore coherent NoC generator.

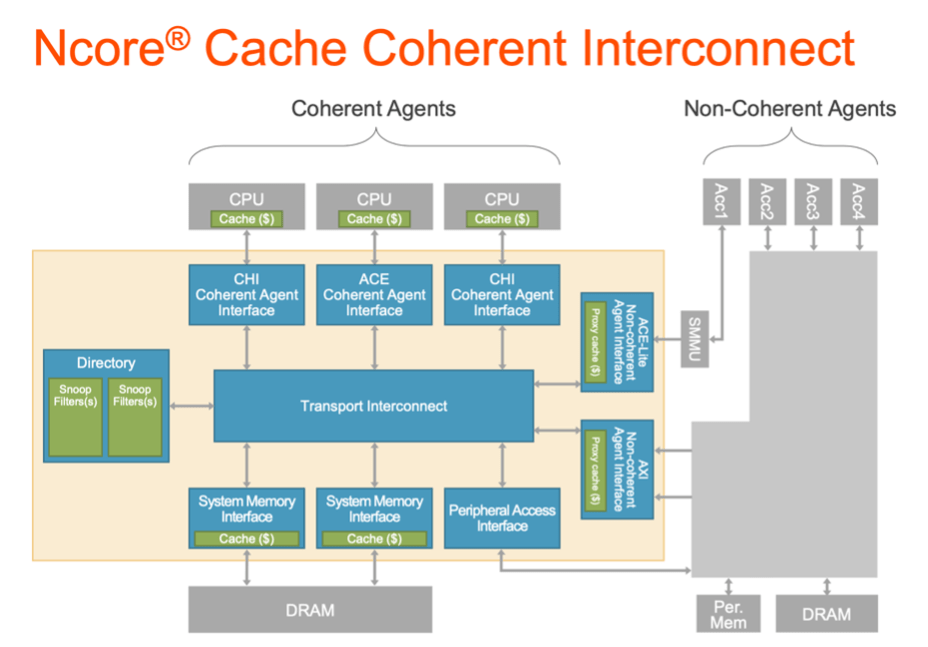

Think of Ncore as a NoC with all the usual advantages of such a network plus a couple of extra features. First, the network provides directory-based coherency management: all memory accesses within the coherent domain, such as the CPU and GPU clusters, adhere to the consistency model. Second, Ncore supports CHI and ACE interfaces. It also supports ACE-Lite interfaces with an embedded cache, which Arteris IP calls a proxy cache. A proxy cache can connect to an AXI bus in the non-coherent domain, supplementing the AXI transactions on the coherent side with the information required by the ACE-Lite specification. The proxy cache ensures that reads from and writes to it by the non-coherent domain are managed coherently.
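Ncore's internal directory design is not described here, so the following is only a toy model of the general idea. It assumes a textbook MSI-style write-invalidate protocol with whole-line writes: a directory records which caches hold each line, pulls dirty data from the owner when another agent reads, and invalidates other copies when an agent writes. `ProxyCache` is a hypothetical stand-in for a proxy cache that issues an AXI agent's reads and writes through the directory on its behalf.

```python
class Directory:
    """Toy directory: tracks which caches hold each line (MSI, write-invalidate)."""

    def __init__(self):
        self.memory = {}
        self.sharers = {}  # addr -> set of caches holding the line
        self.owner = {}    # addr -> cache holding the line Modified (dirty)

    def read(self, requester, addr):
        owner = self.owner.get(addr)
        if owner is not None and owner is not requester:
            self.memory[addr] = owner.downgrade(addr)  # write back dirty data, M -> S
            del self.owner[addr]
        self.sharers.setdefault(addr, set()).add(requester)
        return self.memory.get(addr, 0)

    def write(self, requester, addr):
        # Whole-line writes, so other copies (even a dirty one) can simply be dropped
        for cache in self.sharers.get(addr, set()) - {requester}:
            cache.invalidate(addr)
        self.sharers[addr] = {requester}
        self.owner[addr] = requester


class Cache:
    """A cache in the coherent domain (stand-in for a CHI or ACE agent)."""

    def __init__(self, directory):
        self.directory = directory
        self.lines = {}  # addr -> [state, value], state is "S" or "M"

    def read(self, addr):
        if addr not in self.lines:  # miss: ask the directory
            self.lines[addr] = ["S", self.directory.read(self, addr)]
        return self.lines[addr][1]

    def write(self, addr, value):
        if self.lines.get(addr, ["I"])[0] != "M":  # need exclusive ownership first
            self.directory.write(self, addr)
        self.lines[addr] = ["M", value]

    def downgrade(self, addr):
        self.lines[addr][0] = "S"
        return self.lines[addr][1]

    def invalidate(self, addr):
        self.lines.pop(addr, None)


class ProxyCache(Cache):
    """Hypothetical proxy cache: gives a non-coherent AXI agent a coherent view."""

    def axi_read(self, addr):
        return self.read(addr)

    def axi_write(self, addr, value):
        self.write(addr, value)


directory = Directory()
cpu = Cache(directory)                # e.g. a CPU cache on a CHI port
ai_front_end = ProxyCache(directory)  # AXI agent behind a proxy cache

cpu.write(0x100, 42)                  # CPU produces a tile; line held Modified
print(ai_front_end.axi_read(0x100))   # 42: directory pulls dirty data from the CPU
ai_front_end.axi_write(0x100, 7)      # AXI-side write invalidates the CPU's copy
print(cpu.read(0x100))                # 7
```

Compared with the stale-read case, the AXI agent's requests now pass through the directory, so it sees the CPU's dirty data and its own writes invalidate the CPU's copy.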

Bottom line: Ncore provides the only commercial solution for network coherency across CHI, ACE and AXI interfaces, the kinds of interfaces you will commonly find in most SoCs.

One Reply to “Coherency in Heterogeneous Designs”

You must register or log in to view/post comments.