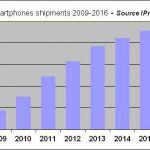

In a previous blog, I forecast that smartphone shipments would reach 430 million units in 2011. I was wrong! Apparently, I am no better than other analysts, as the actual figures for 2011 are more than slightly different: shipments topped 491.4 million units! After 304 million in 2010, that’s a 61.3 percent year-over-year increase, according to IDC. IPNEST has revised its smartphone shipment forecast for 2012-2016 to take these latest 2011 figures into account: we expect almost 1 billion smartphones to be shipped in 2016.

Coming back to the actual shipments: in 2011, one out of three wireless handsets shipped was a smartphone, compared with one out of five in 2010. What is the impact on our day-to-day business, IP and semiconductors?

Application processor market: this is the obvious first segment to look at, even if it’s not the only one. With an Average Selling Price (ASP) in the $20 range in 2011 for a smartphone application processor, this segment represented a market of about $10 billion in 2011. If we look at the Total Addressable Market (TAM), it is slightly smaller… and that is an understatement, as the difference between the total market and the TAM is made up of the A5 (and A4) shipments: Apple shipping application processors to… Apple. As Apple shipped about 60 million iPhones during 2011, the application processor TAM is equal to (490 - 60) million units * $20, or $8.6 billion, which is almost 3% of the total semiconductor (SC) market of $300 billion.
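The application processor arithmetic can be checked with a few lines of Python. This is only a back-of-the-envelope sketch using the rounded figures quoted in the text; the variable and function names are illustrative assumptions, not part of any IPNEST model.

```python
# Rounded 2011 figures quoted in the text (names are illustrative).
SMARTPHONES_MU = 490     # smartphone shipments, millions of units
IPHONES_MU = 60          # approximate iPhone shipments, millions of units
ASP_USD = 20             # application processor ASP, dollars
SC_MARKET_BUSD = 300     # total semiconductor market, billions of dollars


def market_busd(units_mu, asp_usd):
    """Market size in billions of dollars from millions of units and a unit price."""
    return units_mu * asp_usd / 1000


total_market = market_busd(SMARTPHONES_MU, ASP_USD)
# The TAM excludes Apple's captive supply (Apple selling to Apple).
tam = market_busd(SMARTPHONES_MU - IPHONES_MU, ASP_USD)
tam_share_pct = tam / SC_MARKET_BUSD * 100

print(f"Total application processor market: ${total_market:.1f} billion")
print(f"Addressable market (TAM):           ${tam:.1f} billion")
print(f"TAM share of the SC market:         {tam_share_pct:.1f}%")
```

With these inputs the total comes out at $9.8 billion (the "$10 billion" in the text is a rounding), the TAM at $8.6 billion, and the share at just under 3%.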

PMIC market: those who have read my previous post about the Power Management IC market have discovered, as I did, that this segment, even if it’s not as impressive as application processors, generates pretty decent sales, as the PMIC content has been evaluated at around $8 per smartphone (and $20 per Media Tablet!). The PMIC market in the smartphone segment alone can be evaluated at about $4 billion. If we add the Media Tablet segment, with 63 million units shipped in 2011 according to IDC, we add $1.26 billion to the PMIC segment. Thus, for the smartphone and Media Tablet segments combined, the PMIC TAM was above $5 billion in 2011. Pretty impressive for an SC segment almost neglected by IDC, Gartner et al., as they focus on the sexier application processor segment!
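The same kind of quick check works for the PMIC estimate. Again, this is a minimal sketch based only on the unit volumes and per-device PMIC content quoted in this post; the names are illustrative assumptions.

```python
# 2011 figures quoted in the text (names are illustrative).
SMARTPHONES_MU = 491.4       # smartphone shipments, millions of units
TABLETS_MU = 63              # Media Tablet shipments, millions of units
PMIC_PER_SMARTPHONE_USD = 8  # estimated PMIC content per smartphone, dollars
PMIC_PER_TABLET_USD = 20     # estimated PMIC content per Media Tablet, dollars

# Millions of units * dollars per unit = millions of dollars; divide by 1000 for billions.
pmic_smartphone_busd = SMARTPHONES_MU * PMIC_PER_SMARTPHONE_USD / 1000
pmic_tablet_busd = TABLETS_MU * PMIC_PER_TABLET_USD / 1000
pmic_total_busd = pmic_smartphone_busd + pmic_tablet_busd

print(f"Smartphone PMIC:   ${pmic_smartphone_busd:.2f} billion")
print(f"Media Tablet PMIC: ${pmic_tablet_busd:.2f} billion")
print(f"Combined PMIC TAM: ${pmic_total_busd:.2f} billion")
```

The smartphone part lands slightly under $4 billion and the tablet part at $1.26 billion, so the combined figure stays above $5 billion.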

MIPI IP: IPNEST’s analysis is that MIPI, like any emerging technology, is first used in the high-end market, that is, the smartphone segment. As with any new technology, integrating one of the MIPI specifications (CSI, DSI, DigRF, UniPro, LLI and more, see the MIPI organization website for more information) represents an investment: you need to understand the specification, design the function or acquire the IP externally, integrate it into the IC (it can be the application processor or one of the many peripheral ICs), and so on… But the many advantages generated by MIPI usage will certainly bring a high return on this investment, especially to the system integrator (manufacturing the smartphone) and ultimately to the end user, as MIPI allows for:

- Lower power consumption compared with other interfaces

- Standardization of the way the various peripheral devices, such as camera controller or display controller ICs, interface with the application processor, which makes the complete system easier to build thanks to the guaranteed compatibility between ICs compliant with the same interface specification.

If smartphone shipments are rocketing, that should be good for MIPI adoption, as more MIPI-powered ICs than expected will be manufactured. Then, when production volumes grow, it’s usual to see IC prices go down. When the price goes down, the extra cost associated with integrating a new technology tends to disappear. It’s a kind of virtuous cycle: more smartphones shipped means more MIPI-powered ICs manufactured at a more affordable price for the customer, and then more customers supporting the technology. Look at Qualcomm: a couple of years ago, the company was not supporting MIPI (but MDDI, a homemade competing specification). Now, Qualcomm has dropped MDDI and fully supports MIPI!

For more exhaustive information about MIPI, see MIPI Org or “MIPI IP Survey”

By Eric Esteve from IPnest