HSPICE users gathered in January 2012 at the HSPICE SIG (Special Interest Group) to talk about their experiences using this circuit simulator for a variety of IC and signal integrity issues. I wasn’t able to attend in person; however, I did watch the video and want to summarize what I heard:

Continue reading “HSPICE Users Talking about Their Circuit Simulation Experience”

Does 14nm magically put Intel back on the lead smartphone lap?

I’ve often wanted to publish a book with nothing but photos of police cars, so that people wouldn’t have to slow down and gawk at them when they have someone pulled over on the side of the freeway. Intel roadmaps seem to have the same effect on people. No matter what is on them, even if there’s nothing really new, they stop people in their tracks just to look.

At Mobile World Congress today, Intel’s Paul Otellini created the latest traffic jam by laying out the plans to move from the Atom Z2460 (Medfield) at 32nm into the Atom Z2580, targeting their new 14nm process in two years. Also, he previewed the Z2000, the first “value” tier push targeting lower cost implementations.

Intel firmly believes they can compete in mobile, and is betting the farm on 14nm to catch up. With roughly twice as many transistors to deal with compared to an ARM Cortex-A9 core, they’re looking to faster memory interfaces, FinFETs, and process shrinks to get it done.

The push to 14nm isn’t surprising at all: Intel has to fill Fab 42. It’s the only play. With the news of HP shrinking their way to greatness in the PC business, it will have to come from Atom, smartphones, and embedded … er, intelligent systems.

While the initial Atom Z2460 performance numbers are in the range of the Qualcomm MSM8960 (BrowserMark: 116425 vs. 110345), they are coming at substantially higher power consumption – about 1W versus something less than 750mW, maybe as low as 450mW. The performance/watt figures still favor the ARM camp, by a lot, and that ecosystem isn’t standing still with things like the Cortex-A7 coming.
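To make the performance/watt gap concrete, here is a rough back-of-the-envelope sketch using only the figures quoted above. The variable and function names are my own, the power numbers are the loose estimates from the text rather than measurements, and dividing a BrowserMark score by watts is just one crude way to express efficiency.

```python
# BrowserMark scores and power estimates quoted in the paragraph above.
ATOM_Z2460_SCORE = 116425
MSM8960_SCORE = 110345

ATOM_POWER_W = 1.0          # "about 1W"
MSM8960_POWER_HIGH_W = 0.75  # "something less than 750mW"
MSM8960_POWER_LOW_W = 0.45   # "maybe as low as 450mW"


def score_per_watt(score: float, watts: float) -> float:
    """Return benchmark points per watt (a crude efficiency metric)."""
    return score / watts


atom_ppw = score_per_watt(ATOM_Z2460_SCORE, ATOM_POWER_W)

# Efficiency advantage of the MSM8960 at both ends of its power estimate.
advantage_low = score_per_watt(MSM8960_SCORE, MSM8960_POWER_HIGH_W) / atom_ppw
advantage_high = score_per_watt(MSM8960_SCORE, MSM8960_POWER_LOW_W) / atom_ppw

print(f"MSM8960 advantage: {advantage_low:.2f}x to {advantage_high:.2f}x")
```

Under these assumptions the ARM-based part comes out somewhere between roughly 1.26x and 2.11x better in score per watt, depending on which end of the power estimate you believe.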

Intel has a huge gap to close here, and it’s good to see they are competing to get back on the lead lap, but they have a lot of work left to do.

Yalta is Dead! Synopsys offensive in VIP restarts the cold war

Last year, you could claim (like I did in this blog) that Cadence was making money with a large VIP portfolio, while Synopsys was managing sales of a large Design IP portfolio (thanks to a successful acquisition strategy in the 2000s). But the latest acquisitions made by Synopsys of VIP-centric companies like nSys or ExpertIO should have warned us (and Cadence, by the way): the EDA and IP-centric company is investing in the VIP field! After the time of acquisitions comes the new product launch: today the market is discovering… Discovery.

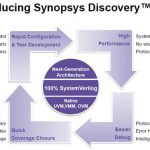

It looks like this product launch is a real offensive, and much more than just an announcement. Synopsys Discovery VIP is written entirely in SystemVerilog, includes native support for UVM, VMM, and OVM, is compatible with all related verification environments, and supports all major simulators, including Cadence Incisive (!). The product is a breakthrough in the VIP jungle. Because of the multiple competing methodologies mentioned above (UVM, VMM and OVM), the only way for a VIP product to support all of them (and it was a strong market requirement) was to use wrappers. Wrappers, on top of making the product inelegant, made it slower. Shortening simulation run time, with performance improved by 3 to 6X, is a strong sales argument. I am sure that Synopsys’ sales force will be the first to benefit from this argument, the second being the customers!

Such an argument is based on an identified issue that every design team trying to complete an SoC design, every marketing team fighting with time-to-market issues, and every shareholder expecting a high return on investment knows very well: the cost and elapsed time due to Verification. In fact, I am not so sure that the basic shareholder understands anything about Verification, but he should understand ROI! The next picture is useful to understand the cost breakdown associated with Verification. If you look at the middle left box, you see a 3X increase in cost (or license count, or resources) for almost every task (except “Tool, Support and Service”, at only 20%, so I suspect that Synopsys will sell Discovery at a 20% higher price than the previous solution?). So a 3 to 6X run time improvement is welcome to keep the design schedule, and consequently the time to market, within reasonable limits.

If you look at the product itself, Synopsys includes with Discovery VIP a Protocol Analyzer, which enables engineers to quickly understand, identify and debug protocols in their designs, along with debug and coverage management features, so the complete solution is built into a single product.

If we look at the Synopsys VIP portfolio, we can see that the war with Cadence will be face to face, protocol by protocol, as they both address the same customer needs. The exception is Memory Models, which Cadence has marketed since the Denali acquisition and where Denali had a monopoly for a long time. But across the rest of the Verification IP market, whose size is still to be evaluated but is probably in the $100 to $150 million range, we can expect to see an interesting battle. I am just waiting to see what Cadence’s answer to Synopsys’ Discovery offensive will be!

From Eric Esteve from IPnest

ISSCC 2012: Silicon Systems for Sustainability!

What can we do for Earth’s sustainability? Besides sorting our garbage and recycling our lawn clippings? Sustainability must be the paramount theme for the future of human society! Semiconductors for a better life! Well, according to my kids, if you take away their smart phones there is no life!

Continue reading “ISSCC 2012: Silicon Systems for Sustainability!”

Universal Flash Storage: Webinar

There has been a general trend for over a decade now towards the use of very fast serial interfaces instead of wide parallel interfaces. This has been driven by a number of different factors, including the lack of pins on an SoC, the difficulty of keeping wide parallel interfaces free of skew, limitations on printed circuit board wiring space and so on. Apart from the difficulty of building very fast serial interfaces, almost everything is upside.

JEDEC and MIPI (the Mobile Industry Processor Interface) were both working on high-performance serial I/O standards for data transfers between portable consumer devices. In 2010 these efforts were merged to create the Universal Flash Storage (UFS) standard. UFS is designed to offer a fast, reliable and simple means of supporting storage requirements in portable applications such as smart phones. It supports both embedded and removable card applications. Since it is intended primarily for portable applications, the standard features low power consumption.

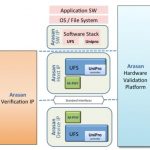

Next week Arasan is having two live seminars on UFS and the Arasan UFS solution:

- UniPro controller

- M-PHY interface block

- UFS block

- UFS and UniPro drivers

- Software stack

- Hardware verification IP

- Hardware validation platform

The live webinar will be on February 28th at 6PM PST (a useful time for people attending from Asia) and again the next morning, February 29th, at 10AM PST. Register HERE.

Synopsys at DVCon: tutorial, lunch, keynote, exhibits and more

DVCon is next week, which I’m sure you know already if you are in verification. Of course Synopsys has a rich product portfolio in verification and verification IP (VIP), so it is pretty visible at the show.

On Wednesday they are sponsoring lunch. Several Synopsys customers will talk about their view of how the verification landscape is changing. Lunch will be in the Pine/Cedar ballroom from 12.30 onwards. No need to register specially if you are attending DVCon.

Following the lunch, Aart de Geus, CEO of Synopsys, will give the DVCon keynote Systemic Collaboration: Principles for Success in IC Design, which looks at the big picture view of how the combination of technology and economics drives everything.

On Thursday, from 1.30 to 3.30, there is a Synopsys tutorial New Levels of Verification IP Productivity for SoC Verification. You need to register here for this if you want to attend. The tutorial is fairly technical and is focused on new features added to VIP and how to enable VIP in different environments. There is a demo of debugging at the protocol level (that is, at a much higher level than the actual signals and registers).

Of course Synopsys has a booth in the exhibit hall, number 1105. Exhibits are open 3.30 to 6.30 on Tuesday and 4.30 to 7.00 on Wednesday. You can register for just the exhibits for free.

The main DVCon website is here.

Oh, and while I’m here, Monday evening I’m moderating a panel session Hardware/Software Co-Design from a Software Perspective. All the details are in my earlier blog on the subject here.

Will HP Seek to Merge with Dell?

It has come to that point in time when former great computer companies in the twilight of their years should consider a marriage of convenience that ends

Continue reading “Will HP Seek to Merge with Dell?”

Call for Papers: International Gathering for Application Developers!

SemiWiki would like to invite you, your co-workers, and your company to participate in the ERSA Conference in Las Vegas, July 16-19, 2012. I will be there and it would be a pleasure to work with you on this very important event!

ERSA stands for Engineering of Reconfigurable Systems and Algorithms. Starting this year, the emphasis will be on commercial and industrial challenges. We will have an application developers’ session with many hot topics and featured sections. The aim is to connect researchers with developers and entrepreneurs, and developed countries with emerging markets. The industrial content of ERSA is not strictly limited to reconfigurable computing.

The Hot Topic: “Reconfigurable Computing Application Development for Heterogeneous Run-time Environments”. The focus is on the challenges, tools, available technologies and opportunities in developing and supporting applications, both academic and commercial, that involve reconfigurable computing technologies across the mobile, embedded, and HPC domains.

Featured sections:

“Hardware security and trust in reconfigurable heterogeneous systems”

“Developing IP cores and scalable libraries for heterogeneous systems”

You can arrange a demo, exhibition, seminar, tutorial, etc. Note that there are over 2000 attendees in total (ERSA is a part of Worldcomp), of whom around 400 have a strong hardware design background. This means high visibility for your company.

Note also that early advertising helps people to plan their travel to visit your presentation.

For more details, please visit the ERSA website at http://ersaconf.org/ersa12.

If you are interested, please contact org@ersaconf.org to discuss further details.

ERSA conference is also available as a Thread at SemiWiki:

http://www.semiwiki.com/forum/f2/international-conference-engineering-reconfigurable-systems-algorithms-1201.html, you can add comments there.

SemiWiki Blog:

http://www.semiwiki.com/forum/content/996-premier-international-gathering-%85-application-developers-ersa.html, forward to colleagues.

Seminar on IC Yield Optimization at DATE on March 14th

My first chip design at Intel was a DRAM, and we had a 5% yield problem caused by electromigration issues. Yes, you can have EM issues even with 6um NMOS technology. We had lots of questions but precious few answers on how to pinpoint and eliminate the source of yield loss. Fortunately, with the next generation of DRAM quickly introduced, this yield issue became less urgent.

Continue reading “Seminar on IC Yield Optimization at DATE on March 14th”

Computer Architecture and the Wall

There is a new edition, the 5th, of Hennessy and Patterson’s book Computer Architecture: A Quantitative Approach.

There is lots of fascinating stuff in it, but what is especially interesting to those of us who are not actually computer architects is the big picture: what they choose to cover. Previous editions of the book have emphasized different things, depending on what the issues of the day were (RISC vs CISC in the first edition, for example). The more recent editions have mostly focused on instruction-level parallelism, namely how to wring more performance from a microprocessor while keeping binary compatibility. I was at the Microprocessor Design Forum years ago and a famous computer architect, I forget who, said that all of the work on computer architecture over the years (long pipelines, out-of-order execution, branch prediction, etc.) had sped up microprocessors by 6X (Hennessy and Patterson reckon 7X in the latest edition of their book). All the remaining 1,000,000X improvement had come from Moore’s law.

If there are a few themes in the latest edition they are:

- How to do computation using the least amount of energy possible (battery life for your iPad, electricity and cooling costs for your warehouse-scale cloud computing installation)?

- How can we make use of very large numbers of cores efficiently?

- How can we structure systems to use memory efficiently?

The energy problem is acute for SoC design because without some breakthroughs we are going to run head on into the problem of dark silicon: we can design more stuff, especially multicore processors, onto a chip than we can power up at the same time. It used to be that power was free and transistors were expensive but now transistors are free and power is expensive. It is easy to put more transistors on a chip than we can power on.

The next problem is that instruction-level parallelism has pretty much reached its limit. In the 5th edition it is relegated to a single chapter, as opposed to being the focus of the book in the 4th edition. We have parallelism at the multicore SoC level (e.g. iPhone) and at the cloud computing level (e.g. Google’s 100,000 processor datacenters). But for most stuff we simply don’t know how to exploit the architecture. Above 4 cores most algorithms see no speedup if you add more, and in many cases actually slow down, running more slowly on 16 cores than on 1 or 2.
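One way to see how adding cores can make things slower is a toy model: Amdahl's law with an extra cost that grows with the number of cores (synchronization, communication, contention). This is only an illustrative sketch; the model, the function name, and the parameter values (90% parallel code, 6% coordination cost per extra core) are my own assumptions, picked so the curve peaks at 4 cores, and are not taken from the book.

```python
def speedup(cores: int,
            parallel_fraction: float = 0.9,
            overhead_per_core: float = 0.06) -> float:
    """Speedup over one core: Amdahl's law plus a linear coordination cost.

    Both default parameters are hypothetical values chosen for illustration.
    """
    serial_time = 1.0 - parallel_fraction
    parallel_time = parallel_fraction / cores
    coordination_time = overhead_per_core * (cores - 1)
    return 1.0 / (serial_time + parallel_time + coordination_time)


for n in (1, 2, 4, 8, 16):
    print(f"{n:2d} cores: {speedup(n):.2f}x")
```

With these made-up parameters the speedup is best at 4 cores, falls off at 8, and by 16 cores the program runs slower than it did on a single core. Real workloads will have very different numbers, but the shape of the curve is the point.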

Another old piece of conventional wisdom (just read anything about digital signal processing) is that multiplies are expensive and memory access is cheap. But a modern Intel microprocessor does a floating point multiply in 4 clock cycles but takes 200 to access DRAM.
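Taking those two cycle counts at face value, a quick sketch shows how lopsided the trade-off has become. The names below are my own, and the model is deliberately crude: it ignores caches, pipelining and overlapping of memory requests, all of which matter a great deal in practice.

```python
# Cycle counts quoted in the paragraph above.
FP_MULTIPLY_CYCLES = 4
DRAM_ACCESS_CYCLES = 200

# How many back-to-back multiplies fit in the time of one DRAM access.
multiplies_per_dram_access = DRAM_ACCESS_CYCLES // FP_MULTIPLY_CYCLES


def cheaper_to_recompute(multiplies_needed: int) -> bool:
    """True if recomputing a value costs fewer cycles than one DRAM fetch."""
    return multiplies_needed * FP_MULTIPLY_CYCLES < DRAM_ACCESS_CYCLES


print(multiplies_per_dram_access)   # 50
print(cheaper_to_recompute(10))     # True
```

In other words, under these simplified assumptions a lookup table that misses all the way to DRAM only wins if recomputing the value would take about 50 multiplies or more, which turns the old DSP rule of thumb on its head.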

These 3 walls, the power wall, the ILP wall, and the memory wall, mean that whereas CPU performance used to double every year and a half, it now takes over 5 years. Solving this problem is getting harder too. Chips are too expensive for people to build new architectures in hardware. Compilers used to be thought of as more flexible than the microprocessor architecture, but now it takes a decade for new ideas in compilers to get into production code, whereas the microprocessor running the code will go through 4 or 5 iterations in that time. So there is a crisis of sorts because we don’t know how to program the types of microprocessors that we know how to design and operate. We live in interesting times.
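To put the slowdown in perspective, the doubling periods above can be converted into annual growth rates and into total growth over a decade. This is a small sketch with function names of my own choosing; it treats 5 years as the new doubling period, although the text says "over 5 years", so the second set of numbers is an optimistic bound.

```python
def annual_growth(doubling_years: float) -> float:
    """Yearly growth rate implied by doubling every `doubling_years` years."""
    return 2 ** (1 / doubling_years) - 1


def growth_over(years: float, doubling_years: float) -> float:
    """Total performance multiple after `years` at the given doubling period."""
    return 2 ** (years / doubling_years)


for label, period in (("old", 1.5), ("new", 5.0)):
    print(f"{label}: {annual_growth(period):.1%} per year, "
          f"{growth_over(10, period):.0f}x per decade")
```

Doubling every 18 months works out to about 59% per year and roughly 100x per decade; doubling every 5 years is about 15% per year and only 4x per decade.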