The best graphics chip is the one seen the most

If I say “graphics chip”, most techies will say NVIDIA or AMD. But in the new post-PC world, neither of these players holds the key to the future. One that does is a little company making 43 cents on every latest-generation iPad and iPhone. Another is designing its own approach. Should you care what graphics is in your phone?

MEMS and IC Co-design

This morning I attended a webinar on MEMS and IC co-design from a company called SoftMEMS along with Tanner EDA. I learned that you can co-design MEMS and ICs using either a bottom-up or a top-down methodology, and that this particular flow has import/export options to fit in with your mechanical simulation tools (Ansys, Comsol, Open Engineering) as well.


Oasys Gets Funding from Intel and Xilinx

Oasys announced that it closed its series B funding round with investments from Intel Capital and Xilinx. The fact that any EDA company has closed a funding round is newsworthy these days; companies running out of cash and closing their doors seems to be the more common story.

Oasys has been relatively quiet, which some people have taken to mean that nobody is using RealTime Designer, their synthesis tool. But in fact they have announced that the #2-4 US semiconductor companies, namely Texas Instruments, Qualcomm and Broadcom (via its acquisition of Netlogic), are customers. So is Xilinx, the #1 FPGA vendor (or vendor of programmable platforms, as they seem to want to be known). With Intel on board, Oasys are now in the enviable position of having relationships with the top 4 US semiconductor vendors and the top FPGA vendor. These are the companies doing many of the most advanced designs today.

On the SoC side, Oasys have tapeouts at both 45nm and 28nm already. Ramon Macias of Netlogic (now part of Broadcom) said publicly nearly a year ago that they had already taped out their first 45nm design and were now using RealTime Designer on 28nm designs. On the programmable platform side, Xilinx licensed Oasys’s technology a couple of years ago and have been using it internally. They have “achieved excellent results across a wide range of designs.”

Chip Synthesis is a fundamental shift in how synthesis is applied to the design and implementation of integrated circuits (ICs). Traditional block-level synthesis tools do a poor job of handling chip-level issues. RealTime Designer is the first design tool for physical register transfer level (RTL) synthesis of 100-million-gate designs and produces better results in a fraction of the time needed by traditional logic synthesis products. It features a unique RTL placement approach that eliminates unending design closure iterations between synthesis and layout.

EDA Industry Talks about Smart Phones and Tablets, Yet Their Own Web Sites are Not Mobile-friendly

As a blogger I write weekly about the EDA industry, and our industry certainly enables products like Smart Phones and Tablets to exist at all. But if we really believe in these mobile devices, what should our own web sites look like on one?

It’s a simple question, yet I first must define mobile-friendly before sharing what I discovered. Here’s what I consider to be a mobile-friendly web site:


Mobile-friendly web site design is not:

- An identical experience to the desktop.

Now that we know what mobile-friendly web site design is all about, let’s see if any EDA company has optimized its web site so that mobile visitors have a pleasant experience. Today I visited the following sites and can report that NO major EDA company has a mobile-friendly web site. What a disappointment. Kudos to ARM for leading the way with a mobile-friendly web site.

| EDA and IP Sites | Mobile Friendly |
|---|---|
| www.synopsys.com | |
| www.cadence.com | |
| www.mentor.com | |
| www.ansys.com | |
| www.atrenta.com | |
| www.tannereda.com | |
| www.agilent.com | |
| www.aldec.com | |
| www.arm.com | |
| www.apsimtech.com | |
| www.atoptech.com | |
| www.berkeley-da.com | |
| www.calypto.com | |
| www.chipestimate.com | |
| www.ciranova.com | |
| www.eve-team.com | |
| www.forteds.com | |
| www.gradient-da.com | |
| www.helic.com | |
| www.icmanage.com | |
| www.jasper-da.com | |
| www.lorentzsolution.com | |
| www.methodics-da.com | |
| www.nimbic.com | |
| www.oasys-ds.com | |
| www.pulsic.com | |
| www.realintent.com | |
| www.sigrity.com | |
| www.solidodesign.com | |
| www.verific.com | |

I could’ve researched more EDA and IP sites, but I think you can see the clear trend here: we talk about the mobile industry but don’t apply mobile-friendly design to our own web sites.

What about the media sites that write about our industry? They didn’t fare much better on being mobile-friendly:

| EDA Media Site | Mobile Friendly |
|---|---|
| www.semiwiki.com | |
| www.eetimes.com | |
| www.garysmitheda.com | |
| www.marketingeda.com | |
| www.eejournal.com/design/fpga | |
| www.edacafe.com | |
| www.deepchip.com | |
| www.chipdesignmag.com | |
| www.dac.com | |

The one media site that is mobile-friendly belongs to me, and I converted it in about one hour of effort.

High-volume sites are mostly optimized, with a few notable exceptions:

| Popular Web Sites | Mobile Friendly |
|---|---|
| www.apple.com | |
| www.tsmc.com | |
| www.intel.com | |
| www.samsung.com | |
| www.cnn.com | |
| www.google.com | |
| www.facebook.com | |
| www.twitter.com | |
| www.techcrunch.com | |
| www.engadget.com | |

Making a Web Site Mobile Friendly

Web sites that are dynamic and template-driven can be adapted quickly using Cascading Style Sheets (CSS), whose media queries check the size of the browser window and apply formatting specific to it. For those of you who are curious and a bit geeky, the basic approach is a style sheet that checks the orientation and size of the browser for three configurations: Smart Phones, Tablets, and Desktop.
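
In a real site this logic lives in CSS `@media` rules such as `@media (max-width: 480px)`; the Python sketch below only models the decision those rules make, so the three-way selection can be tested. The breakpoints (480 and 1024 CSS pixels) and the function name are assumptions chosen for illustration, not values from the article.

```python
# Assumed breakpoints in CSS pixels; real sites tune these per design.
PHONE_MAX_WIDTH = 480
TABLET_MAX_WIDTH = 1024


def choose_layout(width: int, height: int) -> tuple[str, str]:
    """Model three width-based media-query bands plus the CSS orientation feature."""
    # CSS treats the viewport as portrait when height >= width, landscape otherwise.
    orientation = "portrait" if height >= width else "landscape"
    if width <= PHONE_MAX_WIDTH:
        device = "smartphone"
    elif width <= TABLET_MAX_WIDTH:
        device = "tablet"
    else:
        device = "desktop"
    return device, orientation


for w, h in [(320, 480), (768, 1024), (1280, 800)]:
    print(w, h, choose_layout(w, h))
```

Keying the bands on width alone keeps the rules simple; orientation is reported separately so a style sheet can rearrange content within a band.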

Beyond CSS, there is one more trick that you have to add in the header of each web page to force the mobile browser to tell you its real dimensions.
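
The header trick is most likely the standard viewport meta tag, `<meta name="viewport" content="width=device-width, initial-scale=1">`; the exact tag the author used is not shown, so treat that as an assumption. Without it, many mobile browsers lay the page out on a wide virtual viewport (980 CSS pixels is the value iOS Safari has used; other browsers differ) and zoom out to fit, so width-based style rules see a desktop-sized window. This simplified model, with a hypothetical function name, shows the effect.

```python
# Assumed default layout width used when a page has no viewport meta tag.
DEFAULT_LAYOUT_WIDTH = 980


def css_viewport_width(physical_px: int, device_pixel_ratio: float,
                       has_viewport_meta: bool) -> int:
    """Width in CSS pixels that media queries would see, in this simplified model."""
    if not has_viewport_meta:
        # The browser pretends to be a desktop-width window and scales the page down.
        return DEFAULT_LAYOUT_WIDTH
    # width=device-width: CSS pixels = physical pixels / device pixel ratio.
    return round(physical_px / device_pixel_ratio)


# A screen 640 physical pixels wide at a pixel ratio of 2 (e.g. an iPhone 4):
print(css_viewport_width(640, 2.0, has_viewport_meta=False))
print(css_viewport_width(640, 2.0, has_viewport_meta=True))
```

With the tag, that phone reports 320 CSS pixels and phone-sized style rules can apply; without it, the page is laid out as if on a 980-pixel window.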

Hopefully I can report back one year from now and show some marked improvement from the EDA and IP industries in making their web sites mobile-friendly.

EDPS: SoC FPGAs

Mike Hutton of Altera spends most of his time thinking a couple of process generations ahead. So a lot of what he worries about is not so much the fine-grained architecture of what they put on silicon, but rather how users are going to get their systems implemented. 2014 is predicted to be the year in which over half of all FPGAs will feature an embedded processor, and at the higher end of Altera’s and Xilinx’s product lines the proportion is already well over that. Of course SoCs are everywhere, both in regular silicon (Apple, Nvidia, Qualcomm…) and in FPGA SoCs from Xilinx, Altera and others.

The challenge is that more and more of each system is software, but the traditional FPGA programming model is hardware: RTL, state machines, datapaths, arbitration, buffering, and high parallelism. But software guys are not going to learn Verilog, so there is a need for a programming model that represents an FPGA as a processor with hardware accelerators, or as a configurable multi-core device. That means taking a software-centric view of the world while still being able to build the FPGA so that the entire system meets its performance targets.

There have been many attempts to compile C/C++/SystemC into gates (Forte, Catapult, C2S, Synphony, AutoESL…), but these only really work well for for-loop-type algorithms that can be unrolled, such as FIR filters. They don’t work so well for complex algorithms. What is really needed is to analyze the software to find the bottlenecks and then “automatically” build hardware to make those parts run faster.
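
To see why FIR filters suit this style of tool, here is a minimal Python FIR. Its inner loop has a fixed trip count (the number of taps), so a high-level synthesis tool can unroll it into parallel multiply-accumulate hardware. The second function writes that unrolling out by hand for a hypothetical 3-tap filter; it illustrates the idea and is not the output of any of the tools named above.

```python
def fir(samples: list[float], taps: list[float]) -> list[float]:
    """Direct-form FIR filter: y[n] = sum over k of taps[k] * x[n - k]."""
    out = []
    for n in range(len(samples)):
        acc = 0.0
        # Fixed trip count (len(taps)): this is the loop an HLS tool can fully unroll.
        for k in range(len(taps)):
            if n - k >= 0:
                acc += taps[k] * samples[n - k]
        out.append(acc)
    return out


def fir3_unrolled(samples: list[float], t0: float, t1: float, t2: float) -> list[float]:
    """The same filter with the tap loop unrolled: three multipliers and an adder chain."""
    out = []
    x1 = x2 = 0.0  # delay-line registers holding x[n-1] and x[n-2]
    for x0 in samples:
        out.append(t0 * x0 + t1 * x1 + t2 * x2)
        x2, x1 = x1, x0  # shift the delay line by one sample
    return out


print(fir([1.0, 0.0, 0.0, 0.0], [1.0, 2.0, 3.0]))  # impulse response = the taps, then zero
```

A complex algorithm with data-dependent loop bounds and branches has no such fixed structure to unroll, which is why these tools struggle there.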

The most promising approach at present seems to be OpenCL, a programming model developed by the Khronos Group to support multicore programming and silicon acceleration. From a single source it can map an algorithm onto a CPU and GPU, onto an SoC and an FPGA, or just directly onto an FPGA. There is a natural separation between the code that runs on accelerators and the code that manages the accelerators (which can run on any conventional processor).
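
The host/kernel split can be modelled in plain Python. This is not the OpenCL API: OpenCL's `get_global_id` is emulated by passing the work-item index explicitly, the "device" is just a loop, and all function names are hypothetical. The sketch only shows the shape of such a program: a small data-parallel kernel written once, and host code that owns the buffers, picks the global work size, and launches the kernel.

```python
from collections.abc import Callable, Sequence


def vector_add_kernel(gid: int, a: Sequence[float], b: Sequence[float],
                      out: list[float]) -> None:
    """Kernel: the code that would run on the accelerator, once per work-item."""
    out[gid] = a[gid] + b[gid]


def enqueue_1d(kernel: Callable[..., None], global_size: int, *args) -> None:
    """Stand-in for launching a kernel over a 1-D index space (an NDRange)."""
    # A real runtime may run work-items in parallel and in any order,
    # so the kernel must not depend on the order of gid values.
    for gid in range(global_size):
        kernel(gid, *args)


def host_vector_add(a: Sequence[float], b: Sequence[float]) -> list[float]:
    """Host: allocates the output buffer, launches the kernel, returns the result."""
    out = [0.0] * len(a)
    enqueue_1d(vector_add_kernel, len(a), a, b, out)
    return out


print(host_vector_add([1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))
```

Only the kernel would need to be retargeted to a GPU or FPGA; the host side stays ordinary processor code.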

Of course, software is going to be the critical path in the schedule if these approaches are not also merged with virtual platform technology so that software development can proceed before hardware is available. Sometimes the software load already exists, since software is longer-lived than hardware and may last for 4 or 5 hardware generations. But if it is being created from scratch, it may have a two-year schedule, meaning that it is being targeted at a process generation that doesn’t yet have any silicon at all.

EDPS: Parallel EDA

EDPS was last Thursday and Friday in Monterey. I think that this is a conference that more people would benefit from attending. Unlike some other conferences, it is almost entirely focused on user problems rather than deep dives into topics of limited interest. Most of the presentations are more like survey papers and fill in gaps in areas of EDA and design methodology that you probably feel you ought to know more about but don’t.

For example, Tom Spyrou gave an interesting perspective on parallel EDA. He is now at AMD, having spent most of his career in EDA companies: poacher turned gamekeeper, if you like. So he gets to AMD, and the first thing that he notices is that all the multi-thread features that he spent the previous few years implementing are turned off almost all the time. The reality of how EDA tools are run in a modern semiconductor company makes those features hard to take advantage of.

AMD, for example, has about 20,000 CPUs available in Sunnyvale. They are managed by LSF, and people are encouraged to allocate machines fairly across the different groups. One result is that jobs requiring multiple machines simultaneously don’t work well: machines need to be used when they become available, and waiting for a whole cohort of machines is not effective. It is also hard to take advantage of the best machine available, rather than one that has precisely the resources requested.

So given these realities, what sort of parallel programming actually makes sense?

The simplest case is where there is non-shared memory and coarse-grained parallelism with separate processes. If you can do this, then do so. DRC and library characterization fit this model.
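
A minimal sketch of the coarse-grained case, assuming each job (say, characterizing one library cell) can run as a completely separate OS process with its own memory. The job body is a hypothetical stand-in that just reports the length of the cell name; on a farm managed by LSF, each of these would more likely be a separately submitted job than a local child process.

```python
import subprocess
import sys

# Hypothetical job body: a stand-in for real per-cell characterization work.
JOB_SCRIPT = "import sys; cell = sys.argv[1]; print(cell, len(cell))"


def run_independent_jobs(cells: list[str]) -> dict[str, int]:
    """Run one separate OS process per cell and collect each job's result."""
    # Launch every job at once: they share no memory, so no coordination is needed.
    procs = [
        subprocess.Popen([sys.executable, "-c", JOB_SCRIPT, cell],
                         stdout=subprocess.PIPE, text=True)
        for cell in cells
    ]
    results = {}
    for proc in procs:
        out, _ = proc.communicate()  # wait for this job and read its output
        cell, value = out.split()
        results[cell] = int(value)
    return results


print(run_independent_jobs(["INV_X1", "NAND2_X1"]))
```

Because the jobs never talk to each other, each one can start whenever a machine frees up, which fits the scheduling reality described above.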

The next simplest case is when shared memory is required but it is almost all read-only access. A good example of this is doing timing analysis for multiple corners. Most of the data is the netlist and timing arcs. The best way to handle this is to build up all the data, and then fork off the separate processes to do the corner analysis using copy-on-write. Since most of the pages are never written then most will be shared and the jobs will run without thrashing.
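
A minimal, POSIX-only sketch of this fork-after-build pattern. The timing database and the corner derating factors are hypothetical stand-ins; the point is the structure: the parent builds the read-mostly data once, each forked child sees it through copy-on-write pages and analyzes one corner, and results come back over a pipe. One caveat: CPython updates reference counts even on reads, which dirties some pages, so sharing in Python is less complete than it would be in a C or C++ tool.

```python
import json
import os

# Hypothetical corner derating factors (illustrative values only).
CORNERS = {"ss": 1.25, "tt": 1.00, "ff": 0.80}


def build_timing_db() -> dict[str, float]:
    """Stand-in for a large, read-mostly netlist/timing-arc database (delays in ps)."""
    return {f"arc{i}": 10.0 + (i % 7) for i in range(100_000)}


def worst_arc_delay(db: dict[str, float], derate: float) -> float:
    # Read-only walk over data that the parent built before fork().
    return max(db.values()) * derate


def analyze_corners_forked(db: dict[str, float],
                           corners: dict[str, float]) -> dict[str, float]:
    children = {}
    for name, derate in corners.items():
        r, w = os.pipe()
        pid = os.fork()  # the child starts with copy-on-write pages of db
        if pid == 0:
            try:
                os.close(r)
                with os.fdopen(w, "w") as out:
                    json.dump({"corner": name, "worst_ps": worst_arc_delay(db, derate)}, out)
            finally:
                os._exit(0)  # never fall back into the parent's code path
        os.close(w)  # the parent keeps only the read end
        children[pid] = r
    results = {}
    for pid, r in children.items():
        with os.fdopen(r) as inp:
            msg = json.load(inp)
        os.waitpid(pid, 0)
        results[msg["corner"]] = msg["worst_ps"]
    return results


print(analyze_corners_forked(build_timing_db(), CORNERS))
```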

The next most complex case is when shared memory is needed for both reading and writing. Most applications are actually I/O-bound and don’t, in fact, benefit from this, but some do: thermal analysis, for example, which is floating-point CPU-bound. But don’t expect too much: a 3X speedup on 8 CPUs is pretty much the state of the art.
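
One way to read the 3X-on-8-CPUs figure (an interpretation, not something stated in the talk) is through Amdahl's law, where $p$ is the fraction of runtime that parallelizes and $N$ is the number of CPUs:

$$
S(N) = \frac{1}{(1-p) + p/N}, \qquad
S(8) = 3 \;\Rightarrow\; (1-p) + \frac{p}{8} = \frac{1}{3}
\;\Rightarrow\; \frac{7p}{8} = \frac{2}{3}
\;\Rightarrow\; p = \frac{16}{21} \approx 0.76,
\qquad \lim_{N\to\infty} S(N) = \frac{1}{1-p} = 4.2
$$

So a tool in which about three quarters of the runtime parallelizes perfectly reaches only 3X on 8 CPUs and would top out at 4.2X even with unlimited CPUs. Real overheads (memory bandwidth, synchronization) push the achievable speedup below this idealized curve.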

Finally, there is the possibility of using the GPU hardware. Most tools can’t actually take advantage of this but in certain cases the algorithms can be mapped onto the GPU hardware and get a massive speedup. But it is obviously hard to code, and hard to manage (needing special hardware).

Another big issue is that tools are not independent. AMD has a scalable simulator that runs very fast on 4 CPUs provided the other 4 CPUs are not being used (presumably because the simulator needs all the shared cache). On multicore CPUs, how a tool behaves depends on what else is on the machine.

What about the cloud? This is not really in the mindset yet. Potentially there are some big advantages, not just in terms of scalability but in ease of sharing bugs with EDA vendors (which may be the biggest advantage).

Bottom line: in many cases it may not really be worth the investment to make the code parallel. The realities of how server farms are managed make all but the coarsest-grained parallelism a headache to manage.

Google Glasses = Darknet!

Google’s Project Glass and augmented reality will be the tragic end of the world as we once knew it. As we become more and more dependent on mobile internet devices, we become less and less independent in life. Consider how much of your critical personal and professional information (digital capital) is stored via the internet, and none of it is safe. With a quick series of keystrokes from anywhere in the world, your digital capital can be altered or wiped clean, leaving nothing but flesh and bones!

“People of the past! I have come to you from the future to warn you of this deception. The few have used Artificial intelligence technology to enslave the many through the use of thought control. I lead a band of Anti Geeks who fight against oppressive technologies. But we alone are not strong enough. The revolution must begin now! Join us to fight for non augmented reality!”

If you haven’t read the “Daemon” and “Freedom” books by Daniel Suarez you should, if you dare to take a peek into what augmented reality has in store for us all. Daniel Suarez is an avid gamer and technology consultant to Fortune 1000 companies. He has designed and developed enterprise software for the defense, finance, and entertainment industries. The book name “Daemon” is quite clever. In technology, a daemon is a computer program that runs in the background and is not under the control of the user. In literature a daemon is a god, or a demon, or in this case both.

The book is centered on the death of Matthew Sobol, PhD, cofounder of CyberStorm Entertainment, a pioneer in online gaming. Upon his death, Sobol’s online games create an artificial-intelligence-based new world order, “Darknet”, which is architected to take over the internet and everything connected to it for the greater good. The interface to Darknet is a pair of augmented reality glasses much like the ones Google is developing today. While the technology described in the books seems like fiction, most of it already exists and the rest certainly will. The technology speak is easy to follow for anyone with a minimal understanding of computers and the internet; very little imagination is required.

The book’s premise is “Knowledge is power” or, more specifically, “He who controls digital capital wins”. So you have to ask yourself, how long before just a handful of companies (Apple, Google, Facebook) rule the earth? Look at the amount of digital capital Google has access to:

- Google Search (Internet and corporate intranet data)

- Google Chrome (Personal and professional internet browsing)

- Android OS (Mobile communications)

- Google Email-Voice-Talk

- Google Earth-Maps-Travel

- Google Wallet

- Google Reader

There are dozens of Google products that can be used to collect and manipulate public and private data in order to control our thoughts and ultimately conquer the digital world.

The digital world is rampant with security flaws and back doors which could easily enable the destruction of a person, place, or thing. A company or brand name years in the making can be destroyed in a matter of keystrokes. In the book, a frustrated Darknet member erases the digital capital of a non Darknet member who cuts in line at Starbucks. Depending on my mood that day, I could easily do this.

It’s not like we have a choice in all this since the digital world is now a modern convenience. We no longer have to store our most private information in filing cabinets, safety deposit boxes, or even on our own computer hard drives. It’s a digital world and we are digital girls. The question is, who can be trusted to secure Darknet (Augmented Reality)?

Designing for Reliability

Analyzing the operation of a modern SoC, and especially its power distribution network (PDN), is getting more and more complex. Today’s SoCs no longer operate on a continuous basis; instead, functional blocks on the IC are powered up only to execute the operation that is required, and then they go into a standby mode, perhaps not clocked and perhaps powered down completely. Of course, this on-demand power has a major impact on reducing standby power.

The development of CPF and UPF over the last few years has had a major impact. Low-power techniques including power-gating, clock-gating, voltage and frequency scaling are represented in the CPF/UPF and are verified for proper implementation. But the electrical verification tools to simulate these complex behaviors in time are still evolving.

The problem is that outdated verification techniques are inadequate. Static voltage drop simulations or simple dynamic voltage drop simulations on the PDN will not adequately represent the complex switching or power transitions as blocks come on- and off-line. The state transitions need to be checked rigorously to guarantee that power supply noise does not affect functionality.

The variations in power as cores, peripherals, I/Os and IP go into different states can be huge, with large current inrushes. Identifying these critical transitions and using them for electrical simulation of the PDN is essential. The transitions can be identified at the RTL level, where millions of cycles can be processed and the relatively few cycles of interest selected for full electrical simulation.
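
A minimal sketch of that selection step, assuming an RTL power tool has already produced a per-cycle current estimate; the trace format, function name, and window sizes are hypothetical. It ranks cycles by the size of the cycle-to-cycle current change, a rough proxy for the di/dt events that stress the PDN, and returns a short window around each of the largest ones for full electrical simulation.

```python
def select_transition_windows(current_ma: list[float], top_k: int = 3,
                              half_window: int = 2,
                              min_step_ma: float = 0.0) -> list[tuple[int, int]]:
    """Return up to top_k inclusive (start, end) cycle windows around the largest current steps."""
    # (|I[i] - I[i-1]|, i) for every cycle boundary in the trace.
    steps = [(abs(current_ma[i] - current_ma[i - 1]), i) for i in range(1, len(current_ma))]
    steps.sort(reverse=True)  # largest current changes first
    windows: list[tuple[int, int]] = []
    for delta, i in steps:
        if delta <= min_step_ma or len(windows) == top_k:
            break  # remaining steps are too small, or we have enough windows
        if any(s <= i <= e for s, e in windows):
            continue  # this event is already inside a chosen window
        windows.append((max(0, i - half_window), min(len(current_ma) - 1, i + half_window)))
    return sorted(windows)


# Hypothetical trace: a block powers up at cycle 3 and back down at cycle 8.
trace = [10, 10, 10, 80, 80, 80, 80, 80] + [15] * 8
print(select_transition_windows(trace, half_window=1))
```

Only the handful of returned windows would go to the expensive electrical simulation, rather than the millions of cycles in the full RTL run.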

Another area requiring detailed simulation is voltage islands that either power down or operate at a lower voltage (and typically frequency). This can have a major impact on leakage power as well as dynamic power, but reliability verification is complex. Failures can happen if signal transitions occur before the voltage levels of these islands reach the full-rail voltage. There is a major tradeoff: if the islands are powered up too fast, the inrush current can cause errors in neighboring blocks or in the voltage regulator; if they are powered up too slowly, signals can start to arrive before the block is ready. To further complicate things, the inrush currents interact with the package and board parasitics too.
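
The ramp-rate tradeoff can be put into rough numbers with the capacitor relation I = C·dV/dt: charging an island's capacitance C from 0 V to Vdd over a ramp time t draws an average current of about C·Vdd/t. This first-order sketch ignores leakage, short-circuit current, and the package and board parasitics mentioned above, and the 10 nF and 0.9 V values are hypothetical.

```python
def average_inrush_current(c_farads: float, vdd_volts: float, t_ramp_s: float) -> float:
    """Average current (A) to charge capacitance C from 0 V to Vdd in t_ramp seconds."""
    if t_ramp_s <= 0:
        raise ValueError("ramp time must be positive")
    return c_farads * vdd_volts / t_ramp_s  # I = C * dV/dt, with dV/dt = Vdd / t_ramp


# Hypothetical island: 10 nF of switched capacitance at 0.9 V.
for t_ramp in (10e-9, 100e-9, 1e-6):
    amps = average_inrush_current(10e-9, 0.9, t_ramp)
    print(f"ramp {t_ramp * 1e9:6.0f} ns -> {amps * 1e3:7.1f} mA")
```

Slowing the ramp by 100x cuts the average inrush by 100x (from about 0.9 A to about 9 mA here), but the island then takes 100x longer before signals can safely arrive, which is exactly the tradeoff described above.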

As SoCs have become more and more complete, previously separate analog chips such as those for GPS or RF are now on the same silicon as high-speed digital cores. Sensitive analog circuits need to be analyzed in the context of noisy digital neighbors to check the impact of substrate coupling. Isolation techniques don’t always work well at all frequencies and, of course, this needs to be analyzed in detail.

3D techniques, such as wide-IO memory on top of logic, add a further level of complexity. These approaches have the potential to deliver major increases in performance and reductions in power, but they also create power-thermal interactions that need to be analyzed: not just that the heat on one die can affect its own performance, but that heat from a neighboring die can do so too.

IC designers can no longer just assume things are correct by construction in this climate. Multi-physics simulations of thermal, mechanical, and electrical behavior are a must for reliability verification.

See Arvind’s blog on the subject here.

Synopsys Users Group 2012 Keynote: Dr Chenming Hu and Transistors in the Third Dimension!

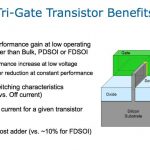

It was an honor to see Dr. Chenming Hu speak and to learn more about FinFETs, a technology he has championed since 1999. Chenming is considered an expert on the subject and is currently a TSMC Distinguished Professor of Microelectronics at the University of California, Berkeley. Prior to that he was the Chief Technology Officer of TSMC. Hu coined the term FinFET more than ten years ago, when he and his team built the first CMOS FinFETs and described them in a 1999 IEDM paper. The name comes from the fact that the transistors (technically known as Field Effect Transistors) look like fins. The fins are the 3D part of the name 3D transistors. Dr. Hu didn’t patent the design or manufacturing process, so that it would be as widely available as possible; he was confident the industry would adopt it, and he was right.

There is a six-part series on YouTube entitled “FinFET-What it is and does for IC products, history and future scaling”, presented by Chenming; unfortunately it is in Mandarin. I have asked Paul McLellan to blog it, since he speaks Mandarin, but the slides look identical to what was presented at the keynote, so it may be worth a look.

For those of you who have no idea what a FinFET is, watch this Tri-Gate for Dummies video by Intel. They call a FinFET a Tri-Gate transistor, which they claim to have invented. I expect they are referring to the name rather than the technology itself. 😉

Probably the most comprehensive article on the subject was published last November by IEEE Spectrum: “Transistor Wars: Rival architectures face off in a bid to keep Moore’s Law alive”. This is a must-read for all of us semiconductor ecosystemites. See, like Intel, I can invent words too.

Unfortunately, according to Chenming, lithography will not get easier, double patterning will still be required, and the incremental SoC design and manufacturing cost is still unknown. Intel has stated that there is a +2-3% cost delta, but Chenming sidestepped the pricing issue by joking that he is a professor, not an economist. When I talked to Aart de Geus, he said Synopsys will be ready for FinFETs at 20nm. Since Synopsys has the most complete design flow and semiconductor IP offering, they should be the ones to beat in the third dimension, absolutely. You can read about the current Synopsys 3D offerings HERE.

Why the push to FinFETs at 20nm, you ask? Because of scaling. From 40nm to 28nm we saw significant opportunities to reduce die size and power requirements, plus an increase in performance. Unfortunately, standard planar transistors are not scaling well from 28nm to 20nm, which reduces the power and die-size savings and the performance boost customers have come to expect from a process shrink (Nvidia Claims TSMC 20nm Will Not Scale?). As a result, TSMC will offer FinFETs at the 20nm node, probably as a mid-life node booster; just my opinion, of course. Expect 28nm, 20nm, and 14nm roadmap updates at the TSMC 2012 Technology Symposium next week. This is a must-attend event! If you are a TSMC customer, register HERE.

Why am I excited about transistors in the third dimension? Because it is the single most disruptive technology I will see in my illustrious semiconductor ecosystem career and it makes for good blog fodder. It also challenges the mind and pushes the laws of physics to the limits of our current understanding, that’s why.

IP-SoC day in Santa Clara: prepare the future, what’s coming next after IP based design?

D&R IP-SoC Days Santa Clara will be held on April 10, 2012 in Santa Clara, CA; if you plan to attend, just register here. The IP market is a small world, and EDA a small market if you look at the revenue generated… but both are essential building blocks for the semiconductor industry. It was not clear back in 1995 that IP would become essential: at that time, the IP concept was devalued by some products exhibiting poor quality and inefficient technical support, leading program managers to be very cautious about deciding to buy. Making was sometimes more efficient…

Since 1995, the market has been cleaned up, with the suppliers of poor-quality products disappearing (going bankrupt or being sold for their assets), and the remaining IP vendors have learned the lesson. None of the renowned vendors marketing a protocol-based (digital) function would take the chance of launching a product that has not passed an extensive verification program, and the vendors of mixed-signal IP functions know that the “Day of Judgment” will be when the silicon prototypes are validated. This leaves very little room for low-quality products, even if you may still find some newcomers deliberately launching a poor-quality RTL function, naively thinking that lowering the development cost will allow them to sell at a low price and buy market share, or some respected analog IP vendor failing to deliver an “at spec” function, just because… analog is analog, and sometimes closer to black magic than to science!

If you don’t trust me, just look at products like application processors for wireless handsets or set-top boxes: these chips are made of 80% reused functions, whether internal or sourced from an IP vendor. This literally means that several dozen functions, digital or mixed-signal, are IP. If just one of these fails, a $50+ million SoC development will miss the market window. That said, will the IP concept, as it is today in 2011, be enough to support the “More than Moore” trend? In other words, if IP in the 2000-10s is like Standard Cell was in the 1980-90s, what will be the IP of the 2020s? You will find people addressing this question at IP-SoC Days!
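
To see why a single weak block matters so much, suppose (hypothetically) that each of $n$ IP functions independently works first time with probability $r$. The chance that the whole SoC works is then:

$$
P_{\text{SoC}} = r^{\,n}, \qquad 0.99^{36} \approx 0.70, \qquad 0.999^{36} \approx 0.96
$$

With three dozen blocks, even 99% per-block quality leaves roughly a 30% chance that at least one fails. The independence assumption and the numbers are illustrative only.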

So the interesting question will be where the IP industry stands on the spectrum that starts with a single IP function and ends with a complete system. Nobody would claim that we have reached the upper end of the spectrum and that you can source a complete system from an IP vendor. Maybe the semiconductor industry is not ready to source a complete IP system: what would be the added value of the fabless companies if and when that happens?

In the past, IP vendors were far from being able to provide subsystem IP, which requires a strong understanding of the specific application and market segment, the associated technical know-how and, even more difficult to meet, adequate funding to support up-front development. But they are starting to offer IP subsystems: just look at the recent release of a “Complete sound IP subsystem” by Synopsys, or the emphasis Cadence has put on EDA360… So IP-SoC Days will no longer be only IP-centric, but IP-subsystem-centric!

By Eric Esteve from IPnest