Synopsys is consolidating its positioning in Verification IP. We announced the launch of Discovery VIP on Semiwiki in February this year, and we commented on the acquisition of nSys and ExpertIO in January. This webinar, “Achieving Rapid Verification Convergence of ARM® AMBA® 4 ACE™ Designs using Discovery™ Verification IP”, to be held on May 8 at 10am PDT, will help the audience better understand the challenges of cache coherency management. Traditionally, cache coherency management has largely been performed in software, adding to software complexity and development time. With AMBA 4 ACE, system-level coherency is performed in hardware, providing better performance and power efficiency for complex SoC designs. However, this shifts much of the complexity of verifying cache coherency to functional verification.

The webinar begins with a short overview of the challenges of verifying a coherent design and goes on to show how the features and architecture of Synopsys’ new Discovery Verification IP help overcome these challenges and simplify the verification of ACE designs.

The ever-growing share of design time spent in verification (we have recently read figures of 70% of the overall hardware design effort being associated with verification) is creating demand for more efficient EDA tools and for accurate Verification IP dedicated to a specific protocol.

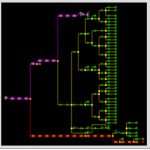

The next picture helps in understanding the cost breakdown associated with verification. If you look at the middle left box, you see a 3X increase in cost (or license count, or resources) for almost every task, the exception being “Tool, Support and Service” at only 20%. A 3 to 6X run time improvement is therefore welcome, as it helps keep the design schedule, and consequently the time to market, within reasonable limits.

You can log in to this webinar here

By Eric Esteve from IPnest