Tuesday morning at DAC I attended the Synopsys-hosted breakfast to hear from foundries and ARM about the challenges of designing and delivering silicon at the 32nm/28nm and 20nm nodes.

Cadence IP Strategy 2012

As I mentioned in a previous blog, Cadence Update 2012, Martin Lund is now in charge of the Cadence IP strategy. Martin read my first blog and wanted to exchange IP strategies, so we met at DAC 2012 for a chat. Not only did Martin connect with me on LinkedIn, he also joined the SemiWiki LinkedIn group, which now has 4,000+ members. So yes, he is serious about social media and the IP business.

During his 12 years at Broadcom, Martin grew the company to become the global leader in Ethernet switch SoCs for data center, service provider, enterprise, and SMB markets, and successfully drove several strategic acquisitions. His silicon and system level experience equips him well to scale the Cadence SoC Realization business.

Prior to Broadcom, Lund held various marketing and senior engineering management positions in the Network Systems Division of Intel Corporation and at Case Technology, a European networking equipment manufacturer acquired by Intel in 1997. Lund is an inventor on 26 issued and pending US patents. He holds a technical degree from Frederiksberg Technical College and Risø National Laboratory at the Technical University of Denmark.

Martin is Danish, so it should not have surprised me when he used the Lego analogy for IP. Lego is a Danish company, and its bricks are often compared to semiconductor IP as the building blocks of modern semiconductor design. Legos were my number one toy as a kid. My father was convinced I would be an architect, until of course I got my hands on a Commodore PET computer; sorry about that, Dad. Did you know that Lego is the largest tire manufacturer in the world? Martin did.

As a father of four, my Lego habit continued through my kids, and Lego blocks evolved into Lego subsystems with optimized sets targeted at vertical markets. I remember spending hours with my son building a Space Shuttle kit, and not one part was left over. It is a good analogy for the emerging semiconductor IP subsystems: plug and play, no parts left over.

What happened next to the space shuttle is the future of the IP business according to Martin Lund, and I agree wholeheartedly. My son made changes and incrementally optimized the shuttle for many different uses, until of course it was reduced to a pile of building blocks by his baby sister, and we were on to the next project, which was even bigger, more complex, and in desperate need of optimizing.

Bottom line: For advanced semiconductor design, complete IP kits (off the shelf subsystems) will not work. There must be a significant level of optimization for differentiation and ease of integration. Trade-offs are an integral part of modern semiconductor design: Power, Performance, Area, Yield and IP subsystems will be held to the same standard. Off the shelf subsystems will not win in competitive markets. Mass customization will be required. Software will be the key enabler. Clearly this is a Cadence IP versus Synopsys IP strategy, which I will blog more about later.

Side note: My oldest son, the Lego Master, very quickly mastered the computer and internet. He is co-architect and lead administrator of SemiWiki and just received his master’s degree in Education. Moving forward, he will prepare the legions of Lego Masters for the mathematical challenges of the new world order.

What Will Happen to Nokia?

News today is that Moody’s has downgraded Nokia to junk status. They also announced that they will lay off 10,000 people (including about 1 in 4 of the people they employ in Finland, where Nokia is headquartered).

For those of you who don’t know all the inside-baseball stuff about Nokia, here is a little recent history. The current CEO of Nokia, Stephen Elop, came from Microsoft at the start of last year. He wrote a famous (or infamous) memo known as the burning platform memo (it started with the choice facing people on a burning oil platform: whether to leap off). Since that memo, sales have fallen for 5 consecutive quarters, wiping out $13B in revenues and $4B in profits.

The first thing the memo did was to say that current products were not good, and as a result people stopped buying them. This is known as the Ratner effect, after a very profitable British jewellery chain called Ratners which had a store on every high street in Britain. At a dinner with finance types, Gerald Ratner said that the stuff they sold was so cheap because it was “total crap.” It got into the press, people stopped going there, and the chain quickly cratered in value and almost went bankrupt.

The next thing Elop did was decide that all smartphones would be based on Microsoft’s Windows Phone. This came at a time when Nokia still dominated the smartphone market (outside the US) with phones based on Symbian and another internal operating system called MeeGo (despite the memo saying sales of Symbian-based smartphones were in terminal decline, they were actually growing strongly and outselling Apple 2:1). The only problem was that these WP-based phones would not be available for the best part of a year. This is the Osborne effect, named after a Silicon Valley businessman (coincidentally also British) who announced that his company’s next product would be compatible with the IBM PC. This was obviously desirable, so everyone stopped buying the current products and the company ran out of money before it could deliver the sexy IBM-compatible one.

The effect of all of this is that Nokia has had the biggest loss of market share of any major business anywhere ever. By that measure Elop has to qualify as one of the worst CEOs of any company ever.

And worse, once the Lumia (WP-based) smartphone was available it didn’t sell very well. If you are going to put all your wood behind one arrow it had better be a good one and this one is not.

My go-to guy on anything to do with the phone market in general and Nokia in particular is Tomi Ahonen (who used to work at Nokia, is Finnish, but lives in Hong Kong these days). His views on what is going on are always worth reading. Cell-phones are not sold like other products (DVD players, say). You can’t just go and buy any old cell phone and then go find a carrier (at least in most countries). The carriers control which phones get sold and which do not. Many cell-phone carriers also have landline businesses (often one cellphone license would go to the incumbent telecom operator in a country) and there is one thing that they absolutely hate: Skype. And who owns Skype? Microsoft. So the carriers hate Microsoft and aren’t going to do anything to help them. Hence the lukewarm launch of Lumia (on Easter Sunday when all the stores were closed, wonderful). Even Elop admits this, saying that retail salespeople “are reticent to recommend Lumia smartphones to potential buyers.” So this hate goes all the way to the front lines.

So Nokia is in trouble, as I’ve said before. Of course they won’t go bankrupt and shut down; someone will buy them. So who? Tomi’s pick is Samsung, although that was before the current round of layoffs and firings (he thinks they are damaged goods now). Samsung is the only company other than Apple making any real money in handsets, and it would gain huge market share, pick up some additional manufacturing lines in other parts of the world, and so on. Microsoft could buy them, but it would be easier for them to keep Nokia on life support by sending them money. Lots of people, in particular Apple, could buy them for patents (although Nokia has been selling a lot of patents to keep afloat) and to stop Samsung getting them. Facebook could buy them if they really wanted to be in the handset business (but a lot of Nokia’s business is “feature phone,” i.e. dumb phone). Lots of other choices, of course. But this blog has gone on long enough.

TSMC Theater Presentation: Ciranova!

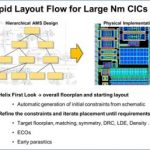

Ciranova presented a hierarchical custom layout flow used on several large advanced-node designs to reduce total layout time by about 50%. Ciranova itself does automated floorplanning and placement software with only limited routing; but since the first two constitute the majority of custom layout time, and strongly influence the remainder, the overall impact can be substantial. Designs sensitive to nanometer effects like Layout Dependent Effects (LDE) and poly density are particularly well suited to automation; one example was a 28nm, 40,000 device mixed-signal IP block which had been completely placed by one engineer in 8 days, including density optimization.

The Ciranova-enabled flow has two main phases. In the first phase, the software automatically generates a first-pass set of constraints for the entire design hierarchy, and a range of accurate floorplans. This phase is “push button” – it starts with a schematic and requires no intervention or user constraint entry. In the second phase, the user interactively refines the initial constraints, running and rerunning hierarchical placement until the entire layout matches the user’s floorplan targets and other criteria. The whole process is very fast; since the layouts are DRC-correct irrespective of rule complexity, tens of thousands of devices can be placed accurately in a few days. Ciranova’s output is an OpenAccess database which can be opened in any OA environment.

Two major advantages of this flow over normal schematic-driven-layout are (1) the DRC correct by construction aspect; and (2) the entire layout is optimized at once. This approach lends itself especially well to handling proximity-related effects like LDE, where the behavior of a given device changes depending on what happens to be nearby. Since Ciranova optimizes entire regions at once, multiple LDE spacing constraints are managed together.

In a TSMC design, TSMC provides tools at the schematic level to help a user identify LDE-sensitive devices in his or her schematic, and determine the relevant spacing constraints necessary for those devices to perform correctly. Ciranova then takes this information and produces a correct-by-construction layout which optimizes not only to the LDE directives but also to any other requirements: design rules, density, designer guidance such as symmetry, etc. Also, the approach is a general one and not limited to individual modules like current mirrors and differential pairs.

The diagram above includes a post-placement simulation study with alternate layouts of the same design: one with LDE rules applied, and one without (net result: the LDE-optimized placement clocks slightly faster). Most users never get to see a comparison like this, because hand layout takes so long that few people ever do it more than one way. But an automated flow makes this kind of study and tradeoff analysis easy.

Using this approach, even very large custom IC designs under very complex design rules can be done quickly; and typically at equal or better quality to handcraft, since much broader optimizations can be achieved than a human mask designer normally has time to explore.

Genevi, isn’t that a city in Switzerland?

I got an email from Mentor Embedded this morning about a webinar on Implementing a GENIVI-compliant System. I have to admit I had no idea what GENIVI is, which surprised me. I spent several years working in the embedded space and so I usually have at least a 50,000 foot view of most things going on there. One reason for my ignorance is that it only started in November 2011 unlike automotive standards such as FlexRay and CAN that have been around for a long time.

GENIVI turns out to be an automotive infotainment standard (the IVI in GENIVI stands for In-Vehicle Infotainment). This means it gets used in things like GPS maps, CD players, and satellite access, but is not used for things like powertrain management or ABS braking (which are safety critical and so have a completely different set of tradeoffs). The GENIVI website describes itself as: “a non-profit industry alliance committed to driving the broad adoption of an In-Vehicle Infotainment (IVI) open-source development platform.”

GENIVI seems to be based in that hotbed of automotive…San Ramon, one valley over from Silicon Valley. Unlike, say, Android, GENIVI is not an open-source development at the code level; it is a set of standard services, some of which are not optional, along with compliance programs.

The most recent version of the specification is 2.0. Under the prior iteration (that would be 1.0), 19 platforms from 9 member companies were declared compliant. Already under 2.0 there are compliant platforms from:

- Accenture

- Intel/Samsung

- Mentor Graphics (not exactly a surprise)

- MontaVista

- Renesas

- Wind River

The obvious missing name is Google and Android.

The seminar will cover:

- Implementing IVI, current trends and infrastructure

- Gaining GENIVI compliance

- Integrating open source and proprietary components

- Building in adjacent functions, such as AUTOSAR and Android-based applications

The webinar is at 11am central time (9am on West Coast) on June 21st. Register for it here.

Semiconductor equipment returns to growth

Semiconductor manufacturing equipment has returned to growth after a falloff in the second half of 2011. Combined data from SEMI (U.S. and European companies) and SEAJ (Japanese companies) show billings peaked at a three-month-average of $3.2 billion in May 2011. Bookings and billings began to drop in June 2011, with billings reaching a low of $2.3 billion in November 2011. Bookings and billings began to turn around in December. Billings recovered to $2.9 billion in April 2012.

Total semiconductor manufacturing equipment billings were $33.4 billion in 2011. SEMI’s May 2012 forecast for wafer fab equipment called for 2% growth in 2012. 2% growth would result in $34.1 billion in billings for 2012, still 2% below 2007 billings of $34.7 billion and well below the record high of over $45 billion in 2000. Thus semiconductor capacity appears to be headed for moderate growth, but nowhere near the overheated growth which could lead to overcapacity.
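The arithmetic behind that comparison can be checked with a short sketch. The dollar figures come from the paragraph above; the helper names are hypothetical, and the 2000 record is taken as exactly $45 billion, which is only a lower bound since the text says "over $45 billion".

```python
# Figures in billions of dollars, taken from the text above.
BILLINGS_2011 = 33.4
GROWTH_2012 = 0.02        # SEMI's May 2012 forecast, applied to total billings
BILLINGS_2007 = 34.7
RECORD_2000 = 45.0        # assumption: lower bound for "over $45 billion"


def project(billings, growth):
    """Billings after one year of growth at the given rate."""
    return billings * (1 + growth)


def shortfall(value, reference):
    """Fraction by which value falls below reference."""
    return (reference - value) / reference


projected_2012 = project(BILLINGS_2011, GROWTH_2012)
print(f"Projected 2012 billings: ${projected_2012:.1f}B")
print(f"Below 2007: {shortfall(projected_2012, BILLINGS_2007):.1%}")
print(f"Below 2000 record (at least): {shortfall(projected_2012, RECORD_2000):.1%}")
```

The gap to 2007 rounds to the 2% quoted above, and the gap to the 2000 peak is roughly a quarter.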

What is the current capacity utilization in the semiconductor industry? The demise of the Semiconductor Industry Capacity Statistics (SICAS) program makes the answer difficult to determine. The final SICAS data for 4Q 2011 showed MOS IC capacity utilization of 88.9%, down from 91.7% in 3Q 2011. WSTS data showed a 2.3% decline in the semiconductor market in 1Q 2012 from 4Q 2011. Thus MOS IC shipments likely declined by a similar amount. MOS IC capacity growth was probably roughly flat in 1Q 2012 based on the falloff in manufacturing equipment billings in the second half of 2011. We at Semiconductor Intelligence estimate industry MOS IC capacity utilization was 86% to 87% in 1Q 2012.
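A minimal sketch of how an estimate like this can be reproduced, treating utilization as shipments divided by capacity. It assumes, as the paragraph does, that MOS IC shipments fell in line with the 2.3% quarter-on-quarter market decline; the function name and the 1% capacity-growth scenario are illustrative assumptions, not figures from SICAS or WSTS.

```python
def next_utilization(prior_utilization, shipment_change, capacity_change):
    """Scale utilization (shipments / capacity) by the change in each term.

    All arguments are fractions, e.g. -0.023 for a 2.3% decline.
    """
    return prior_utilization * (1 + shipment_change) / (1 + capacity_change)


PRIOR_UTILIZATION = 0.889   # final SICAS figure for 4Q 2011
SHIPMENT_CHANGE = -0.023    # assumption: shipments track the market decline

# Hypothetical scenarios: flat capacity, and capacity growing 1%.
for capacity_change in (0.0, 0.01):
    estimate = next_utilization(PRIOR_UTILIZATION, SHIPMENT_CHANGE, capacity_change)
    print(f"capacity change {capacity_change:+.1%}: utilization {estimate:.1%}")
```

With flat capacity the result lands just under 87%, and a hypothetical 1% capacity increase pulls it down to about 86%, which brackets the 86% to 87% range quoted above.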

Forecasts for 2012 semiconductor market growth are generally fairly low. Mike Cowan’s June forecast was 0.3% growth, similar to WSTS’ May forecast of 0.4% growth. Our forecast at Semiconductor Intelligence is for 2% growth. Forecasts made in March and April from major semiconductor analyst firms range from 4% to 6%. Slow growth in the semiconductor market combined with slow growth in the semiconductor manufacturing equipment market should result in no significant movement in capacity utilization. MOS IC utilization should remain in the mid 80% range through 2012.

The two largest semiconductor foundries, TSMC and UMC, provide information on their wafer capacity and shipments. Data over the last year reflects the industry trends above. Both companies saw declines in revenue and shipments in 3Q 2011 and 4Q 2011. Shipments began to pick up in 1Q 2012. Both companies expect strong growth in business in 2Q 2012. TSMC expects 2Q 2012 revenue to increase 20% from 1Q 2012. UMC expects wafer shipments to increase 15%. Capacity growth has slowed significantly from prior quarters. TSMC expects capacity will decline 1.3% in 2Q 2012. UMC forecasts a 0.6% increase in capacity in 2Q 2012 following a 0.9% decline in 1Q 2012.

The Black Swan that Catapulted Intel into 2012

Black Swan events are not to be embraced, they are to be feared, if conventional wisdom holds true. And yet, the 2011 Black Swan that slammed the PC market (i.e. the Thailand floods that wiped out a large part of the disk drive market) has turned out to be the key catalyst for reshaping the semiconductor industry in 2012 and 2013. Instead of hobbling through what was seen to be at least a six-month crisis with underutilized 32nm fabs, Intel made a decision last November to ramp 22nm production faster and thus catapult itself to a position that will result in Apple entering its fabs and Samsung dropping ARM development and embracing x86 across the board within two years. This may seem an extreme view, but the announcement that TSMC will see 28nm shortages into 2013 highlights that the Mobile Tsunami, like PCs, requires leading edge process technology, and there is only one true leading edge volume semiconductor supplier: Intel.

Intel reported its best quarter ever (Q3 2011) on October 18, 2011 and projected a further 3% revenue upside for Q4 2011. This announcement came just three days after WD’s hard disk drive factory was flooded, and thus the extent of any impact was probably not understood. However, because Intel is a company driven by heavy CapEx and fast depreciation, it is key for them to assess quickly how external events affect market demand and drive fab loading. In the past they have been known to quickly shift gears to get to where the market is going at the cost of short-term revenue. And so, as they admitted in their January 2012 conference call, they converted some of their production from 32nm to 22nm. We will never know how much was converted, but I speculate it was significant. Wall St. was initially not pleased and AMD benefited from Intel’s pull back. But now the benefits are starting to accrue as Qualcomm, AMD and nVidia scramble for 28nm production that targets the Mobile Tsunami marketplace. Only Apple and Intel are prepared.

Apple’s announcement on its refresh of the MacBook Air and MacBook Pro line this week shows that Intel has gained additional sockets with its Ivy Bridge with integrated graphics at the expense of AMD and nVidia. Whereas two years ago, an external graphics chip was used across the Apple product line, now it is relegated to the $1799 and higher MacBook Pro notebooks. Expect Intel to make similar inroads with the Ultrabook market.

Question: How much of the 28nm TSMC shortage has forced nVidia to focus its limited supply on desktop add-in cards instead of mobiles? It’s a Major Retreat that is likely unrecoverable.

Now consider Qualcomm and their 28nm ramp for the upcoming Apple iPhone 5 and the Snapdragon all-in-one chip going into a wide range of non-Apple smartphones and tablets. Two months ago, it was revealed that they would not be able to meet demand until Q4. Now they won’t meet demand until the end of Q4 2012. As I speculated in a previous blog, I believe Apple has worked Qualcomm into a position where Apple has its supply needs met at the expense of its competitors, thus setting itself up for a strong second half ramp with reduced competition from Samsung, HTC and the rest of the mobile players.

All of the above however doesn’t encompass the full extent of what is transpiring in the mobile semiconductor market and how Intel is prepared to increase its presence. Intel’s Medfield processor is late to the smartphone market but still competitive. Built in written off 32nm fabs, I presume Intel is cutting some very nice deals in order to ramp the product in volume and create havoc for nVidia, Mediatek, TI, Broadcom and Marvell. Qualcomm’s dominance at the high end with Snapdragon combined with Medfield at the low end will cause a market squeeze with several of the above exiting in the next twelve months.

Looking ahead to 2013, I expect Intel to enter the market with a 4G LTE solution and the mobile market merchant suppliers to narrow down to Qualcomm and Intel, with Apple and Samsung continuing to develop for their own needs. If Apple gains significant market share in the smartphone market over the next 9 months due to the 4G LTE supply situation, then I expect Intel will make inroads at Samsung with x86 Atoms. Samsung will have to do some serious soul searching as to whether it makes sense to design ARM based processors when Intel can offer solutions that are lower in cost and power and higher in performance, all due to Intel’s three to four year lead in process technology. If Samsung balks, look for Intel to leverage another player like HTC or Motorola to undercut the market.

Apple, unlike Samsung, can get Intel to build an ARM based processor in its fabs and likely has silicon running in its 22nm process at this moment. The dilemma they face is that going to Intel offers all the upsides mentioned above but carries the risk of losing other alternative suppliers. In addition, for Apple to get the best deal, they have to get to Intel before Samsung signs up. It is a true high stakes negotiation as Intel will try to land both Samsung and Apple at the exclusion of none.

Looking back over the past 18 months, one can see a definite trend that likely drove Paul Otellini’s decision to ramp 22nm hard last November. In my experience, the PC market has always had a need for processors built on the most leading edge process technology. Qualcomm is experiencing this same phenomenon today in the smartphone market with their 4G LTE chips. Intel, with its process lead, is making a bet that they will win the entire mobile market by 2014, when the successors to Medfield will integrate all functions, including baseband, and be significantly cheaper, higher performance and lower power than anything its competitors can deliver. The Black Swan of 2011 likely gave Otellini the excuse he needed to break free from Wall Street’s quarterly demands in order to embrace the Mobile Tsunami Leading Edge Process End Game much sooner.

FULL DISCLOSURE: I am Long AAPL, INTC, ALTR, QCOM

Fast Monte Carlo from Infiniscale at DAC

Firas Mohamed, Ph.D., President and CEO of Infiniscale, met with me on Monday at DAC to provide an overview of the EDA software they offer to IC designers at the transistor level.

Vision: an analog design flow requires Monte Carlo simulation, which means thousands of circuit simulations. The higher the target sigma, the more simulations are needed (10,000 or 100,000), which is way too slow or too expensive to run.
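To see why the simulation count climbs so steeply with sigma, here is a rough sketch. It assumes a Gaussian-distributed performance metric, a one-sided failure criterion, plain (unaccelerated) Monte Carlo, and a rule of thumb of about 10 observed failures for a usable estimate. All of these are illustrative assumptions and do not describe Infiniscale's method.

```python
import math


def tail_probability(sigma):
    """One-sided Gaussian probability of landing beyond `sigma` standard deviations."""
    return 0.5 * math.erfc(sigma / math.sqrt(2))


def samples_needed(sigma, expected_failures=10):
    """Runs needed so that, on average, `expected_failures` fall in the tail.

    The default of 10 failures is an assumed rule of thumb, not a fixed standard.
    """
    return math.ceil(expected_failures / tail_probability(sigma))


for sigma in (3, 4, 5, 6):
    print(f"{sigma} sigma: about {samples_needed(sigma):,} simulations")
```

Under these assumptions, 3 sigma already needs several thousand circuit simulations and 4 sigma needs hundreds of thousands, which is consistent with the 10,000 to 100,000 range mentioned above.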

IC Layout Tools from Japan at DAC

Last Monday I met with Nobuto Ono, VP of Business Development at Jedat (Japan EDA Technologies), while attending the DAC conference.

The company started in Tokyo in 2004 and is ex-Seiko Instruments.

The main product is a layout editor for ICs (SX 9000). The new system is ALpha SX (2002). The company was listed on the JASDAQ market in 2007. Like the Virtuoso tools, it is based on OA for AMS design.

TSMC Theater Presentation: Atrenta SpyGlass!

Atrenta presented an update on the TSMC Soft IP Alliance Program at TSMC’s theater each day at DAC. Mike Gianfagna, Atrenta VP of Marketing, presented an introduction to SpyGlass, an overview of the program and a progress report. Dan Kochpatcharin, TSMC Deputy Director of IP Portfolio, was also there. Between Mike, Dan, and me there are about 100 years of semiconductor ecosystem experience. If Paul McLellan had been there it would have been double that.

TSMC and Atrenta announced the Soft IP Alliance Program last year at DAC. The program uses a special set of SpyGlass rules specified by TSMC to validate that soft IP meets an established set of quality goals before it is included in TSMC Online. The program leverages Atrenta’s SpyGlass platform, which is used by about 200 companies worldwide to analyze and optimize their RTL designs before handoff to back-end implementation. SpyGlass checks things like linting rules, power, clock synchronization, testability, timing constraints and routing congestion. It highlights problems and provides guidance to improve the design.

SpyGlass can also be used as an IP validation tool, and this is the foundation of the Soft IP Alliance Program with TSMC and Atrenta. Atrenta developed a set of SpyGlass rules specifically tuned to verify the completeness and integration risks associated with soft, or synthesizable IP. Called the IP Kit, the integrated package also produces concise DashBoard and DataSheet reports that summarize the results of the IP Kit tests. Figure 1 shows an example of a DashBoard Report.

TSMC and Atrenta collaborated on the development of a version of the IP Kit that met TSMC’s requirements for delivered quality of soft IP. This technology formed the basis of the Soft IP Alliance Program, and testing of partner IP began last year. Deliverables for the Soft IP Alliance Program include: installation instructions, a reference design to test installation procedures, documentation, automated generation of all reports and a training module. For soft IP to be listed in TSMC Online, the DashBoard report must show a clean (or passing) grade for all tests. Figure 2 illustrates the kind of tests that are performed before IP is listed on TSMC Online.

At DAC this year, a progress report was presented on the results of the program. Ten IP vendors have joined the program, including Arteris, Inc., CEVA, Chips&Media, Inc., Digital Media Professionals Inc. (DMP), Imagination Technologies, Intrinsic-ID, MIPS Technologies, Inc., Sonics, Inc., Tensilica, Inc. and Vivante Corporation. Three additional soft IP vendors have recently joined the program as well.

TSMC and Atrenta are now working on the second generation of the soft IP validation test suite. This version will add physical routing congestion metrics. An update for the program will be presented at the TSMC Open Innovation Platform® Ecosystem Forum in San Jose on October 16, 2012. I hope to see you there!