In my circuit design past I did DRAM work at Intel, so I was interested in learning more about Novocell Semiconductor and their design of One Time Programmable (OTP) IP. Walter Novosell is the President/CTO of Novocell and talked with me by phone on Thursday.

Managing Differences with Schematic-Based IC Design

At DAC in June I didn’t get a chance to visit ClioSoft for a product update, so instead I read their white paper this week, “The Power of Visual Diff for Schematics & Layouts”. My background is transistor-level IC design, so anything with schematics is familiar and interesting.

The Challenge

Hand-crafted chip designs provide the highest performance and give the designer the greatest control over silicon IP area and specs. However, when you are part of a team of designers, you need to communicate to other team members what has changed on your schematic since the last version.

Approaches for Text Diff

When I started doing DRAM circuit design in the 1970s we used colored highlighters when comparing schematics against the netlist. Word processors like the one in the Microsoft Office suite added text comparison features so that, as you worked on a document, you could see a history of what had just changed.

Unix users have the diff utility; even my MacBook Pro today has diff on it for the occasional times that I use the terminal and need to compare text files.

In EDA you can even use an LVS (Layout Versus Schematic) tool like Calibre to compare two versions of a schematic or layout netlist and get some idea of what has changed.

Approaches for Graphic Diff

With a schematic-based design you need a visual way to mark up your schematics or IC layout to see what has changed since the last rev. You could try several approaches for tracking schematic or layout changes:

- Netlist your schematics into EDIF or SPICE files

- On IC Layout do an XOR of the GDS versions

The netlist approach works only on text, so you cannot see what changed visually, which would be more intuitive.
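As a rough illustration of the netlist approach, here is a minimal Python sketch that compares two revisions of a netlist as plain text using the standard `difflib` module. The two SPICE snippets and the file names `rev_a.sp` and `rev_b.sp` are made up for this example; a real flow would read the netlists exported from the schematic tool.

```python
import difflib

# Two hypothetical revisions of a small SPICE netlist (invented for illustration).
rev_a = """\
M1 out in vdd vdd pch W=2u L=0.18u
M2 out in gnd gnd nch W=1u L=0.18u
C1 out gnd 10f
""".splitlines()

rev_b = """\
M1 out in vdd vdd pch W=3u L=0.18u
M2 out in gnd gnd nch W=1u L=0.18u
C1 out gnd 10f
R1 out load 50
""".splitlines()


def netlist_diff(old, new):
    """Return only the added and removed lines from a unified diff."""
    lines = difflib.unified_diff(
        old, new, fromfile="rev_a.sp", tofile="rev_b.sp", lineterm=""
    )
    return [
        line
        for line in lines
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    ]


changes = netlist_diff(rev_a, rev_b)
for line in changes:
    print(line)
```

The output tells you that M1 was resized and R1 was added, but it is still just text: you have to map each line back to a spot on the schematic yourself.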

The XOR approach shows you visually what changed on the layout, but not the difference on a schematic level which is more intuitive to a circuit designer.

The Visual Design Diff Approach

ClioSoft has created a visual diff tool that works in a Cadence Virtuoso design flow to quickly highlight what has changed on your schematic since the last rev.

You just click a new menu choice in Virtuoso under Design Manager to see changes to:

- Nets

- Instances

- Layers

- Labels

- Properties

This new class of EDA tool can be used by either the circuit designer or the layout designer to determine what has changed between versions of a schematic or layout. This kind of automation can eliminate hours or even days’ worth of manual work compared to the old way of tracking changes by hand.

In June 2011 ClioSoft added support for hierarchy in the visual diff tool, so now you can traverse all the levels of hierarchy of your designs and see the differences.

Also Read

IC Test Sessions at SEMICON West 2012

SEMICON West is coming up this July 10-12 at the Moscone Center in San Francisco. It covers a broad swath of the microelectronics supply chain, but I was particularly interested in the test sessions. Here are two that I recommend.

“The Value of Test for Semiconductor Yield Learning” on Tuesday, July 10, at 1:30pm. The focus of this presentation by Geir Eide, the manager of yield learning products at Mentor Graphics, is on using software-based diagnosis of test failures to find the defect type and location of failures “based on the design description, scan test patterns, and tester fail data.” Advanced techniques like the “diagnosis-driven yield analysis” he describes are becoming necessary with the emergence of design-sensitive defects. He’ll cover two of my favorite things: DFM-aware yield analysis, and machine learning techniques that allow for keen statistical analysis of diagnosis data. As a side note, if you are interested in test and yield issues, Semiwiki has a new forum on the topic.

On Wednesday, we get the greatest hits of the most recent International Test Conference (ITC). It starts with Friedrich Hapke, the director of engineering for silicon test at Mentor Graphics in Germany, reprising a popular ITC paper, “Cell-aware Analysis for Small-delay Effects and Production Test Results from Different Fault Models.” He describes how to find defects inside of standard cells. This “cell-aware” testing greatly improves overall defect coverage. He will show industrial results (from AMD) on 45nm and 32nm designs to support that claim.

Cell-aware testing uses user-defined fault models (UDFM), and you can learn about that from this Mentor whitepaper, User Defined Fault Models.

After taking in all the interesting test and yield analysis sessions, be sure to mosey over to the show floor for happy hour refreshments, and to tell me what you found most interesting. Happy hour is Wednesday at 3:30. Don’t forget to register for SEMICON West.

Cadence’s NVM Express: fruit from subsystem IP based strategy

If we look at SoC design evolution, we have certainly passed several steps successfully: from transistor-by-transistor IC design using Calma up to a design methodology based on the integration of 500K+ gate IP like a PCIe gen-3 Controller, one out of several dozen IP blocks integrated in today’s SoC for a Set-Top-Box or Wireless Application Processor. Are we naïve if we think the next step should be subsystem IP? It would really make sense to use an IP aggregation, organized in such a way that it could support a specific application!

The list of benefits is long: first, it will certainly speed up the design integration phase, thus offering a better Time-To-Market (TTM). The above-mentioned SoCs, designed in 28nm technology, require an investment in the $40M to $80M range, and they have to hit the market in line with end-user expectations (better video quality, lower power consumption offering longer time between battery charges, higher data throughput allowing faster data download, and so on). Being able to deliver the right SoC just in time allows the chip maker to sell at the right price, offering enough gross profit margin (GPM) so that it can satisfy investors and continue to invest and gain market share. Missing the market window could put the product line, if not the overall company, at risk.

A subsystem IP based approach will also speed up the software development and validation phase: if the IP provider is able to propose the right tools, like the associated Verification IP (VIP), software development tools tightly coupled with the subsystem description model, and proper emulation and prototyping support, the (S/W & H/W) development team can simply go faster. That is good for TTM, helping to hit the market window, and good again for GPM!

The Cadence Design IP for NVM Express is the industry’s first IP subsystem for the development of SoCs supporting the NVM Express 1.0c standard, an interface technology used in the rapidly growing solid-state drive (SSD) market, and, in my opinion, an important step in the above-mentioned SoC evolution, as this solution includes:

- PCIe 8GT/s PHY IP

- NVMe and PCIe Controller IP combined (reducing engineering effort while optimizing the overall solution)

- Firmware accelerates the HW/SW integration effort

- Capabilities from VIP, UVM and IP products along with,

- Fast prototyping and virtualized software products to simplify the design process

Cadence has not chosen NVM Express by chance. In fact, the Denali acquisition brought to the Cadence IP portfolio both the PCIe Controller IP product and the NAND Flash Controller (Non-Volatile Memory = NVM) IP product, complemented by a PHY IP from an internal source.

The move from HDD to SSD is good news for end users (including myself, I definitely vote for HDD replacement by SSD!) and the move from the SATA interface to PCI Express is also good news: PCI Express offers many more features than SATA (virtualization and a high outstanding request count, to name just two). It also offers scalability, which SATA does not, and configurability, which is good for a subsystem IP, as Cadence’s potential customers will need to differentiate themselves from each other. I may come back in a later blog and talk about the potential drawbacks of subsystem IP, but I am firmly convinced that the benefits of this type of IP solution outweigh them, and that the SoC evolution will go on.

Eric Esteve from IPNEST

Apache Low Power Webinars

For those of you who didn’t get to DAC you can catch up on low power issues with Apache’s series of low-power webinars taking place late in July. All webinars are at 11am Pacific Time. Full details and registration on the Apache website here.


Crushed Blackberry

I wasn’t going to write about the cell phone business again for some time. After all, this is a site about semiconductor and EDA primarily. But the cell-phone business in all its facets is a huge semiconductor consumer and continues to grow fast (despite my morbid focus on those companies that do anything but).

But Research in Motion (RIM) announced their results last week. RIM is more famous by the name of its product, Blackberry. Crackberry as it used to be called. Now, not so much, it’s lost its addiction.

They lost 30% of their market share. In one quarter. A couple of years ago they had 20% market share. Now they have 5%. And the market grew four-fold, so in unit terms they are not off as much as it might seem. But if you stay static in a market that is growing explosively then you are not going to survive. And RIM is not going to survive. They have engaged bankers to explore strategic options (most of which probably involve dismembering the company since it is hard to see it as a going concern in its current form).
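To make the unit arithmetic concrete, here is a small Python check using only the rounded figures quoted above (20% share then, 5% share now, a market four times larger). These are the round numbers from this post, not exact shipment data.

```python
def relative_units(share_then, share_now, market_growth):
    """Units sold now relative to units sold then (1.0 means unchanged)."""
    return (share_now * market_growth) / share_then


# 20% of a market of size 1 versus 5% of a market of size 4.
ratio = relative_units(share_then=0.20, share_now=0.05, market_growth=4.0)
print(f"Unit volume now vs. then: {ratio:.2f}x")
```

With these round numbers the ratio comes out at 1.00x: unit volume is flat while the market around it has quadrupled.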

They announced two other big things. They are going to lay off 5,000 people. Since they have 15,000 employees that is a third of the company. Any company can lay off 5-10% of their people with minimal effect: people who don’t contribute, marginal products and so on. But nobody can lay off 30% of the company and “cut to success”. I don’t know anyone who ever did it.

Perhaps worse is that the new Blackberry operating system 10, which was meant to come out in 3Q, is now delayed until 2013 (and, as some commentators say, will probably never come out since RIM’s realistic runway isn’t that long). RIM had decided to focus on the business market (their core strength but also the slowest growing segment, especially in the new world of BYOD, Bring Your Own Device) so that might not matter that much since business is less affected by Christmas than consumer. But it’s a disaster by any measure. As a software manager in past lives I know how hard it is to forecast product delivery dates, especially when the world is changing around you (and management is changing your priorities on a daily basis) but a 6-month slip now is likely to be fatal. That poor software manager is also probably being told to do without 25% of his engineers. Doesn’t add up.

Anecdotally, what is killing RIM is Android. I have 3 friends who had Blackberries a couple of years ago. All three have Android phones now. Obviously iPhone is in the picture too. If it weren’t for Nokia, this would be the worst track record ever. And I don’t just mean in cell-phones: no companies have ever collapsed as fast as RIM and Nokia. Moreover, they play in a trillion dollar industry (of which there are only a handful: mobile, automotive, finance, medicine…) so a percentage point is worth around ten billion dollars.

Synopsys IP Strategy 2012

Synopsys is the dominant player in the commercial EDA and semiconductor IP markets so it is always interesting to hear what John Koeter, Vice President of Marketing for IP, Services and System Level Solutions, has to say. John presented “The Role of IP in a Changing Landscape” at the SemiCO IMPACT Conference and I talked to him again at DAC 2012.

John and I are science fiction fans and agree that fiction sometimes becomes fact. We are both currently reading a new David Brin book called Existence. It is too early to tell if it will finish strongly, but it certainly opens with some interesting ideas, including people in the near future who wear VR glasses to see virtual advertisements (real advertisements are banned: bad for the environment). Deals are concluded with a handshake duly witnessed by the goggles and immediately backed up to a secure site, creating a legally binding contract. The glasses can also tap into other people’s POV (if unencrypted). If you want to be really narcissistic, you can enter “tsoosu” mode (“to see ourselves as others see us”) and compile multiple POVs of yourself. And this is all covered in just two short pages at the beginning of the book.

John said he is looking forward to working with semiconductor designers to make amazing technology like this a reality:

“It is truly an exciting, dynamic, and challenging time in technology. Complex devices will require sophisticated SoCs which will require IP and EDA vendors to continue to provide increasingly sophisticated, well-integrated IP and EDA solutions.”

Well-integrated EDA and IP solutions… Interesting!

Notes from the Presentation:

- Smart everything

- Internet of things

- Everything connected

- Cloud Mobile

Interesting facts from Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Feb 14, 2012:

- Last year’s mobile data traffic was eight times the size of the entire global Internet in 2000

- Global mobile data traffic grew 2.3-fold in 2011, more than doubling for the 4th year in a row

- Mobile video traffic exceeded 50% for the first time in 2011

- Average smartphone usage nearly tripled in 2011

- In 2011, a 4th generation (4G) connection generated 28x more traffic on average than a non-4G connection

Tomorrow’s World:

- Reality -> Augmented Reality -> Blended Reality

- Search -> Agents -> Info that finds you and networks that know you

- 2D -> 3D -> Immersed Video -> Holographic

- Medical -> Mobile Medical -> Personal Medical

- Person to person -> Machine to Machine -> Human Machines

How Does This Affect Semiconductor Design:

- Computing -> Connectivity

- Creating Info -> Consuming Info

- Compute Power -> Battery Power

- Business -> Consumer

- At Your Desk -> Anywhere, Anytime

- Work -> Entertainment

Trends drive semiconductor process migration, increasing gate count and faster designs while requiring aggressive power management. In other words, design challenges are multiplying.

SoC = System on Chip = Software on Chip. Software development is half the time to market for a typical SoC and half the cost.

Software guys are pessimists:

Page’s Law = Software gets twice as slow every 18 months.

Wirth’s Law = Software gets slower more rapidly than hardware gets faster.

Economics of Scaling are tough but can be addressed:

- Process technology advances (20nm, 16nm, 14nm…)

- New transistor technology (FinFET)

- Innovative circuit design

- New layout techniques

- New transistor biasing techniques

IP Vendors need to provide more function and functionality!

IP Subsystems are the next evolution in the IP market. What is an IP Subsystem?

Complete solution: HW, SW, Prototype, pre-integrated and verified

SoC Ready: Seamlessly drop in and go

Ultimate SoC example: The human brain is capable of exaflop processing speeds, petabytes of storage, full 3D image processing, is fully capable of augmented reality, and runs on 12 watts of power. Semiconductor technology has a ways to go but we can get there.

Dragon Boats and TSMC 20nm Update!

My luck continues as I missed last week’s typhoon. Fortunately it did not disrupt the annual Dragon Boat Festival. More than just a Chinese tradition, dragon boat racing is an international sports event with teams from around the world coming to Taiwan every year. It is very exciting with the colorful dragon boats and the wild beating of the drums to spur the rowers on. It is an early version of crew (rowing), which is one of the oldest Olympic sports I’m told.

Even more exciting, TSMC has 20nm up on the TSMC website now! Exciting for me at least! This is really cool stuff and it is right around the corner. I also like the new TSMC website and banner ads. It really does show a much more progressive communication style for a foundry.

TSMC provides the broadest range of technologies and services in the Dedicated IC Foundry segment. In addition to general-purpose logic process technology, TSMC’s More-Than-Moore technologies support customers’ wide-ranging needs for devices that integrate specialty features with CMOS logic ICs. TSMC’s More-Than-Moore technologies offer the segment’s richest technology mix, and unmatched manufacturing excellence. Through TSMC Open Innovation Platform™, we provide a robust portfolio of time-to-volume foundry and design services, including front-end design, mask and prototyping services, backend packaging and test services, and front-to-back logistics, to speed up More-Than-Moore innovations.

Applications driving 20nm anytime, anywhere, any device

20nm technology is under development to provide best speed/power value for both performance driven products like CPU (Central Processing Unit), GPU (Graphics Processing Unit), APU (Accelerated Processing Unit), FPGA (Field-Programmable Gate Array) and mobile computing applications including smartphones, tablets and high-end SoC (System-on-a-Chip).

Regarding the constant 20nm scaling questions, TSMC 20nm is said to offer a 30%+ performance gain and 25%+ power savings versus 28nm. Has anybody heard what other foundries are claiming lately? It will be interesting to see what the fabless companies can do with 20nm silicon. The success of 28nm will certainly be hard to beat, but I can tell you one thing: the fabless guys are spending a lot of time in Hsinchu, and EDA and IP vendors are camping out there as well. You will be hard pressed to tell the difference between the old guard IDMs and the leading edge fabless companies’ process technology groups, except of course their CAPEX! Expect 20nm risk production to start in Q4 2013, two years to the quarter after 28nm.

TSMC is the world’s largest dedicated semiconductor foundry, providing the industry’s leading process technology and the foundry segment’s largest portfolio of process-proven libraries, IPs, design tools and reference flows. The Company’s managed capacity in 2011 totaled 13.22 million (8-inch equivalent) wafers, and TSMC is the first foundry to provide 28nm production capabilities.

TSMC’s mission is to be the trusted technology and capacity provider for the global logic IC industry for years to come.

Notice it says “capacity” now. Company mission statements are also reminders for employees so you can bet capacity will be on everyone’s mind for process nodes to come, believe it.

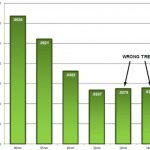

The Scariest Graph I’ve Seen Recently

Everyone knows Moore’s Law: the number of transistors on a chip doubles every couple of years. We can take the process roadmap for Intel, TSMC or GF and pretty much see what densities we will get when 20/22nm, 14nm and 10nm arrive. Yes, the numbers are on track.

But I have always pointed out that this is not what drives the semiconductor industry. It is much better to look at Moore’s Law the other way around, namely that the cost of any given functionality implemented in semiconductors halves every couple of years. It is this which has meant that you can buy (or even your kid can buy) a 3D graphics console that contains graphics way beyond what would have cost you millions of dollars 20 years ago in a state of the art flight simulator.
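As a back-of-the-envelope check of that cost view, here is a small Python sketch. It assumes an exact two-year halving period and a $1M starting cost, both chosen purely for illustration since “every couple of years” and “millions of dollars” are only approximate.

```python
def cost_after(initial_cost, years, halving_period_years=2.0):
    """Cost of a fixed piece of functionality after `years`,
    assuming the cost halves once per halving period."""
    return initial_cost / (2 ** (years / halving_period_years))


# Twenty years is ten halvings, i.e. a factor of 2**10 = 1024.
print(cost_after(1_000_000, 20))  # 976.5625
```

Under those assumptions, something that cost a million dollars drops to roughly a thousand dollars two decades later, which is the kind of decline that puts flight-simulator graphics into a games console.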

But look at this graph:

This shows the cost for a given piece of functionality (namely a million gates) in the current process generation and looking out to 20nm and 14nm. It is flat (actually perhaps getting worse). This might not matter too much for Intel’s server business since those have such high margins that they can probably live with a price that doesn’t come down as much as it has done historically. And they can make real money by putting more and more onto a chip. But it is terrible for businesses like mobile computing that don’t live on the bleeding edge of the maximum number of transistors on a chip. If you are not filling up your 28nm die and a 20nm die costs just the same (and is much harder to design) why bother? Just design a bigger 28nm die (there may be some power savings but even that is dubious since leakage is typically an increasing challenge).

If this graph remains the case, then Moore’s Law carries on in the technical sense that you can put twice as many transistors on your chip if you can think of something clever to do with them and can find a way to keep enough of them powered on. But it means there is no longer an economic driver to move to a new process unless you have run out of space on the old one.

Since EDA mostly makes money on designs in new processes (because they need new tools which can be sold at a premium) this is bad for EDA. It actually doesn’t make money on the first few designs coming through a new process because there is so much corresponding engineering to be done. But if the mainstream never moves, the cash-cow aspect of selling EDA tools to the mainstream won’t happen. And just like there is no business selling “microprocessor design tools” since there are too few groups who would buy them and their needs are too different, there might never be a big enough market for “14nm design tools” to justify the investment.

So that’s why this is the scariest graph in EDA.

Chip Synthesis at DAC

I visited Oasys Design Systems and talked to Craig Robbins, their VP of sales. For the first time this year, Oasys has theater presentations and demos of RealTime Designer that are open to anyone attending the show. In previous years, they have had suite demos for appropriately qualified potential customers but outside they have just had videos. Funny videos, but you don’t really get to look under the hood in them.

The theme of the theater presentation was “right here, right now” to reflect the fact that RealTime Designer is…err…real. As is Oasys themselves, having just had a cash injection from the #1 semiconductor company and the #1 FPGA company. That would be Intel Capital and Xilinx.

Oasys are proud of their customer list too: Qualcomm, Netlogic (now part of Broadcom), Texas Instruments. With Xilinx and Intel Capital they have relationships with the top US semiconductor companies. After all, if companies like these are doing their most challenging designs with Oasys then that is a true vote of confidence in the technology. It is really hard to tell if an engine is any good just by taking the cylinder-head off; it is much easier to see who is confident enough to put the engine in their cars.

RealTime Designer’s big claim to fame is that it is blazingly fast and has huge capacity. Traditional synthesis takes in RTL and converts it to a rough-and-ready netlist and then optimizes that netlist. This requires the whole netlist to be in memory (so it needs a lot of it) and means that only small incremental improvements are possible. Thus to get anywhere, it needs to make millions of these little changes, which takes a long time. RealTime Designer operates by partitioning the RTL into small regions and reducing each of those to a fully-placed netlist. If the design doesn’t meet its constraints (paths with negative slack, meaning the netlist is too slow) then it returns to the RTL level, repartitions (if appropriate), resynthesizes and re-places just that small region and perhaps its neighbors. This turns out to converge much faster on a solution with good quality of results (QoR), requiring only thousands of adjustments.
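The partition-and-repair idea can be sketched as a toy loop in Python. This is emphatically not Oasys’s algorithm: the region names, the slack numbers and the `synthesize` model (each extra unit of effort buys 100 ps of slack) are all invented for illustration, and the sketch leaves out repartitioning and neighboring regions entirely.

```python
def synthesize(region, effort):
    """Toy stand-in for synthesis plus placement of one region.
    Returns slack in picoseconds; the 100 ps per effort step is made up."""
    return region["base_slack_ps"] + 100 * effort


def converge(regions, max_passes=10):
    """Re-run only the regions that miss timing until every slack is >= 0."""
    effort = {r["name"]: 0 for r in regions}
    slack = {r["name"]: synthesize(r, 0) for r in regions}
    reruns = 0
    for _ in range(max_passes):
        failing = [r for r in regions if slack[r["name"]] < 0]
        if not failing:
            break
        for r in failing:
            # Only the failing region is redone; passing regions are untouched.
            effort[r["name"]] += 1
            slack[r["name"]] = synthesize(r, effort[r["name"]])
            reruns += 1
    return slack, reruns


# Hypothetical design split into four regions.
regions = [
    {"name": "alu", "base_slack_ps": 50},
    {"name": "fpu", "base_slack_ps": -150},
    {"name": "cache", "base_slack_ps": -20},
    {"name": "io", "base_slack_ps": 300},
]

final_slack, reruns = converge(regions)
print(final_slack, reruns)
```

The point of the sketch is simply that work is spent only where timing fails, so the number of adjustments scales with the number of problem regions rather than with the size of the whole netlist.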

As a result RealTime Designer has a capacity of over 100M gates and runs 5-40 times faster than traditional synthesis tools.

The demo on the show floor didn’t actually run RealTime Designer live (most of the time) since most people don’t even have the patience to watch a 15-minute demo and presentation. But when they did run it live, 15 minutes is all that the demo design took to synthesize. How big was it? It was a full-chip 6 million gate quad-core SPARC T1, 421 macros, 261 I/O pads, 1.2GHz clock in 65nm.