Paul Otellini’s greatest fear in his chase to have Intel win the smartphone and tablet space is that he opens the door to significant ASP declines in his current PC business. This is the Innovator’s Dilemma writ large. In 2011, Intel’s PC business (excluding servers) was $36B at an average ASP of $100. Within that model is an Ultra Low Voltage (ULV) product line that powers the Apple MacBook Air and upcoming ultrabooks and sells for over $200. For 15 years, Intel placed a significant premium on low-power parts because they yielded from the top speed bins and were, for a time, quite frankly a nuisance. With the Ultrabook push, Intel was looking forward to increasing ASPs because it would garner more of the graphics content and not have AMD around as competition. However, Apple’s success with the iPad, using a $25 part with less than half of Ivy Bridge’s performance and built on older process technologies, is upsetting Intel’s model. What comes next?

Continue reading “Intel’s Haswell and the Tablet PC Dilemma”

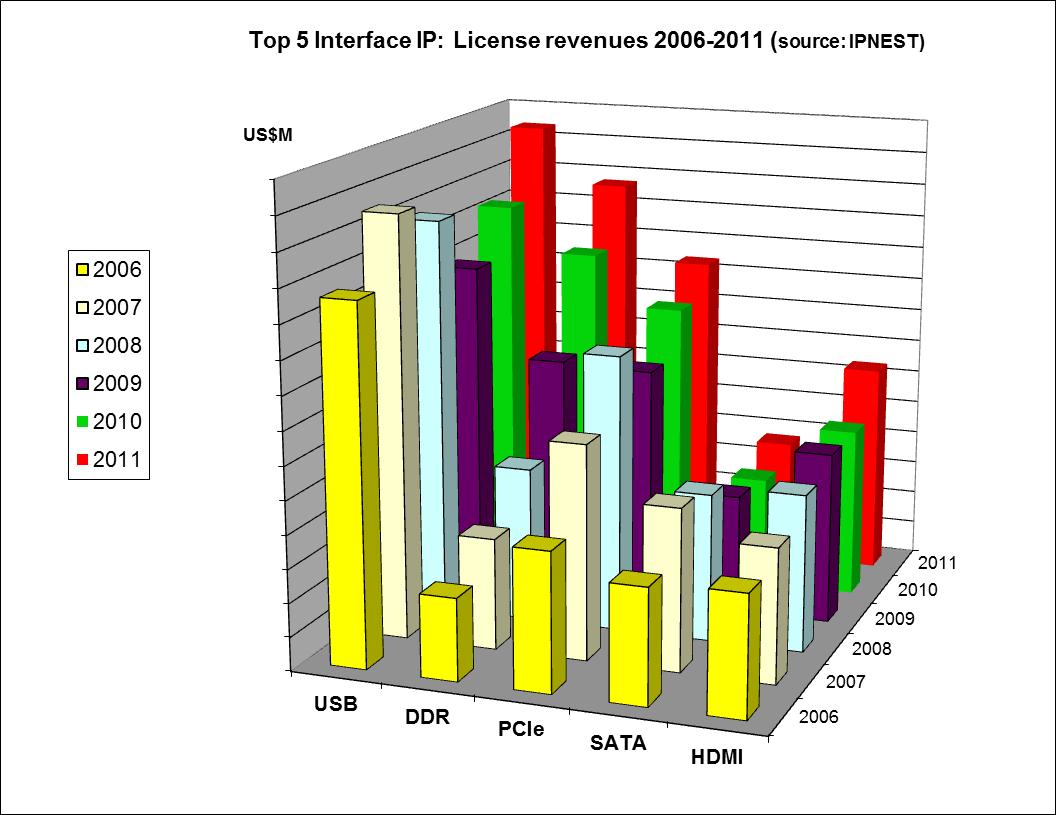

A brief history of Interface IP, the 4th version of IPNEST Survey

The industry is moving extremely fast from the “old” way of interconnecting devices with parallel buses to a more efficient approach based on High Speed Serial Interconnect (HSSI) protocols. HSSI has become the preferred solution over parallel buses for new products across various segments. These new Interface functions differ from parallel bus based Interfaces like PCI in their use of High Speed Serial I/O, based on a multi-gigabit per second SerDes; this analog part, which interacts with the external world, is the PHY. Another strong differentiation is the specification of packet based protocols, coming from the Networking world (think about ATM or Ethernet), which require a complex digital function, the Controller. PHY and Controller can be designed in-house, but as both are at the leading edge of their respective technologies, Analog and Mixed-Signal (AMS) or Digital, external sourcing of IP functions is becoming the trend.

The list of Interface technologies we review grows longer almost every year: USB, PCI Express, HDMI, SATA, MIPI, DisplayPort, Serial RapidIO, Infiniband, Ethernet and DDRn. In fact, Infiniband and DDRn do not exactly fit the definition given above, as the data are still sent in parallel, with the clock as a separate signal. But the race for higher data bandwidth has pushed the DDR4 specification up to 2,133 Mb/s per pin, which in practice requires a specific hard-wired I/O (PHY) and a controller, as for the other serial interfaces.

IPNEST proposed the first version of the “Interface IP Survey” back in 2009, as this specific IP market was already worth $230 million in 2008 and was expected to grow at a 10%+ CAGR for the next 5 to 7 years. The Interface IP market was, and still is, a promising one (large and still growing), attracting many newcomers and generating good business for established companies like Synopsys and Denali (now Cadence), as well as for smaller companies like Virage Logic (now Synopsys), ChipIdea (now Synopsys), Arasan or PLDA, to name a few. The success of the survey came last year with the 3rd version, which was sold to major IP vendors, but also to smaller vendors, to ASIC Design Services companies and to Silicon foundries, as well as to IDM and Fabless companies.
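To give a feel for what that growth expectation implies, here is a minimal sketch of the compound-growth arithmetic. It assumes a flat 10% annual rate (the text says “10%+”, so this is a floor) applied to the $230 million 2008 figure; the function name `project_market` is illustrative only, and the results are plain arithmetic, not IPNEST forecasts.

```python
def project_market(base_musd: float, cagr: float, years: int) -> float:
    """Compound a starting market size (in $M) at a constant annual rate."""
    return base_musd * (1.0 + cagr) ** years


BASE_2008_MUSD = 230.0  # 2008 market size quoted in the text
ASSUMED_CAGR = 0.10     # assumption: exactly 10% per year, the low end of "10%+"

# The text gives a horizon of 5 to 7 years, so evaluate both ends.
for horizon in (5, 7):
    size = project_market(BASE_2008_MUSD, ASSUMED_CAGR, horizon)
    print(f"{2008 + horizon}: about ${size:.0f}M")
```

Under these assumptions the market would reach roughly $370 million after five years and roughly $448 million after seven.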

What type of information can be found in the survey, and in particular in the latest version, the 4th, issued these days? IPNEST not only provides market share information, protocol by protocol and year by year for 2006 to 2011 (when relevant), but also does real research work to answer many other questions. These are the questions you try to answer when you are Marketing Manager for an IP vendor (I was in charge of this job for IP vendors), when you are in charge of business development for an ASIC (Design Service or Foundry) company (which I did for TI and Atmel a while ago), or when you need to take the make-or-buy decision when managing a project for an IDM or fabless chip maker: if the decision is finally to buy, from whom should I buy, and at what price? The types of answers IPNEST customers find in the “Interface IP Survey” are:

- 2012-2016 Forecast, by protocol, for USB, PCIe, SATA, HDMI, DDRn, MIPI, Ethernet, DisplayPort, based on a bottom-up approach, by design start by application

- License price by type for the Controller (Host or Device, dual Mode)

- License price by technology node for the PHY

- License price evolution: technology node shift for the PHY, Controller pricing by protocol generation

- By protocol, competitive analysis of the various IP vendors: when you buy an expensive and complex IP, the price is important, but other issues count as well, like

- Will the IP vendor stay in the market, keep developing the new protocol generations?

- Is the PHY IP vendor linked to one ASIC technology provider only, or does it support various foundries?

- Is one IP vendor “ultra-dominant” in this segment, so that my chance of success is weak if I plan to enter this protocol market?

These are precise questions that you need to answer before developing an IP, or before buying one to integrate into your latest IC; in both cases this is your most important challenge, whether you are an IP vendor or a chip maker. But the survey also addresses questions for which a binary answer does not necessarily exist, giving you the guidance you need to select a protocol and make sure your roadmap aligns with market trends and your customers’ needs. For example, if you develop for the storage market, you will not ignore SSD technology. But when deciding to support SSD, will you interface it with SATA, SATA Express, NVM Express or a homemade NAND Flash controller? Or, if you develop a portable electronic device for the consumer electronics market, should you start integrating MIPI technology now? For many of these questions, we propose answers based on 30 years of industry experience acquired in the field. That is also why we have built a 5-year forecast for each survey, based on a bottom-up approach, which we propose to our customers with a good level of confidence, even if we know that any economic recession could heavily modify it.

You now probably understand better why IPNEST is the leader in IP-dedicated surveys, enjoying this long customer list:

For those who want to know more, you can read the eight-page Introduction, extracted from the 4th version of the “Interface IP Survey”, issued in August 2012, which will be proposed to Semiwiki readers very soon here.

Eric Esteve from IPNEST –

Table of Contents for “Interface IP Survey 2006-2011 – Forecast 2012-2016” available here

Have You Ever Heard of the Carrington Event? Will Your Chips Survive Another?

In one of those odd coincidences, I was having dinner with a friend last week and somehow the Carrington Event came up. Then I read a piece in EETimes about whether electrical storms could cause problems in the near future. Even that piece didn’t mention the Carrington Event so I guess George Leopold, the author, hasn’t heard of it.

It constantly surprises me that people in electronics have not heard of the Carrington Event, the solar storm of 1859. In fact my dinner companion (also in EDA) was surprised that I’d heard of it. But it is a “be afraid, be very afraid” type of event that electronics reliability engineers should all know about as a worst case they need to be immune to.

Solar flares follow an 11-year cycle, aka the sunspot cycle. The peak of the current cycle is 2013 or 2014. This cycle is unusual for its low number of sunspots and there are predictions that we could be in for an extended period of low activity like the Maunder minimum from 1645-1715 (the little ice age when the Thames froze every winter) or the Dalton minimum from 1790-1830 (where the world was also a couple of degrees colder than normal). Global warming may be just what we need; it is cooling that causes famines.

But for electronics, the important thing is the effect of coronal mass ejection which seems to cause solar flares (although the connection isn’t completely understood). Obviously the most vulnerable objects are satellites since they lack protection from the earth’s magnetic field. There was actually a major event in March but the earth was not aligned at a vulnerable angle and so nothing much happened and the satellites seemed to survive OK.

But let’s go back to the Carrington Event. It took place from August 28th to September 2nd 1859. It was in the middle of a below average solar cycle (like the current one). There were numerous solar flares. One on September 1st, instead of taking the usual 4 days to reach earth, got here in just 17 hours, since a previous one had cleared out all the plasma solar wind.

On September 1st-2nd the largest solar geomagnetic storm ever recorded occurred. We didn’t have electronics in that era to be affected but we did have telegraphs by then. They failed all over Europe and North America, in many cases shocking the operators. There was so much power that some telegraph systems continued to function even after they had been turned off.

Aurora Borealis (Northern Lights) was visible as far south as the Caribbean. It was so bright in places that people thought dawn had occurred and got up to go to work. Apparently it was bright enough to read a newspaper.

Of course telegraph systems stretch for hundreds or thousands of miles so have lots of wire to intercept the fields. But the voltages involved seemed to be huge. It doesn’t seem that different from the magnetic pulse of a nuclear bomb. And these days, our power grids are huge, and connected to everything except our portable devices.

Imagine the chaos if the power grid shuts down, if datacenters go down, if the chips in every car’s engine-control-unit fry and all our cell-phones stop working. And even if your cell-phone survives, with no power you don’t have that long before its battery runs out. It makes you realize just how dependent we are on electronics continuing to work.

In 1859 telegraph systems were down for a couple of days and people got to watch some interesting stuff in the sky. But here is the conclusion from a NASA event last year in Washington that looked at what would happen if another Carrington Event occurred: “In 2011 the situation would be more serious. An avalanche of blackouts carried across continents by long-distance power lines could last for weeks to months as engineers struggle to repair damaged transformers. Planes and ships couldn’t trust GPS units for navigation. Banking and financial networks might go offline, disrupting commerce in a way unique to the Information Age. According to a 2008 report from the National Academy of Sciences, a century-class solar storm could have the economic impact of 20 hurricane Katrinas.”

Actually, to me, it sounds a lot worse than that. More like Katrina hitting everywhere, not just New Orleans. That’s a lot more than 20. To see how bad a smaller event can be: “In March of 1989, a severe solar storm induced powerful electric currents in grid wiring that fried a main power transformer in the HydroQuebec system, causing a cascading grid failure that knocked out power to 6 million customers for nine hours while also damaging similar transformers in New Jersey and the United Kingdom.”

Wikipedia on the Carrington Event is here. The discussion at NASA here.

Built to last: LTSI, Yocto, and embedded Linux

The open source types say it all the time: open is better when it comes to operating systems. If you’re building something like a server or a phone, with either a flexible configuration or a limited lifetime, an open source operating system like Linux can put a project way ahead.

Linux has always started with a kernel distribution, with a set of features ported to a processor. Drivers for common functions, like disk storage, Ethernet, USB, and OpenGL graphics were abstracted enough so they either dropped in or were easily ported. Support for new peripheral devices usually emerged from the community very quickly. Developers grew to love Linux because it gave them, instead of vendors, control.

Freedom comes with a price, however. In the embedded world, where change can be costly and support can be the never-ending story over years and even decades, deploying Linux has not been so easy. Mind you, it sounds easy, until one thing becomes obvious: Linux has been anything but stable when viewed over a period of years. Constant innovation from the community means the source tree is a moving target, often changing hourly. What was built yesterday may not be reproducible today, much less a year from now.

The first attempts at developing embedded Linux involved “freezing” distributions, which produced a stable point configuration. A given kernel release with support for given peripherals could be integrated, built and tested, and put into change control and released. In moderation this worked well, but after about 100 active products showed up in a lab with 100 different frozen build configurations, the scale of juggling became problematic. Without the advantage of being able to retire obsolete configurations, Linux started becoming less attractive for longer lifecycles, and embedded developers were forced to step back and look harder.

In what the folks at The Linux Foundation have termed “the unholy union of innovation and stability”, the best brains are trying to bring two efforts – defining what to build and how to build it – to bear to help Linux be a better choice for embedded developers.

LTSI, the Long Term Support Initiative, is backed by many of the companies we discuss regularly here, including Intel, Mentor Graphics, NVIDIA, Qualcomm, and Samsung. LTSI seeks to create a stable tree appropriate for consumer devices living 2 to 3 years, and yet pick up important new innovations in a road-mapped effort. The goal is to “reduce the number of private [Linux] trees current in use in the CE industry, and encourage more collaboration and sharing of development resources.”

The Yocto Project focuses on tools, templates, and methods to build a Linux tree into an embedded distribution. This doesn’t sound like a breakthrough until one considers that almost all embedded development on Linux has been roll-your-own, and as soon as a development team deviates and does something project specific to meet their needs, they lose the benefits of openness because they can’t pull from the community without a big retrace of their steps. The Yocto Project recently announced a compliance program, with Mentor Graphics among the first companies to comply, and Huawei, Intel, Texas Instruments and others participating and moving toward compliance. Yocto has also announced a joint roadmap with LTSI.

With software becoming a larger and larger part of projects – some say 70% and growing – and open source here to stay, these initiatives seek to help Linux be built to last.

The GLOBALFOUNDRIES Files

There’s a new blogger in town, Kelvin Low from GLOBALFOUNDRIES. Kelvin was a process engineer for Chartered Semiconductor before moving on to product marketing for GF. His latest post, which is worth a read, talks about the GF 28nm SLP process. There was quite the controversy over the Gate-First HKMG implementation of 28nm that IBM/GF/Samsung use versus the Gate-Last implementation from Intel and TSMC. One of the benefits of the GF version is very low power:

SLP targets low-power applications including cellular base band, application processors, portable consumer and wireless connectivity devices. SLP utilizes HKMG and presents a 2x gate density benefit, but is a lower cost technology in terms of the performance elements utilized to boost carrier mobilities.

Anyway, Kelvin is a great addition to GF’s Mojy Chian and Mike Noonen. I look forward to reading more about customer applications at 28nm and beyond.

28nm-SLP technology – The Superior Low Power, GHz Class Mobile Solution

Posted on September 4, 2012

By Kelvin Low

In my previous blog post, I highlighted our collaborative engagement with Adapteva as a key factor in helping them deliver their new 64-core Epiphany-4 microprocessor chip. Today I want to talk about the second key ingredient in enabling their success: the unique features of our

28nm-SLP technology: Enabling Innovation on Leading Edge Technology

Posted on August 30, 2012

By Kelvin Low

It’s always great to see a customer celebrate their product success, especially when it’s developed based on a GLOBALFOUNDRIES technology. Recently, one of our early lead partners, Adapteva, announced sampling of their 28nm 64-core Epiphany-4 microprocessor chip. This chip is designed on our 28nm-SLP technology which offers the ideal balance of low power, GHz class performance and optimum cost point. I will not detail the technical results of the chip but will share a quote by Andreas Olofsson, CEO of Adapteva, in the recent company’s press release…

Innovation in Design Rules Verification Keeps Scaling on Track

Posted on August 28, 2012

By Mojy Chian

There is an interesting dynamic that occurs in the semiconductor industry when we talk about process evolution, roadmaps and generally attempt to peer into the future. First, we routinely scare ourselves by declaring that scaling can’t continue and that Moore’s Law is dead (a declaration that has happened more often than the famously exaggerated rumors of Mark Twain’s death). Then, we unfailingly impress ourselves by coming up with solutions and workarounds to the show-stopping challenge of the day. Indeed, there has been a remarkable and consistent track record of innovation to keep things on track, even when it appears the end is surely upon us…

Breathing New Life into the Foundry-Fabless Business Model

Posted on August 21, 2012

By Mike Noonen

Early last week, GLOBALFOUNDRIES jointly announced with ARM another important milestone in our longstanding collaboration to deliver optimized SoC solutions for ARM® processor designs on GLOBALFOUNDRIES’ leading-edge process technology. We’re extending the agreement to include our 20nm planar offering, next-generation 3D FinFET transistor technology, and ARM’s Mali™ GPUs…

Re-defining Collaboration

Posted on July 18, 2012

By Mojy Chian

The high technology industry is well known for its use – and over-use – of buzzwords and jargon that can easily be rendered meaningless as they get saturated in the marketplace. One could argue ‘collaboration’ is such an example. While the word itself may seem cliché, the reality is that what it stands for has never meant more…

Wireless Application: DSP IP core is dominant

If we look back to the early 90s, when the Global System for Mobile Communication (GSM) standard was just an emerging technology, the main innovation was the move from analog to Digital Signal Processing (DSP), which allows unlimited manipulation of an analog signal once it has been digitized by a converter (ADC). To run the digital baseband processing (see picture), the system designer had to implement the Vocoder, Channel codec, Interleaving, Ciphering, Burst formatting, Demodulator and (Viterbi) Equalizer. Digital signal processing was already heavily used in military applications like radar, but was only emerging in telecommunications. The very first GSM mobile handsets built from standard parts (ASSPs) used no fewer than three TI 320C25 DSPs, each of them costing several dozen dollars!

Very quickly, it appeared that chip makers developing ICs for mobile handset baseband processing should rely on ASIC technology rather than ASSPs, for two major reasons: cost and power consumption. As one company, Texas Instruments, was dominating the DSP market, the mobile handset manufacturers Ericsson, Nokia and Alcatel had to push TI to offer a DSP core that could be integrated into an ASIC developed by these OEMs. From 1995 to the early 2000s, thanks to its dominant position in the DSP market, TI was the undisputed leader in manufacturing baseband processors, through ASIC technology, for the GSM handset OEMs, who at that time also developed the ICs themselves.

But a small company named DSP Group had appeared in the late 90s, offering a DSP IP core that was not linked to any existing DSP vendor (TI, Motorola or Analog Devices) and, even more important, not linked to any ASIC technology vendor. The merger of the IP licensing division of DSP Group with Parthus was named CEVA, and CEVA’s DSP was specially tailored for the wireless handset application. TI’s competitors (VLSI Technology, STMicroelectronics and more) were certainly happy, as they could offer an alternative solution to Nokia et al., but it took some time before these OEMs decided to move the S/W installed base from the TI DSP to the CEVA DSP IP core, which they had to do if they moved from TI to another supplier. It was a long road, but CEVA today counts most of the leading Application Processor chip makers in its customer list, namely:

This helps to understand why CEVA enjoyed a 70% market share for DSP IP products in 2011, according to the Linley Group. A 70% market share simply means that CEVA’s DSP IP was integrated into 1 billion ICs shipped in production in 2011! If the Teak DSP IP core was the company flagship in the early 2000s, the ever-increasing need for digital signal processing power associated with 3G and Long Term Evolution (LTE or 4G) has led it to propose various new products, the latest being the CEVA XC4000 DSP IP core:

And, by the way, the XC4000 targets various applications in addition to the wireless handset:

- Wireless Infrastructure

A scalable solution for Femtocells up to Macrocells

- Wireless connectivity

A single platform for: Wi-Fi 802.11a/b/g/n/ac, GNSS, Bluetooth and more

- Universal DTV Demodulator

A programmable solution targeting digital TV demodulation in software

Target standards: DVB-T, DVB-T2, ISDB-T, ATSC, DTMB, etc.

- SmartGrid

A single platform for: wireless PAN (802.11, 802.15.4, etc.), PLC (Power Line Communication), and Cellular communication (LTE, WCDMA, etc.)

- Wireless Terminals

Handsets, Smartphones, Tablets, data cards, etc.

Addressing: LTE, LTE-A, WiMAX, HSPA/+, and legacy 2G/3G standards

If we look at the wireless handset market, it appears that smartphones and media tablets, both based on the same SoC, the Application Processor, represent the natural evolution, and analysts forecast shipments of one billion smartphones and 200 million media tablets in 2015. If you look more carefully, you will discover that at least 50%, if not the majority, of these devices will be shipped in Asia and, to be more specific, mostly in China. An IP vendor neglecting China today would certainly decline within a few years. Looking again at CEVA’s customer list, we can see that many of the Application Processor chip makers selling in these new “Eldorado” markets have selected CEVA. This is a good sign that CEVA will maintain its 70% share of the DSP IP market in the future!

Just as the ARM IP core immediately comes to mind when you consider a CPU core for the Application Processor of a wireless handset or smartphone, CEVA DSP IP is the dominant solution for the DSP. Just a final remark: CEVA claims a design-in for its DSP IP in the Chinese version of the Samsung Galaxy S3, which will probably be the best-selling smartphone worldwide…

Eric Esteve from IPNEST –

Custom IC and AMS Tool Flow with Synopsys

The big three EDA companies all have Custom IC and AMS tool flows as shown in the following comparison table:

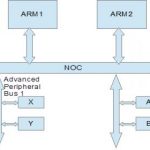

Hardware Intelligence for Low Power

Low power is the hottest topic these days. Designers of both hardware and software are trying to find places where they can save power. This article tries to identify the role hardware can play in an area that has traditionally been driven by software.


Wiring Harness Design

In 2003 Mentor acquired a company doing wiring harness design. Being a semiconductor guy, this wasn’t an area I’d had much to do with, though I expect I’d had more to do with it than most semiconductor people.

Back when I was an undergraduate, I had worked as a programmer for a subsidiary of Philips called Unicam that made a huge range of spectrometers and similar equipment for chemical analysis. These contained a brass cam (hence the name) that moved all the optics and had to be carefully machined. So carefully that the cam cutting lathes were in a temperature controlled room.

Each of these machines also contained a complicated wiring harness to hook up the electronics and the front panel and so on. The production volumes of spectrometers did not justify automating the manufacture of the harnesses. They were assembled by hand by a room full of women seated in front of drawing boards. On each drawing board was a carefully drawn full-scale diagram of the harness with pins to bend the wires around. When a harness was complete, someone would check it, and then the harness would be wrapped with cable-ties and sent off to be put into a spectrometer as it was assembled. The design was clearly done on the drawing boards by draftsmen by hand.

Then when I was at VaST I got involved in many meetings with automotive manufacturers. There is a wiring harness in each car. One of the reasons that automotive was increasingly interested in in-car networks such as CAN bus (unfortunately the C stands for ‘controller’ but ‘car area network’ seemed a better name) was that the wiring harnesses were starting to be embarrassingly large. They might weigh over 50kg, which affected mileage, and they had got so thick it was a real problem to find enough space to thread them through the car to get to the engine compartment, the dashboard, the rear lights and all the other places they needed to go. They were also incredibly complex to design. The wiring in the Airbus A380 caused major delays in the program; I hate to think how complex that wiring must be.

Wally Rhines told me once that the wiring harness products were a surprisingly fast growing business as they got more and more complex. Of course when you are used to putting a few billion wires on a chip, putting together a wiring harness seems almost primitive by comparison. Anyway, I decided to find out more and to watch Mentor’s webinar on the topic. It is given by John Wilson who created the company that Mentor acquired back in 2003.

The webinar is Virtual Design, Testing and Engineering Speeds Electrical and Wire Harness Design. It covers the wiring design process and how it integrates into the system and process for designing the vehicle itself. Typically harness design is outsourced to suppliers, who have to bid on it and then design and manufacture it.

VeSys Design provides an intuitive wiring design tool for the creation of wiring diagrams and the associated service documentation. It has integrated simulation facilities that can validate the design as it’s created, ensuring high quality. VeSys Harness is a harness and formboard design tool with an automated part selector and manufacturing report generation. It shrinks the design time and automates many steps in the design process. VeSys is a mid-level product for smaller companies.

For larger problems such as aerospace and automotive with more complex harnesses, there is a more powerful product line called Capital. This is oriented towards enterprise level deployment.

View the webinar here. White papers on electrical and wire harness design are here.

A Brief History of Semiconductors: the Foundry Transition

A modern fab can cost as much as $10B. That’s billion with a B. Since it has a lifetime of perhaps 5 years, owning a fab costs around $60 per second, and that’s before you buy any silicon or chemicals or design any chips. Obviously anyone owning a fab had better be planning on making and selling a lot of chips if they are going to make any money. A modern fab manufactures over 50,000 dinner-plate sized wafers every month.
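The back-of-envelope numbers above can be checked with a few lines of arithmetic. This is only a sketch under simple assumptions that are mine, not the article’s: the full $10B is spread evenly over exactly 5 years (straight-line, no financing or running costs), and the fab ships exactly 50,000 wafers a month. The function names are illustrative.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # ignoring leap years


def cost_per_second(capex_usd: float, lifetime_years: float) -> float:
    """Spread a one-off capital cost evenly over the fab's useful life."""
    return capex_usd / (lifetime_years * SECONDS_PER_YEAR)


def capex_per_wafer(capex_usd: float, lifetime_years: float,
                    wafers_per_month: float) -> float:
    """Capital cost carried by each wafer at a constant monthly output."""
    total_wafers = wafers_per_month * 12 * lifetime_years
    return capex_usd / total_wafers


FAB_COST_USD = 10e9        # "as much as $10B"
LIFETIME_YEARS = 5         # "perhaps 5 years"
WAFERS_PER_MONTH = 50_000  # the text says "over 50,000", so this is a floor

print(f"${cost_per_second(FAB_COST_USD, LIFETIME_YEARS):.2f} per second")
print(f"${capex_per_wafer(FAB_COST_USD, LIFETIME_YEARS, WAFERS_PER_MONTH):,.0f} "
      "of capital cost per wafer")
```

With these round inputs the capital cost comes to a little over $60 per second, the same order of magnitude as the estimate above, and to about $3,300 per wafer, which is why a fab owner has to keep the line full.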

In the past, fabs were cheaper. As a result most semiconductor companies owned their own fabs. In fact around 1980 there were no semiconductor companies that didn’t own their own fabs since there would be no way for them to manufacture their designs.

The first thing that happened was that some companies found they had excess capacity in their own fabs because an economically large fab might turn out to be larger than their own needs for their own product lines. Correspondingly, other companies had the opposite problem: they didn’t build a big enough fab or they were late constructing it, and they could sell more product than they could manufacture.

So semiconductor companies would buy and sell wafers from each other to even out their capacity needs. This was known as foundry business, analogous to a steel foundry. In a similar way, semiconductor companies with shortages would take their designs to other semiconductor companies with surplus capacity (often even competitors) and have them manufactured for them.

The next step in the evolution of the ecosystem was that in the mid-1980s some companies realized that they didn’t need to own a fab to have chips manufactured. These companies would purchase foundry wafers just like any other semiconductor company. These companies came to be called, for obvious reasons, fabless semiconductor companies. Two of the earliest were Chips and Technologies, who made graphics chips for the PCs of the day, and Xilinx, who made what are now known as field-programmable gate-arrays (FPGAs). They purchased wafers from other semiconductor companies and sold them just as if they’d manufactured them themselves. Chips and Technologies eventually was acquired after falling on hard times, but Xilinx is still the leader in FPGAs today. And despite being number one, it still doesn’t have its own fab; it outsources all manufacturing.

Semiconductor companies with fabs became known as integrated device manufacturers, or IDMs, to distinguish them from the fabless companies. In 1987 the first of another new breed of semiconductor companies was created with the founding of Taiwan Semiconductor Manufacturing Company (TSMC). TSMC was the first foundry, created only to do foundry business for other companies who needed to purchase wafers either because they were fabless or because they were capacity limited. It was also known, when the distinction was important, as a pure-play foundry to distinguish it from IDMs selling excess capacity who would often be competing at the component level with their foundry customers.

Until TSMC and its competitors came into existence, getting a semiconductor company off the ground was difficult and expensive. To build an IDM required an expensive fab. To build a fabless semiconductor company required a complicated negotiation for excess foundry capacity at a friendly IDM which might go away if the IDM switched from surplus to shortage as its business changed. Once TSMC existed, buying wafers was no longer a strategic partnership, you just gave TSMC an order.

This lowered the cost and the risk of creating a semiconductor company and during the 1990s, many fabless semiconductor companies were funded by Silicon Valley venture capitalists. Historically, a semiconductor company had to be large since it had to have enough business to fill its fab. Now a semiconductor company could have just a single product, buy wafers or finished parts from TSMC and sell them.

Over time, another change happened. Many system companies also switched from using the ASIC companies to doing their designs independently and then buying wafers from the foundries. The specialized knowledge about how to design integrated circuits that was lacking in the system companies in the 1980s was gradually acquired and by the 1990s many system companies had very large integrated circuit design teams. The ASIC companies gradually started selling more and more of their own products until they became, in effect, IDMs.

As fabs got more expensive, another change happened. IDMs such as Texas Instruments and AMD that had always had their own fabs found they could no longer afford them. Some switched to being completely fabless. For example, AMD sold its fabs to an investment consortium that turned them into a foundry called GLOBALFOUNDRIES. Others kept their own fabs for some of their capacity and purchased additional capacity, typically in the most advanced processes, externally. This was known as fab-lite.

This is the landscape today. There are a few IDMs such as Intel who build almost all of their own chips in their own fabs. There are foundries such as TSMC and GLOBALFOUNDRIES who build none of their own chips; they just build wafers for other companies. Then there are fabless semiconductor companies such as Xilinx and Qualcomm along with their fab-lite brethren such as Texas Instruments, who do their own design, sell their own products, but use foundries for all or part of their manufacturing.

A Brief History of Semiconductors

A Brief History of ASICs

A Brief History of Programmable Devices

A Brief History of the Fabless Semiconductor Industry

A Brief History of TSMC

A Brief History of EDA

A Brief History of Semiconductor IP

A Brief History of SoCs