There is an interesting Gizmodo review of an HTC Android-based smartphone. The basically positive review (as good as the iPhone, best Android phone at the time) ends with an update:

UPDATE: After more extensive testing there’s something a little weird going on. You’ll probably only see this while gaming, but there’s a little bit of stuttering that happens. You really notice it in games like Temple Run, where the processor seems to get a little overloaded and it misses a finger-swipe which kills you dead.

It’s troubling. If we had to hazard a guess as to what’s going on, it seems that the new Snapdragon S4 processor may be the culprit. While it doesn’t really have this problem with the One S, it has many more pixels to drive on the higher resolution One X and the EVO 4G LTE screens. It seems to strain under the weight of driving those pixels while handling a lot of graphics processing at once.

These are exactly the kinds of problems that virtual platforms combined with cycle-accurate processor models can uncover ahead of time, while there is still a chance to fix them. There is nothing so expensive as a bug in an SoC that escapes into the field. Of course the problem might not be in the S4 at all, and so might have nothing to do with Qualcomm, but Gizmodo’s guess is certainly very plausible.

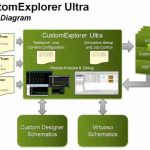

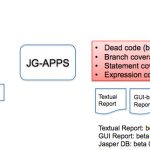

Carbon’s customers do indeed find these sorts of low-level problems, everything from driver bugs to performance issues like this one. The use model is to start with fast models of the processor to boot the operating system and start up an application (such as a game in this case). Then the processor state is frozen and the fast models are swapped for cycle-accurate models initialized with the frozen state. If this sounds tricky, that’s because it is. I think Carbon is the only virtual platform supplier that can do it. But it is the only way to get both speed and accuracy, since you can’t get both in a single model without compromising one or the other (or usually both).
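To make the swap concrete, here is a minimal toy sketch of the idea in Python. It is not Carbon's API or implementation: the names `ArchState`, `FastModel`, `CycleAccurateModel`, and `run_hybrid`, the two-opcode instruction set, and the latency numbers are all hypothetical, invented purely for illustration. The fast model only tracks functional state, the detailed model also counts cycles, and the hand-off happens through a frozen copy of the architectural state.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ArchState:
    """Architectural state shared by both models (hypothetical and tiny)."""
    pc: int = 0
    regs: list = field(default_factory=lambda: [0, 0, 0, 0])


def execute(state, instr):
    """Apply one instruction's functional effect to the state."""
    op, dst, val = instr
    if op == "addi":
        state.regs[dst] += val
    elif op == "muli":
        state.regs[dst] *= val
    else:
        raise ValueError(f"unknown opcode {op!r}")
    state.pc += 1


class FastModel:
    """Functional-only model: fast, but carries no timing information."""

    def __init__(self, program):
        self.program = program
        self.state = ArchState()

    def run(self, count):
        for _ in range(count):
            execute(self.state, self.program[self.state.pc])

    def freeze(self):
        # Snapshot the state so another model can start from it.
        return copy.deepcopy(self.state)


class CycleAccurateModel:
    """Same functional behavior, plus a cycle count (latencies are assumed)."""

    LATENCY = {"addi": 1, "muli": 3}

    def __init__(self, program, frozen_state):
        self.program = program
        self.state = copy.deepcopy(frozen_state)
        self.cycles = 0

    def run(self, count):
        for _ in range(count):
            instr = self.program[self.state.pc]
            self.cycles += self.LATENCY[instr[0]]
            execute(self.state, instr)


def run_hybrid(program, boot_length):
    """Boot on the fast model, then swap to the detailed model."""
    fast = FastModel(program)
    fast.run(boot_length)
    detailed = CycleAccurateModel(program, fast.freeze())
    detailed.run(len(program) - boot_length)
    return detailed.state, detailed.cycles


# A long "boot" phase followed by a short region of interest (the "game").
BOOT = [("addi", 0, 1)] * 1000
GAME = [("addi", 1, 2), ("muli", 1, 3)] * 5
PROGRAM = BOOT + GAME

if __name__ == "__main__":
    state, cycles = run_hybrid(PROGRAM, len(BOOT))
    print(state.regs, cycles)
```

In this toy, cycles are only counted for the region of interest, while the final architectural state matches what the fast model alone would have produced. The hard part in a real virtual platform is that the frozen state includes far more than a few registers (caches, peripherals, pending transactions), which is presumably why so few suppliers attempt it.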

Bill Neifert, Carbon’s CTO, wrote about virtual prototypes in wireless development in EETimes, although under their usual unhelpful rule that opinion pieces can’t mention companies or products. So Carbon sells virtual platforms (and other stuff), Bill is writing about virtual platforms and…wink, wink…I wonder what specific products he could possibly be referring to.