Our EDA industry loves three-letter acronyms, so credit the same industry for creating a five-letter acronym: CoWoS (Chip On Wafer On Substrate). Two weeks ago TSMC announced tape-out of their first CoWoS test chip integrating a JEDEC Wide I/O mobile DRAM interface, which made me interested enough to read more about it. At the recent TSMC Open Innovation Platform there was a presentation from John Park, Methodology Architect at Mentor Graphics, called “A Platform for the CoWoS Reference Flow”.

Electromigration (EM) with an Electrically-Aware IC Design Flow

Electromigration (EM) is a reliability concern for IC designers because a failure in the field could spell disaster, from lost human life to bankruptcy for a consumer electronics company. In the old days of IC design we would follow a sequential and iterative design process of:

ARM TechCon 2012 Trip Report

I must say the ARM conference gets better every year, as do the attendance numbers. More than 4,000 people showed up, including 5 SemiWiki bloggers, two of whom I had not yet had the pleasure of meeting.

First I have to mention my favorite vendor booth. I don’t remember what company it was but the girls in fishnet stockings giving out bottle openers were funny. I even chatted with one, a very nice lady from Ireland with a six-year-old daughter, just trying to make some money in a difficult economy. I also saw Granny Peggy Aycinena lurking in the shadows so expect fireworks on this one. The Granny nickname comes not from her age but from her bias against so-called “booth babes” at trade shows. You may remember Peggy from the DAC 2012 Cheerleader fiasco. Funny stuff!

Back to the conference, the Keynotes were very well done and even “Apple esque”. The speakers were polished, the presentations were content rich, there were nice videos, and the intermingling of industry executives and panels from the massive ARM ecosystem was great. I sat with Paul McLellan for the most part and he writes way much more better than me do so be sure and read his ARM TechCon blogs:

ARM 64-Bit

IBM Tapes Out 14nm ARM on Cadence Flow

The last keynote (Thursday morning) was ARM CEO Warren East. Saving the best for last was a good strategy to bolster traffic on the final day. The most interesting part of the keynote was the embedded FinFET panel discussion between TSMC, Samsung, and GLOBALFOUNDRIES. Spoiler alert: TSMC’s Shang-yi Chiang stole the show! I hope the video is up soon because it was great!

The first question was on the challenges we face moving forward and Shang-Yi said, “FORECASTING!” As in the 28nm shortages this year were due to bad forecasting not yield and manufacturing delays, which is absolutely true. As Shang-Yi said, “28nm demand was 2-3x the forecast.”

Shang-Yi was the first person to talk to me candidly about the 40nm yield problems while they were happening so I have faith in what he says. He is a genuine guy who puts the “trusted” in the “Our mission is to be the trusted technology and capacity provider of the global logic IC industry for years to come”.

Shang-Yi also mentioned cost as a challenge and for 20nm that is absolutely true. He also discussed the cost of an empty fab and let me tell you, there is going to be plenty of extra fab capacity next year as Samsung, UMC, and GLOBALFOUNDRIES get 28nm into full production.

The panel also discussed FinFETs, which is a common theme amongst most of the conferences this year. I will blog my issues with FinFETs next but the panel response was quite funny. Shang-Yi went first and said that Chenming Hu (the father of FinFETs) worked at TSMC and TSMC has been working on FinFETs for 10+ years so no worries. Not to be outdone, the Samsung guy said they have been working on FinFETs as long as TSMC and his concern was with variation, which is absolutely true with the fins. On Tuesday I was at a presentation about IBM’s 14nm FinFET test chip. Since when does IBM talk openly about stuff like that? GF also has FinFET bragging rights and had their own fireside chat at ARM TechCon talking about the “Lab to Fab Gap”:

Given all that boasting, if you had to bet today, who do you think will be first to get FinFETs into volume production? Would it be TSMC, Samsung, or GF? Remember, Intel does not use FinFETs, they use Tri-Gate transistors. 😉 Check out the SemiWiki FinFET poll HERE. Anybody can vote so please do.

Internet of Things

Another announcement from Warren East’s ARM keynote this morning was the creation of a SIG within Weightless, which is an organization responsible for delivering royalty-free open standards to enable the Internet of Things (IoT). The SIG is focused on accelerating the adoption of Weightless as a global wireless wide-area standard for machine-to-machine short- to medium-range communication. The founder companies are ARM, Cable and Wireless Worldwide, CSR (formerly Cambridge Silicon Radio) and Neul.

As Warren pointed out in his keynote, if you are very local there are a number of good standards such as WiFi and Bluetooth that you can use for wireless connectivity. If you are outside and want to go over 100m, say, then pretty much you have to use cellular technology such as GSM, CDMA or LTE. The trouble with that is that it is optimized for human communication such as voice or surfing the net and as a result it is very time and power intensive and the batteries don’t last long (think months).

Weightless operates in the whitespace being freed up as analog terrestrial television gives back its spectrum. This turns out to be ideal for low-power, medium-distance transmission for all the same reasons that it was picked as the band back when TV was invented: it transmits long distances well at low power, and can go through walls, into basements and so on. Plus this spectrum is standard worldwide: although there are (mostly were) several different analog TV standards (NTSC, PAL, SECAM), the spectrum used for analog TV was internationally standard. This gives it a 4-10X gain in efficiency over the bands where cellular operates. The spectrum, as it frees up, is unlicensed and does not require complex antenna engineering.

For machine-to-machine communication, where latency is not a problem, the SoCs can sleep most of the time. With cellular you can’t wait 15 minutes for your phone call to connect (although you can wait a few seconds, and I bet you didn’t know you already do). In applications like monitoring irrigation or power metering, a delay like that is not an issue, and the protocols can be optimized for it.

As an example of the mismatch between cellular standards and this kind of traffic, Gary Atkinson of ARM pointed out that a smart power meter using GPRS (the data part of the GSM standard) takes 2000 packets to transmit a single small piece of data, such as the current meter reading. It is not a fair comparison, of course, but that is the point. GPRS is set up to assume you are going to want to send a lot of data in a timely manner and has a heavyweight protocol to make that happen.

The target is a chipset cost under $2, a range of up to 10 km and a battery life of 10 years. A little different from the spec Apple is working on for the iPhone 6.

Jasper Apps White Paper

Just in time for the Jasper User Group meeting, Jasper have a new white paper explaining the concept of JasperGold Apps.

First the User Group Meeting. It is in Cupertino at the Cypress Hotel November 12-13th. For more details and to register, go here. The meeting is free for qualified attendees (aka users). One thing I noticed at the meeting last year was just how innovative some of the users were in how they used Jasper’s products and, as a result, how much the users learned from each other.

The white-paper covers the motivation for changing the way in which Jasper technology is configured and delivered. Historically, formal verification technology has been licensed as a comprehensive suite of tools that can be used to address a broad range of formal verification applications and problems. Such deployment required a wide range of in-depth skills on the user’s part before the technology could be leveraged by not only first time users, but also experienced ones. New users were often overwhelmed by the comprehensive nature of the technology and the steep learning curve, while experienced users wishing to deploy a narrow application scope across the organization were impeded by the all-in-one approach.

Early stage users generally prefer to adopt and deploy new design and verification methods using a low risk, step-by-step approach, which also allows them to accumulate skills and expertise incrementally. Experienced formal users are more likely to utilize focused capabilities to tackle specific issues and applications within design and verification, but must do so across an organization rather than on an individual basis. In both cases, users traditionally had to license an entire tool suite in order to access only a subset of its capability. Consequently, the all-in-one approach did not and does not provide for efficient deployment for either type of user.

Rather than deploying a general-purpose, all-in-one tool suite, many design teams need application-specific solutions that:

- Address a wide variety of verification applications throughout the design flow, enabling them to adopt formal technology, application-by-application;

- Enable teams to acquire the expertise necessary to address only the verification task(s) in hand;

- Allow teams to license only the technology appropriate for a particular application; and

- Eliminate or significantly mitigate the perceived risk in adopting unfamiliar technology.

JasperGold Apps are interoperable solutions, each of which targets an individual formal verification application. Using JasperGold Apps, design teams can adopt and expand their use of formal verification by employing a low-risk, application-by-application approach. Each JasperGold App provides all of the tool functionality and formal methodology necessary to perform its intended application-specific task, eliminating the need to license a complete formal verification suite. Each JasperGold App enables the user to acquire only the expertise necessary for the particular task at hand, eliminating the need to become expert in every aspect of formal verification.

Download the white paper from this page.

SpyGlass IP Kit 2.0

On Halloween, Atrenta and TSMC announced the availability of SpyGlass IP Kit 2.0. IP Kit is a fundamental element of TSMC’s soft IP9000 Quality Assessment program that assesses the robustness and completeness of soft (synthesizable) IP.

IP Kit 2.0 will be fully supported on TSMC-Online and available to all TSMC’s soft IP alliance partners on Nov. 20, 2012, just in time to make sure your turkey is SpyGlass Clean.

TSMC’s soft IP quality assessment program is a joint effort between TSMC and Atrenta to deploy a series of SpyGlass checks that create detailed reports of the completeness and robustness of soft IP. Currently, over 15 soft IP suppliers have been qualified through the program. IP Kit 2.0 represents an enhanced set of checks that adds physical implementation data (e.g., area, timing and congestion) and advanced formal lint checks (e.g., X-assignment, dead code detection). IP Kit 2.0 also allows easier integration into the end user’s design flow and enhanced IP packaging options.

On the same subject, IPExtreme had an all-day meeting at the Computer History Museum about, duh, IP. One of the companies presenting was Atrenta and here is a video of Michael Johnson’s presentation on IP Kit.

And, completely off topic, at the end of the IPExtreme event they served wine and beer and had a short presentation on each beforehand. Jessamine McLellan, then the sommelier at Chez TJ in Mountain View (now the bar manager at the not-yet-open Hakkasan in San Francisco), gave a presentation on pairing wine with food. That last name sounds a little familiar…

ARM and a LEG

I went to Warren East’s keynote speech at ARM Techcon today. There had been some hints earlier in the week that some significant announcements would be made and, while they were not earth-shattering, I think that they will be significant in the long term.

One interesting thing that Warren pointed out is that the ARM partner alliance is over 1000 companies strong. I don’t think anyone else can come anywhere close to that. It covers semiconductor partners, EDA partners, system companies, embedded software companies, standards bodies and more. It is quite an achievement to build up that large a portfolio of support so quickly. Back in the mid-1990s when I was at VLSI Technology, ARM had to set up a fund (that VLSI contributed to) to pay the real-time-operating-system companies such as Wind River and Green Hills to port to ARM. Now they could charge for the privilege. That’s quite a change.

For me the most interesting announcement was the creation of the Linaro Enterprise Group (or LEG, ARM and a LEG, geddit?). Linaro is the non-profit set up by partners to share development costs of Linux implementations, primarily for mobile, on ARM processors. It is all open-source, collaborative, sustainable and organic. They have 150 engineers. Linaro Enterprise Group (LEG) is a second group within the Linaro organization to do the same for Linux for ARM-based servers. They plan to add another 150 engineers by the end of this year. If they really mean 2012, that is a fast hiring ramp.

The initial companies signed up for LEG are AMD, AppliedMicro, Calxeda, Canonical, Cavium, Facebook, HP, Marvell and Red Hat, which join existing Linaro members ARM, HiSilicon, Samsung and ST-Ericsson. The company that immediately leaps out from that list, of course, is Facebook. The other companies are the usual suspects: semiconductor and SoC companies, and operating system companies. But Facebook is a user of this sort of technology.

There is a press release announcing LEG, full of the usual press-release sort of stuff. But echoing what I said earlier this week, “ARM servers are expected to be initially adopted in hyperscale computing environments, especially in large web farms and clusters, where flexible scaling, energy efficiency and an optimal footprint are key design requirements. The Linaro Enterprise Group will initially work on low-level Linux boot architecture and kernel software for use by SoC vendors, commercial Linux providers and OEMs in delivering the next generation of low-power ARM-based 32- and 64-bit servers. Linaro expects initial software delivery before the end of 2012 with ongoing releases thereafter.”

Oracle (not a member of LEG it seems) appeared and discussed how strategic it is to port Java to ARM’s 64 bit architecture. I was expecting them to announce a more ARM-focused server strategy in their own cloud datacenters or something, but things seemed to be restricted to Java.

There were also some announcements about the Internet of Things (IoT) but that deserves a separate post.

I was completely ready for some high-up from Facebook to come out and talk about how they are ready to deploy ARM-based datacenters but it was not to be. That had been my prediction at the start of the keynote to Dan Nenni who was sitting beside me.

Power, Predictions and Pills: Jonathan Koomey, ARM TechCon

ARM TechCon Software and Systems Keynote: Why Ultra-Low Power Computing Will Change Everything

Simon Segars, speaking of the importance of continuing low power initiatives, introduced Dr. Jonathan Koomey, Consulting Professor at Stanford. (First impression, our kind of guy: He wears engineer shoes, not sales shoes!)

Koomey spoke for “Revolutionary change, and that’s not just loose talk….” in energy efficiency for the future. He cited Proteus Digital Health in biomedical, which is testing a new device embedded in a pill with no battery! It uses digestive juices to power the device! You take the pill, and it communicates to report compliance… (Orwellian?) This is just one example of how low power devices will transform medicine by delivering more info about the individual. Imagine sensors which can report pH, temperatures, etc.

Koomey summarized results of a 2011 study on computing energy efficiency he was part of. He stated:

- Energy efficiency of computation has doubled every year and a half (actually 1.6 years) from 1946 (ENIAC) to the present, which is roughly a 100x improvement every decade… think Moore’s Law!

- Definition of energy efficiency: Computations/kWh, based on full load, using measured power data.
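To make the "doubling time to gain per decade" arithmetic concrete, here is a minimal Python sketch. The function name `improvement_factor` is just an illustration and not something from Koomey's study. It assumes a steady exponential trend, which is only an approximation of the real data.

```python
def improvement_factor(doubling_time_years: float, span_years: float) -> float:
    """Efficiency gain over span_years, assuming efficiency doubles
    every doubling_time_years at a steady exponential rate."""
    if doubling_time_years <= 0:
        raise ValueError("doubling time must be positive")
    return 2.0 ** (span_years / doubling_time_years)


# Gain per decade for the two doubling times mentioned in the talk.
gain_at_1_5 = improvement_factor(1.5, 10)  # "every year and a half"
gain_at_1_6 = improvement_factor(1.6, 10)  # "actually 1.6 years"

print(f"1.5-year doubling: about {gain_at_1_5:.0f}x per decade")
print(f"1.6-year doubling: about {gain_at_1_6:.0f}x per decade")
```

Under this simple model the 1.5-year doubling comes out at roughly 100x per decade, while a 1.6-year doubling comes out somewhat lower, so the "100x" figure is best read as a round number.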

My personal note: A real weakness in the usefulness of this study is that it does not reflect idle modes, which are of critical importance in really understanding the issues.

Koomey delivered several truisms this audience well understands, such as: The things we did to improve performance also benefitted power efficiency. Laptops are taking over desktop market share. Revolution is driven by a confluence of trends: computing, communications, sensors, controls. Importance of idle modes.

He presented interesting data on cell phone efficiency (minutes of talk time/Wh) vs year of introduction; on this graph, the efficiency increase he is seeing is 8% per year.

Are we burning up our planet? Well, we are using more devices worldwide, but they are more efficient. Cloud is more efficient than data centers. Data centers use 2% of our power in the US and around 1.5% worldwide. Overall, electronics is maybe 5% of our worldwide energy usage.

Power management trends: from process technology, to multicore, to software optimization, to “approximate computing”… it’s not just a silicon problem, it’s a system challenge.

The most fascinating topic: Energy harvesting, from ambient sources….and the future….

• Intelligent trash cans, self-solar-powered, compact trash, send a text when full, for fewer truck trips! Economic and environmental home run!

• Wireless no-battery sensors from Univ of Washington scavenge power from radio and tv signals in metro areas….

• MicroMote, generic sensing platform with ARM core, at University of Michigan. Ultra low power: 40 µW in active mode, an astounding 11 nW in sleep mode. Devices like this could someday monitor growth of a tumour.

• Streetline Networks, promoting sensors in parking spaces, and a mesh grid, in LA. Motes use only 400uW, with Mote Technology from Dust Networks. Think about variable messages signs or subscriptions to find parking spaces.
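The gap between active and sleep power is what makes duty cycling so effective. As a rough illustration, the Python sketch below computes average power for a device that is active only a fraction of the time, using the MicroMote figures quoted above (40 µW active, 11 nW sleep). The duty-cycle values are assumptions chosen purely for illustration, not numbers from the talk, and the model ignores wake-up and radio costs.

```python
def average_power_w(active_w: float, sleep_w: float, duty_cycle: float) -> float:
    """Time-averaged power for a device that is active for the fraction
    duty_cycle of the time and asleep for the rest."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_w + (1.0 - duty_cycle) * sleep_w


ACTIVE_W = 40e-6  # 40 uW active mode, as quoted for MicroMote
SLEEP_W = 11e-9   # 11 nW sleep mode, as quoted for MicroMote

# Hypothetical duty cycles: active 0.01%, 0.1% and 1% of the time.
for duty in (0.0001, 0.001, 0.01):
    avg = average_power_w(ACTIVE_W, SLEEP_W, duty)
    print(f"duty cycle {duty:.2%}: average {avg * 1e9:.1f} nW")
```

The point of the sketch is that once a sensor sleeps almost all the time, its average draw approaches the sleep figure, which is the regime where harvesting ambient energy starts to look plausible.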

Deeper implications Koomey summarized included:

• Move bits not atoms (things)

• Customized data collection (nanodata not big data)

• Ever more precise control of processes

• Real time analysis

• Enabling “the internet of things”

How far can these energy efficiency trends continue?

- One physicist calculated a theoretical limit…this graph takes us to 2041, three decades!!!

- That is if we are clever…our horizon includes exciting applications…

- Vision of a world with low power, cheap devices distributed everywhere..for medical, for transportation, for services, for manufacturing…..

And as Koomey commented, “Our community has a high ‘do to talk’ ratio…..”

(Yes, the shoes don’t lie…..)

Shhh…..Researchers at Purdue and the University of New South Wales recently invented a 1 electron computer…

Beneath the Surface lies the first real test

At CES 2011, Steven Sinofsky of Microsoft stepped on the stage and went off the map of proven Windows territory. Announcing that the next version of Windows would support the ARM Architecture, including SoCs from Qualcomm, NVIDIA, and TI, set a new course for Microsoft.

But Windows, being the battleship-sized behemoth that it is, would take nearly two years to turn onto the new heading. Getting the entire fleet of PCs, smartphones, and tablets sailing together in formation would prove no easy task. Along the way, Microsoft provided hints of what was coming. A unified user interface, leveraging social-savvy tiles previewed on the smartphone, would usher in a new interface and steer clear of patent problems Android experienced.

In parallel during those two years, ARM has become the heart of almost all smartphones and tablets. Yes, there is a Motorola Droid Razr i running around Europe, a LAVA XOLO X900 in India, and probably a few more Intel smartphone designs waiting in the wings. But there isn’t direct, line-up-for-hours demand clamoring for Intel-based phones yet, and there hasn’t been an apps crisis since most Android apps use the Dalvik VM.

On another parallel path, ARM has been preparing a push into servers with a new 64-bit architecture (via our Paul McLellan), anchored by the Cortex-A57 processor, and the addition of the largest processor partner to date in AMD. For the Linux community running server farms, this is welcome news, albeit the first hardware is still at least a full year away. But again, no lines of rabid consumers ready to ditch Intel Architecture servers exist – the buzz is confined to IT.

What the average consumer will see, right now, is the Microsoft Surface. Just turn on the TV and notice the Surface logo in the live weather shots of Times Square, or one of several very hip Windows 8 commercials in a $1B campaign. In the first juxtaposition of ARM tablet and Intel PC processing cores, Surface comes in two versions presenting a direct choice of price, weight, battery life, and apps support.

But first comes the barking. The same IT departments looking forward to ARM-based servers find themselves conflicted over Windows 8. Their rationale is the slick new tile-happy interface is too radical a departure from Windows 7. It’s going to require a lot of retraining. That will be really expensive, and consume too many support resources. Therefore, we’re not moving to Windows 8. (Stamping of feet and holding of breath goes here.)

That will last until the CFO walks in with a Windows 8 tablet, from one of several suppliers including Dell and Samsung. The same forces of bring-your-own-device, or BYOD, that created an initial backlash against Microsoft will now start to work in its favor. A few Windows 8 devices in the right places will make headway.

The real test is that consumers are showing up in retail stores hoping to get their hands on a Surface for a closer look, and what they are seeing is ARM and Windows RT, which was cleverly spotted a head start. That same slick tile-happy interface from the commercials is right there, but under the Surface are all-new apps. For consumers coming from smartphones or tablets, an app store isn’t a foreign idea. RT apps will come as more developers get through certification and into the Windows store, and in the short term some may be missing.

Studies like this one from Google show most tablets are used most of the time on the couch or in bed, as a social or multimedia or gaming or reading screen, and there aren’t a whole lot of apps in play. Nobody has 700,000 apps installed. This “my app store is bigger than yours” is nonsense after the initial critical mass level is reached, and Facebook and other biggies are supported.

Now entering November 2012, we have the first test where ARM and Intel sit truly side-by-side for consumer hands to pick up and try, featuring the same look-and-feel but a different user experience. Will consumers choose the Windows 8 version of Surface and other tablets, to satisfy their reflexive impulse to install legacy Windows apps? Or will they embrace the ARM platform and Windows RT, grab the essential apps, and enjoy a lighter, thinner, and less-oft-charged experience with a lower price tag?

So you can infer which side I’m on, here’s my Sammy Hagar reference:

“If I’m wrong, hey, then I’ll pay for it.

If I’m right, yeah, you’re gonna DEAL WITH IT.”

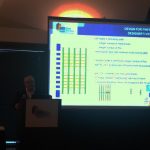

IBM Tapes Out 14nm ARM Processor on Cadence Flow

An announcement at the ARM conference was of a joint project to tape out an ARM Cortex-M0 in IBM’s 14nm FinFET process. In fact they taped out 3 different versions of the chip using different routing architectures to see the impact on yield.

This was the first 14nm ARM tapeout, it seems. I’m sure Intel has built plenty of 14nm test chips but it seems safe to assume none of them included ARM processors.

Cadence went into a lot of detail about how the flows need to be changed, especially to cope with double patterning and to do transistor extraction of the FinFETs themselves.

For me the most interesting part was that IBM actually revealed a certain amount about their process. The FinFETs need to be manufactured in a complete grid and then cut to separate them into individual transistors, some of which might not actually be used. The photograph above shows the FinFETs in yellow running horizontally with the vertical metal to interconnect them into standard cells (I would have a decent picture except that the meeting had the oddly inconsistent policy that we couldn’t have a copy of the presentation but we could take pictures of the screen).

Here are some more details of the processes at IBM:

| | 32nm | 28nm | 20nm | 14nm |
|---|:---:|:---:|:---:|:---:|
| Architecture | Planar | Planar | Planar | FinFET |
| Contacted poly pitch | 126nm | 114nm | 90nm | 80nm |
| Metal pitch | 100nm | 90nm | 64nm | 64nm |
| Local interconnect | No | No | Yes | Yes |
| Self-aligned contact | No | No | No | No |
| Strain engineering | Yes | Yes | Yes | Yes |
| Double patterning | No | No | Yes | Yes |

Just like the GLOBALFOUNDRIES 14nm announcement, this has a metal pitch unchanged from 20nm. Some cells might be smaller but in general I think this means that designs won’t really be any smaller using this technology. Lower power, faster, better leakage. But not smaller.
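One crude way to put a number on "not smaller" is to multiply contacted poly pitch by metal pitch and compare nodes. The Python sketch below does that with the pitches from the table above. Treating that product as a proxy for cell area is my own simplifying assumption (real cell area also depends on track height, fin count and design rules), and the names `NODES`, `area_proxy` and `shrink` are purely illustrative.

```python
# (contacted poly pitch in nm, metal pitch in nm), taken from the IBM table.
NODES = {
    "32nm": (126, 100),
    "28nm": (114, 90),
    "20nm": (90, 64),
    "14nm": (80, 64),
}


def area_proxy(node: str) -> int:
    """Contacted poly pitch times metal pitch, in nm^2.
    A rough stand-in for relative cell area, not a real area figure."""
    poly_pitch, metal_pitch = NODES[node]
    return poly_pitch * metal_pitch


def shrink(from_node: str, to_node: str) -> float:
    """Ratio of the area proxy after the move to the area proxy before it.
    Values near 1.0 mean almost no shrink."""
    return area_proxy(to_node) / area_proxy(from_node)


for before, after in (("32nm", "28nm"), ("28nm", "20nm"), ("20nm", "14nm")):
    print(f"{before} -> {after}: area proxy scales by {shrink(before, after):.2f}")
```

By this measure the 28nm to 20nm step shrinks the proxy far more than the 20nm to 14nm step, where only the contacted poly pitch changes, which is consistent with the impression that 14nm buys power and speed rather than area.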

In the TSMC keynote earlier, the focus was on PPA (power, performance, area). We used to say power, performance, price. But the big question is how costly these processes will be compared to 28nm which looks set to be a workhorse process for a long time. The IBM/Cadence presentation was the same. Of course it is early in development and no yield optimization has been done but somehow the fact that nobody is bragging about how cheap the technology is and how everyone will want to use it immediately implies that it will not be cheaper. And worse, that designs will not get hundreds of times cheaper after several process generations.