An email update from John Cooley at DeepChip this morning prompted the bloggers here at SemiWiki to continue the discussion and point out incorrect data, so that readers realize what is really happening with our media portals as sources of timely and relevant news and opinion. I respect what John Cooley has done with DeepChip over the decades, but let’s talk about the arcane numbers of online publications a bit.

Schematic Capture and SPICE from Symica

At DAC in June I first blogged about Symica because they offered a Fast SPICE circuit simulator. Today I discovered a free version, so I decided to write up a mini-review for you.

A Brief History of Synopsys

One of the largest software companies in the world, Synopsys is a market and technology leader in the development and sale of electronic design automation (EDA) tools and semiconductor intellectual property (IP). Synopsys is also a strong supporter of local education through the Synopsys Outreach Foundation. Each year in multiple regions, the company conducts science fairs, offering funding, equipment, supplies and training to students and teachers. This fusion of technology and education, designed to spark innovation, served as the catalyst for forming the company.

As a young immigrant to the United States in the late 1970s, Dr. Aart de Geus, Synopsys’ co-founder, chairman and co-CEO, enrolled at Southern Methodist University in Dallas, and became immersed in the school’s electrical engineering program. He soon went from writing programs designed to teach the basics of electrical engineering, to hiring students to do the programming. In the process, he discovered the value of taking a technical idea, creatively building on it, and then motivating other people to do the same. This principle ultimately fueled the creation of Synopsys.

After earning his Ph.D. and gaining a wealth of CAD experience at General Electric, Dr. de Geus and a team of engineers from GE’s Microelectronics Center in Research Triangle Park, N.C. – Bill Krieger, Dave Gregory and Rick Rudell – co-founded synthesis startup Optimal Solutions in 1986.

The following year, the company moved to Mountain View, Calif., became Synopsys (for SYNthesis and OPtimization SYStems), and proceeded to commercialize automated logic synthesis via the company’s flagship Design Compiler tool. Without this foundational technology that transitioned chip design from schematic- to language-based, today’s highly complex designs – and the productivity engineers can now achieve in creating them – would not be possible. The advent of EDA enabled engineers to address scale complexity and systemic complexity simultaneously.

Over the past quarter century, Synopsys has grown from that small, one-product startup to a global leader with more than $1.7 billion in annual revenue in fiscal 2012. Early on, Synopsys established relationships with virtually all of the world’s leading chipmakers, and gained a foothold with its first products. Synopsys’ tools quickly broadened to: front-end design including simulation, timing, power and test; system level design to encompass higher levels of abstraction; and physical implementation to address place and route, extraction and increasing manufacturing awareness.

Synopsys established strategic partnerships with the leading foundries and FPGA providers, acquired some early EDA point tool providers, launched more than two dozen products, and completed its initial public offering (IPO) in 1992. Fewer than 10 years after its founding, Synopsys had achieved a run rate of $250 million.

Synopsys began donating to local communities in 1989 and formalized charitable giving in 1992 under the leadership of employee giving committees. In 1999, the Synopsys Foundation and the Synopsys Outreach Foundation were formed, making science and math education and community support initiatives part of their charter.

In the 2000s, Synopsys introduced an integrated design platform that allows customers to take a design from specification all the way through to silicon fabrication. In all of its development activities, Synopsys works closely with customers to formulate strategies and implement solutions to address the latest semiconductor advances.

Growing smarter

Over the years, the company assembled a team with diverse global backgrounds and many decades of combined semiconductor industry know-how. In 2012, Dr. Chi-Foon Chan (Synopsys’ president and chief operating officer since 1998) expanded his role and joined Dr. de Geus as co-CEO. Acknowledging the effectiveness of their longtime partnership and the breadth and complexity of Synopsys’ business, Dr. Chan will help nurture the company through its next phase of growth.

Both in-house technology innovation and strategic acquisitions have driven Synopsys’ success as the company extended beyond its core business to address emerging areas of great importance to its customers. For example, early in the 1990s, Synopsys saw the need to integrate EDA and IP. Today, IP libraries are critically important to design efforts at the chip and system level. Synopsys’ concentration on the IP space has made it the leading supplier of interface IP – essential to today’s many communications standards – and the no. 2 supplier of commercial IP overall.

Synopsys has also been highly effective in creating a successful services offering. The company’s design consultants, focused on understanding customers’ evolving technology challenges, utilize a broad portfolio of consulting and design services to help chip developers accelerate innovation and success for their design projects.

Complementary acquisitions

Synopsys has been an active acquirer of companies to round out and enhance its product portfolio. When making acquisitions, Synopsys focuses on technology that complements organic R&D growth in its core offering or broadens its capabilities beyond traditional EDA.

Synopsys executed two of the largest acquisitions in EDA history: Avant!, with its advanced implementation tools, and Magma Design Automation, whose core EDA products were highly complementary to Synopsys’ portfolio.

A number of acquisitions helped Synopsys build out its IP portfolio over the years, while also recognizing the growing importance of high-level synthesis and embedded system-level design. Early on, Synopsys also saw the trend toward advanced prototyping technology, including virtual prototyping and FPGA-based prototyping for hardware/software co-design.

As challenges associated with analog/mixed-signal (AMS) design escalated, Synopsys enhanced its portfolio with complementary technology to address various AMS design aspects.

Mask synthesis and data prep became important additions to Synopsys’ manufacturing tool offering, as did the development and support of products for the design and analysis of high-performance, cost-effective optical systems.

Each technology advancement or acquisition has built upon the developments that preceded it, adding a new layer of value to the company. Dr. de Geus has a philosophy: If something already has value, how can it be moved to the next level? It was this approach that essentially informed the discovery of how fostering talent, technology and education can yield exciting results that drive ongoing innovation. One can only imagine what future years will hold.

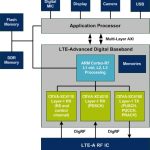

When ARM and CEVA team-up for “Designing a Multi-core LTE-A Modem”

ARM and CEVA have released a white paper addressing one of the hottest topics of the day: LTE-Advanced modem architecture. This exhaustive paper, written by David Maidment (Mobile Segment Manager, ARM), Chris Turner (Senior Product Manager, ARM) and Eyal Bergman (VP Product Marketing, Baseband & Connectivity, CEVA Inc.), is titled “Designing an efficient LTE-Advanced modem architecture with ARM® Cortex™-R7 MPCore™ and CEVA XC4000 processors”. The title is self-explanatory, but a look at the high-level architecture allows us to identify which core does which task. The Cortex-R7 handles L1 control and L2 and L3 processing, while no fewer than three CEVA-XC4000 DSP cores are in charge of the Layer-1 RX (XC4110 and XC4210) and TX (XC4100 on the right).

This LTE-Advanced digital modem architecture is described precisely in the white paper, as we can see in this more detailed description showing the AMBA AXI buses and the hardened FFT, Turbo Decoder, XC-DMA, Cipher RoHC, L1 Interrupt Controller, IFFT and DDR Controller functions.

In such a complex, multi-core design, the memory utilization and assignment is a key architecture decision. The table below summarizes both the size and the location (on chip or off chip) of the various memory blocks.

Let’s take a minute to look at the LTE penetration forecast for the next 5 years (in red) compared with smartphone shipments (in blue). The LTE adoption growth rate is impressive, and the “feeling” can be confirmed by facts (extracted from the white paper):

“The LTE (Long-Term Evolution) standard was first ratified by 3GPP in Release-8 at December 2008 and was conceived to provide wireless broadband access using an entirely packet based protocol and was the basis for the first wave of LTE equipment. LTE has now been adopted by over 347 carriers in 104 countries (Ref GSA) including such territories as USA, Japan, Korea and China to name but a few, making it the fastest adopted wireless technology in history”

And, because SW development can no longer be separated from HW development, the SW mapping is completely described in the article, as we can see in the picture below:

You will find a lot more information in the complete white paper, which is available here.

Eric Esteve from IPNEST

Happy Holidays

At this time of year, companies usually get their salespeople to submit the names and addresses of all their customers. They then get an expensive card printed and mail it out. What the recipient does with it is anyone’s guess, from throwing it straight in the bin to using it to decorate the office.

Atrenta decided to do something different this year, and professionally make a holiday video to wish everyone Happy Holidays. But they have customers in so many different countries, that it seemed very boring to do it only in English. So they didn’t. Enjoy (1 minute long).

Oh, and let me add my own greetings. Happy Holidays. Bonnes fêtes. 圣诞节快乐。OK, that’s all the languages I can muster. Oh, I do know it in Hawaiian too, having spent Christmas there a couple of times years ago: Mele Kalikimaka.

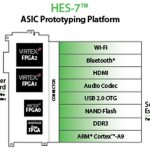

Zynq out of the box, in FPGA-based prototyping

Roaming around the hall at ARM TechCon 2012 left me with eight things of note, but one of the larger ideas showing up everywhere is the Xilinx Zynq. Designers are enthralled with the idea of a dual-core ARM Cortex-A9 closely coupled with programmable logic.


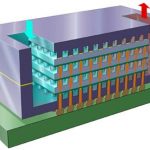

3D Architectures for Semiconductor Integration and Packaging

There is obviously a lot going on in 3D IC these days. And I don’t mean at the micro level of FinFETs which is also a way of going vertical. I mean through-silicon-via (TSV) based approaches for either stacking die or putting them on an interposer. Increasingly the question is no longer if this technology will be viable (there are already production designs) but when it will go mainstream.

The most informative conference I’ve attended on this is held in December. This year it is in Sofitel in Redwood City (down near the Oracle towers) from December 12-14th at the end of this week. If you are interested in this area at all then you should be attending. I was planning on attending myself (they gave me a press pass) but I’m actually going to be out of town.

This meeting is not focused on EDA, although it does cover that. It covers the entire ecosystem and all sorts of issues around who does what on the supply chain, how you keep wafers from breaking once you’ve thinned them (glue them onto something else) and so on. It gives the best overview of all the issues of anything I’ve been to.

I won’t try to summarize the whole agenda; you can look at it for yourself here (pdf). But the first morning is all keynotes, all the time, from 8-10.30:

- The Evolution of 3-D ICs: Leaping Ahead of Moore’s Law to Deliver a 6.8B Transistor Device, Liam Madden, Xilinx

- Revolutionary Changes in Memory Technology and the Role of 3-D, Thomas Pawlowski, Micron

- Moore’s Law, Semiconductor Economics, and Other Bellwethers of 3-D IC, Vinod Kariat, Cadence

- Technical Challenges and Progress in an Open Supply Chain Model, David McCann, Global Foundries

Online registration still seems to be open here.

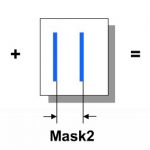

Double Patterning Verification

You can’t have failed to notice that 20nm is coming. There are a huge number of things that are different about 20nm from 28nm, but far and away the biggest is the need for double patterning. You probably know what this is by now, but just in case, here is a quick summary.

Lithography is done using 193nm light. Today we use immersion lithography (so instead of the gap between the wafer and the last lens being an air gap, it is water). Amazingly, when you think of what you learned in high-school physics (Young’s slits, diffraction gratings, never mind anything you might have learned about quantum mechanics), this worked down to 28nm, and we could print the features needed with light whose wavelength is more than 6 times the feature size. But at 20nm it doesn’t work any more.
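For a rough feel of where single exposure runs out, here is a minimal sketch using the Rayleigh resolution estimate, half-pitch = k1 × wavelength / NA. The numerical aperture of 1.35 (typical for water immersion scanners) and the k1 floor of 0.25 are assumed values, not figures taken from the webinar, and node names such as “20nm” do not map directly onto a printed half-pitch.

```python
# Rayleigh estimate of the smallest printable half-pitch:
#   half_pitch = k1 * wavelength / NA
# NA = 1.35 and k1 = 0.25 are assumed typical values, not from the article.

def min_half_pitch_nm(wavelength_nm: float, numerical_aperture: float,
                      k1: float = 0.25) -> float:
    """Smallest half-pitch (nm) a single exposure can resolve."""
    return k1 * wavelength_nm / numerical_aperture


single_exposure = min_half_pitch_nm(193.0, 1.35)

# Splitting the layout across two interleaved masks lets each mask print at
# twice the final pitch, so the combined pattern can ideally reach half the
# single-exposure limit.
double_patterning = single_exposure / 2

print(f"single exposure:   {single_exposure:.1f} nm half-pitch")
print(f"double patterning: {double_patterning:.1f} nm half-pitch")
```

Under these assumptions a single 193nm immersion exposure bottoms out in the mid-30s of nanometres of half-pitch, which is why anything tighter needs the layout split across two masks.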

Double patterning requires two masks. There are variations on the approach but for 20nm (probably not true for future generations) we split the polygons on the mask into two sets (this is known as coloring since that is what graph-theorists call this sort of thing). Each mask goes through a lithography cycle and we end up with photoresist that has been exposed to both masks. The reason we can’t just use one mask is that diffraction effects on that one mask would mean the exposure wouldn’t work. It is a light problem, not a problem of manufacturing what gets exposed.

So the new step is separating the polygons on the masks into two groups that are all double the minimum pitch. If you think of a bus just running parallel signals across the chip, then the odd ones go on one mask and the even on the other. But in more complex cases this may not work. It is simple to think up cases where A has to be on a different mask from B (because they are too close) and B has to be on a different mask from C, but that polygon C also goes close to A so it has to be on a different mask from A. Impossible (or we use triple patterning but that is not economic although we will get there if EUV doesn’t work effectively).
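The coloring step is the classic graph two-coloring problem, so it can be sketched in a few lines. This is a minimal illustration, not how any production decomposition tool works: the function name `assign_masks`, the polygon labels and the conflict lists are all hypothetical, and a real flow derives conflicts from layout geometry and spacing rules.

```python
from collections import deque


def assign_masks(polygons, conflicts):
    """Two-color a conflict graph with breadth-first search.

    polygons:  iterable of polygon names
    conflicts: pairs of polygons too close to share a mask
    Returns {polygon: 0 or 1}, or None if no two-mask split exists.
    """
    neighbors = {p: set() for p in polygons}
    for a, b in conflicts:
        neighbors[a].add(b)
        neighbors[b].add(a)

    mask = {}
    for start in polygons:
        if start in mask:
            continue  # already colored as part of an earlier component
        mask[start] = 0
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for other in neighbors[current]:
                if other not in mask:
                    mask[other] = 1 - mask[current]  # opposite mask
                    queue.append(other)
                elif mask[other] == mask[current]:
                    return None  # odd cycle: two masks are not enough
    return mask


# A bus of parallel wires: each wire conflicts only with its neighbors.
bus_masks = assign_masks(
    ["w0", "w1", "w2", "w3"],
    [("w0", "w1"), ("w1", "w2"), ("w2", "w3")],
)

# The A/B/C case from the text: every pair is too close.
triangle_masks = assign_masks(
    ["A", "B", "C"],
    [("A", "B"), ("B", "C"), ("C", "A")],
)
```

The bus alternates cleanly between the two masks, while the A/B/C triangle returns `None`: any cycle of odd length in the conflict graph means the layout has to change (or a third mask is needed).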

But there is another problem. The two masks are not self-aligning (there are self-aligning approaches which will be needed in the future, but for now it looks like most double patterning will be the simpler, aka cheaper, approach). So sidewall capacitance between two adjacent polygons will be much more variable than before depending on whether the masks are at the maximum allowed misalignment in one direction, versus the other direction, versus ‘typical’ meaning perfectly aligned. So a verification methodology that is color aware is needed to get useful parasitic information that can be used to close the design (timing, power, signal integrity, reliability and so on are all affected by this variability).

Synopsys has been working with TSMC, and a webinar presented by both companies went into the details. The presenters are Anderson Chiu of TSMC and Beifang Qiu from Synopsys. Anderson is the program owner of the TSMC 20nm reference flow (I bet he gets lots of calls from EDA marketing folks). Beifang is the senior R&D manager for StarRC extraction.

Since both presenters are Chinese the webinar was presented not only in English but also in traditional and simplified Mandarin (the difference is in the slides not the language). I’m assuming that all 3 versions of the webinar will be online soon. The Synopsys home page for the webinar still links back to the registration. And if you are interested in that kind of thing, Synopsys and TSMC have a joint press release about the methodology here.

HP Loses Its Autonomy!

HP buys Autonomy for $11B then does an $8.8B writedown?!?!?! Was HP swindled by Autonomy? As a long-time HP customer I’m outraged by this behavior. Not just the overpriced acquisition but the behavior of HP as a whole! Even today 4 of the 6 laptops in my house are HP as are my printers. How am I supposed to buy HP products with a straight face after this?

Having friends at Oracle, HP, and Autonomy, this virtual reality show interests me and is a continuing humorous email thread amongst friends. Humorous, as in we can’t believe this is happening!

The cast of characters all have one thing in common, they all should have known better:

Raymond J. Lane Executive Chairman since 2011

Mr. Lane has served as HP’s Executive Chairman since September 2011. Previously, Mr. Lane served as HP’s non-executive Chairman from November 2010 to September 2011. Mr. Lane has served as Managing Partner of Kleiner Perkins Caufield & Byers, a private equity firm, since 2000. Prior to joining Kleiner Perkins, Mr. Lane was President and Chief Operating Officer and a director of Oracle Corporation, a software company. Before joining Oracle in 1992, Mr. Lane was a senior partner of Booz Allen Hamilton, a consulting company. Prior to Booz Allen Hamilton, Mr. Lane served as a division vice president with Electronic Data Systems Corporation, an IT services company that HP acquired in August 2008. Mr. Lane also is a director of Quest Software, Inc. and several private companies.

Given Ray’s depth of experience how does he get duped into acquiring Autonomy for an inflated price? The same could be said for the entire HP board who in my opinion should be held accountable for this $11B debacle.

The most interesting character is Frank Quattrone, who represented Autonomy in this deal. I have written about him before: Frank handled the Synopsys acquisition of Magma.

Investment banker Frank Quattrone, formerly of Credit Suisse First Boston (CSFB), took dozens of technology companies public including Netscape, Cisco, Amazon.com, and coincidentally Magma Design Automation. Unfortunately CSFB got on the wrong side of the SEC by using supposedly neutral CSFB equity research analysts to promote technology stocks in concert with the CSFB Technology Group headed by Frank Quattrone. Frank was also prosecuted personally for interfering with a government probe.

To make a long story short: Frank Quattrone went to trial twice: the first ended in a hung jury in 2003 and the second resulted in a conviction for obstruction of justice and witness tampering in 2004. Frank was sentenced to 1.5 years in prison before an appeals court reversed the conviction. Prosecutors agreed to drop the complaint a year later. Frank didn’t pay a fine, serve time in prison, nor did he admit wrongdoing! Talk about a clean getaway! Quattrone is now head of the merchant banking firm Qatalyst Partners which is staffed with cronies and former CSFB people.

Another interesting note, Quattrone took Netscape public, Marc Andreessen co-founded Netscape, and Marc Andreessen is on the board of HP. The plot thickens!

Here’s a short version of what happened based on what I have read and heard:

Simply stated, Autonomy overreported its product revenues by counting services revenue as product sales at the time of the sale rather than at the time of the service. For example, if you sell a 5-year service agreement you are supposed to recognize that revenue over the five-year period rather than all 5 years up front. This is revenue recognition 101; it really is the oldest trick in the M&A book.
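To put numbers on the service-agreement example, here is a minimal sketch. The $5M contract value is hypothetical (it is not a figure from Autonomy’s books), and straight-line recognition over the term is a simplifying assumption.

```python
def recognized_by_year(contract_value, term_years, upfront=False):
    """Revenue recognized in each year of a service agreement.

    upfront=False spreads the value evenly over the term (straight-line,
    a simplifying assumption); upfront=True books it all in year one.
    """
    if upfront:
        return [contract_value] + [0.0] * (term_years - 1)
    return [contract_value / term_years] * term_years


# Hypothetical 5-year, $5M service agreement.
ratable = recognized_by_year(5_000_000.0, 5)
booked_upfront = recognized_by_year(5_000_000.0, 5, upfront=True)

# How much year-one revenue is inflated by booking everything at signing.
year_one_overstatement = booked_upfront[0] - ratable[0]

print("ratable:", ratable)
print("upfront:", booked_upfront)
print("year-one overstatement:", year_one_overstatement)
```

Both schedules add up to the same total over five years; the difference is entirely in timing, which is exactly what makes first-year revenue (and growth) look better than it is.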

Shame on Autonomy CEO Dr. Mike Lynch for allowing it, double shame on the auditors hired by HP for not catching it, but for me the blame sits squarely on the HP board of directors for not doing their jobs and protecting the stakeholders of HP. Just my opinion of course!

Apple Will NOT Manufacture SoCs at Intel

The internet is a funny place where rumors are true and truths are rumors. The latest one has Apple using Intel as a foundry. This is fuel for the rivalry between SemiWiki blogger Ed McKernan and me. Ed says Apple will use Intel, I say Apple will use TSMC, we have a very expensive dinner riding on this one.

TSMC falls on possible competition from Intel

Taiwan Semiconductor Manufacturing Co. (TSMC), the world’s largest made-to-order chip-maker, fell yesterday on reports it may compete with Intel for Apple orders...

Yes, TSM stock took a hit based on a rumor so we have motive (Apple consumes more than 200,000 12-inch wafers per year). The Intel rumors started last year with Intel saying they would “like” to manufacture the Apple A4 and A5 SoCs. Clearly that did not happen nor will it. Moving an SoC amongst foundries is serious business, serious technical and political business.

Samsung and Intel manufacturing processes could not be farther from compatible. I work with Sagantec, the leading process migration company, and can tell you a migration between Intel and Samsung is not technically feasible. It would be a complete re-design. Apple uses Samsung IP. Apple uses commercial EDA tools certified by Samsung. The ROI is not there for Apple to move the Apple Ax SoC between fabs.

Let’s not forget how Apple became a fabless semiconductor company. They first started with Samsung as an ASIC customer. Apple did the initial RTL design and tossed it over the wall to Samsung for IP integration and physical implementation. Apple then acquired P.A. Semi (Palo Alto Semiconductor) for $278M in 2008 which brought serious SoC design experience in-house. Do a quick search on LinkedIn and you will see quite a few PA Semi people are still at Apple. Apple has also made other acquisitions and investments in the fabless semiconductor ecosystem.

Politically it is a problem as well. Apple’s experience with Samsung, where a vendor (Samsung) directly competes with its largest foundry customer (Apple), will definitely change the way we all do business. Qualcomm is in the same boat with Samsung. Samsung was the largest Snapdragon SoC customer and now the Samsung Exynos SoC is replacing Snapdragon. I was at ISSCC when Samsung first presented a paper on Exynos and saw the question line queue up quickly with competing SoC engineers from QCOM, BCOM, TI, Apple, etc. Few if any questions were directly answered, as is the Samsung way.

For the record, I have no problem with Samsung competing with customers by providing low cost alternatives. It is good for consumers. It is good for the consumer electronics industry. It is good for the semiconductor ecosystem. It will force Apple and Qualcomm to innovate and differentiate at the different pricing levels. I also have no problem with the resulting legal actions as it will force Samsung to innovate and differentiate rather than replicate. It’s all about the customer experience, better products at affordable price points, that is what business is all about.

Back to the Apple “TSMC versus Intel” debate:

Can Intel be successful in the foundry business? Of course they can but it will not happen anytime soon. It took Samsung 10+ years to get to the number 4 spot. Today Intel Foundry uses the ASIC business model like Samsung did in the early days. Customers throw RTL designs over the Intel wall for physical implementation. This helps Intel learn the SoC foundry business and it protects Intel process secrets. Moving forward Intel will have to develop a fabless semiconductor ecosystem (exposing process secrets) and forge EDA and IP partnerships with the likes of ARM.

Intel will also have to avoid the competing-with-customers conundrum. The Intel Ultrabooks are a blatant copy of MacBooks. The Intel Atom will someday compete with ARM, and don’t be surprised if Intel comes out with an SoC of their own. Sounds a bit like Samsung, right? Deja vu all over again. TSMC on the other hand is a pure-play foundry and does not compete with customers.

My bet is: moving forward Apple will use Samsung for 28nm (iPhone 5s) and TSMC for 20nm (iPhone 6). Intel certainly has a shot at 14nm and 10nm but never ever count out TSMC. If you want to bet a lunch on Apple manufacturing at Samsung or Intel for 20nm post it in the comment section. I will cover all lunch bets against TSMC.

Full disclosure: I can eat my weight in sushi!