Apache Design Solutions was founded in 2001 by Andrew Yang and three researchers from HP Labs (Norman Chang, Shen Lin, Weize Xie). They realized that engineers striving to meet the goal of increased device miniaturization, as defined by Moore’s Law, would eventually hit stumbling blocks. The founding team believed that success would ultimately depend on how well chip designers could manage the power consumption, power delivery and power density of their designs. They also believed that how well engineers navigated the fragmented, silo-based electronic design ecosystem would determine their ability to achieve competitive differentiation and cost reduction for their end products. The team recognized that engineers use various architectural and circuit techniques, including reducing the supply voltage, to control the power consumed by smartphones, tablets and other hand-held devices. But these techniques made circuits more vulnerable to fluctuations and anomalies, whether introduced during manufacturing or during operation.

Before the release of Apache’s first flagship product, RedHawk™, in 2003, chip designers relied on approximations and ‘DC’ (or static) analysis methods that are insufficient for predicting fluctuations arising from the interaction of the chip with its package and board. Apache’s RedHawk provided the industry with its first ‘dynamic’ power noise simulation technology, allowing chip designers to simulate their entire design, along with the package and board, and predict its operation before manufacturing the parts. RedHawk introduced the industry to several key technologies that have become increasingly relevant and are still unmatched over ten years later. These technologies include: (a) extraction of inductance for on-chip power/ground interconnects, in addition to their resistive and capacitive components, (b) linear model creation of on-chip MOSFET devices through the use of Apache Power Models, (c) automatic generation of on-chip vectors using a VectorLess™ approach, and (d) simultaneous analysis of multiple voltage domains, handling billion+ node matrices and considering various operating modes on the chip such as power up and power down. In its debut year, RedHawk was presented with EDN Magazine’s Innovation of the Year Award (2003).

RedHawk’s first-in-class technologies, built on best-in-class extraction and solver engines, have led to its adoption as the ‘sign-off’ solution of choice by electronic system designers around the world for power noise simulation and design optimization. The release of RedHawk was followed by the introduction of Totem™, which meets the distinct needs of analog, IP, memory, and custom circuit design engineers for power noise and reliability analysis.

Figure 1: ANSYS-Apache products / company timelines.

The introduction of the Chip Power Model (CPM™) technology and the Sentinel™ product family in 2006 were seminal events in Apache’s history. For the first time, chip designers could create compact SPICE-compatible models of their chips that preserved the time and frequency domain electrical characteristics of the chip layout. This model soon became the ‘currency’ for the exchange of power data, enabling chip-aware package and system analysis. For its industry contribution, Apache’s CPM was presented with EDN Magazine’s Innovation of the Year Award (2006).

Figure 2: The growing gap between power consumption in electronic systems and the maximum allowed power budget.

The success of smart hand-held devices depended on how efficiently designers could squeeze increasing functionality into much smaller form factors while still meeting the end device’s power budget. Apache’s acquisition of Sequence Design in 2009, and the introduction of the PowerArtist™ platform, addressed chip designers’ growing need to simulate designs very early in the design phase, when architectural definitions are created using register-transfer level (RTL) descriptions. PowerArtist enables accurate and predictive RTL-based power analysis and helps identify power bugs and cases of wasted power consumption in the design. This simulation-driven design-for-power methodology allows engineers to address the growing gap between device power consumption and the maximum allowed power in end electronic systems.

Figure 3: Increasing divergence between current flowing in wires versus their ability to handle such current levels.

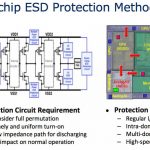

Increasing functionality requirements in smaller form factors have accelerated the adoption and use of advanced technology nodes such as 28-nanometer (nm) and below. The logic operations performed in a chip result in a flow of current in various interconnects on the chip. But the wires and vias fabricated using advanced technology nodes cannot sustain increased current levels, and are at risk of field failures. Additionally, MOS devices and on-chip interconnects are increasingly susceptible to failures from the high levels of current flow that result from an electrostatic discharge (ESD) event. To address this growing design challenge, Apache introduced PathFinder™, the industry’s first full-chip level, package-aware ESD integrity simulation and analysis platform. PathFinder enables designers to predict and address ESD-induced failures that they would otherwise not be able to identify until very late in the product design cycle. Apache’s PathFinder was also presented with EDN Magazine’s Innovation of the Year Award (2010).

Figure 4: The ANSYS-Apache simulation ecosystem brings semiconductor foundries, IP providers, SoC design houses, package vendors, and system integrators together.

Apache’s innovative product platforms solve specific analysis needs and enable a simulation environment that brings together disparate design teams such as automotive or communications system companies and their IC suppliers, or IC design firms and their foundry or ASIC manufacturing partners.

Apache’s revenue increased 3X from 2006 to 2010, underscoring the strong adoption of its products and technologies by semiconductor and system design companies worldwide. Apache’s achievements were recognized with Deloitte’s Technology Fast 50 Award in 2008, for being among the top 15 fastest-growing software and information technology companies in Silicon Valley. The following year, Apache was again honored with Deloitte’s 2009 Technology Fast 500 Award, as one of the fastest-growing technology companies in North America. Apache’s R&D team focuses on consistently delivering first-in-class technologies and best-in-class engines to meet the capacity, performance and accuracy requirements of its customers, which include all of the top twenty semiconductor companies.

Apache filed for an initial public offering under the symbol APAD in March 2011. On June 30, 2011, ANSYS signed a definitive agreement to acquire Apache, and on August 1, 2011, Apache Design, Inc. became a wholly owned subsidiary of ANSYS, Inc.

ANSYS’ simulation technologies address complex multi-physics challenges and enable simulation-driven product development. As semiconductor devices pervade every aspect of our lives, whether in cars, smartphones or smart meters, their impact on the end systems (and vice versa) is becoming a key design challenge for customers. The combination of Apache’s chip-level power, thermal, signal and EMI modeling solutions with ANSYS’ package and system electromagnetic, thermal and mechanical simulation platforms enables faster convergence for the next generation of low-power, energy-efficient designs. Together, the joint team solves design challenges such as those associated with high-speed signal transmission, stacked-die design integration, and reliability validation of automotive and mission-critical electronic systems. ANSYS-Apache remains committed to providing consistent customer support and delivering continuous technology innovation in all our products and platforms.